How to find the best hyperparameters for your machine learning model

Victor Isaac Oshimua

Posted on September 3, 2023

Manually finding the best hyperparameters to improve your machine learning (ML) model takes a lot of time and effort. This is the kind of task you should automate to increase your productivity as a data scientist or ML engineer.

Having faced this problem myself, I was inspired to build a solution called HyperTune.

HyperTune is a Python package designed to assist data practitioners in finding the optimal hyperparameters for their pretrained ML models.

HyperTune uses scikit-learn's GridSearchCV to identify the best hyperparameters for a given model, then retrains the model with these hyperparameters.

Before delving into the workings of HyperTune, let's first grasp some key concepts.

Prerequisites

- You should be familiar with machine learning concepts, i.e., you can build a machine learning model.

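- Install HyperTune by running the following command. Because the command uses --no-deps, pip does not install any dependencies, so make sure scikit-learn is already installed: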

python3 -m pip install --index-url https://test.pypi.org/simple/ --no-deps hypertune

What are hyperparameters?

Hyperparameters are settings that control the machine learning training process. Unlike model parameters, which are learned from the data, they are set before training by data scientists or other data practitioners, and they play a crucial role in optimising the performance of ML models.
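To make the distinction concrete, here is a minimal sketch using scikit-learn's Ridge regressor on synthetic data. The model and the data are assumptions chosen for illustration and are not part of HyperTune. The value of alpha is a hyperparameter you pick up front, while the coefficients are learned during fit().

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge

# Synthetic regression data, used only for illustration
X, y = make_regression(n_samples=100, n_features=3, noise=5.0, random_state=0)

# alpha is a hyperparameter: you choose it before training starts
model = Ridge(alpha=1.0)
hyperparameters = model.get_params()

# coef_ holds learned parameters: it only exists after fit() has run
model.fit(X, y)
learned_coefficients = model.coef_

print("Hyperparameter alpha:", hyperparameters["alpha"])
print("Number of learned coefficients:", learned_coefficients.shape[0])
```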

What is cross-validation?

Cross-validation is a model evaluation technique in which the available data is divided into multiple folds (subsets). In each round, one fold is held out as the validation set and the model is trained on the remaining folds. The process is repeated until every fold has served as the validation set once.

After multiple iterations, the average performance of the model across these validations is calculated. This helps assess how well the model generalises to unseen data and provides a more robust estimate of its performance than a single train-test split. Common forms of cross-validation include k-fold cross-validation and stratified k-fold cross-validation.
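The procedure described above can be sketched with scikit-learn's KFold and cross_val_score. The synthetic dataset, the choice of five folds, and the model settings are assumptions made for this illustration.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import KFold, cross_val_score

# Synthetic regression data, used only for illustration
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

model = GradientBoostingRegressor(n_estimators=50, random_state=0)

# Split the data into 5 folds; each fold is the validation set exactly once
cv = KFold(n_splits=5, shuffle=True, random_state=0)

# One R-squared score per fold
fold_scores = cross_val_score(model, X, y, cv=cv, scoring="r2")
mean_score = fold_scores.mean()

print("Score per fold:", fold_scores)
print("Average score:", mean_score)
```

The average is the number you would compare between candidate models, since it is less sensitive to one lucky or unlucky split.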

What is GridSearchCV?

GridSearchCV is a scikit-learn class that searches for the best hyperparameters over a predefined grid of values, using cross-validation to score each candidate. It systematically evaluates the model's performance with every combination of hyperparameters in the grid.

In essence, GridSearchCV trains multiple models, each using a different combination of hyperparameter values from the grid. For each combination, it computes the model's cross-validated performance using a defined evaluation metric. After evaluating all combinations, GridSearchCV reports the best-scoring combination and, by default, refits the model with it on the full training data. This automates the tedious task of manual tuning and finds the best configuration among the values in the grid, although not necessarily the best one overall.
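As a minimal sketch of that process, the snippet below runs scikit-learn's GridSearchCV directly on synthetic data. The small grid, the three folds, and the dataset are assumptions chosen to keep the example fast.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic regression data, used only for illustration
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# A deliberately small grid: 2 x 2 = 4 combinations
small_grid = {"n_estimators": [25, 50], "max_depth": [2, 3]}

search = GridSearchCV(
    estimator=GradientBoostingRegressor(random_state=0),
    param_grid=small_grid,
    scoring="r2",  # evaluation metric used to rank the combinations
    cv=3,          # 3-fold cross-validation for each combination
)
search.fit(X, y)

best_params = search.best_params_    # best combination found in the grid
best_model = search.best_estimator_  # model refitted with that combination

print("Best parameters:", best_params)
print("Best cross-validated R-squared:", search.best_score_)
```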

Now that you understand the concepts behind HyperTune, let's explore how it can help you discover the best hyperparameters for your model.

Here's the scenario: you're working on a machine learning project with the goal of building a model that predicts house prices. To make sure the model is ready for production and predicts as accurately as possible, you need to train it with the best set of hyperparameters you can find.

To build this solution, follow these steps:

Step 1: Import necessary libraries

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from hypertune.tune import tune_hyperparameters

Step 2: Load and prepare training data

# Sample data for regression. load_boston was removed in scikit-learn 1.2,

# so the California housing dataset is used here instead.

data = fetch_california_housing()

X, y = data.data, data.target

# Hold out 20% of the data for the final evaluation

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Step 3: Define set of hyperparameters to tune

# Define the hyperparameter grid to search

param_grid = {

'n_estimators': [50, 100, 150],

'learning_rate': [0.01, 0.1, 0.2],

'max_depth': [3, 4, 5],

}
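Before running the search, it helps to know how much work this grid implies. The sketch below counts the combinations with scikit-learn's ParameterGrid. The fold count of 5 is an assumption based on GridSearchCV's default; HyperTune may use a different value.

```python
from sklearn.model_selection import ParameterGrid

param_grid = {
    "n_estimators": [50, 100, 150],
    "learning_rate": [0.01, 0.1, 0.2],
    "max_depth": [3, 4, 5],
}

# Every combination of the listed values: 3 * 3 * 3
n_combinations = len(ParameterGrid(param_grid))

# Assumed fold count (GridSearchCV's default); each combination is fitted once per fold
cv_folds = 5
n_fits = n_combinations * cv_folds

print("Combinations to evaluate:", n_combinations)
print("Cross-validation fits:", n_fits)
```

Each value you add to a list multiplies the total, so keep the grid focused on the hyperparameters you expect to matter most.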

Step 4: Build model

# Create a GradientBoostingRegressor model

regressor = GradientBoostingRegressor(random_state=42)

Step 5: Use HyperTune to find best hyperparameters

# Use HyperTune to find the best hyperparameters

best_regressor = tune_hyperparameters(regressor, param_grid, X_train, y_train, scoring='r2')
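If you are curious what a helper like this might do, here is a hypothetical sketch built on scikit-learn's GridSearchCV. It is not HyperTune's actual source code: the function name tune_hyperparameters_sketch, its cv parameter, and the synthetic data are assumptions made for illustration, based only on the description that HyperTune runs a grid search and retrains the model with the best hyperparameters.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV


def tune_hyperparameters_sketch(model, param_grid, X, y, scoring=None, cv=5):
    """Hypothetical helper: grid search with cross-validation, then return the refitted best model."""
    search = GridSearchCV(model, param_grid, scoring=scoring, cv=cv, refit=True)
    search.fit(X, y)
    # With refit=True, best_estimator_ is already retrained on all of X and y
    return search.best_estimator_


# Usage on synthetic data with a small grid, to keep the run short
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
small_grid = {"n_estimators": [25, 50], "max_depth": [2, 3]}

best_model = tune_hyperparameters_sketch(
    GradientBoostingRegressor(random_state=0), small_grid, X, y, scoring="r2", cv=3
)
print(best_model.get_params()["n_estimators"], best_model.get_params()["max_depth"])
```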

Step 6: Evaluate the model trained with the best hyperparameters

# Now you can use the best_regressor for predictions

y_pred = best_regressor.predict(X_test)

# Print the tuned model's parameters (get_params() returns every setting, including defaults)

print("Best Model Parameters:")

print(best_regressor.get_params())

# Evaluate the model using your preferred regression metric (e.g., R-squared)

from sklearn.metrics import r2_score

r2 = r2_score(y_test, y_pred)

print(f"R-squared: {r2:.2f}")

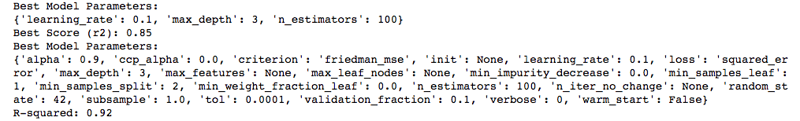

Result:

With the help of HyperTune, you trained your regression model using the best hyperparameters found in the grid, and the R-squared score on the held-out test set showed that the model performed well.
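The parameter printout in Step 6 lists every setting of the model, which can bury the three values you actually tuned. The sketch below shows one way to print only those, and adds mean absolute error as a second metric alongside R-squared. The synthetic data and the fitted model are stand-ins assumed for illustration; in your project you would use the best_regressor returned in Step 5.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data, used only for illustration
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Stand-in for the tuned model returned in Step 5
best_regressor = GradientBoostingRegressor(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42
).fit(X_train, y_train)

# Keep only the hyperparameters that were part of the search grid
tuned_names = ["n_estimators", "learning_rate", "max_depth"]
all_params = best_regressor.get_params()
tuned_params = {name: all_params[name] for name in tuned_names}

# Evaluate on the held-out test set with two complementary metrics
y_pred = best_regressor.predict(X_test)
r2 = r2_score(y_test, y_pred)
mae = mean_absolute_error(y_test, y_pred)

print("Tuned hyperparameters:", tuned_params)
print(f"R-squared: {r2:.2f}")
print(f"Mean absolute error: {mae:.2f}")
```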

Final thoughts

Using HyperTune in this project streamlined the hyperparameter tuning process and produced a well-performing model for predicting house prices. Automated tools like HyperTune can make your machine learning workflow more efficient and help you build more accurate models for real-world applications.

If you have any questions or would like to connect, please feel free to reach out to me via email at victorkingoshimua@gmail.com. You can also connect with me on LinkedIn, where I regularly share content related to topics like this. Thank you for your engagement, and I look forward to connecting with you.
