EnqueueZero Techshack 2019-02

Ju

Posted on January 13, 2019

Original post: https://enqueuezero.com/techshack.weekly/2019-02.html

Kia ora! I am the creator of Enqueue Zero (https://enqueuezero.com), a site that explains code principles to developers, DevOps engineers, and sysadmins. The site has been run by a one-person team since 2018. The `EnqueueZero Techshack` series is a collection of interesting posts I wrote or found on the Internet. Above all, I hope you enjoy them.

To keep me motivated and this website ad-free, I would appreciate your kind donation!

Enjoy reading this week's posts.

Dive into Deep Learning

This is a nice book for keeping up with the trend of Deep Learning. It explains Deep Learning basics such as CNN, RNN, NLP, etc. Each chapter comes with mathematics, figures, and code.

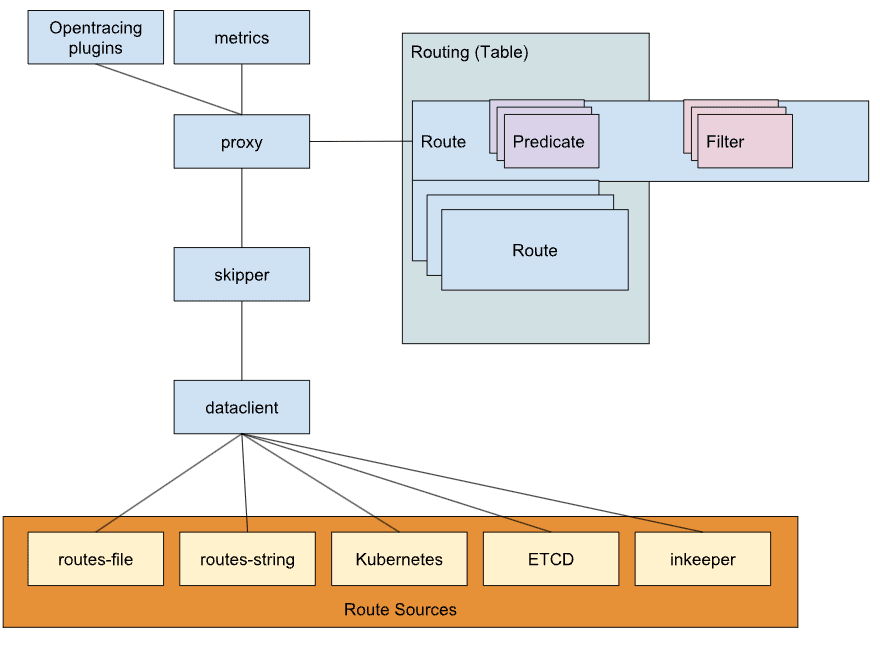

The Architecture of Skipper

Skipper is an HTTP router and reverse proxy for service composition.

- It's released as a server package `skipper` and a client package `eskip`.

- The `skipper` package can load routes from both `eskip` config files and Kubernetes Ingress resource objects.

- The internal package `proxy` of `skipper` dynamically changes the routing table populated by `skipper`.

- A route is one entry in the routing table, consisting of `predicates` for routing HTTP requests, `filters` for modifying the request and the response, and a backend, `<shunt>`, or `<loopback>`.

For each request,

- Proxy creates a context and enhances it with an OpenTracing API span.

- Proxy checks rate limits and looks up the route in the routing table.

- Skipper applies request filters.

- Skipper checks route-local rate limits and the circuit breakers, and makes the backend call.

- Skipper automatically retries if there is a TCP/TLS connection error.

- Skipper applies response filters.

There are two special cases in which skipper won't forward requests to the backend:

- Shunted route (`<shunt>`): skipper serves the request alone, by using only the filters.

- Loopback route (`<loopback>`): the request is passed to the routing table for finding another route, based on the changes that the filters made to the request.
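The route model and the two special cases above can be sketched in a few lines of Python. This is only an illustration of the idea, not Skipper's actual Go API: `Route`, `lookup`, `handle`, the dictionary-based request, and the `max_loopbacks` guard are all hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical model, not Skipper's real types.
Request = Dict[str, str]                 # e.g. {"path": "/old"}
Predicate = Callable[[Request], bool]    # decides whether a route matches
Filter = Callable[[Request], Request]    # returns a modified request

SHUNT = "<shunt>"
LOOPBACK = "<loopback>"


@dataclass
class Route:
    name: str
    predicates: List[Predicate]
    filters: List[Filter] = field(default_factory=list)
    backend: str = SHUNT  # a backend URL, SHUNT, or LOOPBACK


def lookup(table: List[Route], request: Request) -> Optional[Route]:
    """Return the first route whose predicates all match the request."""
    for route in table:
        if all(p(request) for p in route.predicates):
            return route
    return None


def handle(table: List[Route], request: Request, max_loopbacks: int = 8) -> str:
    """Describe where the request ends up after routing."""
    for _ in range(max_loopbacks + 1):
        route = lookup(table, request)
        if route is None:
            return "404: no route"
        for f in route.filters:
            request = f(request)
        if route.backend == SHUNT:
            return f"served by filters of {route.name}"
        if route.backend == LOOPBACK:
            continue  # look up again with the modified request
        return f"forwarded to {route.backend}{request['path']}"
    return "500: too many loopbacks"


table = [
    Route("legacy", [lambda r: r["path"] == "/old"],
          [lambda r: {**r, "path": "/new"}], LOOPBACK),
    Route("health", [lambda r: r["path"] == "/healthz"], [], SHUNT),
    Route("app", [lambda r: r["path"].startswith("/new")], [],
          "https://backend.example"),
]

print(handle(table, {"path": "/old"}))      # rewritten, then re-routed
print(handle(table, {"path": "/healthz"}))  # answered without a backend
```

The loopback route never reaches a backend itself; it only rewrites the request so that a second lookup picks a different route.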

Tools for creating beautiful diagrams

Tools mentioned in the thread: yeD, draw.io, visio, plantuml, simplediagrams, AsciiFlow, Gliffy, Omnigraffle, Graphviz, ipe, Dia, gravit.io, limnu.com, LucidChart, plotdevice.io, monodraw, mermaid, whimsical.co, LaTeX/TikZ, ...

This is HN at its best: nobody answered the original question.

Understanding Parser Combinators

fsharpforfunandprofit.com 1 | fsharpforfunandprofit.com 2 | fsharpforfunandprofit.com 3 | fsharpforfunandprofit.com 4

The series provides a step-by-step tutorial on creating a basic JSON parser library using parser combinators. Although the implementation is in F#, the concept is the same in other programming languages.

- Step 1: The parser gives the result (true, "remaining characters") or (false, "original characters"), depending on whether the parser can successfully consume the characters.

- Step 2: The parser gives the result ("success message", "remaining characters") or ("error message", "original characters").

- Step 3: The parser gives the result Success(charToMatch, remaining) or Failure(message).

- Step 4: Convert the parser function to a curried implementation to support more characters.

- Step 5: Encapsulate the parsing function in a type.

- Step 6: Implement the `andThen` combinator. For example, `parserA andThen parserB` = handle char A, and then handle char B.

- Step 7: Similarly, implement the `orElse` combinator.

- Step 8: Use `mapP` to support parsing multiple characters at once and aggregating them into a string. For example, `run parseThreeDigitsAsInt "123A"` gives `Success(123, "A")`.

- Step 9: Use `sequence` to transform a list of parsers into a single parser. For example, `let combined = sequence [parseThreeDigitsAsInt; parserA]` can parse "123AZ" to `Success([123, 'A'], "Z")`.

- Step 10: Use `many`/`many1` to match a parser multiple times, pretty much like the regexes `(pattern)*` and `(pattern)+`.

- Step 11: Throw some results away. For example, when parsing `'abc'`, we want the result `String("abc")`, not `String("'abc'")`.

- Step 12: Add error messages if the input is incorrect.

- Step 13: Apply all the building blocks introduced above to define the JSON parsing rules (https://www.json.org/).
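To show that the idea carries over to other languages, here is a minimal Python sketch of a few of the combinators from the steps above. It is not a port of the F# code from the series: the tuple-based result shape (`("ok", value, remaining)` or `("fail", message)`) and the snake_case names `pchar`, `and_then`, `or_else`, `map_p`, `many`, `many1`, and `any_of` are assumptions made for this example.

```python
# A parser is a function: text -> ("ok", value, remaining) or ("fail", message).

def pchar(expected):
    """Parser that matches exactly one expected character."""
    def parse(text):
        if not text:
            return ("fail", "no more input")
        if text[0] == expected:
            return ("ok", expected, text[1:])
        return ("fail", f"expected {expected!r}, got {text[0]!r}")
    return parse


def and_then(first, second):
    """Run first, then second on the remaining input; pair up the values."""
    def parse(text):
        r1 = first(text)
        if r1[0] == "fail":
            return r1
        r2 = second(r1[2])
        if r2[0] == "fail":
            return r2
        return ("ok", (r1[1], r2[1]), r2[2])
    return parse


def or_else(first, second):
    """Try first; if it fails, try second on the same input."""
    def parse(text):
        r1 = first(text)
        return r1 if r1[0] == "ok" else second(text)
    return parse


def map_p(fn, parser):
    """Transform the value of a successful parse."""
    def parse(text):
        r = parser(text)
        return r if r[0] == "fail" else ("ok", fn(r[1]), r[2])
    return parse


def many(parser):
    """Match zero or more times, like (pattern)* in a regex."""
    def parse(text):
        values = []
        while True:
            r = parser(text)
            if r[0] == "fail" or r[2] == text:  # stop on failure or no progress
                return ("ok", values, text)
            values.append(r[1])
            text = r[2]
    return parse


def many1(parser):
    """Match one or more times, like (pattern)+ in a regex."""
    return map_p(lambda pair: [pair[0]] + pair[1],
                 and_then(parser, many(parser)))


def any_of(chars):
    """Parser that matches any single character from chars."""
    parser = pchar(chars[0])
    for c in chars[1:]:
        parser = or_else(parser, pchar(c))
    return parser


digit = any_of("0123456789")
digits_as_int = map_p(lambda ds: int("".join(ds)), many1(digit))

print(and_then(pchar("A"), pchar("B"))("ABC"))
print(digits_as_int("123A"))
```

Every combinator takes parsers and returns a new parser, which is what lets small pieces like `pchar` grow into a full JSON grammar.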

Many Nodes, One Distributed System

- A single process or computer that does not communicate with others is NOT a distributed system.

- A distributed system involves multiple entities talking to one another in some way, while also performing their own operations.

- If the system is a bunch of distributed servers, then maybe the components could be referred to as “servers”; if the system involves processes talking to one another, then perhaps the entities are just “processes”. Whatever they are, we call them nodes: the individual entities in a distributed system.

- The nodes in a distributed system run their own operations - these operations are fast. But the nodes also communicate with each other - communication is slow, which happens to be one of the biggest problems in distributed computing.

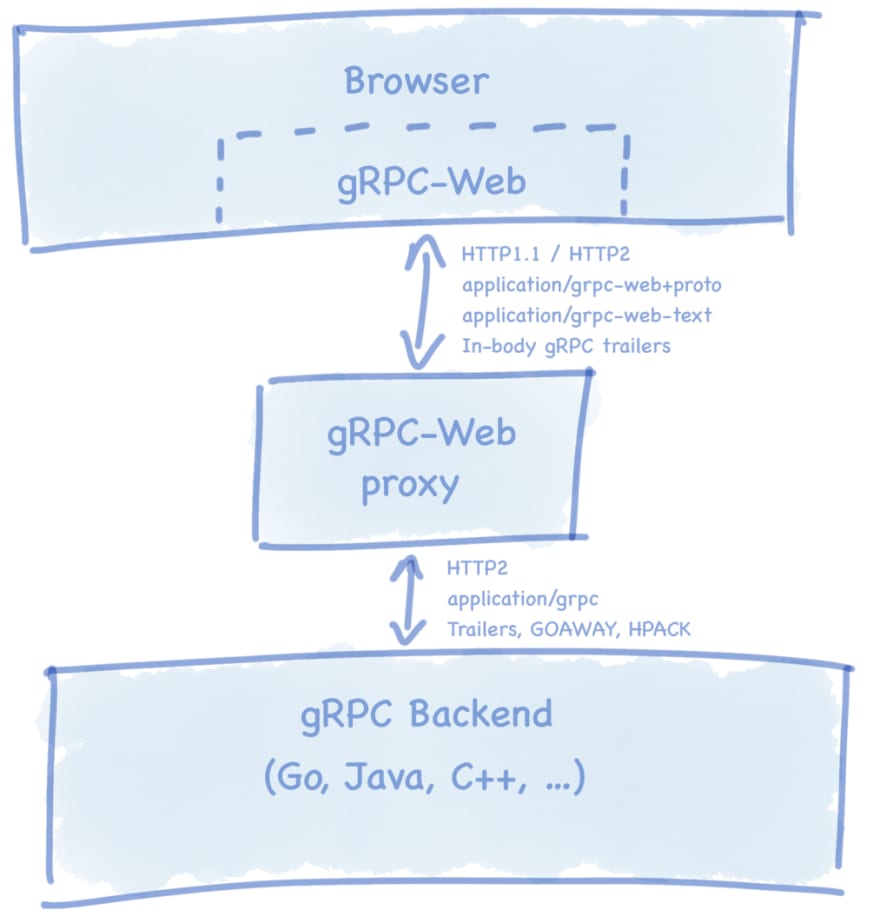

The state of gRPC in the browser

- The challenge of gRPC in the browser: there is no way to force the use of HTTP/2. Also, the browser doesn't support accessing raw HTTP/2 frames.

- How to rescue? Place a gRPC-Web proxy between the browser side and the gRPC server side. The proxy can support both HTTP/1 and HTTP/2.

- There are two implementations of gRPC-Web: improbable-eng/grpc-web and grpc/grpc-web.

How To Speed Up The Code Review

Review strategies:

- Feature changes: fulfillment of business requirements and design.

- Structure refactoring: backward compatibility and design improvements.

- Simple refactoring: readability improvements, because these changes can mostly be done by an IDE.

- Renaming/removing classes: whether the namespaces structure has become better.

- Removing unused code: backward compatibility.

- Code style fixes: in most cases instant merge.

- Formatting fixes: in most cases instant merge.

- Simple refactoring. The code has become more readable? - merge.

- Renaming/moving classes. The class has been moved to a better namespace? - merge.

- Removing unused (dead) code - merge.

- Code style or formatting fixes - merge, people shouldn’t review them, it is the task for linters.

Don’t create huge pull-requests with mixed categories of changes.

- Optimize your code for reading. The code is read much more often than it is written.

- Describe suggested changes in order to provide a context for diffs in the request.

- You should review your code by yourself before submitting the request. Review your own request as if it were not yours. Sometimes you may find something you have missed. This will reduce the cycles of rejecting/fixing.

Monorepo: Please do v/s Please don't

A monorepo is a software development strategy where code for many projects is stored in the same repository.

- Though we get the benefit of easier collaboration / code sharing / single build / no dependency management, it creates tight coupling, and more importantly, at scale, a monorepo must solve every problem that a polyrepo must solve, with the downside of encouraging tight coupling, and the additional herculean effort of tackling VCS scalability.

- The default behavior of a polyrepo is isolation — that’s the whole point. The default behavior of a monorepo is shared responsibility and visibility — that’s the whole point.

Netflix Play API: Building an Evolutionary Architecture

Key takeaways from the talk included:

- Services that have a single identity/responsibility are easier to maintain and upgrade.

- Engineers should spend time identifying core decisions when building services, and whether they require thorough deliberation or rapid experimentation.

- Engineers should start asking why a service exists in order to determine its responsibility.

- Identify your Type 1 and Type 2 decisions; spend 80% of your time debating and aligning on Type 1 decisions.

- > Type 1 decisions are highly consequential and have long-ranging impact, and so these decisions must be made methodically and by engaging in consultation with others.

- > Type 2 decisions are easily changeable, and do not have long-ranging implications, and therefore these decisions should be made quickly and by "high judgment individuals or small groups".

Logs and Metrics and Graphs, Oh My!

- The goal of monitoring is to be able to detect, debug and resolve any problems that occur.

- Monitoring means knowing what’s going on inside your system, how much traffic it’s getting, how it’s performing, how many errors there are.

- Logs come from the Unix system and from applications.

- Metrics are variables collected from applications.

- Both approaches allow creation of high-level graphs to know how many requests your application is serving, how quickly and with how many errors. Both also allow you to slice and dice.

- Metrics give you an aggregated view over this instrumentation, meaning they let you look at your system from an overall perspective.

- Logs give you a view of a smaller number of metrics, meaning you can drill down to a specific incident.

- Logs and metrics are complementary.
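The difference between the two views can be sketched with a toy request recorder. Everything here is made up for illustration (the `record_request` helper, the metric names, the request IDs); a real service would use a metrics library and a logging pipeline instead of in-memory containers.

```python
import json
from collections import Counter

metrics = Counter()  # aggregated view: a handful of numbers for the whole system
logs = []            # per-event view: one structured record per request


def record_request(path, status, duration_ms, request_id):
    """Update the aggregate counters and append one detailed log line."""
    metrics["requests_total"] += 1
    metrics["duration_ms_sum"] += duration_ms
    if status >= 500:
        metrics["errors_total"] += 1
    logs.append(json.dumps({
        "request_id": request_id,
        "path": path,
        "status": status,
        "duration_ms": duration_ms,
    }))


record_request("/login", 200, 12, "r-1")
record_request("/login", 500, 340, "r-2")
record_request("/home", 200, 8, "r-3")

# Metrics answer "how is the system doing overall?"
error_rate = metrics["errors_total"] / metrics["requests_total"]
print(f"error rate: {error_rate:.2f}")

# Logs answer "what exactly happened in this one incident?"
failed = [rec for rec in map(json.loads, logs) if rec["status"] >= 500]
print(failed)
```

The counters stay the same size no matter how much traffic arrives, while the log grows by one record per request. That is why metrics suit the overall picture and logs suit drilling down.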

Courier: Dropbox migration to gRPC

Dropbox's core SOA framework is Courier, a gRPC-based protocol.

- It encodes each service's identity into the TLS certificate. Plus, it brings Access Control Lists (ACLs) and rate limits.

- By using the service identity, the service's logs, stats, traces are searchable in the dashboard. Each service has granular attribution of load, errors, and latency.

- Every gRPC request includes a deadline, indicating how long the client will wait for a reply.

- It introduces a LIFO queue acting as an automatic circuit breaker. The queue is not only bounded by size, but critically, it’s also bounded by time. A request can only spend so long in the queue.

- It modifies both gRPC-core and gRPC-python to support session resumption, which skips the full TLS handshake and makes requests faster.

- Migrate from the old RPC framework to Courier.

  - Write a common interface supporting both frameworks.

  - Migrate to the new interface.

  - Switch clients to use Courier RPC.

  - Clean up the legacy code.

- Lessons learned

  - Observability is a feature. Having all the metrics and breakdowns out-of-the-box is invaluable during troubleshooting.

  - Standardization and uniformity are important. They lower cognitive load, and simplify operations and code maintenance.

  - Try to minimize the amount of boilerplate code developers need to write. Codegen is your friend here.

  - Make migration as easy as possible. Migration will likely take way more time than the development itself. Also, migration is only finished after cleanup is performed.

  - An RPC framework can be a place to add infrastructure-wide reliability improvements, e.g. mandatory deadlines, overload protection, etc. Common reliability issues can be identified by aggregating incident reports on a quarterly basis.
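The LIFO queue bounded by both size and time, mentioned earlier in this section, is easy to picture with a small sketch. This is not Dropbox's implementation: the class name `BoundedLifoQueue`, the reject-when-full behaviour, and the injectable `clock` parameter are assumptions made so the example stays self-contained and testable.

```python
import time
from collections import deque


class BoundedLifoQueue:
    """Hypothetical LIFO request queue bounded by size and by waiting time."""

    def __init__(self, max_size, max_wait_seconds, clock=time.monotonic):
        self._items = deque()  # (enqueue_time, request); oldest on the left
        self._max_size = max_size
        self._max_wait = max_wait_seconds
        self._clock = clock

    def put(self, request):
        """Enqueue a request; return False (reject) when the queue is full."""
        if len(self._items) >= self._max_size:
            return False
        self._items.append((self._clock(), request))
        return True

    def get(self):
        """Return the newest request that has not waited too long, or None."""
        now = self._clock()
        # Enqueue times only grow, so expired entries are always on the left.
        while self._items and now - self._items[0][0] > self._max_wait:
            self._items.popleft()
        if not self._items:
            return None
        return self._items.pop()[1]  # newest first: LIFO


# Usage with a fake clock so the timing is deterministic.
now = [0.0]
queue = BoundedLifoQueue(max_size=2, max_wait_seconds=1.0, clock=lambda: now[0])
queue.put("a")
now[0] = 0.5
queue.put("b")
print(queue.put("c"))  # False: bounded by size
print(queue.get())     # "b": the newest request is served first
now[0] = 1.2
print(queue.get())     # None: "a" waited longer than one second
```

Serving the newest request first means that, under overload, the requests most likely to still be within their deadline get answered, while stale ones are dropped instead of wasting work.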
