The case of disappearing metrics in Kubernetes

Shyamala

Posted on May 24, 2020

🧐 Context 🧐

My team hosts a Kubernetes platform on EKS for several teams. When EKS announced support for Kubernetes version 1.16, we upgraded our infrastructure. This is a pretty routine process for us. We use Prometheus and Grafana dashboards to observe and monitor our infrastructure.

After we upgraded, our metrics dashboard stopped showing RAM and CPU utilization of pods. I set out to find out why.

⚠️ Disclaimer ⚠️

Before we move ahead,

- I am a newbie to Kubernetes learning the tricks on the job

- This is my journey on how I debugged the issue, so what is obvious to a Kubernetes expert is not obvious to me.

TL;DR

If you already know what caused this issue, jump to the final section.

Debugging Step 1:

So the dashboard is not showing metrics. Normally kubectl has commands that show the CPU and RAM utilization of nodes and pods, so I checked whether those worked.

> kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

ip-xx-xx-xxx-xxx.ec2.internal 8xxm 5% 2xxxxMi 23%

ip-xx-xx-xxx-xxx.ec2.internal 2xxxxm 15% 3xxxxMi 29%

ip-....

> kubectl top pods

NAME CPU(cores) MEMORY(bytes)

xxxx-wnpgj xxm xxMi

xxxx-r4k85 xxm xxxMi

...

🤔 What does this mean? 🤔

Kubernetes is able to fetch the metrics. These are the same metrics the HPA (Horizontal Pod Autoscaler) uses to scale pods based on resource utilization.

Debugging Step 2:

Since the dashboard does not show the resource utilization metrics but the kubectl top command works, my next thought was that maybe scaling does not work properly anymore.

According to the Kubernetes documentation on resource usage monitoring:

The resource metrics pipeline provides a limited set of metrics related to cluster components such as the Horizontal Pod Autoscaler controller, as well as the kubectl top utility. These metrics are collected by the lightweight, short-term, in-memory metrics-server and are exposed via the metrics.k8s.io API

So next I wanted to check the HPA status for one of our teams.

> kubectl get hpa -n xxx

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

xxx-worker Deployment/xxx-worker 82/150, 5%/100% 1 6 1 4d19h

Since the targets look fine in the above output, I wanted to see whether scaling had actually happened at any point.

> kubectl describe hpa -n xxx

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedGetResourceMetric 25m (x24 over 2d23h) horizontal-pod-autoscaler unable to get metrics for resource cpu: no metrics returned from resource metrics API

Normal SuccessfulRescale 9m56s (x233 over 2d23h) horizontal-pod-autoscaler New size: 2; reason: cpu resource utilization (percentage of request) above target

Normal SuccessfulRescale 4m9s (x233 over 2d22h) horizontal-pod-autoscaler New size: 1; reason: All metrics below target

🤔 What does this mean? 🤔

As we can see, fetching the resource metric failed at some point, but the system recovered and was able to scale successfully.

Since there was a FailedGetResourceMetric event at some point, I thought this could lead me to why metrics do not appear in the dashboard.
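To make the TARGETS column above concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with the default tolerance of 0.1. The function names are my own, and the sketch deliberately ignores details such as missing metrics, pods that are not ready yet, and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_value, target_value, tolerance=0.1):
    """Replica count suggested by a single metric (simplified)."""
    ratio = current_value / target_value
    # If the ratio is close enough to 1.0, the HPA skips scaling.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def hpa_decision(current_replicas, metrics, min_pods, max_pods):
    """With several metrics, the largest suggestion wins, clamped to min/max."""
    wanted = max(desired_replicas(current_replicas, cur, tgt) for cur, tgt in metrics)
    return max(min_pods, min(max_pods, wanted))


# Values from the kubectl get hpa output above: 82/150 and 5%/100%, 1 replica.
print(hpa_decision(1, [(82, 150), (5, 100)], min_pods=1, max_pods=6))
# Hypothetical spike: CPU at 250% of the request against a 100% target.
print(hpa_decision(1, [(82, 150), (250, 100)], min_pods=1, max_pods=6))
```

With both metrics well below target, the suggestion stays at the minimum of one replica, which matches the REPLICAS column in the output.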

Debugging Step 3:

As we have seen from the documentation quoted above, and from the error no metrics returned from resource metrics API, there is some component in Kubernetes that is responsible for the metrics. So I cast a wide net.

> kubectl get all -n metrics | grep metrics | grep pod

pod/metrics-server-xxxxxxx-xxxxx 1/1 Running 0 4d21h

pod/prometheus-kube-state-metrics-xxxx-xxxxx 1/1 Running 0 4d21h

This sounds promising. metrics-server sounds like a server that deals with metrics, so naturally I was interested in its logs.

> kubectl logs -n metrics pod/metrics-server-xxxxxxx-xxxxx

I0524 11:17:54.371856 1 manager.go:120] Querying source: kubelet_summary:ip-xx-xx-xxx-xxx.ec2.internal

I0524 11:17:54.388840 1 manager.go:120] Querying source: kubelet_summary:ip-xx-xx-xxx-xxx.ec2.internal

I0524 11:17:54.495829 1 manager.go:148] ScrapeMetrics: time: 233.232388ms, nodes: x, pods: xxx

E0524 11:18:18.542051 1 reststorage.go:160] unable to fetch pod metrics for pod my-namespace/team-worker-xxxxx-xxxx: no metrics known for pod

🤔 What does this mean? 🤔

As soon as I saw the word ScrapeMetrics (which my brain translated to something Prometheus related) and the error unable to fetch pod metrics for pod my-namespace/team-worker-xxxxx-xxxx: no metrics known for pod, I thought I had found the issue.

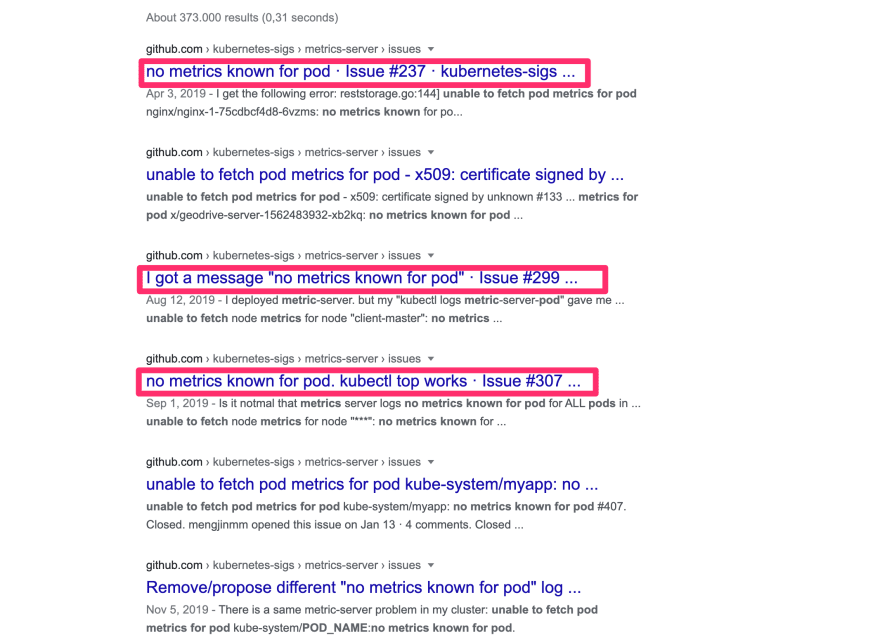
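When the log is longer than a few lines, it helps to pull out only the pods named in this error. The following is a rough Python sketch, assuming the usual klog line layout (severity letter, date, time, thread id, file:line, message); the regular expressions and the function name are my own and are not part of metrics-server.

```python
import re

# klog header: severity letter, mmdd, time, thread id, file:line, then the message.
KLOG_LINE = re.compile(
    r"^(?P<severity>[IWEF])(?P<date>\d{4}) (?P<time>[\d:.]+)\s+(?P<thread>\d+) "
    r"(?P<source>[^\]]+)\] (?P<message>.*)$"
)
POD_WITHOUT_METRICS = re.compile(
    r"unable to fetch pod metrics for pod (?P<pod>\S+): no metrics known for pod"
)


def pods_without_metrics(lines):
    """Return namespace/name of every pod named in a 'no metrics known' error."""
    pods = []
    for line in lines:
        parsed = KLOG_LINE.match(line.strip())
        if not parsed or parsed["severity"] != "E":
            continue
        hit = POD_WITHOUT_METRICS.search(parsed["message"])
        if hit:
            pods.append(hit["pod"])
    return pods


# Lines copied from the metrics-server log shown above.
SAMPLE_LOG = """\
I0524 11:17:54.371856 1 manager.go:120] Querying source: kubelet_summary:ip-xx-xx-xxx-xxx.ec2.internal
I0524 11:17:54.495829 1 manager.go:148] ScrapeMetrics: time: 233.232388ms, nodes: x, pods: xxx
E0524 11:18:18.542051 1 reststorage.go:160] unable to fetch pod metrics for pod my-namespace/team-worker-xxxxx-xxxx: no metrics known for pod
""".splitlines()

print(pods_without_metrics(SAMPLE_LOG))
```

The resulting pod names can then be checked one by one with kubectl get po, which is what eventually revealed the pattern in Step 5.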

Debugging Step 4:

As any seasoned developer would do, I took the error message and googled it. That turned up a number of links, and on a quick scan I found a couple of interesting ones.

Every one of them seemed to suggest the following change to the metrics-server configuration:

command:

- /metrics-server

- --kubelet-insecure-tls #<-- 🤓

- --kubelet-preferred-address-types=InternalIP #<-- 🤓

--kubelet-insecure-tls seems like something you would do in a test setup, so I wanted to understand a bit more about what these flags do.

From the documentation, it is clear that the above flag is intended for testing purposes only, and in the logs of metrics-server I did not find any errors pertaining to certificates. So I did not add the flag.

--kubelet-preferred-address-types determines which address type is used to connect to the nodes when fetching metrics.

So before adding this config I wanted to verify whether the metrics-server API was reachable.

> kubectl get apiservices | grep metrics-server

v1beta1.metrics.k8s.io kube-system/metrics-server True 4d23h

Since this API service is reported as available, adding those two flags would not make sense. I wanted to see if I could get some raw metrics by querying this API.

> kubectl get --raw "/apis/metrics.k8s.io/v1beta1/pods" | jq '.items | .[] | select(.metadata.namespace == "xxx")'

{

"metadata": {

"name": "xxx-worker-6c5bd59857-xxxxx",

"namespace": "xxx",

"selfLink": "/apis/metrics.k8s.io/v1beta1/namespaces/xxx/pods/xxx-worker-6c5bd59857-xxxxx",

"creationTimestamp": "2020-05-24T12:06:45Z"

},

"timestamp": "2020-05-24T12:05:48Z",

"window": "30s",

"containers": [

{

"name": "xxx-worker",

"usage": {

"cpu": "xxxxxn",

"memory": "xxxxxxxKi"

}

}

]

}

🤔 What does this mean? 🤔

So everything seems to function well. I do not know why the metrics I am clearly able to query via kubectl are not available in the dashboard.
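The raw values are not very readable: CPU comes back in nanocores (suffix n) and memory in kibibytes (suffix Ki). Below is a small Python sketch that turns such a response into the millicores and MiB that kubectl top prints. The JSON document mirrors the shape of the response above, but the usage numbers are made up for illustration, and the helper names are my own.

```python
import json


def cpu_millicores(quantity):
    """Convert a CPU quantity such as '250000000n' or '250m' to millicores."""
    divisors = {"n": 1_000_000, "u": 1_000, "m": 1}
    if quantity[-1] in divisors:
        return int(quantity[:-1]) / divisors[quantity[-1]]
    return float(quantity) * 1000  # no suffix: plain cores


def memory_mib(quantity):
    """Convert a memory quantity such as '204800Ki' to MiB."""
    factors = {"Ki": 1 / 1024, "Mi": 1, "Gi": 1024}
    if quantity[-2:] in factors:
        return int(quantity[:-2]) * factors[quantity[-2:]]
    return int(quantity) / (1024 * 1024)  # no suffix: plain bytes


def usage_by_container(pod_metrics_list, namespace):
    """Return (pod, container, millicores, MiB) rows for one namespace."""
    rows = []
    for item in pod_metrics_list["items"]:
        if item["metadata"]["namespace"] != namespace:
            continue
        for container in item["containers"]:
            usage = container["usage"]
            rows.append((item["metadata"]["name"], container["name"],
                         cpu_millicores(usage["cpu"]), memory_mib(usage["memory"])))
    return rows


# Hypothetical response body; the usage values are invented for this example.
RAW = """
{"items": [{"metadata": {"name": "xxx-worker-6c5bd59857-xxxxx", "namespace": "xxx"},
            "window": "30s",
            "containers": [{"name": "xxx-worker",
                            "usage": {"cpu": "250000000n", "memory": "204800Ki"}}]}]}
"""

for row in usage_by_container(json.loads(RAW), "xxx"):
    print(row)
```

In practice the input would be the output of the kubectl get --raw command shown above rather than a hard-coded string.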

Debugging Step 5:

Next I wanted to look at the source code of metrics-server to figure out under what circumstances this error happens.

podMetrics, err := m.getPodMetrics(pod)

if err == nil && len(podMetrics) == 0 {

err = fmt.Errorf("no metrics known for pod \"%s/%s\"", pod.Namespace, pod.Name)

}

if err != nil {

klog.Errorf("unable to fetch pod metrics for pod %s/%s: %v", pod.Namespace, pod.Name, err)

return nil, errors.NewNotFound(m.groupResource, fmt.Sprintf("%v/%v", namespace, name))

}

It is clear from the code that the pod exists but no metrics are available for it. So I tailed the logs and looked up a pod for which metrics were not found.

> kubectl get po -n xxx

NAME READY STATUS RESTARTS AGE

xxx-worker-6c5bd59857-xxxxx 1/1 Running 0 1m5s

🤔 What does this mean? 🤔

It is clear from the above that the pods without metrics are all pods that were created recently. Since metrics-server scrapes metrics periodically, they are simply not available yet.

In my opinion this is not really an ERROR but expected behaviour. A WARN or INFO would be more reasonable. It is good to see I am not alone in this:

Remove/propose different "no metrics known for pod" log

#349


There are a lot of issues where users are seeing metrics-server reporting error "no metrics known for pod" and asking for help.

To my understanding this error is expected to occur in normal healthy metrics-server. Metrics Server periodically scrapes all nodes to gather metrics and populate it's internal cache. When there is a request to Metrics API, metrics-server reaches to this cache and looks for existing value for pod. If there is no value for existing pod in k8s, metrics server reports error "no metrics known for pod". This means this error can happen in situation when:

- fresh metrics-server is deployed with clean cache

- query is about fresh pod/node that was not yet scraped

Providing better information to users would greatly reduce throughput of tickets.

I had been chasing the wrong lead after all.
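The behaviour described in that issue can be illustrated with a toy model. This is not how metrics-server is implemented; it is only a sketch, with names of my own choosing, of why a pod created between two scrapes has no entry in a periodically refreshed cache.

```python
class ToyMetricsCache:
    """Toy stand-in for a periodically refreshed metrics cache."""

    def __init__(self):
        self._usage = {}

    def scrape(self, running_pods):
        # Each scrape replaces the cache with the pods that exist right now.
        self._usage = {pod: "usage sample" for pod in running_pods}

    def get(self, pod):
        if pod not in self._usage:
            raise LookupError(f"no metrics known for pod {pod}")
        return self._usage[pod]


cache = ToyMetricsCache()
running = ["xxx/worker-old"]
cache.scrape(running)

running.append("xxx/worker-new")  # a pod created between two scrapes
try:
    cache.get("xxx/worker-new")
    first_lookup = "ok"
except LookupError as err:
    first_lookup = str(err)

cache.scrape(running)  # the next periodic scrape picks the new pod up
second_lookup = cache.get("xxx/worker-new")

print(first_lookup)
print(second_lookup)
```

The first lookup fails even though the pod is running, and the same lookup succeeds after the next scrape, which matches what I saw for pods that were only about a minute old.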

Debugging Step 6:

I had ruled out metrics-server as the cause of the bug. This got me thinking that I should find out how Prometheus scrapes the metrics.

After a lot of reading I figured out that Prometheus scrapes, among other targets, the metrics exposed by kube-state-metrics. It is very important to understand the difference between metrics-server and kube-state-metrics.

Comment for

#55


kube-state-metrics focuses on

...on the health of the various objects inside, such as deployments, nodes and pods.

metrics-server on the other hand implements the Resource Metrics API

To summarize in a sentence: kube-state-metrics exposes metrics for all sorts of Kubernetes objects. metrics-server only exposes very few metrics to Kubernetes itself (not scrapable directly with Prometheus), like node & pod utilization.

Looking at the logs of the Prometheus server and kube-state-metrics, nothing out of the ordinary jumped out.

Finally

I asked for help. I explained what I had done so far, and my teammate suggested the obvious: did you check if the query works?!

So I went to the Prometheus query explorer and executed

container_cpu_usage_seconds_total{namespace="xxx", pod_name="xxx-worker-6c5bd59857-xxxxx"}

Returned no results

container_cpu_usage_seconds_total{namespace="xxx"}

Returned results

container_cpu_usage_seconds_total{namespace="xxx", pod="xxx-worker-6c5bd59857-xxxxx"}

Returned results

🤔 What does this mean? 🤔

So somewhere during the EKS version upgrade, support for the pod_name label was dropped.

After fixing the queries in Grafana, I could finally see the metrics.
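If there are many dashboards to fix, the rename can be scripted. Here is a minimal Python sketch that rewrites the two renamed labels in a PromQL string. It assumes that pod_name and container_name only ever appear as label names in your queries, since it replaces every whole-word occurrence, including any inside quoted label values; the function name is my own.

```python
import re

# Old cAdvisor label names and their replacements as of Kubernetes 1.16.
RENAMED_LABELS = {"pod_name": "pod", "container_name": "container"}
OLD_LABEL = re.compile(r"\b(pod_name|container_name)\b")


def migrate_query(query):
    """Replace the removed label names with the new ones in a PromQL string."""
    return OLD_LABEL.sub(lambda match: RENAMED_LABELS[match.group(1)], query)


old_query = 'container_cpu_usage_seconds_total{namespace="xxx", pod_name="xxx-worker-6c5bd59857-xxxxx"}'
print(migrate_query(old_query))

# Grouping clauses are covered as well, because the whole word is matched.
print(migrate_query('sum(rate(container_cpu_usage_seconds_total{container_name!=""}[5m])) by (pod_name)'))
```

Review the rewritten queries before saving them back to Grafana, because a plain text substitution cannot tell a label name from a string that happens to contain the same word.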

Lessons Learned

- Learn the components and their purpose before assuming

- Always read the release notes when upgrading even minor versions

Removed cadvisor metric labels pod_name and container_name to match instrumentation guidelines. Any Prometheus queries that match pod_name and container_name labels (e.g. cadvisor or kubelet probe metrics) must be updated to use pod and container instead. (#80376, @ehashman)

- The solution is always the obvious solution

I welcome all constructive feedback and comments
