03.12.19 - Turn, return, turn

R Sanjabi

Posted on December 4, 2019

My weekly accountability report for my self-study approach to learning data science.

This past week has been about downtime, sweet, sweet downtime, but also about returning to old things.

Even though I'm not in school or even following a scheduled curriculum at the moment, when Wednesday of last week rolled around (the day before Thanksgiving), I felt like I was back in high school. My mind would not settle. Focus laughed in my face while concentration flipped the bird at me. I gave up on trying to be productive and did the holidays with family. After that, the holiday chill stretched into post-holiday weekend lethargy, and not a lot happened until Monday kicked me in the seat. I do believe in the power of R&R (I'm too old to believe otherwise), BUT I still wish I had more to report.

In some ways, I've stopped obsessing over what I should know to get hired and have instead accepted that I know quite a bit about some things and nothing about quite a few others. So I'll just keep returning to whatever seems most pressing at any given moment. Right now, that means reviewing my results and the suggested study materials from Workera. It's not exactly what I would have chosen to focus on, but I hope a modicum of effort will pay off in better scores on retries.

I do think feedback from interviewing will point me toward weak spots and areas to focus on. Or perhaps I won't get any interviews, in which case I may conclude that I should spend more time on portfolio work to land them and worry about passing them later.

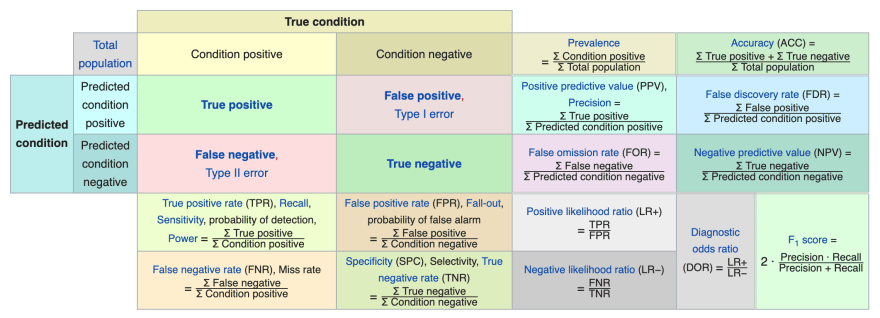

So, more specifically, what does my studying look like? Mostly returning to concepts I've already been exposed to. I blasted through the Full Stack Deep Learning videos at top speed and went through my Coursera Deep Learning notes. Topics I covered included CNN layer mathematics, the ResNet and Inception network architectures, loss functions, and infrastructure for development, training, and deployment (serverless functions vs. instances vs. on-premises hardware). I spent time reviewing this table. We covered it in the 365 Data Science MOOC I did on Udemy, but nine months and some freelance work later, it holds more significance than it did then.
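For anyone wondering what "CNN layer mathematics" boils down to, one staple from the Coursera material is the output size of a convolution layer, floor((n + 2p - f) / s) + 1, for an n x n input, f x f filter, padding p, and stride s, along with the layer's parameter count. The sketch below is my own illustration rather than code from either course; the function names are made up for this example, and it assumes square inputs and filters.

```python
def conv_output_size(n: int, f: int, padding: int = 0, stride: int = 1) -> int:
    # floor((n + 2p - f) / s) + 1, applied to height and width independently
    return (n + 2 * padding - f) // stride + 1


def conv_params(f: int, c_in: int, c_out: int) -> int:
    # each of the c_out filters has f * f * c_in weights plus one bias term
    return (f * f * c_in + 1) * c_out


if __name__ == "__main__":
    print(conv_output_size(6, 3))             # 4
    print(conv_output_size(7, 3, stride=2))   # 3
    print(conv_output_size(5, 3, padding=1))  # 5 ("same" padding for a 3x3 filter)
    print(conv_params(5, 3, 6))               # 456
```

The parameter count is the part that surprises people: it depends only on filter size and channel counts, not on the input's height and width, which is why convolutions are so much cheaper than fully connected layers on images.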

I ended up taking the Full Stack Deep Learning assessment test today but haven't heard back yet about how I did.

I'm also returning to B-trees (trying LeetCode this time after doing HackerRank earlier in the year) because I haven't looked at them in decades. (Fun fact: B-trees are older than me, but not by much.) I find it fascinating that I'm reviewing both deep learning (which wasn't even a thing when I took AI in graduate school; back then I learned about neural nets, expert systems, and genetic algorithms) and introductory computer science algorithms.

Moral of the story: no one ever really dies. No. No one ever really gets it on the first try. Still no. If at first you don't put your computer science degree to use, try, try again? Eh, maybe take a break now and then and watch The Mandalorian.
