Abel Peter

Posted on July 28, 2023

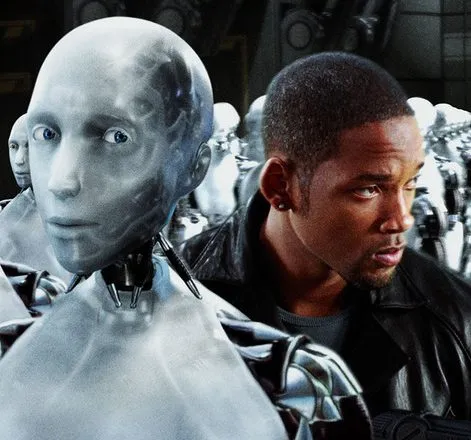

Giving AI rights.

The alignment problem is about how to get AI on our side. With every technology, we have had to find a way to make it align with our interests. Take cars. Cars have been a massive improvement in our mobility as a society. I heard someone say the 20th century was the car century. Economies and households all over the world based their entire livelihoods on cars. Aligning cars has never been easy, though. Cars today are still involved in a lot of fatal accidents. You can argue that human judgment is the main culprit, which is true, but we have also built seatbelts and made many design and technology improvements, and still, here we are. With every technology before AI, success meant a good symbiosis between humans and the technology, but now success comes from standing at a healthy distance and letting it do its thing. With every new technology, we move further and further from the action. This is the crux of the issue: how far do we stand from the black box?

Viewing it this way incites a lot of fantastical ideas about having an AGI that is either perfect and always great, or is going to kill everyone. Sorry, even cars can’t live up to that standard.

The alignment problem is like walking on a thread: even if you end up losing your balance and falling, it would still be a momentous achievement.

Along this line, we can anticipate certain events. One that Hinton mentioned is “AGI suing for legal rights”. That is obviously a long way off for now.

We need balance.

The potential risks associated with AGI development cannot be overstated. AGI systems possess the capacity to surpass human-level intelligence and exhibit autonomous decision-making capabilities. Without careful regulation and safety measures, there is a real possibility of unintended consequences and severe negative outcomes. These could range from unintended harmful actions due to misaligned goals to the emergence of superintelligent systems that could surpass human control and impact society in ways that we cannot predict or manage effectively.

On the other hand, it is equally important to avoid stagnation or unnecessarily restrictive regulations that hinder AGI development. AGI has the potential to bring about tremendous societal benefits, from advancements in healthcare and scientific research to improvements in transportation and automation. It holds the promise of addressing complex global challenges, such as climate change, poverty, and disease. However, this potential can only be realized if AGI is developed responsibly and in a manner that prioritizes safety and human well-being.

Either way, a slow and deliberate approach to AGI development offers tactical advantages by prioritizing safety, ethical considerations, reliability, comprehensive understanding, societal integration, and iterative improvement. It allows us to navigate the complexities of AGI development more effectively and ensure that the technology is developed in a manner that aligns with human values, minimizes risks, and maximizes its potential for positive impact.

One difference between cars and AGI is that the harm from cars is decentralized: they cause harm to some people all the time, and that gives us time to correct our mistakes. AGI, by contrast, can cause pandemic-level catastrophes.

It’s better to have an AGI that makes mistakes in small events but cannot cause major black swan events. It’s better to have an AGI that writes dumb lyrics and isn’t coming up with bioweapons than an AGI that cures cancer and old age next year but causes World War Z-level genocide the year after.

AI has to work in small teams before it works in large societies.

Having AGI work in small teams before it operates in larger societal contexts is a prudent approach that offers numerous benefits. By starting deployment in small teams, we can foster experimentation and optimize the system's ability to contribute effectively. Here are some key reasons why this strategy is advantageous:

Iterative Learning and Improvement: Small teams provide a controlled environment where AI systems can be tested, refined, and improved iteratively.

Risk Containment: Deploying AI in small teams helps to contain potential risks and mitigate unintended consequences. In a controlled setting, any adverse effects or errors caused by AI systems are limited to the specific team or project, minimizing the potential impact on a larger scale.

Ethical Considerations and Value Alignment: Small teams offer an opportunity to explore the ethical dimensions of AI and align its values with human values.

Efficient Resource Utilization: Starting with small teams allows for efficient utilization of resources. AI development and deployment often require significant investments in terms of time, expertise, and infrastructure.

AGI can’t do time.

Another problem along that thread is that our laws necessarily allow for mistakes: you break the law first, and the consequences come later. We are not prepared for that when it comes to AGI. You will not be received kindly if you suggest that AGI should be allowed to break the law and then be punished afterward.

First of all, our laws are based on human beings having remorse, and the historical precedent around antisocial behavior is very old. AGI can’t go to jail, or we would have to tell it to feel like it’s in jail. These are questions for future lawyers to argue about, not us.

For this reason, AGI has to have “human parents” for a long time. Almost all big tech companies have a version of their own model that they could scale up. These companies will have to be liable for their creations’ misadventures and spend a lot of resources and brainpower working on the problem of safety before the point, 10 years or so from now, when practically every entity (businesses and people) will have an AI wizard whispering all sorts of incantations into their ears.
