Public Cloud Group

Posted on March 10, 2022

Written by Arne Hase

Originally published on October 20th 2021

Books can be filled with details about how to do CICD. This article gives a quick yet detailed view of why and how to establish a whole CICD workflow using gitlab.com CI and a sample AWS ECS application.

Developing and running complex software projects is a huge task, especially for large teams, and especially when you consider that a project in development needs to be built, deployed, tested and improved continuously. No matter whether services are running on hosts on premise or on a cloud computing platform, as many recurring tasks as possible need to be automated from early on in the lifecycle; otherwise the overhead will be far too much to handle. To be able to quickly reproduce and set up your project, best practice is to automate service deployment, possibly using Infrastructure as Code (IaC) for provisioning your infrastructure in the cloud, for example on AWS. With both, you must keep track of changes to the code as well as to the infrastructure itself, and you need processes for running updates, checks, tests and whatever else is needed to keep the application up to date and running the latest working and released version.

This is where Continuous Integration / Continuous Deployment (CICD) comes into play and changes the game. CI and CD are concepts that have been in use since the nineties. The goal is to continuously integrate applications in development and to continuously deliver them, all completely automated. The main benefits are faster release cycles, continuous testing, better fault detection and isolation, fewer human errors, and reusability across environments and projects. It is a great help for developing robust and reliable applications.

Using the CICD approach involves version control for your sources, as well as worker instances for running automated tests and deploying your infrastructure and workloads. Countless tools can be used to achieve all this. One very well-known combination is GitHub for version control, Jenkins for the workers, and the AWS cloud for running the workloads, with the IaC part written in terraform, for example, and deployed by Jenkins. In such a scenario, Jenkins would cover running tests, deployments and other maintenance jobs. The disadvantage of such an approach is that several platforms have to be managed and a great number of different tools are involved. Many levels of authentication must be implemented and maintained. I’d like to introduce the possibility of having it all under one roof by using gitlab and its integrated CICD solution. The great advantage here is the integration of all the necessary components in one very well implemented and highly flexible solution. It starts with the git repo, which your CICD workers can access directly, reacting to all kinds of git events such as pushes to named branches, all without leaving for another platform or host. There is no need to trigger pipelines on a distant Jenkins; simply use the integrated gitlab CI pipelines and workers.

In the next chapter, we'll take a detailed look at how to establish a whole CICD workflow with gitlab CI, using a sample AWS ECS application.

Some quick facts for integration and pricing:

Integration-wise, you can use it as SaaS or self-managed (on premise or on a cloud service such as AWS; there are even official docker images available).

For the pricing, there is a free tier available and two paid plans to choose from. The free tier is perfectly sufficient for testing. If you use the SaaS version, it includes 400 CICD minutes per month (the total time your workers are running); self-managed installations don't have any minute restriction for workers. With the feature-enriched premium ($19 per user/month) and ultimate ($99 per user/month) plans, the included minutes increase to 10,000 and 50,000 per month respectively for SaaS usage.

kreuzwerker, as an official gitlab partner, happily answers any detailed questions about the solution and purchases.

The sample scenario

In this sample scenario, a simple containerised Java web application that provides a REST API is deployed to the AWS Elastic Container Service (ECS).

The CICD scenario covers automatic building, testing and deployment of the application.

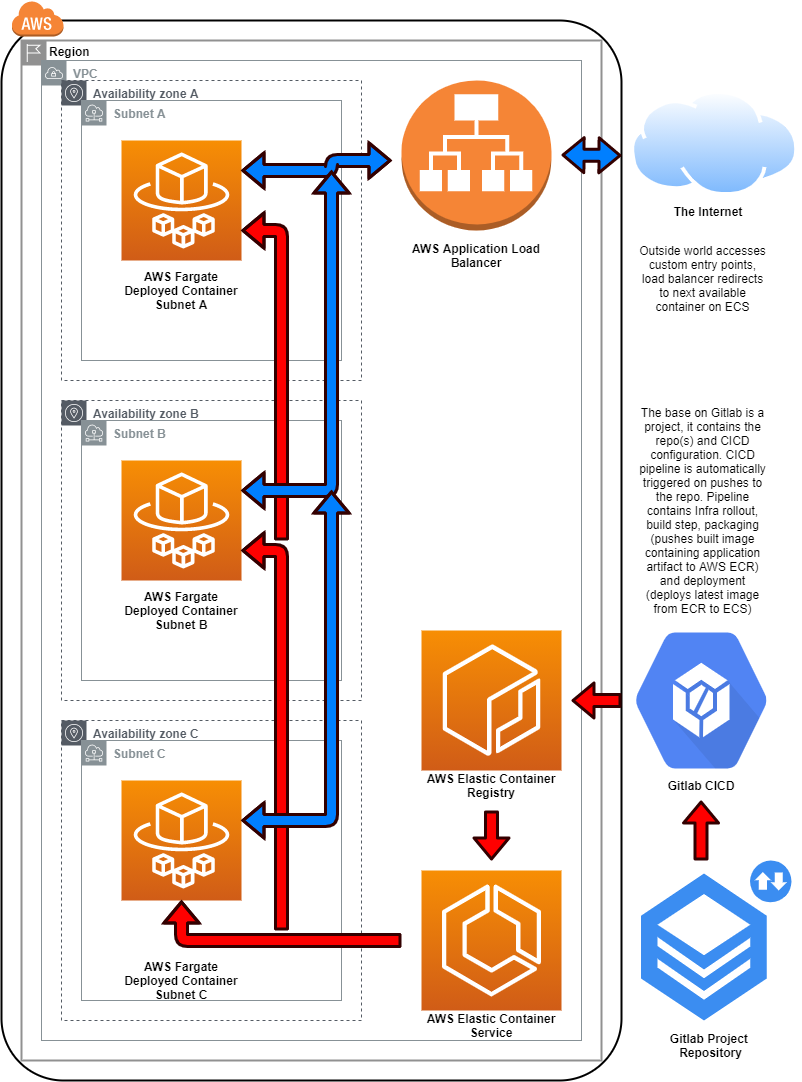

The infrastructure of our sample scenario consists of the following parts (illustrated in the diagram below):

- a VPC with subnets, routing tables, an internet gateway and a NAT gateway

- an ECS Cluster to run the application tasks

- an ECR repository to store images built to run the application

- an ALB and Route53 config to reach the application from the internet

This is an outline of the infra hosting the sample application on the AWS cloud:

The sample application (visible in the above diagram as the Fargate containers) which is the workload of this scenario needs to be built and deployed to the infra just introduced - details about the steps to achieve all this follow in the detailed description of the pipeline.

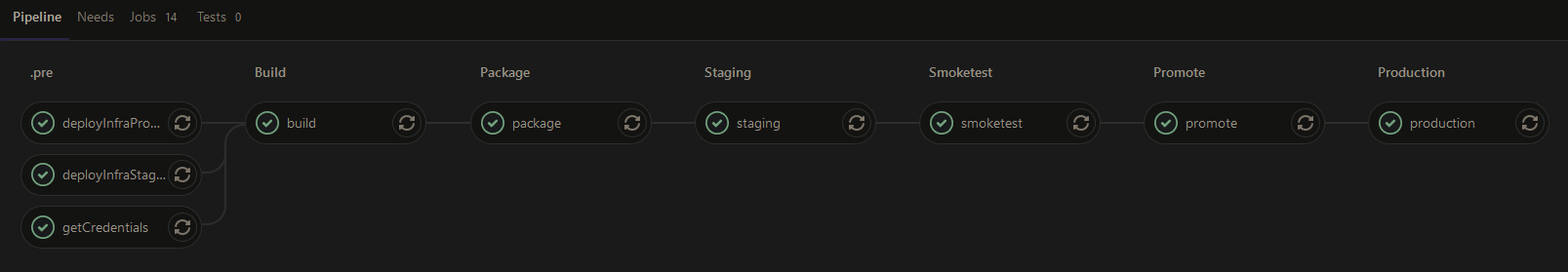

The pipeline layout

Let's introduce the stages of our CICD pipeline:

.pre stage:

- deployInfraProduction step: deployment of the Cloudformation stack containing all infrastructure code for the production environment. If changes have been made to the Cloudformation code, this step will update the infrastructure; if not, it will be left untouched

- deployInfraStaging step: deployment of the Cloudformation stack containing all infrastructure code for the staging environment. If changes have been made to the Cloudformation code, this step will update the infrastructure; if not, it will be left untouched

- getCredentials step: this step retrieves the ECR credentials that are needed in later stages and stores them in an artifact

Build stage:

- build step: builds the Java application using gradle in the openjdk:11 image and stores the artifacts for later use

Package stage:

- package step: builds the container image using docker build with the official docker:latest image and pushes it to the staging container registry in ECR

Staging stage:

- staging step: deploys the container to the staging ECS cluster using the image stored in the staging ECR repository

Smoketest stage:

- smoketest step: checks the availability and data of the exposed API of the application in the staging environment

Promote stage:

- promote step: pulls the image from the staging ECR repository, tags it for production and pushes it to the production ECR repository. Uses the official docker:latest image

Production stage:

- production step: deploys the container to the production ECS cluster using the image stored in production ECR repository

The pipeline will be triggered by git events such as commits and merges to the repo in the gitlab project.

For the first phase, the deployment of the infra to the AWS cloud, you need to provide secrets that grant the access level needed to do the job.

The secrets can be stored as secured and masked variables in the gitlab CI configuration, which looks like this:

All about using variables with gitlab can be found here.
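
As a minimal sketch of how such variables reach a job: gitlab exposes CI variables to the job as environment variables, and the AWS cli reads its credentials from the environment. AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION are the standard names the AWS cli looks for; that this project uses exactly these names is an assumption, and the job name checkAwsAccess is hypothetical.

```yaml
# Hypothetical job, not part of the sample pipeline.
# Assumes AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION
# are defined as masked variables in the gitlab CI settings of the project.
checkAwsAccess:
  stage: .pre
  image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
  script:
    # prints the account and identity the credentials belong to
    - aws sts get-caller-identity
```

No secret appears in the repository this way; the job only relies on the environment the worker provides.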

The whole pipeline configuration must be defined in the file .gitlab-ci.yml in the root of your repository (by default, the file needs to be named exactly .gitlab-ci.yml).

Stage definition looks like this in .gitlab-ci.yml:

    stages:
      - .pre
      - build
      - package
      - staging
      - smoketest
      - promote
      - production

For each step, you can specify in great detail the kind of event that triggers it. For example, you can specify that the infrastructure deployment steps of the .pre stage are only triggered by pushes to the master branch, while the build step is also triggered by pushes to feature branches.
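
The jobs in this article use the only keyword for such conditions. As a minimal sketch of the same idea with the more fine-grained rules keyword: the two job names below are hypothetical and the scripts are placeholders.

```yaml
# Hypothetical jobs, shown only to illustrate trigger conditions.
deployInfraExample:
  stage: .pre
  rules:
    # run only for pushes to the master branch
    - if: $CI_COMMIT_BRANCH == "master"
  script:
    - echo "deploying infrastructure"

buildExample:
  stage: build
  rules:
    # run for pushes to any branch, master and feature branches alike
    - if: $CI_COMMIT_BRANCH
  script:
    - echo "building"
```

Note that rules and only cannot be combined in the same job, so a pipeline would use one style or the other per job.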

In our example we used:

- Cloudformation to provision the infrastructure resources, but this is only an example. Whatever suits your needs best should be your choice.

- gradle to build the sample Java application, which exposes a simple web page showing a value taken from the current gitlab commit reference to indicate which version is running.

- Docker to build, tag and push the images for the AWS ECS tasks to the AWS ECR repository

- A simple shell script using curl to test whether the staging environment shows the expected value from the SSM parameter at the correct URL

The pipeline details per stage

The .pre stage

The .pre stage covers all the infrastructure related tasks:

- create one set of resources/environment for staging

- create one set of resources/environment for production

- obtain credentials to be passed as an artifact for the package and promote stages

For all steps in this stage, the image aws-base:latest from the gitlab registry is used, as the AWS cli is needed to run the deployment of the Cloudformation stacks.

The code for all steps in the .pre stage:

    getCredentials:
      stage: .pre
      image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
      interruptible: true
      only:
        - branches
      script:
        - aws --region eu-west-1 ecr get-login-password > credentials
      artifacts:
        paths:
          - credentials

    .deployInfra:
      stage: .pre
      image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
      only:
        - master
      script:
        - cd cfn
        - ./deploy-infra.sh

    deployInfraStaging:
      extends: .deployInfra
      variables:
        Stage: stage
      resource_group: staging

    deployInfraProduction:
      extends: .deployInfra
      variables:
        Stage: prod
      resource_group: production
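
The extends keyword merges the hidden .deployInfra template into each job that references it. As an illustration of what that means, this is roughly the effective definition of deployInfraStaging after the merge; it is written out by hand here and does not need to appear in .gitlab-ci.yml.

```yaml
# Effective job after gitlab merges .deployInfra into deployInfraStaging.
# For illustration only; the pipeline itself keeps the short form.
deployInfraStaging:
  stage: .pre
  image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
  only:
    - master
  script:
    - cd cfn
    - ./deploy-infra.sh
  variables:
    Stage: stage
  resource_group: staging
```

The production job differs only in the Stage variable and the resource group, which is why the template keeps the two definitions so short.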

The build stage

The build process for the application is next in line. It uses gradle to build the Java sample application. The JDK must therefore be available in this stage, so the image chosen for this stage is openjdk:11. The resulting JAR in $APP_DIR/build/libs/*.jar is stored as an artifact for use in the later packaging step.

The code for the build stage:

    build:
      stage: build
      image: openjdk:11
      interruptible: true
      only:
        - branches
      script:
        - cd $APP_DIR
        - ./gradlew build
      artifacts:
        paths:
          - $APP_DIR/build/libs/*.jar
      cache:
        paths:
          - $GRADLE_HOME/caches/
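
One detail about the cache section: gitlab only caches paths that lie inside the project directory. The article does not show how $GRADLE_HOME is set, so the following is a sketch under the assumption that the Gradle user home is redirected into the project directory through Gradle's GRADLE_USER_HOME environment variable. The job name buildWithCache, the cache key and the expiry time are hypothetical choices.

```yaml
# Hypothetical variant of the build job with an explicit cache location.
buildWithCache:
  stage: build
  image: openjdk:11
  variables:
    # keep Gradle's downloads inside the project directory so they can be cached
    GRADLE_USER_HOME: "$CI_PROJECT_DIR/.gradle"
  cache:
    # one cache per branch
    key: "$CI_COMMIT_REF_SLUG"
    paths:
      - .gradle/caches/
      - .gradle/wrapper/
  script:
    - cd $APP_DIR
    - ./gradlew build
  artifacts:
    paths:
      - $APP_DIR/build/libs/*.jar
    # artifacts are only needed by later stages of the same pipeline
    expire_in: 1 week
```

The cache speeds up later builds, while the artifact is what actually hands the JAR over to the packaging step.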

The packaging stage

In this stage, the docker image containing the runtime environment for the prepared Java sample app is built and pushed to the AWS ECR repository. The repo resides in the staging environment, which was provisioned in the .pre stage.

Requirements for this stage: access to the built Java artifact from the last step, and access to the credentials file that was generated in the .pre stage to get access to the ECR repository in the staging environment.

The image needed to do the packaging (image: docker:latest, the official docker image, as the docker cli is needed for the tasks in this step) does not have the AWS cli available. This is why the authentication to AWS ECR had to be covered in an earlier step and is reused here.

In the before_script phase of this step, which is the step-specific preparation phase, the credentials file is already available in the worker instance, because gitlab downloads the artifacts of earlier stages into the job's working directory. It is needed to grant docker access to the AWS ECR repository. Now the docker cli can authenticate with AWS ECR and upload the built image.

And of course the built sample application needs to be available to build a complete docker image ready for deployment to AWS ECS. It is made available through the artifacts as well.
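
By default, a job receives the artifacts of all jobs from earlier stages, which is what the package step relies on. If you prefer to state that explicitly, a sketch with the dependencies keyword could look like this. The job name packageExplicit is hypothetical; getCredentials and build are the job names used in this article.

```yaml
# Hypothetical variant of the package job that names its artifact sources.
packageExplicit:
  stage: package
  image: docker:latest
  services:
    - docker:dind
  # only fetch artifacts from these two earlier jobs
  dependencies:
    - getCredentials
    - build
  script:
    # the credentials file comes from getCredentials
    - docker login -u AWS --password-stdin $StageImageRepositoryUrl < credentials
    # the JAR comes from build
    - ls $APP_DIR/build/libs/
```

Listing the dependencies documents where each file comes from and avoids downloading artifacts the job does not need.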

Now that everything is in place, the docker cli can build the image and then push it to the AWS ECR repository.

The code for the packaging stage:

    package:
      image: docker:latest
      interruptible: true
      only:
        - branches
      stage: package
      services:
        - docker:dind
      before_script:
        - ls -lah
        - ls -lah /
        - ls -lah /builds
        - mount
        - find / -name credentials 2> /dev/null
        - docker login -u AWS --password-stdin $StageImageRepositoryUrl < credentials
      script:
        - cd $APP_DIR
        - docker build --pull -t "$StageImageRepositoryUrl:$ImageTag" .
        - docker push "$StageImageRepositoryUrl:$ImageTag"
      artifacts:
        paths:
          - credentials
          - $APP_DIR/build/libs/*.jar
      resource_group: staging

The staging stage

Provided everything has gone well up to now, the pipeline would continue in the staging phase - where the sample application image is deployed to the AWS ECS cluster in the staging environment.

The image used for this gitlab CICD stage is the same as used for the infra deployment of the .pre stage.

In this stage another Cloudformation template is deployed, which creates a task in the staging ECS cluster. The docker image used for it was pushed to the staging environment's ECR repository in the previous stage.

The code for the staging stage:

    staging:
      extends: .deploy
      stage: staging
      variables:
        Stage: stage
      resource_group: staging

    .deploy:
      image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
      only:
        - master
      script:
        - cd cfn
        - ./app-deploy.sh
      environment:
        name: $CI_JOB_STAGE
        url: https://$Stage.subdomain.kreuzwerker.de/hello

The smoketest stage

Now that there is a running instance in the staging ECS cluster, we can perform some tests:

A simple shell script checks whether the exposed URLs of the sample web application respond correctly.

- If yes, do nothing. Pipeline execution will continue.

- If no, exit the script with a non-zero exit code so the pipeline fails.

The code for this stage:

    smoketest:
      stage: smoketest
      only:
        - master
      before_script:
        # install jq to parse JSON retrieved by CURL
        - apt update
        - apt install jq -y
      script:
        - echo check for branch $CI_COMMIT_REF_SLUG and commit id $CI_COMMIT_SHORT_SHA
        - ./testApplication.sh
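
The script testApplication.sh itself is not shown in this article. As a minimal sketch of what such a check could look like when written inline: it assumes that the /hello endpoint returns JSON with a field named version that holds the short commit id. The field name, the job name smoketestInline and the chosen image are assumptions, not taken from the sample project.

```yaml
# Hypothetical inline smoke test; the response format is an assumption.
smoketestInline:
  stage: smoketest
  # assumption: any Debian-based image works, since apt is used below
  image: debian:stable-slim
  variables:
    Stage: stage
  before_script:
    - apt update
    - apt install -y curl jq
  script:
    - |
      # --fail makes curl return a non-zero exit code on HTTP errors
      RESPONSE=$(curl --fail --silent "https://$Stage.subdomain.kreuzwerker.de/hello")
      # jq -e returns a non-zero exit code if the comparison is false
      echo "$RESPONSE" | jq -e --arg expected "$CI_COMMIT_SHORT_SHA" '.version == $expected'
```

Either failure ends the job with a non-zero exit code, which stops the pipeline before the promote stage.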

The promote stage

In this stage the previously built and tested image is promoted to the production level. Simple retagging can do the job:

- pull the tested image from the staging environment ECR repository

- retag the image

- push the image to the production environment's ECR repository

The code for the promote stage:

    promote:
      # Official docker image.
      image: docker:latest
      only:
        - master
      stage: promote
      services:
        - docker:dind
      variables:
        ProdImageRepositoryUrl: $AWS_ACCOUNT_NUMBER.dkr.ecr.eu-west-1.amazonaws.com/$PROJECT_NAME-prod-ecr
      before_script:
        - ls -lah
        - ls -lah /
        - ls -lah /builds
        - mount
        - find / -name credentials 2> /dev/null
        - docker login -u AWS --password-stdin $StageImageRepositoryUrl < credentials
      script:
        - docker login -u AWS --password-stdin $StageImageRepositoryUrl < credentials
        - docker pull $StageImageRepositoryUrl:$ImageTag
        - docker tag $StageImageRepositoryUrl:$ImageTag $ProdImageRepositoryUrl:$ImageTag
        - docker push $ProdImageRepositoryUrl:$ImageTag
        - echo done
      artifacts:
        paths:
          - credentials
      resource_group: production

The production stage

Deploy the image to the production ECS cluster. Script and image are the same as in the staging stage, but this time the variables use $prefix=production, and $ImageRepositoryUri points to the production environment's ECR repository.

The code for the production stage:

    production:
      extends: .deploy
      stage: production
      variables:
        Stage: prod
      resource_group: production

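This stage uses the same .deploy section that was defined in the code for the staging stage. You only need it once in the code, so it is only listed in the staging section.

Note that resource_group: production does not pass any context to the scripts; it is gitlab's concurrency control and makes sure that only one job in the production resource group runs at a time. In the code shown here, the production context comes from the variables (Stage: prod).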

The whole pipeline setup I used to run this example project can be found here.

Some quick facts

- The pipeline is triggered when pushes are made to the repository within the gitlab project.

- The whole pipeline is always executed. All steps are run unless conditions defined for a single step (such as only: master) exclude it.

- As usual for CICD pipelines, the next step is only executed after the previous one has succeeded; execution stops at a failing step.

- If nothing changed in the IaC part of the repository, no changes will be made to the infrastructure. Cloudformation simply detects that nothing needs to be done, as everything is up and running already. If it is the first time this pipeline is triggered, the infrastructure will be created as defined. It would work similarly if terraform were used instead.

- Secrets for accessing the AWS infrastructure are taken from variables stored in the gitlab CI configuration.

- In addition to the self-defined variables, there is a great number of predefined variables to use.
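
Whether an unchanged stack really passes through without an error depends on how deploy-infra.sh calls Cloudformation. That script is not shown in this article, so the following is only a sketch under the assumption that it uses aws cloudformation deploy. The job name deployInfraSketch, the stack name, the template file infra.yml and the parameter Stage are hypothetical.

```yaml
# Hypothetical job; stack name, template file and parameter are placeholders.
deployInfraSketch:
  stage: .pre
  image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
  variables:
    Stage: stage
  script:
    - cd cfn
    # creates the stack on the first run and updates it on later runs;
    # the last flag asks the cli to exit with zero when there is nothing to change
    - >
      aws cloudformation deploy
      --region eu-west-1
      --stack-name "example-$Stage-infra"
      --template-file infra.yml
      --parameter-overrides Stage=$Stage
      --capabilities CAPABILITY_NAMED_IAM
      --no-fail-on-empty-changeset
```

With a call like this, the same job can run on every push to master without special handling for the first deployment.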

Conclusion

Following the CICD approach is the logical and right direction to go for developing complex projects, and there are countless tools and services to use. One of the most convenient solutions is gitlab.com. I gave you a glimpse of the possibilities in this article. The integration of everything needed to cover the whole workflow under one roof can be a huge time saver. Everything about gitlab is very well documented and accessible. You can either use the hosted SaaS version of gitlab (hosted on GCP in the USA) or a self-managed installation under your full control, hosted wherever it is most convenient for you.

Should you be interested in using gitlab, you are very welcome to contact kreuzwerker as an official gitlab partner for more information.
