RaspberryPi cloud backup Part 2

Jorge Rodrigues (mrscripting)

Posted on August 22, 2022

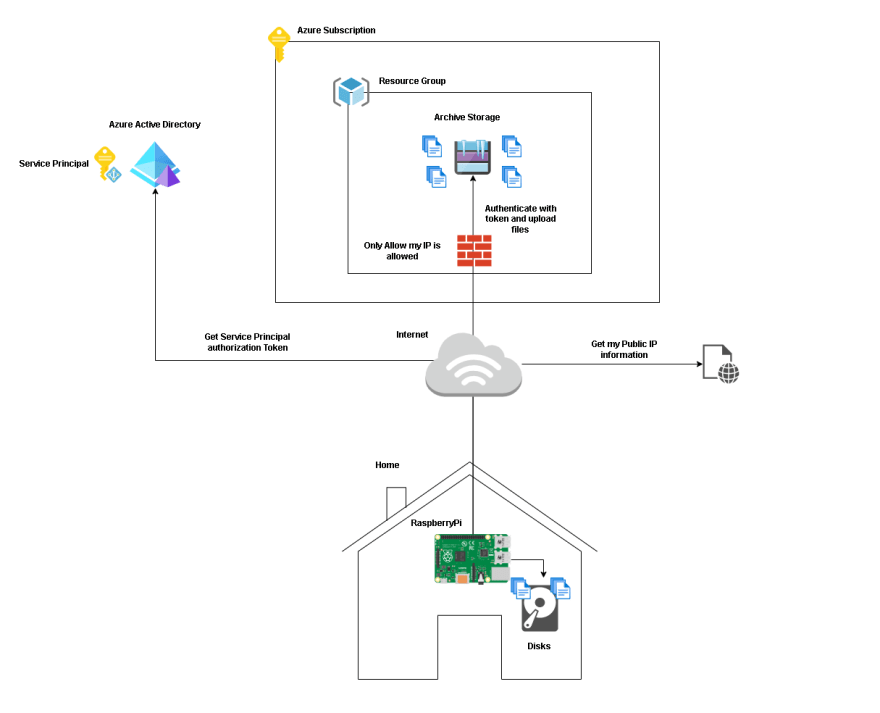

Architecture

In our last post, we compared the costs of different cloud providers and chose Azure to store our data.

Let's now analyze the architectural drawing of our implementation.

First, we need to determine the components that will be used:

- RaspberryPi as data source and computing power for script execution;

- Azure Storage as our target;

- Azure Active Directory to manage authentication;

- A service that tells us our public IP address so we can configure it in the storage account firewall.

Communication will go over the Internet using HTTPS, as we don't want to set up additional infrastructure such as a VPN.
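The last component in the list, the public IP lookup, can be sketched in a few lines of shell. This is only a sketch under assumptions: the article does not name a lookup service, so `https://api.ipify.org` is used here as one possible choice, and `is_ipv4`, `get_public_ip` and `IP_SERVICE_URL` are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# ASSUMPTION: api.ipify.org is the lookup service; the article does not name one.
IP_SERVICE_URL="${IP_SERVICE_URL:-https://api.ipify.org}"

# Return 0 if $1 looks like a dotted-quad IPv4 address, 1 otherwise.
is_ipv4() {
  # Reject empty input, anything that is not digits/dots, and misplaced dots.
  case "$1" in
    ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
  esac
  # Split on dots into the positional parameters.
  old_ifs=$IFS; IFS=.
  set -- $1
  IFS=$old_ifs
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
  return 0
}

# Ask the lookup service for our public address and validate the answer
# before it is used anywhere near a firewall rule.
get_public_ip() {
  ip=$(curl -fsS --max-time 10 "$IP_SERVICE_URL") || return 1
  is_ipv4 "$ip" || { echo "unexpected response: $ip" >&2; return 1; }
  printf '%s\n' "$ip"
}
```

Validating the response matters because whatever the service returns would end up in the storage account firewall; an error page or an empty string should stop the script rather than be passed along.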

Infrastructure

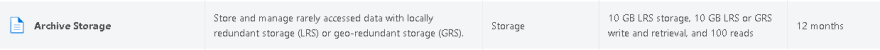

Let's build the infrastructure. We need a pay-as-you-go subscription, which includes $200 of credit for the first 30 days, plus various services that are free for a year. And guess what: archive storage is one of those services, with 10 GB for free.

To set up our Azure components, we use Terraform. Terraform codifies cloud APIs into declarative configuration files that we can use to specify what infrastructure we want. It also works with multiple cloud providers, so you only have to learn one language instead of several. Isn't that cool?

Let's install the requirements:

The Azure CLI (az) will be used to log in to our new account and authorize Terraform.

For the Windows operating system, open a PowerShell window as administrator and paste the command below:

winget install --id Microsoft.AzureCLI

For Debian-based Linux distros:

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

Now let's install Terraform.

On Windows, through Chocolatey:

Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://community.chocolatey.org/install.ps1'))

choco install terraform

For Linux:

sudo apt-get update && sudo apt-get install -y gnupg software-properties-common

wget -O- https://apt.releases.hashicorp.com/gpg | \
gpg --dearmor | \
sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] \
https://apt.releases.hashicorp.com $(lsb_release -cs) main" | \
sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update

sudo apt-get install terraform
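Before moving on, it can be worth confirming that both tools actually ended up on the PATH. The following is a minimal sketch; `check_tools` is a hypothetical helper name, not something shipped with either tool.

```shell
#!/bin/sh
# Print each missing tool to stderr; return non-zero if any is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage after the installation steps:
#   check_tools az terraform && az version && terraform version
```

If the check passes, `az version` and `terraform version` print the installed versions, which is handy to have at hand if something misbehaves later.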

You can now download the two files, main.tf and example.tfvars, from my GitHub.

Place these 2 files into an empty directory and change your current directory in the shell to this new directory.

Log in to Azure using the command:

az login

The output should be something like this:

[
  {
    "cloudName": "AzureCloud",
    "homeTenantId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx",
    "id": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    "isDefault": true,
    "managedByTenants": [],
    "name": "Pay-As-You-Go",
    "state": "Enabled",
    "tenantId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx",
    "user": {
      "name": "mrscriptingfakeemail@outlook.com",
      "type": "user"
    }
  }
]

Copy the id value from this output and replace the value for subscription_id in the example.tfvars file.

Copy the tenantId value from the output as well and replace the value for tenant_id in the example.tfvars file.
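If you would rather not copy and paste, the two replacements can be scripted. This is a sketch under assumptions: `set_tfvar` is a hypothetical helper, and it assumes example.tfvars holds one `name = "value"` assignment per line. The variable name is passed as an argument, so use whatever spelling your copy of the file actually contains.

```shell
#!/bin/sh
# set_tfvar FILE NAME VALUE
# Replace the value of an existing `NAME = "..."` line in FILE.
# ASSUMPTION: one `name = "value"` assignment per line.
set_tfvar() {
  file=$1 name=$2 value=$3
  # Refuse to continue if the variable is not present, so typos are noticed.
  grep -Eq "^[[:space:]]*${name}[[:space:]]*=" "$file" || {
    echo "variable ${name} not found in ${file}" >&2
    return 1
  }
  tmp=$(mktemp) || return 1
  # Keep everything up to and including the "=", replace the rest of the line.
  sed -E "s|^([[:space:]]*${name}[[:space:]]*=[[:space:]]*).*|\\1\"${value}\"|" \
    "$file" > "$tmp" && mv "$tmp" "$file"
}

# Example usage (requires a completed `az login`):
#   sub_id=$(az account show --query id --output tsv)
#   tenant=$(az account show --query tenantId --output tsv)
#   set_tfvar example.tfvars subscription_id "$sub_id"
#   set_tfvar example.tfvars tenant_id "$tenant"
```

The `--query` and `--output tsv` options make the Azure CLI print just the requested field, which avoids parsing the JSON by hand.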

We are now ready to spin up our service principal, resource group and network-restricted storage account.

Let's run Terraform:

terraform init

terraform plan -var-file="example.tfvars"

It should return the following output:

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# azuread_application.appregistration will be created

+ resource "azuread_application" "appregistration" {

+ app_role_ids = (known after apply)

+ application_id = (known after apply)

+ disabled_by_microsoft = (known after apply)

+ display_name = "backupapplication"

+ id = (known after apply)

+ logo_url = (known after apply)

+ oauth2_permission_scope_ids = (known after apply)

+ object_id = (known after apply)

+ owners = [

+ "xxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx",

]

+ prevent_duplicate_names = false

+ publisher_domain = (known after apply)

+ sign_in_audience = "AzureADMyOrg"

+ tags = (known after apply)

+ template_id = (known after apply)

+ feature_tags {

+ custom_single_sign_on = (known after apply)

+ enterprise = (known after apply)

+ gallery = (known after apply)

+ hide = (known after apply)

}

}

# azuread_application_password.appregistrationPassword will be created

+ resource "azuread_application_password" "appregistrationPassword" {

+ application_object_id = (known after apply)

+ display_name = (known after apply)

+ end_date = (known after apply)

+ end_date_relative = "8765h48m"

+ id = (known after apply)

+ key_id = (known after apply)

+ start_date = (known after apply)

+ value = (sensitive value)

}

# azuread_service_principal.backupserviceprinciple will be created

+ resource "azuread_service_principal" "backupserviceprinciple" {

+ account_enabled = true

+ app_role_assignment_required = false

+ app_role_ids = (known after apply)

+ app_roles = (known after apply)

+ application_id = (known after apply)

+ application_tenant_id = (known after apply)

+ display_name = (known after apply)

+ homepage_url = (known after apply)

+ id = (known after apply)

+ logout_url = (known after apply)

+ oauth2_permission_scope_ids = (known after apply)

+ oauth2_permission_scopes = (known after apply)

+ object_id = (known after apply)

+ owners = [

+ "xxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx",

]

+ redirect_uris = (known after apply)

+ saml_metadata_url = (known after apply)

+ service_principal_names = (known after apply)

+ sign_in_audience = (known after apply)

+ tags = (known after apply)

+ type = (known after apply)

+ feature_tags {

+ custom_single_sign_on = (known after apply)

+ enterprise = (known after apply)

+ gallery = (known after apply)

+ hide = (known after apply)

}

+ features {

+ custom_single_sign_on_app = (known after apply)

+ enterprise_application = (known after apply)

+ gallery_application = (known after apply)

+ visible_to_users = (known after apply)

}

}

# azurerm_resource_group.resourcegroup will be created

+ resource "azurerm_resource_group" "resourcegroup" {

+ id = (known after apply)

+ location = "westeurope"

+ name = "homeresourcegroup"

+ tags = {

+ "environment" = "homesetup"

}

}

# azurerm_role_assignment.StorageBlobDataOwner will be created

+ resource "azurerm_role_assignment" "StorageBlobDataOwner" {

+ id = (known after apply)

+ name = (known after apply)

+ principal_id = (known after apply)

+ principal_type = (known after apply)

+ role_definition_id = (known after apply)

+ role_definition_name = "Storage Blob Data Owner"

+ scope = (known after apply)

+ skip_service_principal_aad_check = (known after apply)

}

# azurerm_storage_account.storage will be created

+ resource "azurerm_storage_account" "storage" {

+ access_tier = (known after apply)

+ account_kind = "StorageV2"

+ account_replication_type = "LRS"

+ account_tier = "Standard"

+ allow_nested_items_to_be_public = true

+ cross_tenant_replication_enabled = true

+ default_to_oauth_authentication = false

+ enable_https_traffic_only = true

+ id = (known after apply)

+ infrastructure_encryption_enabled = false

+ is_hns_enabled = false

+ large_file_share_enabled = (known after apply)

+ location = "westeurope"

+ min_tls_version = "TLS1_2"

+ name = "storageraspberrybackup"

+ nfsv3_enabled = false

+ primary_access_key = (sensitive value)

+ primary_blob_connection_string = (sensitive value)

+ primary_blob_endpoint = (known after apply)

+ primary_blob_host = (known after apply)

+ primary_connection_string = (sensitive value)

+ primary_dfs_endpoint = (known after apply)

+ primary_dfs_host = (known after apply)

+ primary_file_endpoint = (known after apply)

+ primary_file_host = (known after apply)

+ primary_location = (known after apply)

+ primary_queue_endpoint = (known after apply)

+ primary_queue_host = (known after apply)

+ primary_table_endpoint = (known after apply)

+ primary_table_host = (known after apply)

+ primary_web_endpoint = (known after apply)

+ primary_web_host = (known after apply)

+ queue_encryption_key_type = "Service"

+ resource_group_name = "homeresourcegroup"

+ secondary_access_key = (sensitive value)

+ secondary_blob_connection_string = (sensitive value)

+ secondary_blob_endpoint = (known after apply)

+ secondary_blob_host = (known after apply)

+ secondary_connection_string = (sensitive value)

+ secondary_dfs_endpoint = (known after apply)

+ secondary_dfs_host = (known after apply)

+ secondary_file_endpoint = (known after apply)

+ secondary_file_host = (known after apply)

+ secondary_location = (known after apply)

+ secondary_queue_endpoint = (known after apply)

+ secondary_queue_host = (known after apply)

+ secondary_table_endpoint = (known after apply)

+ secondary_table_host = (known after apply)

+ secondary_web_endpoint = (known after apply)

+ secondary_web_host = (known after apply)

+ shared_access_key_enabled = true

+ table_encryption_key_type = "Service"

+ tags = {

+ "environment" = "homesetup"

}

+ blob_properties {

+ change_feed_enabled = (known after apply)

+ change_feed_retention_in_days = (known after apply)

+ default_service_version = (known after apply)

+ last_access_time_enabled = (known after apply)

+ versioning_enabled = (known after apply)

+ container_delete_retention_policy {

+ days = (known after apply)

}

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ delete_retention_policy {

+ days = (known after apply)

}

}

+ network_rules {

+ bypass = (known after apply)

+ default_action = "Deny"

+ ip_rules = (known after apply)

+ virtual_network_subnet_ids = (known after apply)

}

+ queue_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ hour_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

+ logging {

+ delete = (known after apply)

+ read = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

+ write = (known after apply)

}

+ minute_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

}

+ routing {

+ choice = (known after apply)

+ publish_internet_endpoints = (known after apply)

+ publish_microsoft_endpoints = (known after apply)

}

+ share_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ retention_policy {

+ days = (known after apply)

}

+ smb {

+ authentication_types = (known after apply)

+ channel_encryption_type = (known after apply)

+ kerberos_ticket_encryption_type = (known after apply)

+ versions = (known after apply)

}

}

}

Plan: 6 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ appId = (known after apply)

+ displayName = "backupapplication"

+ password = (known after apply)

+ tenant = "xxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx"
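The summary line in the plan ("Plan: 6 to add, 0 to change, 0 to destroy.") is the part worth double-checking before applying. As a rough sketch, a script could read it like this; `plan_counts` and `safe_to_apply` are hypothetical helpers, and they assume the summary has exactly the format shown above. Parsing human-readable output is brittle, so treat this as a convenience check and still read the plan yourself.

```shell
#!/bin/sh
# plan_counts: read `terraform plan` output on stdin and print "ADD CHANGE DESTROY".
# ASSUMPTION: the summary line matches the format shown in the plan output above.
plan_counts() {
  sed -nE 's/^Plan: ([0-9]+) to add, ([0-9]+) to change, ([0-9]+) to destroy\.[[:space:]]*$/\1 \2 \3/p'
}

# safe_to_apply: succeed only if a summary line was found and nothing is destroyed.
safe_to_apply() {
  counts=$(plan_counts) || return 1
  [ -n "$counts" ] || return 1
  set -- $counts
  [ "$3" -eq 0 ]
}

# Example usage (-no-color keeps escape codes out of the text being parsed):
#   terraform plan -no-color -var-file="example.tfvars" | safe_to_apply \
#     && echo "nothing will be destroyed"
```

If the summary line is missing or looks different, `safe_to_apply` fails, which errs on the side of caution.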

Everything looks good, so let's proceed with the deployment:

terraform apply -var-file="example.tfvars"

Take note of all the values from the outputs: appId, displayName, tenant and password. (The password output is an exception and should not be used in production or in any pipeline; if you need it there, use Azure Key Vault instead.)

And we are done with our infrastructure. The next step is to build a bash script that will back up our files to Azure, using the outputs from Terraform for authentication.
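Instead of copying the outputs by hand, they can be read back with `terraform output -raw` and stored in a file only the owner can read. This is a sketch for a home setup only, in line with the caveat above: `save_outputs`, the `TERRAFORM` override and the `AZURE_*` key names are all hypothetical choices made for illustration, while the output names (appId, tenant, password) are the ones shown in the plan.

```shell
#!/bin/sh
# save_outputs FILE: write the Terraform outputs to FILE with owner-only access.
# ASSUMPTION: TERRAFORM may be set to another command, mainly for testing.
save_outputs() {
  file=$1
  tf=${TERRAFORM:-terraform}
  # Stop at the first output that cannot be read (e.g. apply has not run yet).
  app_id=$("$tf" output -raw appId) || return 1
  tenant=$("$tf" output -raw tenant) || return 1
  secret=$("$tf" output -raw password) || return 1
  (
    # Create the file without group/other permissions from the start.
    umask 077
    printf 'AZURE_APP_ID=%s\nAZURE_TENANT_ID=%s\nAZURE_CLIENT_SECRET=%s\n' \
      "$app_id" "$tenant" "$secret" > "$file"
  ) || return 1
  # Tighten permissions in case the file already existed with looser ones.
  chmod 600 "$file"
}

# Example usage, run in the directory that holds the Terraform state:
#   save_outputs ./backup-credentials.env
```

Keep the resulting file out of version control and off shared machines; it contains the service principal's secret in plain text.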

So don't miss part 3.
