Data Modeling: Good vs. Perfect

Anaiya

Posted on February 13, 2024

This article is written by Rafael Ochoa of Celebreak.

MongoDB schema is very flexible. This allows for building more accurate models adapted to different use cases. However, flexibility comes with a price. There are more factors to consider and more decisions to make when designing a data model.

There are good resources on the web to help you understand the possibilities from a data modeling point of view. Some of the most comprehensive ones are the patterns and antipatterns series from MongoDB. The former provides a good explanation of design patterns that could be used for different use cases, while the latter summarizes what you need to avoid when making design decisions.

This post is about a time we made a mistake in data modeling, the consequences, and what we learned from it.

The requirement

Our company, CeleBreak, organizes amateur football games. People pick games and pay to play through our mobile app. We hire the fields, provide the bibs and balls, and send an organizer to ensure the best experience for our customers.

In a simplified world, we have two collections, one for the users and one for the games, where games contain an embedded array of subdocuments with references to users. An external payments processor handles the payment flow, which is asynchronous, so the subdocuments have a status field to control the lifecycle of the guests, which starts in PREPARED and can change to CONFIRMED or CANCELED, depending on the result of the payment. They also contain information about whether the user attended the game or not. This is how this subdocument is defined:

const PlayerSchema = new Mongoose.Schema({

status: { type: String, enum: ['PREPARED', 'CONFIRMED', 'CANCELED'], required: true, index: true },

userId: { type: Mongoose.Types.ObjectId },

preparedAt: { type: Date },

confirmedAt: { type: Date },

canceledAt: { type: Date },

arrivedAt: { type: Date },

});

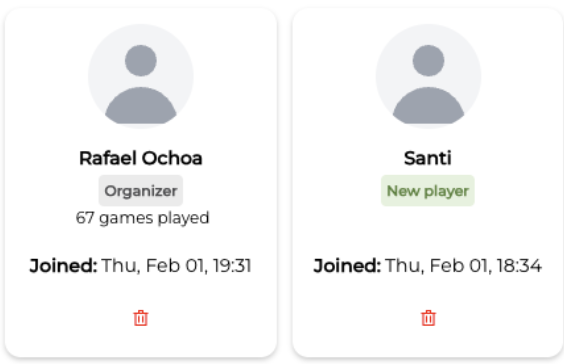

At some point, we received a requirement to display the number of games played for each user in the games’ player list on our operational website. This is how the player cards should look like there:

The analysis

When we designed our data structure, we decided to embed players in the game document. This makes it easy to get the list of players in a given game as we do in the players list view, and also simplifies the game-specific controls required to validate if a user can join a given game, for example, if the game still has available spots.

However, getting the history of games a user has played, as needed to fulfill the mentioned requirements, is more complicated. The information is split into different game documents, so we need to put them together to count them. To do so, we need to run a pipeline that looks up all games each user has participated in, then unwinds the array of guests of all these events to get only those of the user of interest and group them to get the count of games the user has played. An example can be seen on the Mongo Playground.

db.games.aggregate([

{

$match: {

_id: gameId

}

},

{

$unwind: "$players"

},

{

$match: {

"players.status": "CONFIRMED"

}

},

{

$lookup: {

from: "games",

localField: "players.userId",

foreignField: "players.userId",

as: "gamesByUser"

}

},

{

$unwind: "$gamesByUser"

},

{

$unwind: "$gamesByUser.players"

},

{

$match: {

$expr: {

$eq: [

"$gamesByUser.players.userId",

"$players.userId"

]

},

"gamesByUser.players.status": "CONFIRMED"

}

},

{

$group: {

_id: "$gamesByUser.players.userId",

gamesPlayed: {

$sum: 1

}

}

}

])

This pipeline queries the game of interest and then looks up the same collection to get all the games any of these players have participated in. Each game is loaded with all its players, so we need to unwind the array and match only those subdocuments that correspond to the players of the game. This could become very inefficient. Because of that, we decided to update our data structure to find a better way.

This information was going to be presented in the context of users. Also, we considered that the number of games a user could join was not going to grow too fast (a few tens per year, in most cases). Finally, we wanted to reflect real-time changes, like status updates of the players or the games, to support further analysis, if needed.

Considering these conditions, we concluded that denormalizing games information in the user document was a good option. It’d make it simple to get the count of games for each user, and other user-specific analytics — like the date of the first and last games played — by only analyzing the denormalized array in the server. We decided to update the denormalized objects in real-time, to have a more powerful and accurate source of information, if needed.

This is the schema we implemented:

const DenormalizedGameSchema = new Mongoose.Schema({

gameId: { type: Mongoose.Schema.Types.ObjectId, ref: 'Game' },

status: { type: String, enum: ['CONFIRMED', 'CANCELED'] },

players: {

status: { type: String, enum: ['PREPARED', 'CONFIRMED', 'CANCELED'] },

},

startingAt: { type: Date },

});

The consequences

The first issue appeared when we were implementing the logic to keep the denormalized information up to date in real-time. At that moment, we didn’t have a system to react to DB changes, so we polluted critical flows with updates to the denormalized objects. This was difficult to maintain and led us to errors that caused data inconsistencies in some edge cases.

At some point, this code was a part of the flow that added players to games, which is the most critical of our system:

const userQuery = isDataDenormalized

? {

_id: userId,

denormalizedGames: { $elemMatch: { gameId } },

} : {

_id: userId,

};

const userEventToAdd: UserDenormalizedEvent = {

gameId,

status: 'CONFIRMED',

players: {

status: 'CONFIRMED',

},

startingAt,

};

const userUpdate = isDataDenormalized

? {

$set: {

'denormalizedGames.$.players.status': 'CONFIRMED',

},

} : {

$push: {

'denormalizedData.events': userEventToAdd,

},

};

await User.findOneAndUpdate(userQuery, userUpdate);

If a given game had already been denormalized, then we only needed to update the status of the player. Otherwise, we needed to add the full object to the array. This illustrates the complexity this solution implied, considering that similar code was present in other critical flows of our system.

Then, we observed an increment in the loading times of the players' list in some games. We found a few users who had participated in thousands of games, so the denormalized data arrays in their documents had grown to thousands of entries, making them very large. This effect appeared very soon because we ran a migration to denormalize existing data when this change went live.

What we got wrong (i.e., the learnings)

As the flaws of our decision became evident, we reviewed our analysis to find the root causes and moved forward to find a better solution. In this retrospective, we found two big issues.

First, we shouldn’t have assumed that the number of games a user played wouldn’t grow too fast. There is no limit to the number of events a user can join over time, so in real life, there are many cases where this assumption was wrong. To make it worse, these were our power users, who were present in many different games, so the loading time of all those events was affected. It shows that we didn’t have a good understanding of some aspects of our business when we made this change.

Second, we shouldn’t have added real-time updates to the denormalized objects. It wasn’t needed to fulfill the original requirement and we underestimated the complexity of doing it. It made us spend more time on the implementation and it was prone to errors. This is not fully related to data modeling, but it was a consequence of a design decision.

Bonus: our (current) solution

Based on our retrospective analysis, we decided to take a different approach that has been in place since then. We created a new collection to hold the denormalized game joins, with the information required to easily calculate these values without the risk of user documents growing too much. Also, as the analytics didn’t need real-time updates, we decided to only denormalize final data, which means that it’s done when the game finishes. To do so, we have a scheduled function that runs this pipeline to update the denormalized collection daily:

[

{

$match: {

startingAt: {

$gte: <YESTERDAY AT THE START OF THE DAY>,

$lt: <TODAY AT THE START OF THE DAY>

},

status: 'CONFIRMED',

},

},

{

$project: {

_id: 1,

players: 1,

startingAt: 1,

},

},

{

$unwind: '$players',

},

{

$match: {

'players.status': 'CONFIRMED',

},

},

{

$project: {

_id: 0,

eventId: '$_id',

userId: '$players.userId',

startingAt: '$startingAt',

},

},

{

$merge: {

into: 'denorm_gameplayers',

on: ['gameId', 'userId'],

whenNotMatched: 'insert',

whenMatched: 'replace',

},

},

];

It has a filter to add events of the previous day, and the configuration of the $merge stage ensures that if a document already exists for that user joining that game, it’ll be overwritten. This way, we avoid the need for real-time updates on documents, given that we only require analytics for information on games that have already ended.

Compared to our original situation (players' information embedded in the game object), this approach is better because we don’t need to load all the games with all the players each game has to then filter them all but the user of interest. In this case, we can get the documents of interest in one single action.

This approach also tackled the two issues we found in our analysis. First, all the information lives in a different place that can be consulted when needed, and the user document, which is one of the most important ones in our system, stays lean. Second, we isolated the logic to keep the denormalized information up to date according to our requirements, so our critical flows are simpler and less prone to errors.

We’ve also fulfilled new requirements based on this solution, like calculating the number of churned, reactivated, and new players in a given time frame.

Currently, the denormalized collection holds 575K documents in our production system, more than 1K are being added every day, and we haven’t faced a performance bottleneck nor a significant complexity increase due to it, so we consider that this solution has been successful.

We anticipated a few reasonable future use cases without trying to cover too much, reducing the complexity of the solution. We know requirements change and this may be insufficient in the future. At that moment, we’ll keep iterating on it and improving our familiarity with our data to make better decisions.

Conclusion

The biggest learning we had from this experience is that understanding the details of your data and your use case is as important as being aware of the capabilities and tradeoffs of the technology. This is challenging and case-specific, so it takes time and errors can happen. Aiming for perfection may lead to analysis paralysis, so we need to make decisions, and be flexible enough to review them and learn if they happen to be wrong. Finally, an honest and thorough retrospective analysis is what will help to make it better next time.

Posted on February 13, 2024

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.