⚡ When Faster Isn't Better

Randall

Posted on August 6, 2022

Lately I keep hearing about Bun, a new JavaScript runtime meant to compete with Node.js and Deno. It's fast, they're saying, and indeed two of the three bullet points on its homepage are different ways of saying "it's fast".

Fast is sexy. Developers love fast things; everyone does. But this kind of fast isn't likely to do you much good, and we're going to talk about why.

To be clear, I'm not trying to pick on Bun in particular. I actually know very little about it beyond what's on its homepage and what people say about it. It might be great. Regardless, the claims it makes and the way people talk about it make it a great starting point for this discussion, which is about math more than anything else.

"This Kind of Fast"

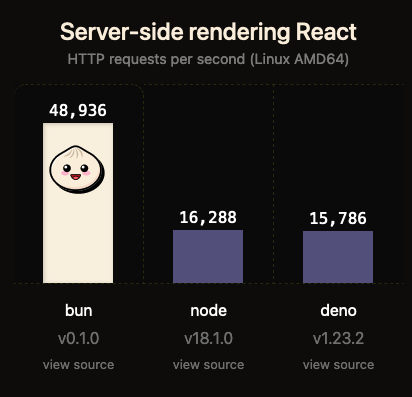

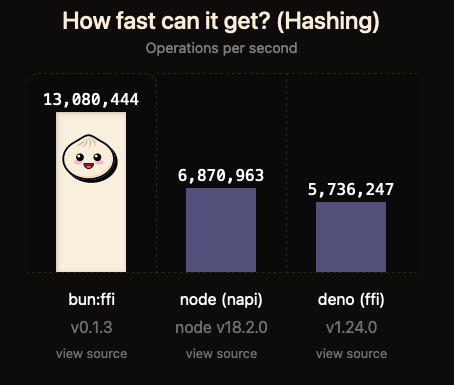

The graphics on Bun's homepage show it performing approximately 3x faster than Node.js on three CPU-bound tasks that are typical of web server applications:

1: Rendering and serving (React) webpages

2: Querying a SQLite database (see Note #1)

3: Hashing a string

The Billion Dollar Server

While the graphics above show the humble Node.js server rendering and serving about 16,000 pages per second, that's for an unrealistically simple case: a page that just says "Hello World" and is presumably served to a client running on the same machine.

The humble Node.js server won't get anywhere near that throughput on real-world workloads. As a rough estimate, let's suppose that a Next.js application running on a single-core Node.js server can serve approximately 100 pages per second. And let's suppose that a hypothetical faster runtime, which we'll call Fast Engine (again, I'm not picking on Bun, I swear!), keeps its 3x advantage here and can serve 300 pages per second.

Another way to look at this is: Fast Engine can serve 300 pages per second running on just one server. If you're using Node.js, you would have to buy an extra two servers to achieve that same throughput.

Here's the thing. Three hundred pages per second is about twenty-six million pages per day. That's nearly eight hundred million per month.
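If you want to check that arithmetic yourself, here is a minimal sketch. It assumes the server runs at full load around the clock and that a month has 30 days, both of which are simplifications of mine rather than anything Bun or Node.js claims. The function names are made up for illustration.

```python
# Convert a sustained per-second throughput into daily and monthly page counts.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
DAYS_PER_MONTH = 30             # assumption: a 30-day month


def pages_per_day(pages_per_second: float) -> float:
    """Pages served in one day at a constant rate."""
    return pages_per_second * SECONDS_PER_DAY


def pages_per_month(pages_per_second: float) -> float:
    """Pages served in one (30-day) month at a constant rate."""
    return pages_per_day(pages_per_second) * DAYS_PER_MONTH


# The two rates are the rough estimates used in the text.
for name, rate in [("Node.js", 100), ("Fast Engine", 300)]:
    print(f"{name}: {pages_per_day(rate):,.0f}/day, {pages_per_month(rate):,.0f}/month")
```

At 300 pages per second this comes out to 25,920,000 pages per day and 777,600,000 per month. Even the "slow" server at 100 pages per second clears a quarter of a billion pages per month.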

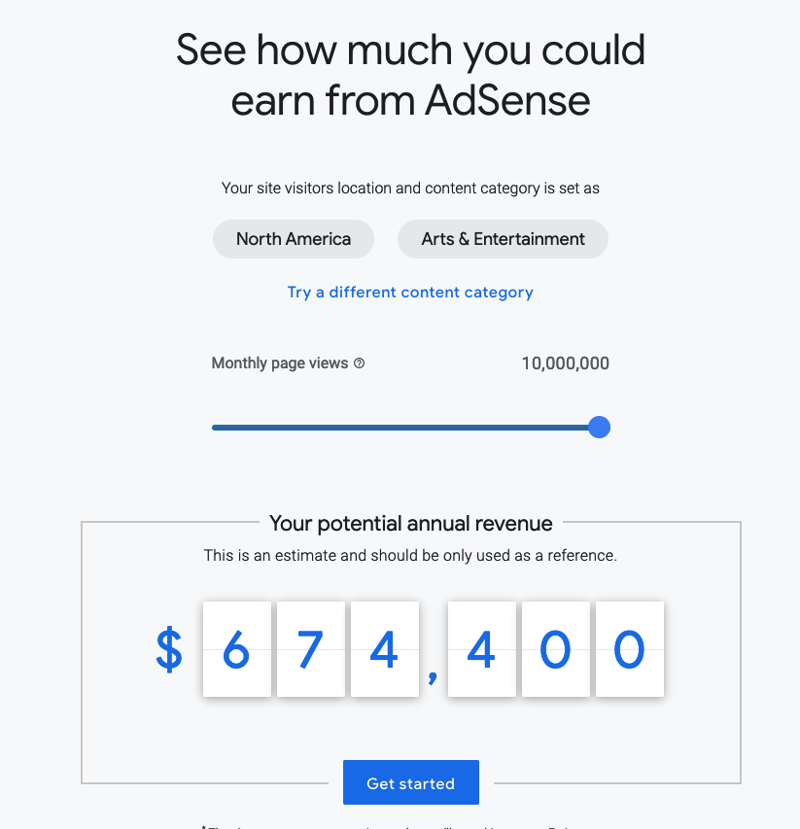

Let's slap Google AdSense on this baby:

Turns out their calculator only goes up to ten million page views per month, which would net us about $700,000 per year.

Meanwhile an extra two single-core servers will cost you around $10-$20 per month.

So Fast Engine is saving you ten bucks per month on your multi-million dollar gravy train. See the problem? You have better things to worry about than ten dollars, such as what kind of private jet you're going to buy.
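To put a number on how lopsided this is, the sketch below compares the yearly cost of the two extra servers with the yearly ad revenue. Both figures come straight from the text, and the revenue figure is the calculator's cap at ten million page views per month, so it understates the real gap. The helper name is hypothetical.

```python
# Figures from the text; the AdSense estimate is capped at 10M page views/month.
ANNUAL_AD_REVENUE_USD = 700_000
EXTRA_SERVER_COST_PER_MONTH_USD = (10, 20)  # low and high estimate for two extra servers


def server_cost_share(monthly_cost_usd: float, annual_revenue_usd: float) -> float:
    """Return the yearly server cost as a fraction of yearly revenue."""
    return (monthly_cost_usd * 12) / annual_revenue_usd


for cost in EXTRA_SERVER_COST_PER_MONTH_USD:
    share = server_cost_share(cost, ANNUAL_AD_REVENUE_USD)
    print(f"${cost}/month is {share:.4%} of ad revenue")
```

Under these assumptions the extra servers cost a few hundredths of one percent of revenue, which is well inside the rounding error of any budget.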

The 93.333 Millisecond Request

Okay, but if Fast Engine's throughput advantages don't really matter, what about its improved response time? Fast Engine finishes rendering the page faster, so visitors see it faster, and are more satisfied, right?

Actually, they won't notice or care. Roughly speaking, if the Node.js server can serve 100 pages per second, that implies that it spends about 10 milliseconds of CPU time on each page render. Meanwhile Fast Engine, serving 300 pages per second, spends about 3.33 milliseconds of CPU time on each page.

But the request duration from the user's browser to the server and back is dominated by the latency between them and the server, and potentially also the latency between the server and other APIs and databases. Consequently, running the same code on Fast Engine might reduce the total request duration from, say, 100ms to 93.333ms. Again, it's an improvement on paper, but no one will notice or care.
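Here is that calculation as a small sketch. It assumes a single core running at full load, so that CPU time per page is simply one second divided by the throughput, and it assumes that everything other than rendering (network latency, database calls) is unaffected by the runtime. The 100ms baseline is the illustrative figure from above, not a measurement.

```python
def cpu_ms_per_page(pages_per_second: float) -> float:
    """CPU time per page in ms, for a single core running at full load."""
    return 1000.0 / pages_per_second


def total_request_ms(baseline_total_ms: float, baseline_rate: float, new_rate: float) -> float:
    """Swap in the new runtime's render time, leaving all other latency unchanged."""
    non_cpu_ms = baseline_total_ms - cpu_ms_per_page(baseline_rate)
    return non_cpu_ms + cpu_ms_per_page(new_rate)


node_ms = 100.0  # assumed total request duration on Node.js
fast_ms = total_request_ms(node_ms, baseline_rate=100, new_rate=300)
print(f"{fast_ms:.3f} ms, {(node_ms - fast_ms) / node_ms:.1%} faster")
```

A 3x faster render turns into a total request that is under 7% faster, because only 10 of the 100 milliseconds were ever CPU time.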

The $10,000 Developer

Let's say you're not convinced, and you decide to go with Fast Engine anyway, and hire a developer to build your server for you.

You might find that this project, which would take, say, three months to build on Node.js, will take four months to build on Fast Engine, due to Fast Engine being less compatible with popular modules, having a smaller community to get help from, etc.

In the US, employing a developer full-time for a month is likely to cost you at least ten thousand US dollars in total expenses. So while you may someday save ten dollars per month in server costs once your service gets popular, and you may have shaved a few milliseconds off the request duration, you paid $10,000 for that and got your product to market a month later. Those are not good trade-offs.
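One way to see how bad the trade is: work out how long the server savings would take to pay back the extra month of development. This is a rough sketch using the figures above. It ignores the cost of launching a month late, so it flatters Fast Engine if anything. The function name is hypothetical.

```python
def break_even_months(extra_dev_cost_usd: float, monthly_savings_usd: float) -> float:
    """Months of server savings needed to recoup a one-time extra development cost."""
    return extra_dev_cost_usd / monthly_savings_usd


EXTRA_DEV_COST_USD = 10_000  # one extra developer-month, from the text

for savings in (10, 20):  # the $10-$20 per month server estimate used earlier
    months = break_even_months(EXTRA_DEV_COST_USD, savings)
    print(f"${savings}/month saved: break even after {months:,.0f} months ({months / 12:.1f} years)")
```

At ten dollars per month the payback period is 1,000 months, or a bit over 83 years. At twenty dollars it is still more than four decades.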

To be clear, it's not necessarily the case that the "faster" engine will take longer to write code for. That might not be true. The point is that before getting starry-eyed about fast technologies, there are other factors to consider, and in most cases you will save a lot more money if you prioritize developer efficiency over software execution efficiency.

The Number-Crunching Desktop

Time to start putting in a few asterisks. So far, we've been talking about web server applications, where the workloads tend to be IO-bound and horizontal scaling is relatively cheap, easy, and infinite.

These arguments don't necessarily apply well to other domains. For example, in a graphics engine, rendering 3x as many frames per second would be a tremendous improvement. That would triple the frame rate!

Absolutely, screaming fast code has an important place in the world. But that place is not JavaScript code running on web servers.

The Snappy Lambda

One final thing I want to discuss is serverless compute platforms such as AWS Lambda and Google Cloud Functions.

If there's anywhere we need fast JavaScript servers, this is it, due to the problem of cold starts.

A cold start occurs when the serverless platform needs to spin up a new instance of your code in order to service increased traffic. This can involve unzipping dependencies, booting the runtime, establishing database sessions, and a variety of other relatively slow operations.

All of this can result in requests to your server sporadically being noticeably slow. If Fast Engine can significantly reduce how long cold starts take, that would be nice.

Still, the potential benefits here are fairly limited, as:

"According to an analysis of production Lambda workloads, cold starts typically occur in under 1% of invocations. The duration of a cold start varies from under 100 ms to over 1 second." - Amazon

Furthermore, a lot of the slow operations involved in cold starts have little to nothing to do with the engine that your code is running on, and would not be improved by a faster engine.
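To get a feel for the size of the effect, here is a back-of-the-envelope sketch built on the quoted figures. Two inputs are pure assumptions of mine for illustration: that half of a cold start is spent in the runtime itself (`runtime_share`), and that Fast Engine's 3x benchmark advantage carries over to that portion (`speedup`). Neither number comes from Amazon or from Bun.

```python
COLD_START_FRACTION = 0.01   # upper bound from the quoted analysis ("under 1%")
COLD_START_MS = (100, 1000)  # quoted range of cold-start durations


def mean_added_latency_ms(cold_fraction: float, cold_start_ms: float) -> float:
    """Average extra latency per invocation attributable to cold starts."""
    return cold_fraction * cold_start_ms


def mean_saving_ms(cold_fraction: float, cold_start_ms: float,
                   runtime_share: float, speedup: float) -> float:
    """Average per-invocation saving if only the runtime's share of a cold start gets faster."""
    runtime_ms = cold_start_ms * runtime_share
    return cold_fraction * (runtime_ms - runtime_ms / speedup)


for duration in COLD_START_MS:
    added = mean_added_latency_ms(COLD_START_FRACTION, duration)
    # runtime_share=0.5 and speedup=3 are hypothetical values, not measurements.
    saved = mean_saving_ms(COLD_START_FRACTION, duration, runtime_share=0.5, speedup=3)
    print(f"{duration} ms cold start: +{added:.1f} ms on average, {saved:.2f} ms saved")
```

Averages hide the fact that the unlucky requests are the ones that feel slow, so this understates the annoyance of an individual cold start. Still, even in the worst case in the quoted range, the average saving under these assumptions is only a few milliseconds per invocation.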

Conclusion

In a vacuum, 3x performance sounds great, and understandably sends a tingle of joy up the spine of any developer. But in truth it's pretty meaningless without a more careful examination of the use case and an understanding of exactly which sections of it are getting faster and which aren't.

Sometimes the slow thing is still plenty fast, and the fast thing isn't as much faster as it first appears. Sometimes it doesn't really matter much either way.

The average JavaScript web server is one such case. That being said, every situation is unique and every tool has its place.

What do you think?

Note #1 - The SQLite benchmark is more CPU-bound than one might imagine. SQLite has an internal cache that lives in the same process as the client, so running the same query over and over reads data from that cache, though its size is limited.
