Krystian Maccs

Posted on May 2, 2023

Introduction

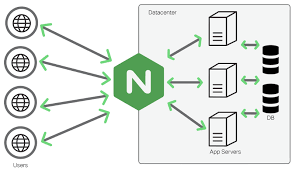

As a backend engineer, it's essential to understand how to configure Nginx, an open-source web server and reverse proxy, to act as a load balancer for multiple servers. In this article, we'll cover the syntax of Nginx configuration files and walk you through the steps for configuring Nginx as a load balancer for three servers.

Nginx Configuration Syntax

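The main Nginx configuration file is usually located at /etc/nginx/nginx.conf, although the exact path depends on how Nginx was installed. It consists of directives, each of which controls one aspect of Nginx's behavior. The syntax resembles that of many programming languages, with blocks, statements, and variables.

A block (also called a context) is a directive followed by a set of statements enclosed in curly braces, such as events { ... } or http { ... }. Each statement consists of a directive name and its arguments, terminated by a semicolon. Variables, whose names start with $ (for example, $remote_addr), hold values that Nginx evaluates while processing a request; most are built in, and custom ones can be defined with the set directive.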
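As a small illustration of these three building blocks, the fragment below is a sketch rather than a recommended setup: the log format name simple is hypothetical, and the set/root pair only exists to show a custom variable being stored and then read back.

```nginx
http {
    # Simple directives: a name, its arguments, and a terminating semicolon.
    # $remote_addr, $request and $status are built-in variables that
    # Nginx fills in for every request.
    log_format simple '$remote_addr "$request" $status';
    access_log /var/log/nginx/access.log simple;

    # Block directive: a name followed by { ... } containing more directives.
    server {
        listen 80;

        # "set" stores a value in a custom variable ...
        set $site_root /usr/share/nginx/html;
        # ... which other directives can then read.
        root $site_root;
    }
}
```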

Here's an example of the basic syntax for an Nginx configuration file:

```nginx
user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log warn;
pid /run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    sendfile on;

    server {
        listen 80;
        server_name example.com;
        root /usr/share/nginx/html;
    }
}
```

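In the above example, the user directive specifies the user under which Nginx's worker processes run. The worker_processes directive specifies the number of worker processes to spawn; the value auto sets it to the number of available CPU cores. The error_log directive specifies the path to the error log file and the minimum severity level to record (warn), and the pid directive specifies the path to the file that stores the process ID of the main Nginx process.

The events block configures Nginx's connection-processing system, which is responsible for handling client connections; here, worker_connections allows each worker process at most 1,024 simultaneous connections, including connections to proxied servers. The http block contains the configuration for Nginx's HTTP server: include pulls in the file-extension-to-MIME-type mappings, default_type sets the type used when no mapping matches, and sendfile on lets the kernel copy files directly to the client socket.

The server block within the http block defines a single virtual server. In this example, it listens on port 80, responds to requests for the domain example.com, and serves files from /usr/share/nginx/html.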

Configuring Nginx as a Load Balancer

To configure Nginx as a load balancer, we need to modify the Nginx configuration file to specify the upstream servers and the load balancing algorithm to use.

Upstream Servers

In Nginx, an upstream is a named group of servers used for load balancing. When Nginx receives a request destined for that group, it passes the request to one of the servers in the group, chosen by the selected load balancing algorithm.

To define the upstream servers, we use the upstream directive within the http block. It creates a named group of servers, which a proxy_pass directive can then reference by name.

Here's an example of the upstream directive:

```nginx
http {
    upstream backend {
        server server1.example.com;
        server server2.example.com;
        server server3.example.com;
    }

    server {
        listen 80;
        server_name example.com;

        location / {
            proxy_pass http://backend;
        }
    }
}
```

In the above example, we have created an upstream group called backend that includes three servers: server1.example.com, server2.example.com, and server3.example.com. The server block listens on port 80 for requests to example.com, and the proxy_pass directive in its location / block forwards every request to the backend group, where Nginx picks one of the three servers using the default round-robin method.
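In practice, each server entry often carries more than a hostname. The sketch below extends the example above under some assumptions: the backends listen on port 8080, and the failure thresholds are illustrative values rather than recommendations. It also forwards the original Host header and client address (X-Real-IP is a widely used convention, not a standard header), because otherwise the backends see every request as coming from Nginx.

```nginx
http {
    upstream backend {
        # Without an explicit port, Nginx connects to port 80.
        # After 3 failed attempts within 30s, a server is considered
        # unavailable for the next 30s (passive health checking).
        server server1.example.com:8080 max_fails=3 fail_timeout=30s;
        server server2.example.com:8080 max_fails=3 fail_timeout=30s;
        # Receives requests only when the primary servers are unavailable.
        server server3.example.com:8080 backup;
    }

    server {
        listen 80;
        server_name example.com;

        location / {
            proxy_pass http://backend;
            # Pass the original Host header and client address to the backends.
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }
}
```

With the backup flag, only two servers share normal traffic. Note that backup cannot be combined with the hash, ip_hash, or random methods.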

Load Balancing Algorithms

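Nginx supports several load balancing algorithms for distributing requests across the upstream servers. Apart from round-robin, each is enabled with its own directive inside the upstream block:

Round-robin: The default algorithm, used when no other is specified. It passes requests to the upstream servers in turn, taking server weights into account.

Least-connected (least_conn): Routes each request to the server with the fewest active connections, which spreads the workload more evenly when some requests take longer to complete than others.

IP-hash (ip_hash): Chooses the server from a hash of the client's IP address, so requests from the same client are consistently sent to the same server as long as it is available. This is useful for session persistence or cache locality.

Random (random): Selects a random server from the upstream group for each request. With the two parameter, Nginx picks two servers at random and then chooses between them using another method, least_conn by default.

Weighted Round Robin: Not a separate directive, but round-robin with a weight parameter on each server line. Servers with higher weights receive a proportionally larger share of requests; weights are also honored by other methods such as least_conn and ip_hash.

Least Time (least_time): Routes requests to the server with the lowest average response time and the fewest active connections. This method is available only in the commercial NGINX Plus.

Generic Hash (hash): Routes requests based on a user-defined key, which can be text, variables such as $request_uri, or a combination of both. The optional consistent parameter enables consistent hashing, so that adding or removing a server remaps only a small share of keys.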

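To select an algorithm, add its directive inside the upstream block. Only one method can be in effect for a given upstream group; if none is specified, weighted round-robin is used. Per-server weights are set with the weight parameter of the server directive:

```nginx
upstream backend {
    # Pick exactly ONE balancing method for this group.
    # With no method directive, (weighted) round-robin is used.
    ip_hash;
    # least_conn;
    # random;
    # hash $request_uri consistent;
    # least_time header;   # NGINX Plus only

    # weight=3: server1 gets a proportionally larger share of requests
    server server1.example.com weight=3;
    server server2.example.com;
    server server3.example.com;
}
```

In the above example, server1.example.com is assigned a weight of 3, so it receives a larger share of requests than the other two servers, and ip_hash makes requests from the same client IP address go to the same server. The commented-out lines show how the other methods are selected; to switch, uncomment one of them and remove ip_hash. The least_time method takes a header or last_byte parameter to choose which response time to measure (time to the response header or to the full response), and it requires NGINX Plus.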
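The generic hash and random methods take extra parameters that the list above only touches on. As a sketch, assuming the same three backends, the first group below keys on the request URI so that a given URL is always served by the same server (handy when each server keeps its own cache), while the second picks two servers at random and sends the request to whichever has fewer active connections. The group names backend_by_uri and backend_random and the /static/ location are hypothetical, and random requires Nginx 1.15.1 or later.

```nginx
http {
    # Generic hash: the key can be any mix of text and variables.
    # "consistent" enables ketama consistent hashing, so adding or
    # removing a server remaps only a small share of keys.
    upstream backend_by_uri {
        hash $request_uri consistent;
        server server1.example.com;
        server server2.example.com;
        server server3.example.com;
    }

    # Random with "two": pick two servers at random, then send the
    # request to the one with fewer active connections (least_conn).
    upstream backend_random {
        random two least_conn;
        server server1.example.com;
        server server2.example.com;
        server server3.example.com;
    }

    server {
        listen 80;
        server_name example.com;

        location /static/ {
            proxy_pass http://backend_by_uri;
        }

        location / {
            proxy_pass http://backend_random;
        }
    }
}
```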

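Configuring Nginx for a Single Port

Nothing requires the servers in an upstream group to use different ports. When the backends run on separate hosts, they can all listen on the same port, while Nginx accepts client traffic on a single public port of its own. This can be useful when the number of open ports is limited or when all servers must sit behind a single firewall opening.

To set this up, give every server entry in the upstream group the same port and let one listen directive accept client connections. For non-HTTP (TCP) traffic, the stream module supports the same pattern. Here's an example:

```nginx
http {
    upstream backend {
        # All three backends listen on the same port, 8080, on different hosts
        server server1.example.com:8080;
        server server2.example.com:8080;
        server server3.example.com:8080;
    }

    server {
        # Clients only ever connect to port 80 on the load balancer
        listen 80;
        server_name example.com;

        location / {
            proxy_pass http://backend;
        }
    }
}

# TCP load balancing: the stream block belongs at the top level of
# nginx.conf, next to http, not inside it.
stream {
    upstream tcp_backend {
        # In stream upstreams, the port is mandatory
        server server1.example.com:8080;
        server server2.example.com:8080;
        server server3.example.com:8080;
    }

    server {
        # Must not reuse a port that the http block already listens on
        listen 8000;
        proxy_pass tcp_backend;
    }
}
```

In the above example, the http block balances HTTP traffic: all three backends listen on port 8080, while clients only connect to port 80 on the load balancer. The stream block applies the same idea to raw TCP connections. It works at the transport layer, so it has no location blocks, and its proxy_pass directive sits directly in the server block and takes the upstream name (here tcp_backend, named to keep it distinct from the HTTP group) rather than a URL such as http://backend. The stream block requires Nginx to be built with the stream module (the --with-stream configure option), and its listen port (8000, an arbitrary choice) must differ from the ports used by the http block, because both cannot listen on the same address and port.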
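The situation is different when all backends run on the same machine: ordinarily, two processes cannot listen on the same address and port, so each instance needs its own port. As a sketch, assuming three hypothetical application instances on the load balancer host itself and a hypothetical group name local_backend, the upstream group lists distinct ports while clients still use only port 80:

```nginx
http {
    upstream local_backend {
        # Three instances on one host, so each needs its own port
        server 127.0.0.1:8081;
        server 127.0.0.1:8082;
        server 127.0.0.1:8083;
    }

    server {
        # Clients still see a single public port
        listen 80;
        server_name example.com;

        location / {
            proxy_pass http://local_backend;
        }
    }
}
```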

Conclusion

In conclusion, Nginx is a powerful tool that can be used as a load balancer to distribute incoming requests across multiple servers. With its support for various load balancing algorithms, Nginx provides flexibility to optimize the distribution of incoming requests and ensure the availability and scalability of your web application.

In this article, we have covered the basic syntax of Nginx configuration files, how to configure Nginx as a load balancer using upstream servers, and the load balancing algorithms Nginx supports. We have also discussed how to run all upstream servers on a single port behind one public Nginx port.

With this knowledge, you can confidently implement Nginx as a load balancer and efficiently manage your application's traffic.

To further enhance your understanding, you may explore additional resources and the official Nginx documentation on load balancing techniques. Happy load balancing!
