I spent $15 in DALL·E 2 credits creating this AI image, and here’s what I learned

Joy

Posted on August 19, 2022

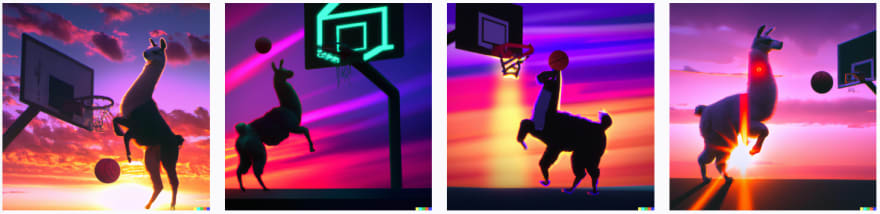

Yes, that’s a llama dunking a basketball. A summary of the process, limitations, and lessons learned while experimenting with the closed Beta version of DALL·E 2.

This article was originally published by me on Medium.

I’ve been dying to try DALL·E 2 ever since I first saw this artificially generated image of a “Shiba Inu Bento Box”.

Wow — now that’s disruptive technology.

For those of you unfamiliar, DALL·E 2 is a system created by OpenAI that can generate original images from text.

It’s currently in closed Beta: I signed up for the waitlist in early May and got access at the end of July. During the Beta, users receive 50 free credits in the first month and 15 free credits every month after that. Each use costs 1 credit and produces 3–4 images. You can also purchase 115 credits for US$15.
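To put those numbers in perspective, here is a rough cost sketch in Python. It only uses the prices quoted above (115 credits for US$15, 3–4 images per credit) and assumes every credit is bought at the purchased-pack rate, since free credits cost nothing. The constant and function names are my own, chosen for illustration.

```python
# Back-of-the-envelope cost arithmetic for the DALL·E 2 beta pricing above.
# Assumption: every credit is valued at the purchased-pack rate
# (115 credits for US$15); free monthly credits are ignored.
PACK_PRICE_USD = 15.0
PACK_CREDITS = 115


def cost_per_credit() -> float:
    """Dollar value of one credit at the purchased-pack rate."""
    return PACK_PRICE_USD / PACK_CREDITS


def cost_of(credits: int) -> float:
    """Dollar cost of spending `credits` at the purchased-pack rate."""
    return credits * cost_per_credit()


def cost_per_image_range(min_images: int = 3, max_images: int = 4) -> tuple[float, float]:
    """(cheapest, most expensive) cost per image when one credit yields 3-4 images."""
    per_credit = cost_per_credit()
    return per_credit / max_images, per_credit / min_images


if __name__ == "__main__":
    print(f"per credit: ${cost_per_credit():.3f}")
    low, high = cost_per_image_range()
    print(f"per image: ${low:.3f}-${high:.3f}")
    print(f"100 credits: ${cost_of(100):.2f}")
```

Running it prints about $0.130 per credit, $0.033–$0.043 per image, and $13.04 for 100 credits, which lines up with the ~US$13 figure at the end of this article.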

P.S. If you can’t wait to try it, give DALL·E mini a go for free. However, the quality of its images is generally poorer (giving rise to a host of DALL·E memes), and it takes about 60 seconds per prompt (DALL·E 2, in comparison, takes only 5 seconds or so).

You’ve probably seen various cherry-picked images online showing what DALL·E 2 is capable of (given the right creative prompt). In this article, I share a candid walkthrough of what it takes to create a usable image from scratch for the subject matter “a llama playing basketball”. You might find it useful if you’re thinking of trying out DALL·E 2 yourself, or if you’re just interested in understanding what it’s capable of.

The starting point

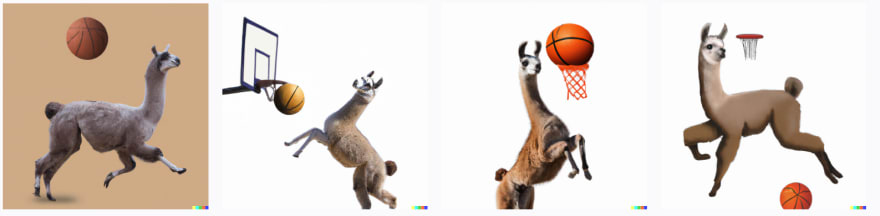

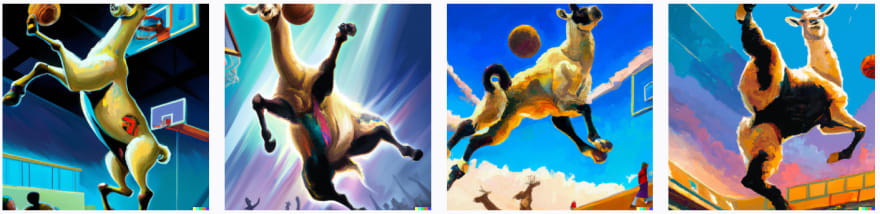

There’s both an art and a science to knowing what prompt to feed DALL·E 2. To illustrate, here are the results for “llama playing basketball”:

Why is DALL·E 2 inclined to generate cartoon images for this prompt? I assume it has something to do with a lack of real photos of llamas playing basketball in its training data.

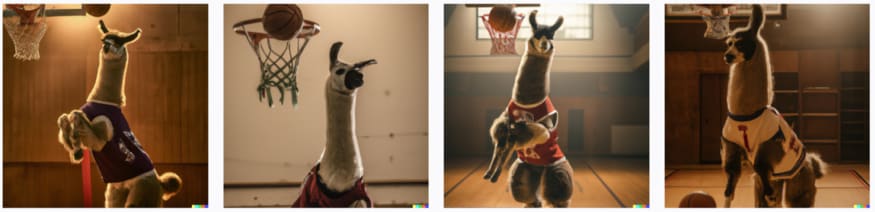

I attempted to go a step further by adding the key term ‘realistic photo of’:

That llama’s looking more photorealistic, but the whole image is starting to look like a botched Photoshop job. In this case, DALL·E 2 clearly needed some hand-holding to create a cohesive scene.

Prompt engineering, aka the art of specifying exactly what you want

In the context of DALL·E, prompt engineering refers to the process of designing prompts to give you the desired results.

The DALL·E 2 Prompt Book is a fantastic resource for this. It contains a detailed list of inspirations for prompts using keywords from photography and art.

Why is something like this necessary? Because getting a usable output from DALL·E 2 is finicky (especially when you’re not sure what DALL·E 2 is capable of). So much so that a new startup is creating a marketplace selling prompts for $1.99 to save you the time and money of coming up with your own.

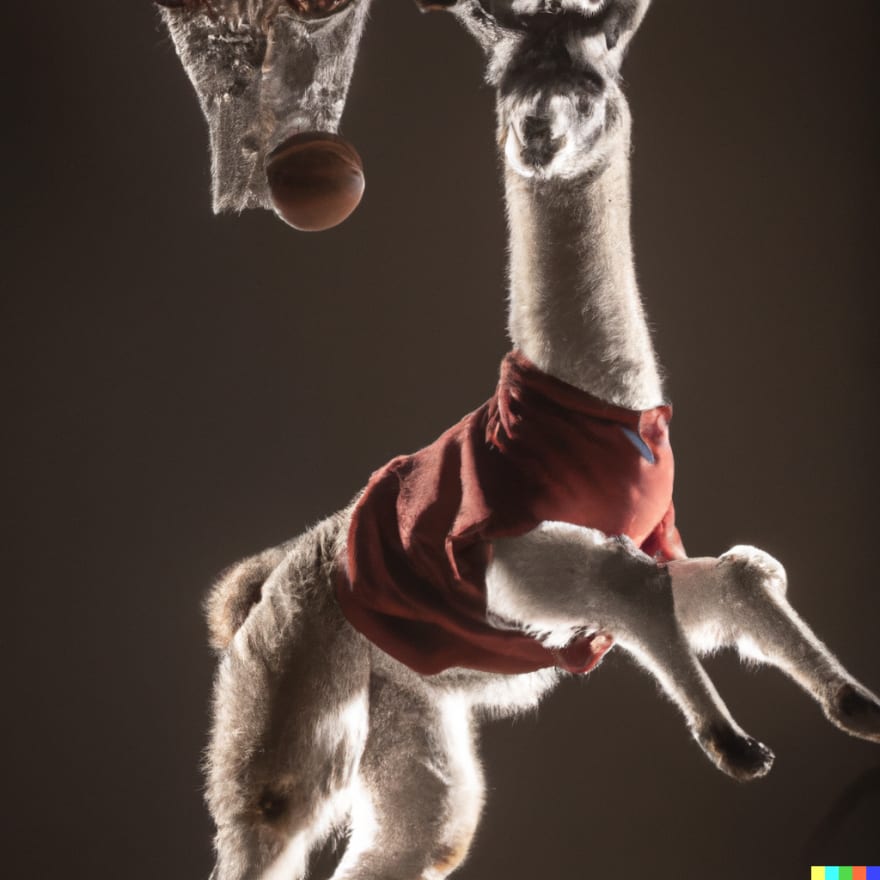

My personal favorite find is “dramatic backlighting”:

It’s important to tell DALL·E 2 exactly what you want. Apparently, it’s not obvious from the context that this llama should be dressed for the occasion. However, when ‘llama wearing a jersey’ is specified, DALL·E 2 does a great job of realizing this fantasy scene:

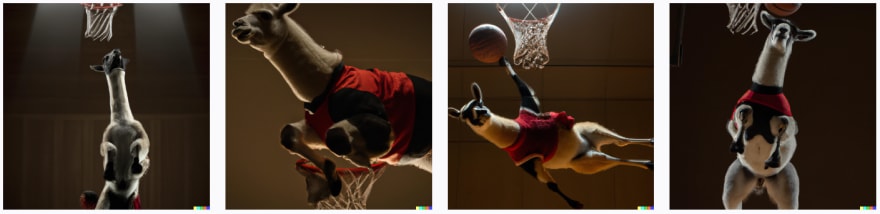

It doesn’t stop there. To add some drama to the image and really get this llama flying, I needed to specify phrases such as ‘dunking a basketball’, ‘action shot of…’, or my personal favorite: “…llama in a jersey dunking a basketball like Michael Jordan”:

Tip: DALL·E 2 only stores your previous 50 generations in the history tab. Make sure to save your favorite images as you go.

You might have noticed: DALL·E 2 isn’t great at composition.

You’d think that from the context of ‘dunking a basketball,’ it’d be obvious what the relative positions of the llama, ball, and hoop should be. More often than not, the llama dunks the wrong way, or the ball is positioned so that the llama has no real hope of making the shot. Though all the elements of the prompt are there, DALL·E 2 doesn’t truly ‘understand’ the relationship between them. This article covers the topic in more depth.

Another artifact of DALL·E 2 not really ‘understanding’ the scene is the occasional mix-up in textures. In the image below, the net is made out of fur (a morbid scene once you think about it):

DALL·E 2 struggles to generate realistic faces

According to some sources, this may have been a deliberate attempt to avoid generating deepfakes. I thought that would only apply to human subjects, but apparently, it applies to llamas too.

Some of the results were downright creepy.

Some other limitations of DALL·E 2

Here are some other minor issues I experienced:

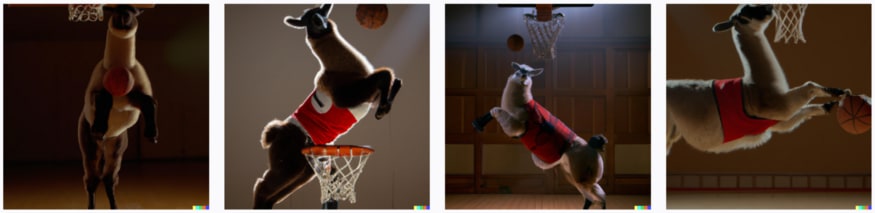

Angles and shots are interpreted loosely

No matter how many variants of ‘in the distance’ or ‘extreme long shot’ I used, it was difficult to find images where the entire llama fit within the frame.

In some cases, the framing was ignored entirely:

DALL·E 2 can’t spell

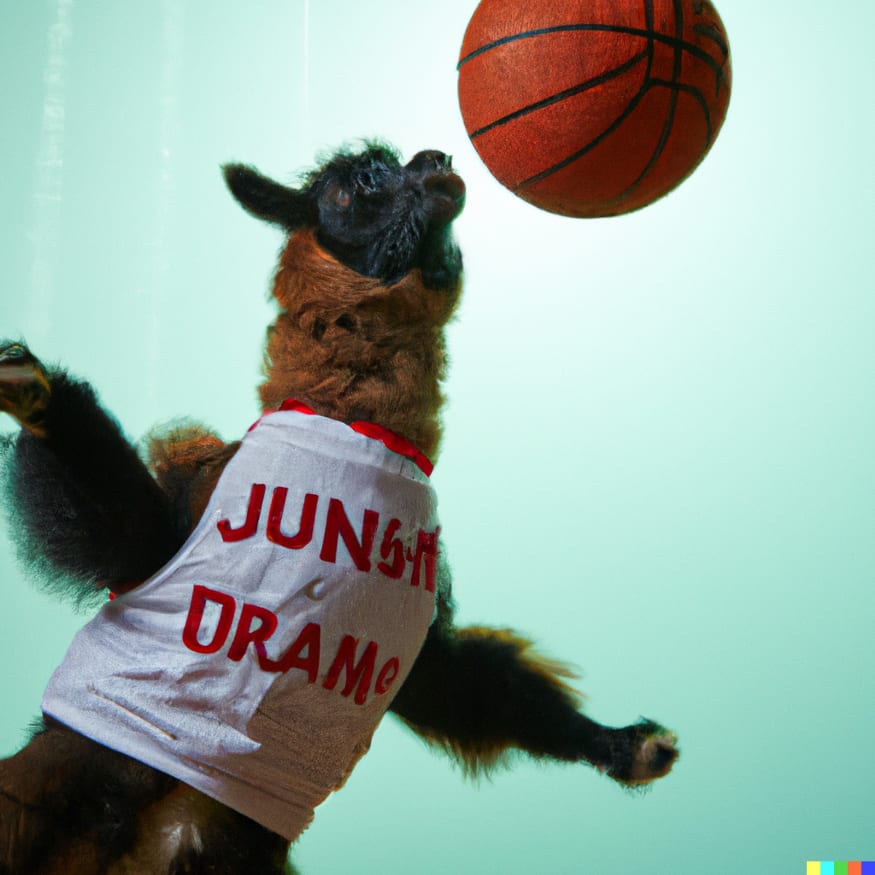

I guess this shouldn’t be too surprising given that DALL·E 2 struggles to ‘understand’ the relationship between components. It is, however, capable of attempting some fully formed letters in the right context:

DALL·E 2 can be temperamental with complex or poorly worded prompts

Occasionally, adding keywords or phrasing the prompt in certain ways led to results that were completely different from what was expected.

In this case, the real subject of the prompt (llama wearing a jersey) was completely ignored:

Even adding the term ‘fluffy’ led to dramatically worse performance and multiple cases where it looked like DALL·E 2 just… broke:

In working with DALL·E 2, it’s important to be specific about what you want without over-stuffing or adding redundant words.

DALL·E 2’s ability to transfer styles is impressive

You need to try this!

Once you have your keyword subject matter, you can generate the image in an impressive number of other art styles.

‘Abstract painting of…’

‘Vaporwave’

‘Digital art’

‘Screenshots from the Miyazaki anime movie’
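Because the subject stays fixed and only the style keyword changes, these variants are easy to generate systematically. Below is a minimal Python sketch that fills one fixed subject into a list of style templates. The helper name, the exact templates, and where each style keyword sits in the prompt are my own assumptions, and the code only produces text to paste into DALL·E 2; it does not call any API.

```python
# Hypothetical helper: build style variants of one subject prompt.
# The templates loosely mirror the styles above; keyword placement is an
# assumption. Output is plain text for pasting into DALL·E 2 (no API calls).

SUBJECT = "a llama in a jersey dunking a basketball"

# Each template has exactly one {subject} slot.
STYLE_TEMPLATES = [
    "abstract painting of {subject}",
    "{subject}, vaporwave",
    "{subject}, digital art",
    "screenshots from the Miyazaki anime movie, {subject}",
]


def style_variants(subject: str, templates: list[str]) -> list[str]:
    """Return one prompt per style template, keeping the subject fixed."""
    prompts = []
    for template in templates:
        # Refuse templates that would silently drop the subject.
        if "{subject}" not in template:
            raise ValueError(f"template is missing {{subject}}: {template!r}")
        prompts.append(template.format(subject=subject))
    return prompts


if __name__ == "__main__":
    for prompt in style_variants(SUBJECT, STYLE_TEMPLATES):
        print(prompt)
```

Keeping the subject wording identical across styles makes it easier to tell whether a bad result comes from the style keyword or from the subject description itself.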

Final thoughts

After over 100 credits (~US$13) and a lot of trial-and-error, here’s my final image:

The image isn’t perfect, but DALL·E 2 managed to fulfill about 80% of the brief.

Most of the credits went towards trying to get the right combination of style, faces, and composition to work together.

According to OpenAI’s DALL·E announcement,

“…users get full usage rights to commercialize the images they create with DALL·E, including the right to reprint, sell, and merchandise.”

Expect many users to play fast and loose with these rules.

As a content creator, I expect DALL·E 2 to be most useful for creating simple illustrations, photos, and graphics for blogs and websites. I’ll be using it as an alternative to Unsplash to create blog cover images that won’t look the same as everyone else’s.

If you’re about to try out DALL·E 2 yourself, here’s a tl;dr of tips before you start:

- Check out the DALL·E 2 Prompt Book (and the fan-made Prompt Engineering Sheet)!

- Be prepared to do some trial-and-error to get what you want. Fifteen free credits might sound like a lot, but it really isn’t. Expect to use at least 15 credits to generate a usable image. DALL·E 2 is not cheap.

- Don’t forget to save your favorite images as you go.

Thanks for reading! I’d love to hear your experience with DALL·E 2 and welcome any thoughts or feedback.

If you enjoyed reading this, here are some articles by other writers you might like as well:

- How I used DALL-E 2 to Generate The Logo for OctoSQL by Jacob Martins

- How I Used AI to Reimagine 10 Famous Landscape Paintings by Alberto Romero

- What DALL-E 2 can and cannot do by Swimmer963
