Runtime Procedural Character Generation

Göran Syberg Falguera

Posted on May 11, 2022

Or storing 18 quintillion characters in a 64-bit integer.

Introduction

The purpose of this article is to give a high-level survey of a field that I believe will become increasingly important in the future. I aim to not only describe the area but also spark your imagination about things that can and will be done with this technology.

Increasing numbers of game developers are using procedural tools to create content for their games. A lot has been done for procedural generation of environments, levels, game modes, etc. Even though procedural character generation has been done, it has not been as popular a use case as, for instance, generating thousands of game levels for replayability or generating vibrant environments. I attribute the relative lack of progress in this area to game designs not needing it. For one thing, it turns out people get more attached to characters that they get to create themselves than to characters that are generated for them. Even for NPC creation, the created characters usually have a place in a story, and any arbitrary character won't be good enough. Maybe a specific gender or geographical heritage is needed for the story.

There is, however, a group of games that will not only gain from this technology but require it, and I am working on one of them. After reading this, I think you will have the foundation to not only accept my proposed use cases but maybe think of a few new ones yourself. Maybe you want to come work on this at GOALS?

Asset Generation

Asset generation, as opposed to asset creation, alludes to some kind of automated process. The point, of course, being that you can create a lot more content faster. Now, you could go about this in a couple of different ways depending on what you need. For instance, you could generate characters based on random inputs and get random outputs each time. This would be referred to as stochastic generation of content. Or you could have a set of parameters that you have decided on and generate, say, 100 different characters based on 100 different predefined values of characteristic X. Now, in computer science "randomness" is a tricky thing. For "true" randomness you have to look at some non-deterministic physical aspect outside of the computer, for instance the radioactive decay of an atom. This is a bit overkill for our current topic, and most programming languages supply some kind of pseudo random number generator. The funny thing with these "random number generators" is that they are fully determined by their "seed" and hence not "random" at all. Put differently, you can generate the same "random" number every time if you want to.

A seed is something you pass into the generator function to make it differ from the previous time you ran the function. The reason we need a seed is that a computer can't make stuff up. Computers are built specifically to not be random and to reproduce the exact same result given the same input.

So, if you pass the same seed into the same random number generator, you end up with the exact same "random" number every time you do it. A "pseudo random number generator" is actually a "procedural number generator"! How fun. More about this in the "Procedural creation of content" section. The point to take with you from this section is that a set of outcomes can be generated in a deterministic, and hence reproducible, way (same result every time) or in a stochastic way that is (most often) unique.
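To make the difference between the two modes concrete, here is a minimal sketch using Python's standard `random` module. The function names `seeded_roll` and `stochastic_roll` are made up for this illustration; `random.Random` and `random.SystemRandom` are the standard library classes.

```python
import random


def seeded_roll(seed: int, count: int = 3) -> list[float]:
    """Deterministic: the same seed always yields the same sequence."""
    rng = random.Random(seed)  # private generator, leaves global state alone
    return [rng.random() for _ in range(count)]


def stochastic_roll(count: int = 3) -> list[float]:
    """Stochastic: draws from the operating system's entropy source, no seed."""
    rng = random.SystemRandom()
    return [rng.random() for _ in range(count)]


first = seeded_roll(42)
second = seeded_roll(42)
other = seeded_roll(43)

print(first == second)    # the same seed reproduces the same "random" numbers
print(first == other)     # a different seed gives a different sequence
print(stochastic_roll())  # differs from run to run
```

Note that the reproducibility only holds for the same generator algorithm. Swap the generator and the same seed means something else entirely.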

Now, if the seed is a 64-bit unsigned integer (unsigned means no negative numbers), which is the most common integer size in modern computers, there are 2^64, or about 18 quintillion (18,446,744,073,709,551,616), different seeds to choose from, ranging from 0 to 18,446,744,073,709,551,615. And if a character, or other procedural content, was fully generated from this seed, it would mean we would have up to 18 quintillion different and fully deterministic (we could recreate them anytime we want) versions of that asset.
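As a rough sketch of what "fully generated from this seed" could look like, the snippet below checks the arithmetic and expands one 64-bit seed into a stream of 64-bit values. It uses SplitMix64, a small public-domain generator picked here only because it is easy to reimplement identically in any language; nothing in this article says this is the generator GOALS uses. The helper name `expand_seed` is hypothetical.

```python
MASK64 = (1 << 64) - 1  # largest unsigned 64-bit value
SEED_COUNT = 1 << 64    # number of distinct 64-bit seeds


def splitmix64(state: int) -> tuple[int, int]:
    """Advance the state once and return (new_state, 64-bit output)."""
    state = (state + 0x9E3779B97F4A7C15) & MASK64
    z = state
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
    return state, z ^ (z >> 31)


def expand_seed(seed: int, count: int) -> list[int]:
    """Turn one seed into `count` reproducible 64-bit values."""
    if not 0 <= seed <= MASK64:
        raise ValueError("seed must fit in an unsigned 64-bit integer")
    values = []
    state = seed
    for _ in range(count):
        state, output = splitmix64(state)
        values.append(output)
    return values


print(f"{SEED_COUNT:,} possible seeds")
print(expand_seed(2022, 4))  # same four values on every run
```

Each value in the stream can then drive one decision in the character (a bone length, a nose size, a skin tone), so the seed is the only thing that needs to be stored.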

This is where my head exploded the first time I thought about this. The possibilities are just staggering. Keep this in mind for now and let's talk a bit about how these procedural algorithms could work.

Procedural creation of content

Imagine a game character in a world with screens only supporting 12x12 pixels:

To create this character in the traditional (manual) way, we would have to manually choose the color of each pixel. This gives us a lot of control but if we want to create another character, we would have to do it again. Hence, this process scales really badly.

What if, instead of choosing the color of each pixel, we created a little program that did something like this for the head of the character:

Aligned to the top of the center of the body, draw a symmetrical elliptic shape with the parameters X and Y while X and Y vary between Z and I.

Obviously the "body" would have to be similarly defined ahead of this but by varying X and Y between Z and I we can create as much variation as the resolution permits.
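The head rule above can be sketched as a small program. Everything specific here is an assumption made for the illustration: the fixed rectangular body, the radius limits (standing in for Z and I), and the function name `draw_character`. The two radii play the role of X and Y.

```python
import random

SIZE = 12      # the screen is 12x12 pixels
BODY_TOP = 6   # first row of the (fixed, hypothetical) body


def draw_character(seed: int, min_radius: float = 1.5, max_radius: float = 3.0) -> list[str]:
    """Return the character as 12 strings of 12 characters each."""
    rng = random.Random(seed)
    radius_x = rng.uniform(min_radius, max_radius)  # "X" in the rule above
    radius_y = rng.uniform(min_radius, max_radius)  # "Y" in the rule above

    grid = [["." for _ in range(SIZE)] for _ in range(SIZE)]

    # Body: a fixed rectangle, defined ahead of the head.
    for row in range(BODY_TOP, SIZE):
        for col in range(4, 8):
            grid[row][col] = "#"

    # Head: an ellipse centred on the body's vertical axis, resting on its top.
    centre_x = SIZE / 2
    centre_y = BODY_TOP - radius_y
    for row in range(BODY_TOP):
        for col in range(SIZE):
            dx = (col + 0.5 - centre_x) / radius_x
            dy = (row + 0.5 - centre_y) / radius_y
            if dx * dx + dy * dy <= 1.0:
                grid[row][col] = "O"

    return ["".join(row) for row in grid]


rows = draw_character(seed=7)
print("\n".join(rows))
```

Changing the seed changes the head shape, while the limits keep every result inside the 12x12 screen.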

If we specify the values we want to vary in a list, like for the colors in the image below, we can have quite a lot of control of the process. Or we just vary the RGB-values pseudo randomly and get some unexpected color. Varying randomly can be risky though and may require more rigorous testing.
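A minimal sketch of the two options, a curated list versus free RGB values. The palette entries and function names are invented for the example.

```python
import random

# Hypothetical hand-picked colours (R, G, B). Every outcome is known in advance.
PALETTE = [
    (200, 60, 60),
    (60, 120, 200),
    (240, 200, 80),
    (90, 170, 110),
]


def palette_colour(seed: int) -> tuple[int, int, int]:
    """Controlled variation: only colours from the list can come out."""
    return random.Random(seed).choice(PALETTE)


def random_colour(seed: int) -> tuple[int, int, int]:
    """Free variation: any of the 256^3 RGB values can come out."""
    rng = random.Random(seed)
    return (rng.randrange(256), rng.randrange(256), rng.randrange(256))


print(palette_colour(5), random_colour(5))
```

The list can be reviewed colour by colour. The free version cannot, which is where the extra testing comes in.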

Game Characters

Now it's time to talk about some 3D game characters and what they are made up of so that we can figure out how to procedurally create them.

Game characters are generally made up of the skeleton, the model mesh and the skin and textures. Arguably cloth could be seen as a fourth part in modern game engines. Traditionally cloth and clothing were part of the model mesh.

Skeleton or rig

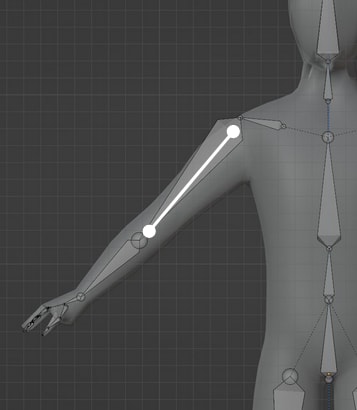

The skeleton in game characters is not used to keep the body upright (as in real-life characters); game characters will not fall into a pile of skin without it. The reason to have a skeleton is to be able to reason about the movement of the limbs and the body in relation to each other.

The definition of a skeleton is commonly just a list or hierarchy of bones starting at the hip and going outwards to the extremities.

To manipulate a skeleton, given the same number of joints (joints being the points tying the whole thing together), one might just vary the bone lengths according to some rules. As long as we do it within certain limits, this fits perfectly with our procedural tools! Now we can just set random variation on bone length, similar to the pixel character's head above.
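Here is a small sketch of that idea, assuming a skeleton stored as a hierarchy of named bones rooted at the hip. The bone names, the base lengths, the 10% limit and the choice to give left and right limbs the same scale factor are all assumptions for the example, not a description of any particular engine's rig format.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Bone:
    name: str
    length: float  # centimetres, illustrative values only
    children: list["Bone"] = field(default_factory=list)


def vary_skeleton(root: Bone, seed: int, max_deviation: float = 0.10) -> Bone:
    """Return a copy of the hierarchy with each bone length scaled within limits."""
    rng = random.Random(seed)
    factors: dict[str, float] = {}

    def scaled(bone: Bone) -> Bone:
        # Left and right limbs share one factor so the character stays symmetric.
        key = bone.name.removeprefix("left_").removeprefix("right_")
        if key not in factors:
            factors[key] = rng.uniform(1.0 - max_deviation, 1.0 + max_deviation)
        return Bone(bone.name, bone.length * factors[key],
                    [scaled(child) for child in bone.children])

    return scaled(root)


def bone_lengths(root: Bone) -> dict[str, float]:
    """Flatten the hierarchy into {bone name: length} for inspection."""
    lengths = {root.name: root.length}
    for child in root.children:
        lengths.update(bone_lengths(child))
    return lengths


def arm(side: str) -> Bone:
    return Bone(f"{side}_upper_arm", 30.0, [Bone(f"{side}_forearm", 26.0)])


def leg(side: str) -> Bone:
    return Bone(f"{side}_thigh", 45.0, [Bone(f"{side}_shin", 42.0)])


BASE = Bone("hip", 20.0, [
    Bone("spine", 50.0, [arm("left"), arm("right")]),
    leg("left"),
    leg("right"),
])

base_lengths = bone_lengths(BASE)
variant_lengths = bone_lengths(vary_skeleton(BASE, seed=2022))
print(variant_lengths)
```

The joint count and hierarchy never change, only the lengths, so animations authored for the base skeleton still have the same bones to drive.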

It is conceivable to create more arbitrary skeleton configurations and it has been done in games to create wonderful variation of creatures. As you can imagine, animation and testing of these characters can be a daunting task. For our use case, a humanoid configuration will suffice. Maybe we have different limits on male and female skeletons etc.

The model mesh

Attached to the bones is what is arguably the most complex part of the character. The model or the mesh is what makes up the shape of the character. It is made up of a number of vertices, or points in space. Between these points we draw lines called edges, and together they create surfaces or faces. In the image below we have a mesh that is made up of quads, meaning four vertices per face, and you need at least three vertices to create a surface. (That is why you often hear talk about triangles when people talk about graphics in games.)

To manipulate these meshes, you simply move one of the vertices. This is what applications like 3ds Max, Maya and Blender excel at. Randomly moving vertices around in a procedural context probably would not make sense, as the outcome is very unpredictable. One could instead imagine that we create two extreme characters, one light and one heavy, and do the same for big/small noses, etc. We then vary some input parameters to create variations between those extreme values. It is easy to imagine a classical character creator in an RPG, with the sliders being the randomized values.
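Blending between two extremes can be sketched as a linear interpolation of vertex positions, assuming both meshes have the same vertices in the same order. The tiny three-vertex "meshes" and the function names below are placeholders for the example.

```python
import random

Vertex = tuple[float, float, float]


def blend_meshes(light: list[Vertex], heavy: list[Vertex], weight: float) -> list[Vertex]:
    """weight 0.0 gives the light mesh, 1.0 the heavy mesh, values between mix them."""
    if len(light) != len(heavy):
        raise ValueError("both meshes need the same vertex count and order")
    return [
        tuple(a + (b - a) * weight for a, b in zip(vertex_light, vertex_heavy))
        for vertex_light, vertex_heavy in zip(light, heavy)
    ]


def mesh_from_seed(light: list[Vertex], heavy: list[Vertex], seed: int) -> list[Vertex]:
    """The seed picks the slider position instead of a player dragging it."""
    weight = random.Random(seed).random()
    return blend_meshes(light, heavy, weight)


# Placeholder geometry: the same three vertices in a thin and a wide pose.
LIGHT = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 2.0, 0.0)]
HEAVY = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (1.0, 2.0, 0.5)]

halfway = blend_meshes(LIGHT, HEAVY, 0.5)
print(halfway)
print(mesh_from_seed(LIGHT, HEAVY, seed=2022))
```

With one weight per feature (body, nose, jaw and so on), every result stays inside the space spanned by the hand-made extremes, which is what makes the outcome predictable.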

Skin and textures

Now, after creating our model mesh, we need some color on this gray clay model. And that is what skin and textures are for. We could make our lives easy and create something like the Blue Man Group and then vary the colors just like on the pixel character above. But we probably want something more sophisticated, like this character made by an old colleague of mine, Björn Arvidsson:

Looking at a character like this, it can be a bit daunting to think about how we could possibly generate something looking so realistic. Luckily for us, thanks to other businesses, procedural textures, and procedural skin specifically, have gotten pretty far. By adding scars with some shader programming or tattoos with decals, we can create endless variation here as well.

Tools already available

There are other tools on the market to manipulate and create characters. Below is an example from Epic's MetaHuman. This is not a procedural tool per se, but it would be trivial to provide MetaHuman with a bunch of random variables as input and create a lot of characters. The problem with tools like MetaHuman is that it's a cloud service and we have no idea how many resources and assets go into creating those characters. Also, if you export these characters to disk they are pretty big, and creating/storing millions of them is just not practical. But this takes us to the first word in the title of the article.

Runtime generation and the GOALS use case

At GOALS we need characters that can scale up and down, since we are targeting both high-end and low-end machines. They cannot be on disk, as they don't exist (have not been born) when we ship, and we cannot use any manual labor to create them: there are simply too many of them. Also, back to the MetaHuman example: even if we were able to store them in the cloud, it is not a nice user experience to wait while we download them before you can play.

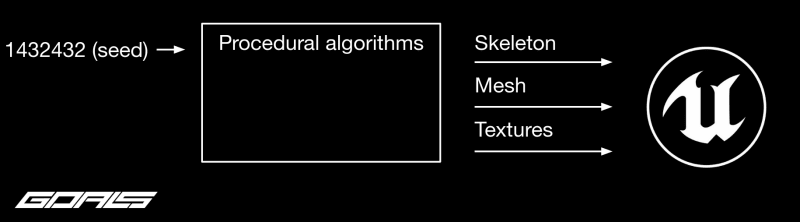

Enter runtime character generation. Everything we have talked about so far needs to be done at runtime, meaning while the game is starting or running. This is sometimes referred to as online procedural generation, as opposed to offline.

The idea is that when you boot the game, you get a list of seeds belonging to you. We pass each of them into our procedural box and create skeletons, meshes and textures that make sense for Unreal Engine in the GOALS use case. Then, to update with new cool features and looks etc., we just have to update the procedural algorithms. Tada!
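In very reduced form, the flow could look like the sketch below. The `Character` fields, their value ranges and the function names are invented for the illustration and say nothing about the actual GOALS pipeline; a real version would produce engine-specific skeletons, meshes and textures instead of three numbers.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Character:
    seed: int
    height_scale: float  # input to skeleton generation
    body_weight: float   # 0.0 = light extreme, 1.0 = heavy extreme
    skin_tone: int       # index into a hypothetical set of six skin textures


def generate_character(seed: int) -> Character:
    """The 'procedural box': everything about the character comes from the seed."""
    rng = random.Random(seed)
    # The order of these draws is part of the format. Reordering them
    # changes the character that an existing seed produces.
    return Character(
        seed=seed,
        height_scale=rng.uniform(0.9, 1.1),
        body_weight=rng.random(),
        skin_tone=rng.randrange(6),
    )


def load_roster(seeds: list[int]) -> list[Character]:
    """Run at boot: nothing is read from disk except the seeds themselves."""
    return [generate_character(seed) for seed in seeds]


my_seeds = [11, 2022, 18_446_744_073_709_551_615]
roster = load_roster(my_seeds)
for character in roster:
    print(character)
```

The only data that has to travel between server and client is the list of integers. Updating `generate_character` updates every character at once, which is both the appeal and the thing to be careful with.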

Future use cases

Considering what has been mentioned above, imagine if someone created engine adapters for multiple engines. Then you could in theory, provided you have the right to use a particular seed, take it with you as you pass between engines. This is the holy grail of the metaverse.

If this sounds exciting, I would recommend you come work on it at GOALS and share your work with the world!
