Medium Blog Scraper 🤖

Shreyas Mohite

Posted on June 10, 2023

Have you ever wondered how to save your favorite blogs on your local machine, so you can read them later in offline mode or share them directly on your own personal blogging site?

I came up with some scripts that save blogs in Markdown format, which makes it easy to publish them on your personal blogging website.

Let's get started 🚀

First of all, we need some dependencies for our scripts.

Let's create a file named requirements.txt with the following contents:

requirements.txt

```text
beautifulsoup4==4.11.1
bs4==0.0.1
certifi==2022.9.24
charset-normalizer==2.1.1
deta==1.1.0
idna==3.4
markdownify==0.11.6
python-dotenv==0.21.0
requests==2.28.1
six==1.16.0
soupsieve==2.3.2.post1
urllib3==1.26.12
```

Save all the dependencies in that file, then install them:

```bash
pip install -r requirements.txt
```

Now create a new Python file named scrap.py.

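scrap.py

```python
import re

import requests
from bs4 import BeautifulSoup


def ScrapBlogs(tag_url):
    # Download the tag page; non-ASCII characters are dropped, as in the original
    response = requests.get(str(tag_url), timeout=30).text.encode('utf8').decode('ascii', 'ignore')
    soup = BeautifulSoup(response, 'html.parser')

    links = set()
    # href=True skips <a> tags that have no href attribute
    for x in soup.find_all("a", href=True):
        href = x['href']
        # Keep relative post links only: drop absolute URLs, tag pages,
        # "/?source" tracking links, plan pages and author profile links
        if 'https' not in href and \
                "/tag/" not in href and \
                "/?source" not in href and \
                "/plans?" not in href and \
                not re.match(r"/@\w*[a-zA-Z0-9]\?", href):
            links.add(href)

    # Sets have no guaranteed order, so sort before slicing to get repeatable results
    url_list = sorted(links)
    # Skip the first entry and return at most six post URLs
    return url_list[1:7]
```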
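To see which links survive the filter without sending any requests to Medium, the checks from ScrapBlogs can be pulled into a small pure function. This is a sketch: the name `keep_href` and the sample hrefs below are hypothetical, chosen only to exercise each rule once.

```python
import re

# Hypothetical helper mirroring the conditions inside ScrapBlogs
PROFILE_LINK = re.compile(r"/@\w*[a-zA-Z0-9]\?")


def keep_href(href: str) -> bool:
    """Return True if ScrapBlogs would keep this href as a post link."""
    return (
        "https" not in href                 # absolute links
        and "/tag/" not in href             # other tag pages
        and "/?source" not in href          # tracking links back to the home page
        and "/plans?" not in href           # membership plan pages
        and not PROFILE_LINK.match(href)    # author profile links like "/@name?source=..."
    )


# Hypothetical sample hrefs, one per rule
samples = [
    "/@jane/building-a-rest-api-with-express-1a2b3c4d5e6f",
    "https://policy.medium.com/medium-terms-of-service",
    "/tag/nodejs",
    "/?source=post_page",
    "/plans?source=upgrade",
    "/@jane?source=post_page",
]

kept = [href for href in samples if keep_href(href)]
print(kept)  # ['/@jane/building-a-rest-api-with-express-1a2b3c4d5e6f']
```

Only the post link passes; the profile link is rejected by the regex because the handle is followed directly by `?`, while a post path continues with `/`.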

Once that's done, we need another file for our main script.

Name it main.py.

main.py

```python
import os
import re

import markdownify
import requests
from bs4 import BeautifulSoup

from scrap import ScrapBlogs

urls = "https://medium.com/tag/node-js-api-development"

# The output folder is named after the tag, e.g. "node-js-api-development"
out_dir = urls.split("/")[-1]

urls = ScrapBlogs(urls)

os.makedirs(out_dir, exist_ok=True)


def blogs_scrap():
    try:
        for url in urls:
            scrap_url = f"https://medium.com{url}"

            # Build the file name from the purely alphabetic words in the URL path
            find_name = re.findall(r'\w+', url)
            name_list = [x for x in find_name if x.isalpha()]
            file_name = "_".join(name_list)

            response = requests.get(scrap_url, timeout=30).text.encode('utf8').decode('ascii', 'ignore')
            soup = BeautifulSoup(response, 'html.parser')
            print(scrap_url)

            # Skip pages without a <section>, i.e. posts that are not publicly readable
            find_section = soup.find("section")
            if find_section is None:
                continue

            markdown_section = markdownify.markdownify(str(find_section), heading_style="ATX")
            with open(f"{out_dir}/{file_name}.md", "w", encoding="utf-8") as f:
                # The first line of each file is the source URL, followed by the post body
                f.write(f"{scrap_url}\n")
                f.write(markdown_section)

        return "Scrapped Successfully"
    except Exception as e:
        print(e)


blogs_scrap()
```
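The file-naming step in blogs_scrap keeps only the purely alphabetic words of the URL path, so the author handle stays while Medium's trailing post ID (and any other word containing a digit) is dropped. Here is that logic as a standalone function; `file_name_from_url` and the example paths are hypothetical.

```python
import re


def file_name_from_url(url: str) -> str:
    """Mirror the naming logic in blogs_scrap: join the alphabetic words with '_'."""
    words = re.findall(r"\w+", url)                    # split on non-word characters
    return "_".join(w for w in words if w.isalpha())  # drop tokens containing digits or '_'


print(file_name_from_url("/@jane/building-a-rest-api-with-express-1a2b3c4d5e6f"))
# jane_building_a_rest_api_with_express
```

One side effect worth knowing: because words containing digits vanish, two posts whose paths differ only in such words map to the same file name, and the second one overwrites the first.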

Make sure the URL you use is a Medium tag page, i.e. one that contains /tag/ in its path.
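Because main.py names the output folder after the last path segment (`urls.split("/")[-1]`), a URL with a trailing slash or a query string produces an empty or odd folder name. A minimal sketch of a stricter check you could run before scraping, using only `urllib.parse`; the function `tag_folder` is hypothetical and not part of the original scripts, and it deliberately rejects anything other than `https://medium.com/tag/<name>`.

```python
from urllib.parse import urlparse


def tag_folder(url: str) -> str:
    """Return the tag name for a Medium tag URL, or raise ValueError."""
    parsed = urlparse(url)
    # Ignore empty segments, e.g. the one produced by a trailing "/"
    parts = [p for p in parsed.path.split("/") if p]
    if parsed.netloc != "medium.com" or len(parts) != 2 or parts[0] != "tag":
        raise ValueError(f"not a Medium tag URL: {url}")
    return parts[1]


print(tag_folder("https://medium.com/tag/node-js-api-development"))  # node-js-api-development
```

The query string lives in `parsed.query`, not in the path, so `https://medium.com/tag/python/?source=x` still yields `python`.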

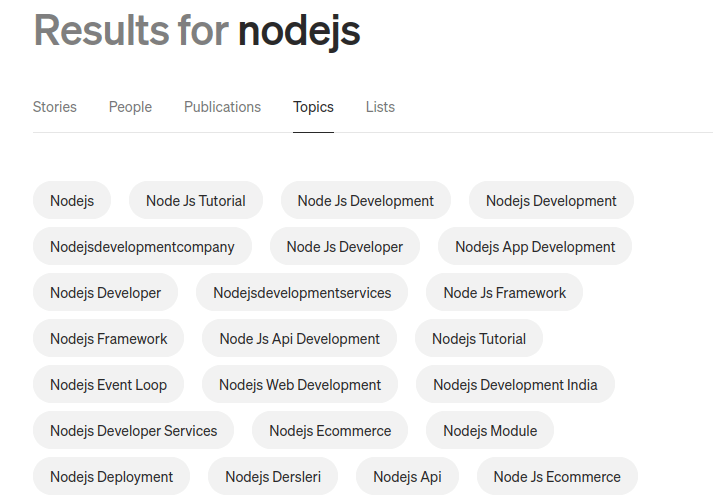

For example, consider the tag URL used in main.py: https://medium.com/tag/node-js-api-development


Now let's scrape some blogs related to this URL.

When we run main.py, it fetches the posts linked from this tag page and saves each one as a Markdown file in a folder named after the tag, as you can see in the image.

But why do we get only six .md files?

The slice url_list[1:7] at the end of ScrapBlogs returns at most six post URLs, and we only save blogs that are public to users: main.py skips any page in which no <section> element is found.
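main.py decides whether a page is saved purely by whether BeautifulSoup finds a `<section>` element. If you want to check that rule against a saved HTML file without any third-party packages, the same presence test can be sketched with the standard-library `html.parser`. `SectionFinder` and `has_section` are hypothetical names, the sample HTML is made up, and this reproduces only the check, not the Markdown conversion.

```python
from html.parser import HTMLParser


class SectionFinder(HTMLParser):
    """Record whether at least one <section> start tag appears."""

    def __init__(self):
        super().__init__()
        self.found = False

    def handle_starttag(self, tag, attrs):
        if tag == "section":  # html.parser passes tag names in lower case
            self.found = True


def has_section(html: str) -> bool:
    finder = SectionFinder()
    finder.feed(html)
    finder.close()
    return finder.found


print(has_section("<article><section><h1>Title</h1></section></article>"))  # True
print(has_section("<div>No section here</div>"))  # False
```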

To fetch more or fewer posts, change the slice at the end of ScrapBlogs in scrap.py.
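One way to make that easier is to turn the slice into parameters. This sketch assumes hypothetical `skip` and `limit` arguments whose defaults reproduce the original `[1:7]`, and it sorts the links first, because slicing a list built from a set would otherwise select an arbitrary subset on each run.

```python
def pick_posts(links, skip=1, limit=6):
    """Return up to `limit` links after skipping the first `skip`, in sorted order."""
    ordered = sorted(links)  # a set has no guaranteed order, so sort before slicing
    return ordered[skip:skip + limit]


# Hypothetical post paths
links = {"/@a/post-one", "/@b/post-two", "/@c/post-three", "/@d/post-four"}
print(pick_posts(links, skip=1, limit=2))  # ['/@b/post-two', '/@c/post-three']
```

Inside ScrapBlogs, the last line would then become `return pick_posts(links, skip, limit)` with `skip` and `limit` added to its signature.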


If you liked this blog, please give it a like.
