Victor Dorneanu

Posted on May 11, 2023

Introduction

During this year’s keynote, the main focus was on the velocity companies - and especially start-ups - need in order to bring their products to the market, highlighting the importance of agility. I was particularly surprised to learn that Flixbus is fully managing their infrastructure in AWS, showcasing the power and potential of cloud technologies. It’s impressive to see how Flixbus has invested in their tech stack, especially during the pandemic when travel was at an all-time low.

As with any start-up, challenges such as speed, regulatory compliance, costs, and growth can pose significant obstacles. However, with the right mindset and strategy, start-ups can overcome these challenges and battle-test their products on the market. The ability to move quickly while still complying with regulations, minimizing costs, and driving sustainable growth is key to success in today’s fast-paced market.

Attended talks

PAS216: Overcoming Cloud Security Challenges at Scale

Summary

For the Security team it’s crucial to be aware of the challenges that come with migrating to the cloud. Moving to the cloud can be beneficial, but these challenges still have to be taken into account, and the organization has to grow with the technology as well. Managing them requires the right tool(s). When selecting a tool suite, it’s critical to make sure that it fits the needs of both the developers and the organization as a whole, as everyone is in the same boat. In this presentation, the speaker mainly talked about how Orca Security was able to solve their security problems.

Notes

Cloud Challenges in general

- Scalability
  - The size becomes very abstract
  - "You can't walk through a data center to get a sense"
  - Even small mistakes get expensive quickly
  - Every manual process will break
- Growth During Transformation
  - There is no time - growth drives its own momentum
  - Delay makes any problem bigger
  - Organizational change is hard
  - Even more for non-tech teams
- Level of Complexity
  - Multicloud by strategy (at least for SAP)
  - Large portfolio of products, often deployed in regulated industries
  - Transitioning to cloud-native and microservices architectures
  - Bewildering organization with high autonomy within business units and developer teams

Cloud Challenges for Security

- Scale
  - Large scale means many findings (good or bad)
  - Everything is an engineering job
  - Everything can break at any time, no 'test' environment
- Growth During Transformation
  - Our security budget doesn't grow linearly with growth in the landscape
  - Security organizations often don't run or adapt to change as fast as DevOps teams
- Level of Complexity
  - How do you centralize Security functions when developer teams have even more autonomy?
  - How do you make them not hate you, for making them do work to get more work?
  - How do you get access to systems or get tooling deployed?

Shared Fate

- We are all in this together

- Main keys with regard to the rest of the team
  - Collaborative
  - Relieving Operational Burden
  - Enabling
  - Aligned Goals and Targets

Contextualization and Risk-Based Prioritization

- Context
  - Context determines the severity and urgency of the finding
  - Enrich with organizational metadata to assess business risk to the organization
  - Avoid wild goose chases or impossible standards and SLAs
- Tool Sprawl
  - Every additional data source requires data enrichment
  - Each has its own costs
  - Very nice if one tool does a lot and contextualizes across data sources
- Alert Fatigue
  - The organization doesn't scale with the size of the landscape
  - We have to focus on what is most important
  - Limited developer time for security
  - Don't misuse the Security team's resources
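To make the idea of contextualization a bit more concrete, here is a minimal sketch of risk-based prioritization. It is not taken from the talk and does not reflect how any particular product works: the `Finding` and `AssetContext` shapes, the score weights and the sample inventory are all assumptions made up for illustration. The point is only that the same raw severity can end up with a different priority once organizational metadata is attached.

```typescript
// Hypothetical data shapes; none of these names come from the talk or a vendor API.
type Severity = "low" | "medium" | "high" | "critical";

interface Finding {
  id: string;
  severity: Severity;
  resourceId: string;
}

// Organizational metadata used to enrich a finding.
interface AssetContext {
  internetFacing: boolean;
  environment: "prod" | "dev";
  owningTeam: string;
}

interface PrioritizedFinding extends Finding {
  score: number;
  owningTeam: string;
}

// Assumed weights: the raw severity is only the starting point.
const BASE_SCORE: Record<Severity, number> = { low: 1, medium: 3, high: 6, critical: 9 };

function prioritize(
  findings: Finding[],
  inventory: Record<string, AssetContext | undefined>,
): PrioritizedFinding[] {
  return findings
    .map((finding) => {
      const ctx = inventory[finding.resourceId];
      let score = BASE_SCORE[finding.severity];
      if (ctx?.internetFacing) score += 3; // exposure raises urgency
      if (ctx?.environment === "prod") score += 2; // business impact raises urgency
      return { ...finding, score, owningTeam: ctx?.owningTeam ?? "unknown" };
    })
    .sort((a, b) => b.score - a.score); // highest risk first
}

const inventory: Record<string, AssetContext | undefined> = {
  "vm-1": { internetFacing: true, environment: "prod", owningTeam: "checkout" },
  "vm-2": { internetFacing: false, environment: "dev", owningTeam: "sandbox" },
};

const ranked = prioritize(
  [
    { id: "F-1", severity: "critical", resourceId: "vm-2" },
    { id: "F-2", severity: "high", resourceId: "vm-1" },
  ],
  inventory,
);
```

In this made-up example the "high" finding on the internet-facing production machine is ranked above the "critical" finding on an isolated dev machine, which is the kind of reordering that helps against alert fatigue.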

COM204: Building Infrastructure AWS CDK vs Terraform

Summary

“CDK vs. Terraform” is an ongoing and highly debated topic among IT professionals. Though the speaker was known to be a fan of Terraform, she now showed its main pain points and highlighted some solutions with CDK. She believes that most DevOps professionals opt for Terraform due to its ease of use, as it doesn’t require any programming knowledge, and it is considered one of the best frameworks for coding infrastructure. However, the often-mentioned multi-cloud argument does not hold up well, as deploying the same Terraform stack to another cloud provider is not an easy task. Additionally, there are major differences in how the two solutions manage state, and each one needs its own approach for deploying infrastructure components safely and reliably.

Notes

Pain points with Terraform

- Conditions

```hcl
variable "environment" {
  description = "Environment for security groups"
  type        = string
  default     = "dev"
}

resource "aws_security_group" "web" {
  count       = var.environment == "prod" ? 1 : 0
  name_prefix = "web-"

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_security_group" "db" {
  count       = var.environment == "prod" ? 1 : 0
  name_prefix = "db-"

  ingress {
    from_port   = 3306
    to_port     = 3306
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

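```typescript
import * as cdk from "aws-cdk-lib";
import * as ec2 from "aws-cdk-lib/aws-ec2";

const app = new cdk.App();
const stack = new cdk.Stack(app, "ConditionsStack");

// Define the VPC
const vpc = new ec2.Vpc(stack, "VPC", {
  maxAzs: 2,
  natGateways: 1,
});

let enablePublicIp: boolean = true;

// Use a condition to determine whether to create an instance with a public IP
if (process.env.ENABLE_PUBLIC_IP === "false") {
  enablePublicIp = false;
}
```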

- Policy handling

```hcl
resource "aws_iam_policy" "example_policy" {
  name   = "example_policy"
  policy = data.template_file.example_policy.rendered
}

data "template_file" "example_policy" {
  template = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::example-bucket/*"
      ]
    },
    {
      "Action": [
        "ec2:DescribeInstances"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
EOF
}
```

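```typescript
// Inside a Stack constructor; assumes the dynamodb and iam modules of aws-cdk-lib are imported
const dynamoTable = new dynamodb.Table(this, 'MyDynamoTable', {
  partitionKey: { name: 'id', type: dynamodb.AttributeType.STRING },
});

const instanceRole = new iam.Role(this, 'MyInstanceRole', {
  assumedBy: new iam.ServicePrincipal('ec2.amazonaws.com'),
});

// grant* methods expect an IAM principal (role, user, function, ...), not a security group
dynamoTable.grantReadWriteData(instanceRole);
```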

- Create Lambdas

```hcl
provider "aws" {
  region = "us-west-2"
  # Credentials are read from the environment or the shared AWS config
  # instead of being hard-coded here.
}

resource "aws_iam_role" "lambda_role" {
  name = "lambda_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect    = "Allow"
        Principal = { Service = "lambda.amazonaws.com" }
        Action    = "sts:AssumeRole"
      }
    ]
  })
}

resource "aws_lambda_function" "my_lambda_function" {
  filename      = "my_lambda_function.zip"
  function_name = "my_lambda_function"
  role          = aws_iam_role.lambda_role.arn
  runtime       = "nodejs18.x"
  handler       = "index.handler"
}
```

Create a Lambda function with Terraform

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as lambda from 'aws-cdk-lib/aws-lambda';
import * as lambdaNodejs from 'aws-cdk-lib/aws-lambda-nodejs';

export class LambdaStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // NodejsFunction bundles the TypeScript entry point during synthesis
    const lambdaFn = new lambdaNodejs.NodejsFunction(this, "SimpleLambda", {
      entry: 'src/index.ts',
      handler: 'handler',
      runtime: lambda.Runtime.NODEJS_18_X,
      memorySize: 256
    });

    new cdk.CfnOutput(this, "LambdaFunctionArn", {
      value: lambdaFn.functionArn
    });
  }
}
```

Create a Lambda function with CDK

- Testing
  - For Terraform you have terratest
  - In CDK it's quite easy to test your expectations:

```typescript
import * as cdk from "aws-cdk-lib";
import { Template } from "aws-cdk-lib/assertions";
import * as myapp from "../lib/myapp-stack";

test("MyAppStack creates an S3 bucket", () => {
  const app = new cdk.App();
  const stack = new myapp.MyAppStack(app, "MyAppStack");

  // Synthesize the stack into a CloudFormation template
  const template = Template.fromStack(stack);

  // Assert that the S3 bucket is created
  template.resourceCountIs("AWS::S3::Bucket", 1);

  // Assert that the S3 bucket has the correct properties
  template.hasResourceProperties("AWS::S3::Bucket", {
    BucketName: "myapp-bucket",
    VersioningConfiguration: {
      Status: "Enabled",
    },
  });
});
```

- State management
  - Terraform
    - tf state
    - keep it in an S3 bucket
    - enable versioning to be able to "rollback" to a previous workable state
    - What if the state is gone (for whatever reason)?
  - CDK
    - CloudFormation based
    - rollbacks are handled automagically

Decision template

Whether to go with Terraform or CDK depends on:

- team staffing

- the preferences of the developers

- availability of the services to be provisioned

- if workflows are easily automatable

- quality of the documentation

- compliance rules of the company

STP204: Web3 - Build, launch and scale your Web3 startup on AWS ft. 1inch

Summary

Tim Berger from AWS highlighted what is necessary in order to interact with a blockchain. With blockchain technology gaining significant traction, Tim emphasized the importance of understanding the fundamentals of blockchain interaction in order to take full advantage of its potential.

Furthermore, Tim also showcased some sample architectures where Amazon Managed Blockchain service has been successfully implemented. The audience gained insights into the various use cases of the service and how it can be integrated with other AWS products to achieve a seamless blockchain experience.

In addition to Tim’s presentation, Sergej Kunz, the co-founder of 1inch, also gave an exciting talk on how he and his team use AWS services to provide Web3-based applications.

Also have a look at

- Build, launch, and scale your Web3 startup at AWS (from AWS Summit ANZ 2022).

- Deep Dive on Amazon Managed Blockchain

Open Datasets

- For BTC:
  - blocks/date={YYYY-MM-DD}/{id}.snappy.parquet
  - transactions/date={YYYY-MM-DD}/{id}.snappy.parquet

```shell
aws s3 ls --no-sign-request s3://aws-public-blockchain/v1.0/btc/
```

- For ETH:
  - blocks/date={YYYY-MM-DD}/{id}.snappy.parquet
  - transactions/date={YYYY-MM-DD}/{id}.snappy.parquet
  - logs/date={YYYY-MM-DD}/{id}.snappy.parquet
  - token_transfers/date={YYYY-MM-DD}/{id}.snappy.parquet
  - traces/date={YYYY-MM-DD}/{id}.snappy.parquet
  - contracts/date={YYYY-MM-DD}/{id}.snappy.parquet

```shell
aws s3 ls --no-sign-request s3://aws-public-blockchain/v1.0/eth/
```

An example:

```shell
aws s3 ls --no-sign-request s3://aws-public-blockchain/v1.0/btc/transactions/date=2023-05-08/4ed1639d0b03a562def6755a0c0465a11248c04cd389ea09cf87a35f6c95b6f9.snappy.parquet
```
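Since the datasets are partitioned by date, a small helper can build the prefix for a given chain, table and day. This is only a sketch based on the layout listed above; the function name `partitionUri` and the `TABLES` lookup are my own and not part of any AWS SDK. The resulting prefix could then be passed to `aws s3 ls --no-sign-request`.

```typescript
type Chain = "btc" | "eth";

// Tables per chain, as listed in the dataset layout above.
const TABLES: Record<Chain, readonly string[]> = {
  btc: ["blocks", "transactions"],
  eth: ["blocks", "transactions", "logs", "token_transfers", "traces", "contracts"],
};

// Hypothetical helper: builds the S3 prefix of one daily partition.
function partitionUri(chain: Chain, table: string, day: Date): string {
  if (TABLES[chain].indexOf(table) === -1) {
    throw new Error(`Unknown table "${table}" for chain "${chain}"`);
  }
  // toISOString() is always UTC, so the first 10 characters are YYYY-MM-DD.
  const date = day.toISOString().slice(0, 10);
  return `s3://aws-public-blockchain/v1.0/${chain}/${table}/date=${date}/`;
}

// Months are zero-based in Date.UTC, so 4 means May.
const may8 = new Date(Date.UTC(2023, 4, 8));
const btcTransactions = partitionUri("btc", "transactions", may8);
console.log(btcTransactions);
```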

ARC304: Reactive Architecture on AWS: from 0 to 1.6M events/minute

Reactive systems

First of all, some introductory words about reactive systems. As stated in the Reactive Manifesto, user expectations have changed dramatically over the past years. Users expect applications to be responsive (response times measured in milliseconds), and software developers hope their systems will be resilient enough to cope with failures in an elegant way rather than ending in full disaster.

The manifesto emphasizes the importance of building software that can easily adapt to changes in user demand, system load and failure conditions. All of this has to be taken into consideration while still remaining flexible and scalable enough to handle future growth and evolution.

Reactive systems are:

- responsive
  - responsiveness is key here
  - problems can be detected quickly
  - response time is also an aspect of the quality of service
- resilient
  - systems stay responsive in case of failure
  - resilience is achieved by
    - replication
    - containment
    - isolation
    - delegation
- elastic
  - systems remain responsive under various loads
  - auto-scaling of resources
- message-driven
  - in contrast to event-driven
  - systems use async message-passing for communication between components

Bitpanda Pro

Bitpanda Pro is a trading platform that allows users to trade digital assets (such as cryptocurrencies) but also metals against fiat currencies such as EUR, USD and GBP. The platform offers various trading tools such as charts, advanced order types, real-time data and access to liquidity providers.

In this talk Bitpanda spoke about their reactive architecture and how they managed to set up their infrastructure accordingly. Also impressive was the fact that they’ve managed to serve 1.6M events per minute!

Notes

The first take

The initial step taken to build the reactive infrastructure in AWS:

| Target | Solution |
|---|---|
| Avoid complexity of container orchestration | AWS ECS on AWS Fargate |
| Push back on the microservices hype | Mono-repo + single deployments |
| High-performance message-passing framework | vertx.io |
| App responsive during rapid client activity spike | ALB + Auto-Scaling groups |
| Isolate failures and guarantee uninterrupted trading experience | Redundant deployment across 3 AZ |
| (near) Zero downtime - uninterrupted trading venue | AWS Aurora |

Second take

This one was about elasticity and message passing. Each traded pair (BTC, EUR, USD etc.) lives in an isolated world:

- they used a dedicated Kafka topic partition and ECS task

- one Kafka topic partition per traded pair

They've also implemented back-pressure control and load-shedding.
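The talk did not go into implementation details, so the following is only a rough sketch of what back-pressure and load-shedding can look like in principle. It is not Bitpanda's code and it does not model Kafka at all: `BoundedQueue`, `PairRouter`, the capacity and the high watermark are all assumptions. Each traded pair gets its own bounded queue, so a spike on one pair cannot starve the others.

```typescript
// Outcome of offering a message to a bounded queue.
type OfferResult = "accepted" | "slow-down" | "shed";

class BoundedQueue<T> {
  private readonly items: T[] = [];

  constructor(
    private readonly capacity: number,
    private readonly highWatermark: number,
  ) {}

  offer(item: T): OfferResult {
    // Load-shedding: drop new work instead of growing without bound.
    if (this.items.length >= this.capacity) return "shed";
    this.items.push(item);
    // Back-pressure: tell the producer to slow down before the queue is full.
    return this.items.length >= this.highWatermark ? "slow-down" : "accepted";
  }

  poll(): T | undefined {
    return this.items.shift();
  }

  size(): number {
    return this.items.length;
  }
}

// One isolated queue per traded pair (hypothetical stand-in for one partition per pair).
class PairRouter<T> {
  private readonly queues: { [pair: string]: BoundedQueue<T> } = {};

  constructor(
    private readonly capacity: number,
    private readonly highWatermark: number,
  ) {}

  offer(pair: string, item: T): OfferResult {
    let queue = this.queues[pair];
    if (!queue) {
      queue = new BoundedQueue<T>(this.capacity, this.highWatermark);
      this.queues[pair] = queue;
    }
    return queue.offer(item);
  }
}

const router = new PairRouter<number>(3, 2);
const btcResults = [1, 2, 3, 4].map((order) => router.offer("BTC_EUR", order));
const ethResult = router.offer("ETH_EUR", 1);
console.log(btcResults, ethResult);
```

With a capacity of 3 and a high watermark of 2, the busy pair first gets asked to slow down and finally has its fourth message shed, while the other pair is still accepted without any signal.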

Fun stuff

serverlesspresso

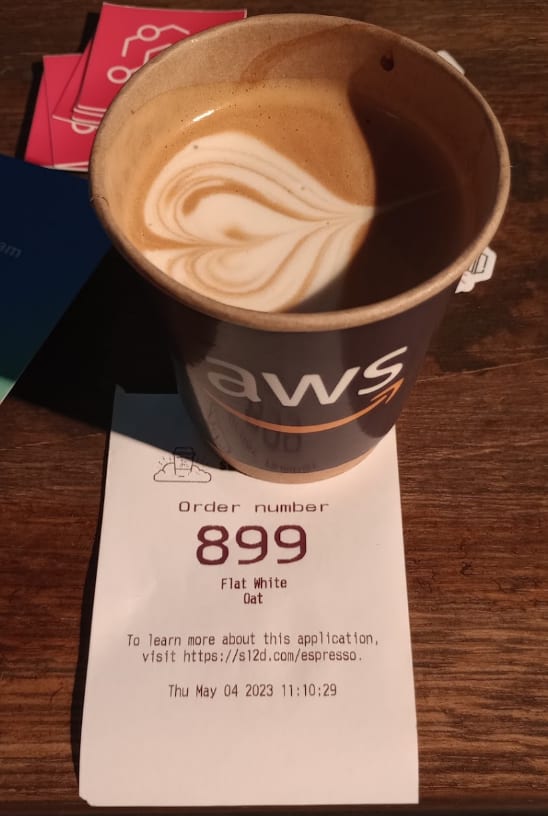

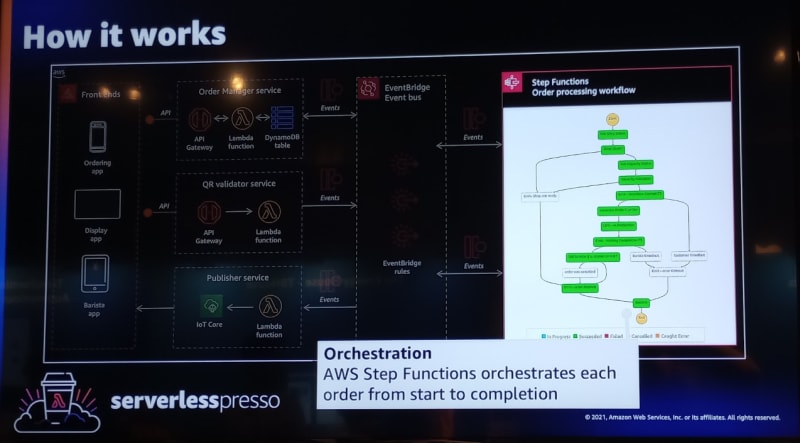

The serverlesspresso application is a serverless coffee bar exhibit, first seen at AWS re:Invent 2021. I had the chance to see and also try it in Berlin 😎.

Serverless application

Ordering

The result ☕

The architecture
