ChatGPT vs. Bard: What Can We Expect?

Jose Francisco

Posted on February 16, 2023

Big tech just dropped some big news: Google is soon releasing its own ChatGPT-like large language model (LLM) called Bard. And now many are beginning to speculate about what exactly this AI battle is going to look like. Will the newcomer Bard dominate our reigning champion, ChatGPT? Or will the incumbent conversational AI model defend its crown? It's a real Rocky vs. Creed story. But honestly it's hard to tell which AI is the main character. To each, the other is a worthy opponent.

But here's what we can expect. Here, we'll take a look at how these models were trained (pun intended) and then examine their build (again, pun intended). By the end, we might be able to determine which model is the underdog and which is the public's favorite. 🥊

Their training...

Just like boxers, AI models have to train before they can show their skills to the public. Both ChatGPT and Bard have unique training styles. Specifically, ChatGPT runs on a GPT-3.5 model while Bard runs on LaMDA.

But what does that mean?

Well, we can think of GPT-3.5 as ChatGPT's "brain" while LaMDA is Bard's. The main commonality between them is the fact that they are both built on Transformers. Now, transformers are quite a hefty topic, so if you'd like to do a deep-dive, read our definitive guide on transformers here. But for our purposes here, the only thing you have to know about transformers is that they allow these language models to "read" human writing, to pay attention to how the words in such writing relate to one another, and to predict what words will come next based on the words that came ___.

(If you can fill in the blank above, you think like a Transformer-based AI language model. Or, more properly, the model thinks like you 😉)

Okay, so we know that both ChatGPT and LaMDA have neural net "brains" that can read. But as far as we know, that's where the commonalities end. Now come the differences. Mainly, they differ in what they read.

OpenAI has been relatively secretive about what dataset GPT-3.5 was trained on. But we do know that GPT-2 and GPT-3 were both trained at least in part on The Pile---a dataset that contains multiple, complete fiction and non-fiction books, texts from Github, all of Wikipedia, StackExchange, PubMed, and much more. As a result, we can assume that GPT-3.5 has at least a little bit of The Pile within its metaphorical gears as well. And this dataset is massive, weighing in at a little over 825 gigabytes of raw text.

Check out the contents of The Pile below (source):

But here's the rub: Conversational language is not the same as written language. An author may write rhapsodically but come off as curt in one-on-one conversation. Likewise, the way that I am writing in this article is different from the way I'd speak if you were in the same room as me. As a result, OpenAI couldn't just release GPT-3.5 under the alias "ChatGPT" and call it a day. Rather, OpenAI needed to fine-tune GPT-3.5 on conversational texts to create ChatGPT.

That is, OpenAI literally had humans roleplay with themselves---acting both as an AI assistant and its user through a process known as reinforcement learning from human feedback (RLHF). Then, after enough of these conversations were constructed, they were fed to GPT-3.5. And after enough exposure to conversational dialogue, ChatGPT emerged. You can read about this fine-tuning process in more detail under the "Methods" section of OpenAI's blog on ChatGPT.

And this is where some may feel that Bard has an edge. See, LaMDA wasn't trained on The Pile. Rather, LaMDA specialized in reading dialogue from the get-go. It didn't read books; it's patterned on the pace and patois of conversation. As a result, Bard's brain picked up on the details that distinguish open-ended conversation from other forms of communication.

For example, an article like this one focuses on a single topic the whole time over the period of around 1,200-1,300 words. Meanwhile, a conversation of the same word-count may shift from "How are you doing?" to "Oh my goodness, no way" to "I love that song, have you heard the album yet?" to "Wait, my boyfriend's calling. One sec."

In other words, whereas ChatGPT's brain first learned to read novels, research papers, code, and Wikipedia before it learned how to have a human-like conversation, Bard's brain learned nothing but conversation. Does this mean that Bard is going to be a better conversationalist than ChatGPT? Well, we're going to have to talk to it to find out.

Their build 💪🧠

Google's CEO states that, at least to start, Bard is backed by a lightweight version of the LaMDA model. But does that mean Bard is going to be in a lower weight class than ChatGPT?

Not necessarily.

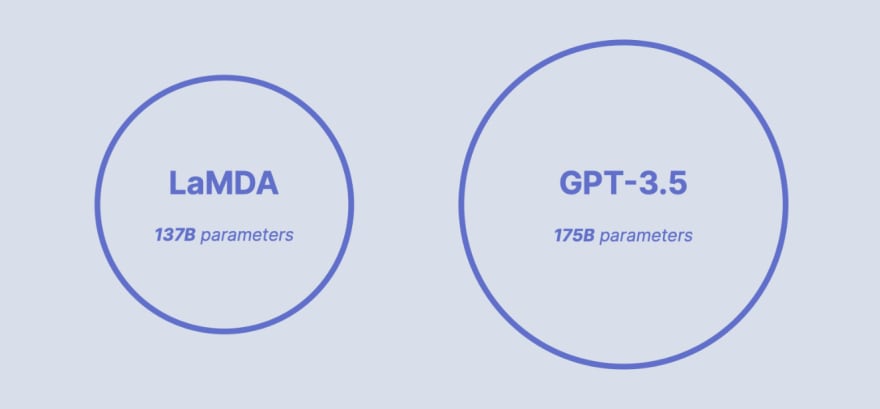

The stats would indicate that both these models are in the same weight class. Take a look:

🪙 Tokens 🪙

For our purposes, think of tokens as word fragments. For instance, the word "reorganizing" can be broken down into three tokens:

"re" → a prefix meaning "again",

"organiz" → the root of the word "organize"

"ing" → a suffix that denotes a gerund or present participle

Check out the image below to see more examples of tokenized word fragments:

See, GPT-3 and GPT-3.5 were trained on something to the order of 300-400 billion tokens. LaMDA, on the other hand, was trained on 1.56 trillion (with a T) tokens that were then broken down into 2.81 trillion SentencePiece tokens. And while this initial version of Bard contains a lightweight version of LaMDA, future iterations of the AI may come equipped with a full, heavyweight LaMDA. And who knows how that'll perform...

🤖 Parameters 🤖

Google publicly shares that LaMDA contains up to 137B model parameters while OpenAI says GPT-3.5 models hover around 175B parameters. It seems that, unlike tokens, the favor leans towards GPT in this category.

✅ Factual Correctness ✅

Here, Google proudly proclaims that they intentionally fleshed out the correctness of LaMDA's responses to knowledge-based questions by actively going through their dataset and annotating moments where LaMDA would have to resort to looking up factual information. When such questions arise, LaMDA is trained to call an external system to retrieve this encyclopedic information. This process is creatively called "information retrieval."

OpenAI, on the other hand, admits its limitations in this particular field. If you've ever used ChatGPT, you know about these shortcomings first-hand. Warnings are posted all over the place in the ChatGPT UI. So while we love our reigning champion, we have to admit that it isn't perfect.

In fact, ChatGPT---at least to date---is pretty definitive about saying "my training data ends in 2021." But Bard ostensibly remains okay with discussing more current events. But anyone outside of the current beta-testing group doesn't exactly know the extent to which that is the case.

The edge seems to lean towards Bard here---at least when it comes to discussing current-ish events. But we'll have to wait to use it to see for ourselves.

Conclusion

When it comes to conversation, Bard really seems to be a formidable competitor to ChatGPT. With its edge in conversational training and its explicit training in factual grounding, Bard might come out as the favorite. But this by no means makes ChatGPT an underdog. Again, we'll have to wait and see.

This battle may be the next iPhone vs. Android. Or the next Mac vs. PC. Or even the legendary emacs vs. vim. (Just don't get us started on tabs versus spaces.) Our headliners will indeed provide us with some interesting content. But for now, let's all rest happy, knowing that---regardless of the victor---we're all champions for being able to access, use, and analyze such incredible technology.

🎵Rocky outro theme fades into the background 🎵

(This blog post was written on February 8th 2023, two days after Google AI announced Bard.)

Posted on February 16, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.