Unity3D Fundamentals

Vishnu Sivan

Posted on May 30, 2022

Unity is a cross-platform game engine designed for the development of 2D and 3D games. It supports deployment to more than 20 platforms, including Android, PC, and iOS. Unity can also be used to create AR and VR content and is considered one of the best platforms for building metaverse content.

In this article, we will learn the basic building blocks of Unity3D, such as Assets, GameObjects, Prefabs, Lighting, Physics, and Animations.

Assets

An asset is the representation of an item used in the project. Assets fall into two groups: internal and external. Assets created inside the Unity Editor are called internal assets, for example an Animator Controller, an Audio Mixer, or a Render Texture. External assets are imported into Unity, such as a 3D model, an audio file, or an image.

Image credits by Unity 3D documentation

A few important internal assets are listed below:

- Scenes − A container for holding the game objects.

- Animations − Holds gameObject animation data.

- Materials − Defines the appearance of an object.

- Scripts − Business logic applied on various gameObjects.

- Prefabs − Blueprints of GameObjects that can be instantiated at runtime.

To create an internal asset, right-click in the Assets folder, go to Create, and select the required asset.

GameObjects

GameObjects are the building blocks of Unity. A GameObject acts as a container that holds the components of an object, and everything in a scene, such as lights, characters, and UI, is a GameObject. We can create different kinds of game objects by adding or removing components.

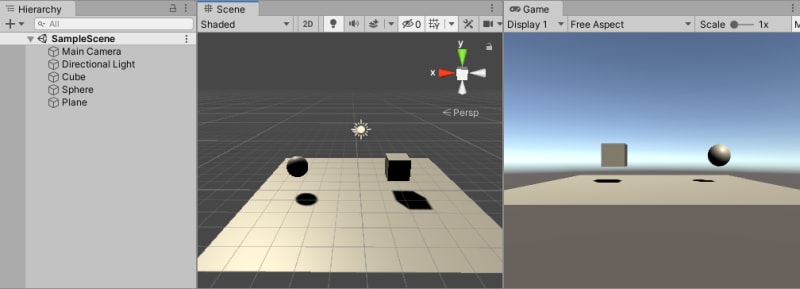

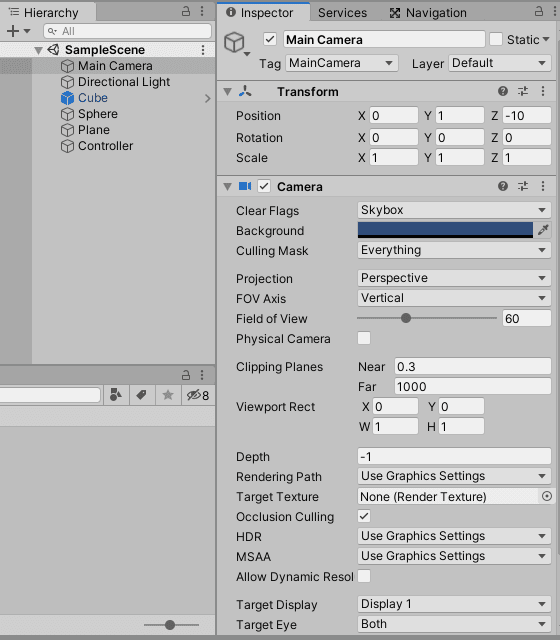

In the figure, you can see the game objects Main Camera, Directional Light, Cube, Sphere, and Plane.

Scene Status properties

Users can modify the status of a game object via script or by using the inspector. A game object has three statuses - active (default), inactive, and static.

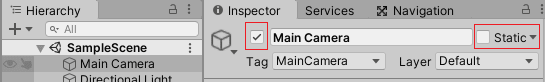

Active and Static Status

By default, a game object is active. You can make it inactive by deselecting the checkbox next to its name in the Inspector, which makes the object invisible; its scripts will no longer receive events such as Update or FixedUpdate. From a script, you can control the state with the GameObject.SetActive method. You can mark a GameObject as static by checking the Static checkbox in the Inspector, which tells Unity that the object will not move at runtime so that data such as lighting can be precomputed for it.
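As a minimal sketch of toggling the active state from a script: the class name ToggleTarget, the target field, and the use of the space key through the legacy Input class are illustrative assumptions, not part of the original article.

```csharp
using UnityEngine;

// Attach this to an object that stays active; an inactive object
// would stop receiving Update and could not reactivate itself.
public class ToggleTarget : MonoBehaviour
{
    // Hypothetical reference, assigned in the Inspector.
    public GameObject target;

    void Update()
    {
        // Flip the active state each time the space key is pressed.
        if (target != null && Input.GetKeyDown(KeyCode.Space))
        {
            target.SetActive(!target.activeSelf);
        }
    }
}
```

The script lives on a separate object on purpose: once target is inactive, none of its own scripts run, so it needs something else to switch it back on.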

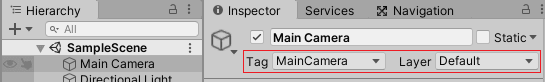

Tags and Layers

Tags are useful for identifying the type of a GameObject. The layers provide an option to include or exclude groups of GameObjects from certain actions such as rendering or physics collisions. You can modify tag and layer values using the GameObject.tag and GameObject.layer properties.
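A short sketch of reading and writing these properties might look like the following. The class name TagLayerExample is hypothetical, and the snippet assumes a tag named "Enemy" has already been defined in the project (assigning an undefined tag raises an error). "Ignore Raycast" is one of Unity's built-in layers.

```csharp
using UnityEngine;

public class TagLayerExample : MonoBehaviour
{
    void Start()
    {
        // Assumes the "Enemy" tag exists in the project's tag list.
        gameObject.tag = "Enemy";

        // Layers are stored as integer indices; look the index up by name.
        gameObject.layer = LayerMask.NameToLayer("Ignore Raycast");

        // CompareTag is the commonly recommended way to test a tag.
        if (gameObject.CompareTag("Enemy"))
        {
            Debug.Log("Tagged as Enemy on layer "
                      + LayerMask.LayerToName(gameObject.layer));
        }
    }
}
```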

Creating and Destroying GameObjects

You can create and destroy GameObjects dynamically. Calling the Instantiate() method at run time creates a copy of the object passed as its argument. Calling the Destroy() method removes an object from the scene.

Object Instantiation

    public GameObject cube;

    void Start() {
        // Create five copies of the object assigned to cube.
        for (int i = 0; i < 5; i++) {
            Instantiate(cube);
        }
    }

Object Destruction

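    void OnCollisionEnter(Collision otherObj) {
        if (otherObj.gameObject.CompareTag("Cube")) {
            // Destroy expects an Object, so pass the colliding GameObject,
            // not the Collision data itself.
            Destroy(otherObj.gameObject, 0.5f);
        }
    }

This code snippet destroys the other object 0.5 seconds after it collides with this GameObject, provided the other object is tagged "Cube".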

Finding GameObjects by Name or Tag

We can locate an individual GameObject by its name using the GameObject.Find() function, or by its tag using GameObject.FindWithTag(). A collection of objects that share a tag can be located using the GameObject.FindGameObjectsWithTag() method.

    GameObject player;
    GameObject bat;
    GameObject[] balls;

    void Start()
    {
        // Find a single object by name.
        player = GameObject.Find("Player1");
        // Find a single object by tag.
        bat = GameObject.FindWithTag("Bat");
        // Find every object that carries the tag.
        balls = GameObject.FindGameObjectsWithTag("Ball");
    }

Finding child GameObjects

The child GameObjects can be located using the parent object's transform component reference and the GetChild() method.

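    GameObject[] balls;

    void Start()
    {
        // Allocate one slot per child before filling the array.
        balls = new GameObject[transform.childCount];
        for (int i = 0; i < transform.childCount; i++)
            balls[i] = transform.GetChild(i).gameObject;
    }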

Linking to GameObjects with variables

The simplest way to find a gameObject is to add a public variable to the script which refers to the gameObject.

    public class Demo : MonoBehaviour
    {
        public GameObject bat;

        // Other variables and functions...
    }

The public variables will be visible in the Inspector. You can assign objects to the variables by dragging the object from the scene or from the Hierarchy panel.

Unity Components

Components are the functional pieces of a GameObject. You can assign a component to a GameObject to add functionality. Transform, Mesh Renderer, Mesh Filter, and Box Collider are some of the components a GameObject can have.

As mentioned earlier, a GameObject is a container that holds different components. All GameObjects by default have a Transform component to define basic transformations such as translation, rotation and scale.

Component Manipulations

Accessing components

The GetComponent() method returns a reference to the required component instance. Once you have that reference, you can change the values of its properties, just as you would in the Inspector panel.

    void Start ()
    {
        Rigidbody rb = GetComponent<Rigidbody>();
        rb.mass = 1f;
        rb.AddForce(Vector3.down * 5f);
    }

In this example, the script gets a reference to the Rigidbody component, sets its mass to 1, and uses AddForce to apply a downward force of magnitude 5.

Add or remove components

You can add or remove components at runtime using the AddComponent and Destroy methods. You can also enable or disable some components via script without destroying them.

To add a component, call AddComponent<Type>, specifying the type of component within angle brackets as shown. To remove a component, use the Object.Destroy method.

    // Adding a component to the current gameObject
    void Start ()
    {
        gameObject.AddComponent<Rigidbody>();
    }

    // Removing a component from the current gameObject
    void Start ()
    {
        Rigidbody rb = GetComponent<Rigidbody>();
        Destroy(rb);
    }
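Disabling a component instead of destroying it can be sketched as below. The class name ToggleComponents is hypothetical. Note that not every component has an enabled flag: Renderer and Collider do, while Rigidbody does not.

```csharp
using UnityEngine;

public class ToggleComponents : MonoBehaviour
{
    void Start()
    {
        // Hide the object visually while keeping the component attached.
        MeshRenderer meshRenderer = GetComponent<MeshRenderer>();
        if (meshRenderer != null)
        {
            meshRenderer.enabled = false;
        }

        // Colliders expose the same enabled flag.
        Collider col = GetComponent<Collider>();
        if (col != null)
        {
            col.enabled = false;
        }
    }
}
```

Setting enabled back to true restores the behaviour with all of the component's settings intact, which is the main advantage over Destroy followed by AddComponent.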

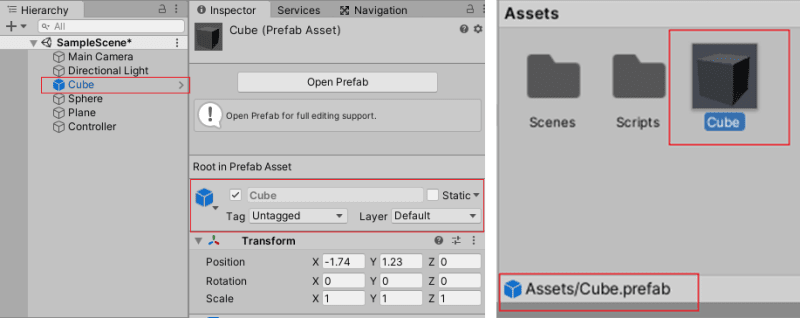

Prefabs

Prefabs are reusable GameObjects: a prefab stores a GameObject along with all of its components and properties as an asset, which can then be shared between scenes. A major benefit of prefabs is that changes applied to the original Prefab are propagated to all of its instances. This is useful for fixing object errors or making material changes in a single place.

A prefab is created automatically when an object is dragged from the Hierarchy into the Project window. Prefab instances are shown in the Hierarchy with blue text and a blue cube icon, and prefab assets have the .prefab extension.
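Once a prefab exists, instances of it can be created from a script. The following is a minimal sketch; the class name PrefabSpawner, the enemyPrefab field, and the spacing values are illustrative assumptions.

```csharp
using UnityEngine;

public class PrefabSpawner : MonoBehaviour
{
    // Hypothetical prefab reference, assigned by dragging a
    // .prefab asset from the Project window onto this field.
    public GameObject enemyPrefab;

    void Start()
    {
        // Spawn three instances spaced two units apart along the X axis.
        for (int i = 0; i < 3; i++)
        {
            Vector3 position = new Vector3(i * 2f, 0f, 0f);
            Instantiate(enemyPrefab, position, Quaternion.identity);
        }
    }
}
```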

Rendering

Camera

A Camera is a component that captures a view of the scene. At least one camera is required in the scene to display the game objects. By default, a newly created scene contains a Main Camera GameObject.

Materials, Shaders and Textures

Materials, Shaders and Textures are the core components to render game objects in a scene.

Materials

Materials define how the surface is to be rendered which includes texture, transparency, color, reflectiveness, etc.

Figure: (1) Material creation, (2) Material location in Assets, (3) Material specifications in the Inspector

Materials act as a container for shaders and textures which can be applied to the model. Customization of Materials depends on the shader that is used in the material.

Shaders

Shaders are small scripts that render graphics data: they take meshes, textures, and other data as input and generate an image as output.

Textures

Textures are bitmap images which can be used with material shaders to calculate the surface color of an object. In addition to the base color (albedo), textures can represent other aspects of the surface such as reflectivity and roughness.
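Material properties can also be changed from a script. This sketch assumes the object has a Renderer whose shader exposes a main color and a main texture, which is true of Unity's Standard shader but not of every shader; the class name TintMaterial and the albedoTexture field are hypothetical.

```csharp
using UnityEngine;

public class TintMaterial : MonoBehaviour
{
    // Optional texture, assigned in the Inspector (hypothetical field).
    public Texture2D albedoTexture;

    void Start()
    {
        Renderer rend = GetComponent<Renderer>();
        if (rend == null)
        {
            return;
        }

        // Accessing .material gives this object its own copy of the
        // material, so other objects sharing the asset are unaffected.
        rend.material.color = Color.red;

        if (albedoTexture != null)
        {
            rend.material.mainTexture = albedoTexture;
        }
    }
}
```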

Lighting

Lighting is useful to create realistic shadows and properly lit environment props. However, we also need to consider the performance of these lighting calculations.

Unity provides three lighting modes that differ in when the lighting is calculated: realtime, mixed, and baked.

- Realtime lighting is calculated every frame, so it can produce dynamic shadows that respond to moving objects. However, it is the most expensive mode to use. It is recommended to keep real-time lighting to a minimum and add it only when necessary.

- Baked lighting allows us to take a "snapshot" of our lighting in the game. The shadows and highlights from the lights in the scene are calculated ahead of time in the editor and stored, rather than being computed at runtime.

- Mixed lighting is a combination of real-time and baked lighting features.

Along with the light modes, you may also want to play around with some of the lighting settings. Go to Window > Rendering > Lighting Settings.

- Disable Real-time Lighting if you are not using any real-time lighting within your scene.

- Select a lighting mode from Shadowmask, Baked Indirect, and Subtractive:

  - Shadowmask provides the best lighting features but is expensive.

  - Baked Indirect is useful for indoor scenes.

  - Subtractive is the most performant and is used for WebGL or mobile platforms.

Lights

Directional Lights

Directional Lights can be thought of as a distant light source which exists infinitely far away like the sun.

Rays from a Directional Light are always parallel to one another, so the shadows it casts look the same everywhere. Because it has no source position, we can place it anywhere in the scene without changing its effect; only its rotation matters. Directional Lights are useful for outdoor scenes.

Point Lights

A Point Light is a point from which light is emitted in all directions. The intensity of the light diminishes from its full value at the center to zero at the light's range. Point Lights are useful for creating effects like electric bulbs, lamps, and similar sources.

Spotlights

Spotlights emit light in the forward (+Z) direction in the shape of a cone. The width of this cone is defined by the light's Spot Angle parameter. The light intensity diminishes along the length of the cone and is lowest at its base.

Spotlights have many useful applications for scene lighting. They can be used to create street lights, wall downlights, or flashlights. These are extremely useful for creating a focus on a character as their area of influence can be precisely controlled.
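Lights can be configured from a script as well as in the Inspector. The following sketch adds a downward-pointing spotlight to the object it is attached to; the class name SpotlightSetup and all numeric values are illustrative assumptions.

```csharp
using UnityEngine;

public class SpotlightSetup : MonoBehaviour
{
    void Start()
    {
        // Add a Light component and configure it as a spotlight.
        Light spot = gameObject.AddComponent<Light>();
        spot.type = LightType.Spot;
        spot.spotAngle = 30f;   // width of the cone, in degrees
        spot.range = 10f;       // distance at which the light fades out
        spot.intensity = 2f;
        spot.color = Color.white;

        // Spotlights shine along +Z, so rotate 90 degrees about X
        // to aim the cone straight down.
        transform.rotation = Quaternion.Euler(90f, 0f, 0f);
    }
}
```

Changing spot.type to LightType.Point or LightType.Directional gives the other light types described above; spotAngle only applies to spotlights.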

Physics

Physics enables objects to respond to forces that exist in the real world, such as gravity, and to move with realistic velocity and acceleration. Unity Physics is a combination of a deterministic rigid body dynamics system and a spatial query system. It is written from scratch using the Unity data-oriented tech stack.

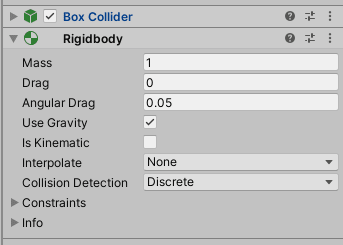

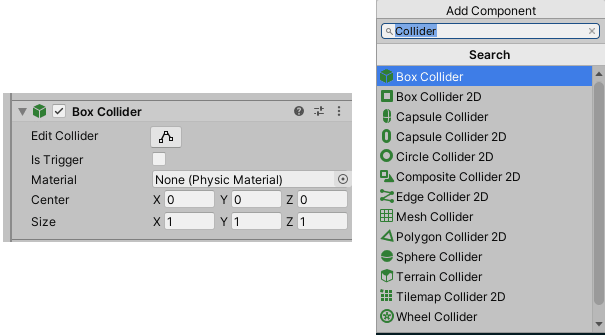

Colliders

Colliders are the components used to detect collisions when GameObjects collide with each other. For collision events to be raised, at least one of the colliding GameObjects must also have a Rigidbody component.

Collider types: Box Collider, Capsule Collider, Mesh Collider, Sphere Collider, Terrain Collider, and Wheel Collider.

To add physics events to a GameObject, select the Add Component button in the Inspector window, select Physics, and specify the type of Collider.

Triggers

A collider becomes a trigger when its Is Trigger checkbox is selected. A trigger does not produce a physical collision response, so other objects pass through it. The events OnTriggerEnter and OnTriggerExit are called when another collider enters or exits the trigger.
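A minimal sketch of handling trigger events is shown below. The class name PickupZone is hypothetical, and the snippet assumes the entering object carries Unity's built-in "Player" tag and that at least one of the two objects has a Rigidbody.

```csharp
using UnityEngine;

// Attach to a GameObject whose collider has Is Trigger checked.
public class PickupZone : MonoBehaviour
{
    // Trigger callbacks receive the other Collider, not a Collision.
    void OnTriggerEnter(Collider other)
    {
        if (other.CompareTag("Player"))
        {
            Debug.Log(other.name + " entered the zone");
        }
    }

    void OnTriggerExit(Collider other)
    {
        if (other.CompareTag("Player"))
        {
            Debug.Log(other.name + " left the zone");
        }
    }
}
```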

Rigidbody

We can control a character in two ways using the physics engine.

In the Rigidbody approach, the character behaves like a regular physics object and is controlled indirectly by applying forces or by changing the velocity.

In the Kinematic approach, the character is controlled directly and only queries the physics engine to perform custom collision detection.

Adding a Rigidbody component is enough to turn a GameObject into a physics object. As a best practice, also attach one of the colliders to the GameObject so that it can collide with other objects.
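The two approaches can be contrasted in a short sketch. The class name MoveExample and the useKinematic and speed fields are illustrative assumptions; a real character controller would read player input rather than always moving forward.

```csharp
using UnityEngine;

[RequireComponent(typeof(Rigidbody))]
public class MoveExample : MonoBehaviour
{
    // Hypothetical settings, adjustable in the Inspector.
    public bool useKinematic = false;
    public float speed = 3f;

    Rigidbody rb;

    void Start()
    {
        rb = GetComponent<Rigidbody>();
        // A kinematic body ignores forces and gravity and moves
        // only when the script tells it to.
        rb.isKinematic = useKinematic;
    }

    // Physics code belongs in FixedUpdate, which runs on the physics step.
    void FixedUpdate()
    {
        if (useKinematic)
        {
            // Kinematic approach: set the position directly.
            Vector3 step = Vector3.forward * speed * Time.fixedDeltaTime;
            rb.MovePosition(rb.position + step);
        }
        else
        {
            // Rigidbody approach: push the body and let physics move it.
            rb.AddForce(Vector3.forward * speed);
        }
    }
}
```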

Scripting

These are the physics events and Rigidbody members commonly used for detecting and responding to collisions.

- OnCollisionEnter(Collision) is called when a collision is registered.

    void OnCollisionEnter(Collision collision) {
        if (collision.gameObject.CompareTag("Ball")) {
            // Hit the ball
        }
    }

- OnCollisionStay(Collision) is called during a collision.

- OnCollisionExit(Collision) is called when a collision has stopped.

- useGravity is used to enable or disable gravity.

- AddForce() is used to add a force in a particular direction. Eg: rigidBody.AddForce(Vector3.up)

Animation

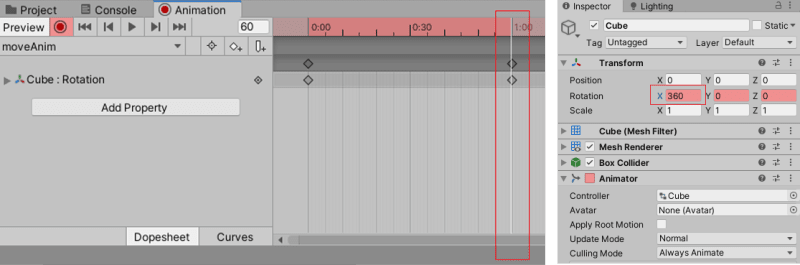

It is easy to create an animation in Unity, thanks to Animator Controllers and the Animation window. Unity uses Animator Controllers to decide which animations to play and when to play them. The Animator component plays the animations back.

It is quite simple to interact with an animation from a script. First, assign the animation clips to the animation component. Then get a reference to the Animator component in the script, either via the GetComponent method or by exposing a public variable.

Finally, set its enabled property to true to enable the animation and to false to disable it.
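The steps above can be sketched as follows. The class name AnimationToggle and the SetPlaying method are hypothetical; the snippet assumes an Animator component is attached to the same GameObject.

```csharp
using UnityEngine;

public class AnimationToggle : MonoBehaviour
{
    Animator animator;

    void Start()
    {
        // Get the Animator attached to this GameObject.
        animator = GetComponent<Animator>();
    }

    // Call with true to play the animation and false to stop it.
    public void SetPlaying(bool playing)
    {
        if (animator != null)
        {
            animator.enabled = playing;
        }
    }
}
```

SetPlaying is public so that it can be called from other scripts or wired to a UI button's OnClick event in the Inspector.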

To create an animation on a GameObject:

- First, select the object in the Hierarchy and press Ctrl + 6 or go to Window → Animation → Animation.

- Click on Create. A window will pop up asking you to specify the file name. Name it as you like; here it is rotateAnim.

- In the Animation window, click the record button and then the Add Property button. Choose Transform → Rotation.

- Move the playhead to frame 60 and change the X-axis rotation of the cube from 0 to 360 degrees.

- Play the game. Your cube will complete one rotation about the X axis every second.

Thanks for reading this article.

Thanks Gowri M Bhatt for reviewing the content.

To get the article in pdf format: unity3d-fundamentals.pdf


If you are interested in further exploring, here are some resources I found helpful along the way:

Unity 3D C# scripting cheatsheet for beginners

Originally posted on Medium: Unity3D Fundamentals
