Why is replicability essential?

Catriel Lopez

Posted on January 5, 2021

Table of Contents

- Introduction

- Quality control in science

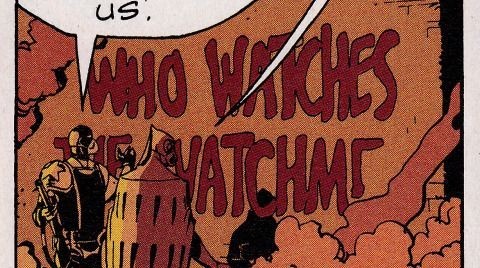

- Who Watches the Watchmen? A broken peer review

- Our work

- References

Introduction

Reproducibility of results is imperative for a sound research project. Replicating experiments is an essential part of scientific research, in which significant theories are built on reproducible results (Staddon et al. 2017), so failing to provide such results goes against the very basis of what we are doing. Replication means that, after a study is published, other scientists can conduct the study independently and reach roughly the same conclusions. If a study’s results can be consistently replicated by other scientists, the study is more likely to be valid.

In academic environments, reproducibility is often overlooked, and scientists don't put enough effort into establishing the necessary environments and workflows early in the project life cycle. Readers who want to reproduce the results are often expected to figure things out themselves from the minimal information in the methods section.

Quality control in science

As we said, readers who want to reproduce the results are often expected to figure things out themselves from the minimal information in the methods section. Why is that? Among others, we found three causes mentioned in the literature:

Bias

Fierce competition, strong incentives to publish, and commercial interests have inadvertently led to both conscious and unconscious bias in the scientific literature. The higher the vested interest in a field, the stronger the bias is likely to be. Two major causes of low reproducibility are publication bias (the outcome of an experiment or research study influences the decision whether to publish or otherwise distribute it) and selection bias (the sample obtained is not representative of the population intended to be analyzed).

One cause of the lack of replicability is that many scientific journals have historically had explicit policies against publishing replication studies (Mahoney 1985). Over 70% of editors from 79 social science journals said they preferred new studies over replications, and over 90% said they did not encourage the submission of replication studies (Neuliep & Crandall 1990).
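To make publication bias concrete, here is a minimal simulation sketch in Python. Everything in it is an assumption chosen for illustration, not something taken from the literature cited here: the true effect of 0.2, the noise with standard deviation 1, the 20 observations per study, and the rule that only studies with z > 1.96 get "published". The function name `simulate_publication_bias` is hypothetical.

```python
import random
import statistics


def simulate_publication_bias(n_studies=1000, n_per_study=20, true_effect=0.2, seed=1):
    """Simulate many small studies and 'publish' only the significant ones.

    Returns (mean of all estimates, mean of published estimates, number published).
    Raises statistics.StatisticsError if no study passes the publication rule.
    """
    rng = random.Random(seed)  # fixed seed so the simulation itself is reproducible
    standard_error = 1.0 / n_per_study ** 0.5  # assumes the noise sd is known to be 1

    all_estimates = []
    published = []
    for _ in range(n_studies):
        sample = [rng.gauss(true_effect, 1.0) for _ in range(n_per_study)]
        estimate = statistics.fmean(sample)
        all_estimates.append(estimate)
        # Assumed publication rule: only "significant" positive results appear in print.
        if estimate / standard_error > 1.96:
            published.append(estimate)

    return statistics.fmean(all_estimates), statistics.fmean(published), len(published)


if __name__ == "__main__":
    mean_all, mean_published, n_published = simulate_publication_bias()
    print(f"all studies:       mean effect {mean_all:.2f}")
    print(f"published studies: mean effect {mean_published:.2f} ({n_published} studies)")
```

Under these assumptions the average over all studies stays close to the true effect, while the average over the published subset is inflated. A replication that honestly measures the true effect will then look like a "failure" next to the published record.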

Lost data

A vast amount of data is lost simply because we don’t know how to access it or because it is not free. As Editor-in-Chief of "Molecular Brain", Tsuyoshi Miyakawa has handled hundreds of manuscripts and has made countless editorial decisions requesting that the authors provide raw data. In his article "No raw data, no science: another possible source of the reproducibility crisis", Miyakawa details how simply asking for raw data led several authors to withdraw their submissions.

Flowchart of the manuscripts handled by Tsuyoshi Miyakawa in Molecular Brain from December 2017 to September 2019

"With regard to the manuscripts that the authors withdrew independently, there are possible reasons for withdrawing after being asked to provide raw data.

One possible reason is that although they actually had the raw data, the authors were not willing to gather all the raw data and upload them. It’s also possible that authors did not disclose raw data which they could use as an exclusive source for data mining to publish additional papers later. Another possible reason is that they chose journals where the disclosure of raw data is not required at the time of publication. However, the “data mining” hypothesis is unlikely for many of the authors in the cases considered here, since most of the rejected manuscripts did not contain big data that are suitable for data mining, as most of the requested data prior to peer review were images for western blotting or for tissue staining.

Note that I asked not only for raw data but also for absolute p-values and corrections for multiple statistical tests; therefore, the possibility cannot be excluded that some of them did not wish to provide absolute p-values or to conduct corrections for multiple tests, though I do not think that these can be the primary reasons for the withdrawal. As for the ones that I rejected, it is technically possible that the insufficiency or mismatch between raw data and results are honest and careless mistakes."

Although he cannot verify why the authors decided to withdraw their work, he proposes that a lack of raw data, or data fabrication, is another possible cause of irreproducibility.

Fraud!

Fraud and questionable practices (excluding studies that didn't work, gathering more data than needed, rounding errors, etc.) obviously affect the reproducibility of a study. If the work is wrong, how can you get the same results without committing fraud yourself?

Who Watches the Watchmen? A broken peer review

After all, who's responsible for verifying other people's work? In "Scientific Knowledge and Its Social Problems", author Jerome Ravetz states that it wouldn't be feasible to erect a formal system of categories of quality for a piece of work, and train a corps of experts as assessors to operate in their framework, "for the techniques are so subtle, the appropriate criteria of adequacy and value so specialized, and the materials so rapidly changing, that any fixed and formalized categories would be a blunt and obsolete instrument as soon as it were brought in to use".

As a result, if there are to be truly expert assessments of the quality of work, they must be made by a section of those who are actually engaged in that work. In itself, this provides no guarantee whatsoever that proper assessments of quality will be made, or that they will be used appropriately for the maintenance of quality.

Our work

As we continue working on our graduate thesis, we have accumulated a suite of scripts that need organizing and cleaning. A basic R package with a good project layout will not only make our lives easier, but also allow us to tackle our reproducibility problem. It also helps ensure the integrity of our data, makes it simpler to share our code with someone else, lets us easily upload our code with our manuscript submission, and makes it easier to pick the project back up after a break.

This follows from several tips that we have to keep in mind:

- For every result, keep track of how it was produced: we avoid manual changes to the data, so everything must be modified through a script to ensure that every change is recorded.

- Version control all scripts: we are currently using Git and a private repository on GitHub.

- For analyses that include randomness, note underlying random seeds.

- Always store raw data.

- Connect textual statements to underlying results: we are currently using R Markdown files to write about our development and the changes we make to the data.

- Provide public access to scripts, runs, and results: for now, our work and data are private, but that has to change as soon as we are done!
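Three of these tips (note the random seed, always store raw data, keep track of how each result was produced) can be sketched in a few lines. The example below is a minimal illustration written in Python, not code taken from our R scripts. The file names, the function names `sha256_of`, `run_analysis` and `demo`, and the bootstrap analysis itself are all hypothetical stand-ins.

```python
import hashlib
import json
import random
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_analysis(raw_path: Path, seed: int, n_resamples: int = 200) -> dict:
    """Bootstrap the mean of the raw values and return it with its provenance."""
    values = [float(token) for token in raw_path.read_text().split()]
    rng = random.Random(seed)  # local generator: the seed fully determines the draws
    means = []
    for _ in range(n_resamples):
        sample = rng.choices(values, k=len(values))  # resample with replacement
        means.append(sum(sample) / len(sample))
    return {
        "bootstrap_mean": sum(means) / len(means),
        "seed": seed,                         # tip: note the underlying random seed
        "n_resamples": n_resamples,
        "raw_file": raw_path.name,
        "raw_sha256": sha256_of(raw_path),    # tip: detect any change to the raw data
    }


def demo() -> tuple:
    """Run the analysis twice on a throwaway raw file and save one record as JSON."""
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp) / "measurements.txt"  # hypothetical raw data file
        raw.write_text("4.1\n3.9\n5.2\n4.7\n4.4\n")
        first = run_analysis(raw, seed=2021)
        second = run_analysis(raw, seed=2021)
        record_path = Path(tmp) / "run_record.json"  # tip: keep track of how it was produced
        record_path.write_text(json.dumps(first, indent=2))
        saved = json.loads(record_path.read_text())
    return first, second, saved


if __name__ == "__main__":
    first, second, saved = demo()
    print("same seed, same result:", first == second)
```

The record stored next to each result holds the seed, the parameters and a checksum of the raw file, so a later run can confirm that it started from the same data. In R, the seed part of this corresponds to calling `set.seed()` at the top of the script.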

References

Hard Problems in Data Science: Replication

10 Rules for Creating Reproducible Results in Data Science

No raw data, no science: another possible source of the reproducibility crisis

What Can Be Done to Fix the Replication Crisis in Science?

Special Section on Replicability in Psychological Science: A Crisis of Confidence?

Original cover photo by Brandable Box on Unsplash
