Common API mistakes and how to avoid them

Brian Neville-O'Neill

Posted on July 8, 2019

The advice in this article applies to any API. However, some of the issues we’ll consider are easier to encounter when the application is written in a dynamic language, such as JavaScript, versus a more static language, such as Java.

Node.js is sometimes described as the glue that holds a service-oriented architecture together, because it makes it easy to communicate with multiple backend services and stitch the results together. For these reasons, the examples we’ll look at are written in Node.js-flavored JavaScript.

Be stingy with data

When encountering an object to be used in an API response it’s far too easy to deliver every property of the object. In fact, it’s usually easier to send the entire object, unmodified, than to decide which properties to add or remove. Consider the situation where you have a user from a social media platform. Perhaps within your application, the object resembles the following:

{

"id": 10,

"name": "Thomas Hunter II",

"username": "tlhunter",

"friend_count": 1337,

"avatar": "https://example.org/tlhunter.jpg",

"updated": "2018-12-24T21:13:22.933Z",

"hometown": "Ann Arbor, MI"

}

Assume you’re building an API and you’ve been specifically asked to provide the identifier of a user, their username, their human-readable name, and their avatar. However, delivering the complete object to the consumer of an API is very straightforward as one could simply do the following:

res.send(user);

Whereas sending strictly the requested user properties would look like this:

res.send({

id: user.id,

name: user.name,

username: user.username,

avatar: user.avatar

});

It’s even trivial to justify this decision. “Heck, we already have the data, someone might need it, let’s just pass it along!” This philosophy will get you into a world of hurt in the future.

First, consider the storage format used for this data, and think of how easy it is to get the data today and how that might change tomorrow. Perhaps our data is entirely stored in a single SQL database. The data needed to respond with this User object can be retrieved with a single query containing a subquery. Perhaps it looks something like this:

SELECT * FROM users,

(SELECT COUNT(*) AS friend_count FROM user_friends WHERE id = 10)

AS friend_count

WHERE id = 10 LIMIT 1;

Then one day we upgrade the storage mechanisms of our application. Friendships may be moved to a separate graph database. The last updated time might be kept in an ephemeral, in-memory database. The data we originally decided to offer to the consumer, because it was easy to access, has become very difficult to access. The singular, efficient query must now be replaced by three queries to different systems.

One should always look at the business requirements and determine the absolute minimum amount of data that satisfies them. What does the consumer of the API _really_ need?

Perhaps nobody who consumes this API actually needed the friend_count and updated fields. But, as soon as a field has been offered in an API response, somebody is going to use it for something. Once this happens you need to support the field forever.

This is such an important concept in programming that it even has a name: You Aren’t Gonna Need It (YAGNI). Always be stingy with the data you’re sending. A solution to this issue, as well as other issues, can be implemented by representing data with well-defined objects.
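
One way to make stinginess the default is an explicit allowlist, so that a new property on the internal object never reaches consumers by accident. The following is a minimal sketch; the pick helper and the PUBLIC_USER_FIELDS constant are hypothetical names used for illustration, not part of any library.

```javascript
// Hypothetical allowlist of the fields the consumer actually asked for.
const PUBLIC_USER_FIELDS = ['id', 'name', 'username', 'avatar'];

// Copy only the allowlisted properties onto a new object.
function pick(source, fields) {
  const result = {};
  for (const field of fields) {
    if (Object.prototype.hasOwnProperty.call(source, field)) {
      result[field] = source[field];
    }
  }
  return result;
}

// The internal user object from the example above.
const user = {
  id: 10,
  name: 'Thomas Hunter II',
  username: 'tlhunter',
  friend_count: 1337,
  avatar: 'https://example.org/tlhunter.jpg',
  updated: '2018-12-24T21:13:22.933Z',
  hometown: 'Ann Arbor, MI'
};

// Only id, name, username, and avatar survive.
const publicUser = pick(user, PUBLIC_USER_FIELDS);
```

In a request handler this would be used as res.send(pick(user, PUBLIC_USER_FIELDS)), which keeps the one-line convenience without exposing friend_count, updated, or hometown.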

Represent upstream data as well-defined objects

By representing data as well-defined objects, i.e. creating a JavaScript class out of them, we can avoid a few issues when designing APIs. This is something that many languages take for granted — taking data from one system, and hydrating it into a class instance is mandatory. With JavaScript, and particularly Node.js, this step is usually skipped.

Consider this simple example where a Node.js API retrieves data from another service and passes through in a response:

const request = require('request-promise');

const user = await request('https://api.github.com/users/tlhunter');

res.send(user);

What properties are being relayed? The simple answer is all of them, no matter what they could be. What happens if one of the properties we retrieved is of the wrong type? Or if it is vital for the consumer but the property is missing? By blindly sending the attributes along, our API has no control over what is received by the consumer of the service. When we request data from an upstream service and convert it into an object, usually by using JSON.parse(), we’ve now created a POJO (Plain Old JavaScript Object). Such an object is both convenient and risky.

Instead, let’s represent these objects as a DO (Domain Object). These objects will demand that we apply some structure to the objects we’ve retrieved. They can also be used to enforce that properties exist and are of the right type, otherwise, the API can fail the request. Such a domain object for our above User might look something like this:

class User {

constructor(user) {

this.login = String(user.login);

this.id = Number(user.id);

this.avatar = String(user.avatar_url);

this.url = String(user.html_url);

this.followers = Number(user.followers);

// Don't pass along

this.privateGists = Number(user.private_gists);

// Check the raw input: String(undefined) yields the truthy string "undefined"

if (!user.login || !user.id || !user.avatar_url || !user.html_url) {

throw new TypeError("User Object missing required fields");

}

}

toJSON() {

return {

login: this.login,

id: this.id,

avatar: this.avatar,

url: this.url,

followers: this.followers

};

}

}

This class simply extracts properties from an incoming object, converts the data into the expected type, and throws an error if data is missing. If we were to keep an instance of the User DO in memory, instead of the entire upstream POJO representation, we would consume less RAM. The toJSON() method is called when an object is converted into JSON and allows us to still use the simple res.send(user) syntax. By throwing an error early we know that the data we’re passing around is always well-formed. If the upstream service is internal to our organization, and it decides to provide a user’s email address in a field, then our API wouldn’t accidentally leak that email address to the public.
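
To see these properties in action, here is a trimmed-down sketch of such a domain object together with a made-up upstream payload. The payload, including its email field, is an assumption for illustration and does not reflect the real GitHub response.

```javascript
// Trimmed-down variant of the User domain object, for illustration only.
class User {
  constructor(upstream) {
    // Validate the raw values first: String(undefined) is the truthy 'undefined'.
    if (!upstream.login || !upstream.id) {
      throw new TypeError('User Object missing required fields');
    }
    this.login = String(upstream.login);
    this.id = Number(upstream.id);
    this.privateGists = Number(upstream.private_gists); // kept internal
  }

  // Instance method: JSON.stringify() calls it automatically.
  toJSON() {
    return { login: this.login, id: this.id };
  }
}

// Hypothetical upstream payload that contains more than we want to expose.
const upstream = {
  login: 'tlhunter',
  id: '42',
  private_gists: 3,
  email: 'someone@example.org'
};

// Neither email nor privateGists appears in the serialized output.
const serialized = JSON.stringify(new User(upstream));

// Incomplete upstream data is rejected before it can travel any further.
let rejected = false;
try {
  new User({ id: 42 }); // login is missing
} catch (err) {
  rejected = err instanceof TypeError;
}
```

Note that toJSON() has to be an instance method for this to work; JSON.stringify() does not look at static methods on the class.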

Be sure to use the same Domain Objects throughout your API responses. For example, your API might respond with a top-level User object when making a request for a specific user, as well as an array of User objects when requesting a list of friends. By using the same Domain Object in both situations the consumer of the service can consistently deserialize your data into their own internal representation.

By representing upstream data internally as a Domain Object we can both circumvent a few bugs and provide a more consistent API.

Use forward compatible attribute naming

When naming attributes of objects in your API responses be sure to name them in such a manner that they’re going to be forward compatible with any updates you’re planning on making in the future. One of the worst things we can do to an API is to release a backwards-breaking change. As a rule of thumb, adding new fields to an object doesn’t break compatibility. Clients can simply choose to ignore new fields. Changing the type, or removing a field, will break clients and must be avoided.

Consider our User example again. Perhaps today our application simply provides information about a location with a simple City, State string. But, we know that we want to update our service to provide richer information about locations. If we name the attribute hometown, and only store a string of information, then we won’t be able to easily insert the richer information in a future release. To be forward compatible we can do one of two things.

The first option is more likely to violate YAGNI. We can provide an attribute on the User called hometown. It can be an object with the properties city and municipality. It might feel like we’ve complicated things a bit early though, especially if these end up being the only location attributes we ever support. This document might look something like this:

{

"name": "Thomas Hunter II",

"username": "tlhunter",

"hometown": {

"city": "Ann Arbor",

"municipality": "MI"

}

}

The second option is less likely to violate the YAGNI principle. In this situation, we can use the attribute name of hometown_name. Then, in a future update, we can provide an object called hometown which contains the richer information. This is nice because we maintain backwards compatibility. If the company pivots and decides to never provide the richer information then we were never stuck with an annoying hometown object. However, we are forever stuck with both a hometown_name and a hometown attribute, with the consumer stuck figuring out which to use:

{

"name": "Thomas Hunter II",

"username": "tlhunter",

"hometown_name": "Ann Arbor, MI",

"hometown": {

"city": "Ann Arbor",

"municipality": "MI",

"country": "US",

"latitude": 42.279438,

"longitude": -83.7458985

}

}

Neither option is perfect and many popular APIs follow one or the other approach.

Normalize concepts and attributes

As I mentioned earlier, Node.js contributes to many enterprises by being the glue which holds services together. The speed at which Node.js applications can be written and deployed is unrivaled.

A common pattern is that a large company will have multiple services deep in their infrastructure, such as a Java search application and a C# service with data backed in SQL. Then, the frontend engineers come along and they need data from both services combined in a single HTTP request so that their mobile app remains fast. But we can’t just ask the C# or Java team to build a service just for the frontend developers. Such a process would be slow and outside of the responsibilities of the upstream teams. This is when Node.js comes to the rescue. A frontend engineer can fairly easily build a service which consumes data from both systems and combines it into a single response.

When building a service that combines data from multiple services — an API Facade — we need to expose an API which is consistent within itself and consistent when compared to well-known “nouns” employed by the other services.

As an example, perhaps the Java service uses camelCase and the C# service uses PascalCase. Building an API that responds with a mixture of the two cases would result in a very frustrating developer experience. Anyone using the service would need to constantly refer to the documentation for each endpoint. Each casing system, even snake_case, is completely fine on its own. You need only pick one and stick to it.
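
As a rough sketch of how a facade might enforce a single casing, the helper below rewrites every key of a plain JSON-style value to snake_case. The function names and the sample payloads are assumptions for illustration; the sketch only handles plain objects, arrays, and primitives, so values such as Date instances would need special treatment.

```javascript
// Convert a camelCase or PascalCase key to snake_case.
function toSnakeCase(key) {
  return key
    .replace(/([a-z0-9])([A-Z])/g, '$1_$2') // friendCount -> friend_Count
    .replace(/([A-Z]+)([A-Z][a-z])/g, '$1_$2') // HTTPServer -> HTTP_Server
    .toLowerCase();
}

// Recursively rewrite the keys of plain objects and arrays.
function normalizeKeys(value) {
  if (Array.isArray(value)) {
    return value.map(normalizeKeys);
  }
  if (value !== null && typeof value === 'object') {
    const result = {};
    for (const [key, inner] of Object.entries(value)) {
      result[toSnakeCase(key)] = normalizeKeys(inner);
    }
    return result;
  }
  return value;
}

// Hypothetical payloads from the two upstream services.
const fromJava = { companyName: 'Example Corp', employeeCount: 12 };
const fromCSharp = { CompanyName: 'Example Corp', Address: { PostalCode: '48104' } };

const normalizedJava = normalizeKeys(fromJava);
const normalizedCSharp = normalizeKeys(fromCSharp);
```

Both results now use company_name, so the consumer sees one convention regardless of which service supplied the data.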

Another issue that can happen is that different services use different nouns to refer to data. As another example, the Java service might refer to an entity as a company while the C# service might refer to it as an organization. When this happens try to determine whichever noun is more “correct”. Perhaps you’re building an API for public consumption and all user-facing documentation refers to the entity as an organization. In that case, it’s easy to choose the name. Other times you’ll need to meet with other teams and form a consensus.

It’s also important to normalize types. For example, if you’re consuming data from a MongoDB service you might be stuck with hexadecimal ObjectID types. When consuming data from SQL you might be left with integers that could potentially get very large. It’s usually safest to refer to all identifiers as strings. In these situations, it doesn’t matter so much if the underlying data is a hexadecimal “54482E” or base64 “VEg=” representation of binary or a number represented as a string like “13”. As long as the type used by the consumer is always a string they’ll be happy.
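
A minimal sketch of identifier normalization might look like the following. The normalizeId name is hypothetical, and the object passed in at the end is only a stand-in that mimics the toHexString() method exposed by MongoDB ObjectID values.

```javascript
// Normalize an identifier from any upstream into a string.
function normalizeId(id) {
  if (typeof id === 'string' && id.length > 0) {
    return id;
  }
  if (typeof id === 'bigint') {
    return id.toString();
  }
  // Reject numbers that have already lost precision or are not integers.
  if (typeof id === 'number' && Number.isSafeInteger(id)) {
    return String(id);
  }
  // Duck-typed check for ObjectID-like values.
  if (id !== null && typeof id === 'object' && typeof id.toHexString === 'function') {
    return id.toHexString();
  }
  throw new TypeError('Unsupported identifier: ' + String(id));
}

// Stand-in for an ObjectID, used only for this example.
const fakeObjectId = { toHexString: () => '5d2349c2a1b2c3d4e5f60718' };

const ids = [
  normalizeId(13),
  normalizeId('54482E'),
  normalizeId(9007199254740993n),
  normalizeId(fakeObjectId)
];
```

Large SQL integers are the main reason to insist on strings: a JSON number above Number.MAX_SAFE_INTEGER is silently rounded by many JavaScript consumers, whereas a string arrives intact.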

Use positive, “happy” names

Have you ever used an API where they mix both “positive” and “negative” attribute names? Examples of negative fields include disable_notification or hidden: false. Their positive opposites are enable_notification or visible: true. Normally I recommend picking either approach and using it consistently. But, when it comes to attribute names, I have to always recommend the positive variants.

The reason is that it’s easy as a developer to get confused by double negatives. For example, glance at the following attribute and try to time how long it takes to understand what it means: unavailable: false. I’m willing to bet it’s much quicker for you to comprehend available: true. Here are some examples of “negative” attributes to avoid: broken, taken, secret, debt. Here are their corresponding “positive” attributes: functional, free, public, credit.
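
When an upstream service already uses negative flags, the facade can invert them before responding. This is only a sketch; the record shape and the toPublicItem name are made up for illustration.

```javascript
// Hypothetical upstream record that uses negative flags.
const upstreamItem = { id: '13', unavailable: false, hidden: true };

// Expose positive equivalents by inverting each negative flag.
function toPublicItem(record) {
  return {
    id: record.id,
    available: !record.unavailable,
    visible: !record.hidden
  };
}

const item = toPublicItem(upstreamItem);
```

Here item.available is true and item.visible is false, so the consumer never has to reason about a double negative.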

There is a caveat to this, however. Depending on how a product is marketed it may be necessary to choose negative names in situations where the reference is well-understood. Consider a service which allows a user to post status updates. Traditionally this service has only had status updates visible by everyone but then recently introduced the concept of private status updates. The word public is the positive version and private is the negative.

However, all the marketing material refers to the status posts as private. In this situation, adding a public: false field to the status update API would be confusing to consumers of the service; they would instead expect the private: true attribute. The rare negative attribute name is acceptable only when API consumers expect it to be named as such.

Apply the Robustness Principle

Be sure to follow the Robustness Principle wherever it may apply to your API. Quoting from Wikipedia, this principle is:

Be conservative in what you do, be liberal in what you accept from others.

The most obvious application of this principle is in regard to HTTP headers. By convention, HTTP/1.1 header names capitalize the first letter of each word and separate words with hyphens, as in Content-Type. The HTTP specification treats header names as case-insensitive, however, so any capitalization, such as content-TYPE, is technically acceptable.

The first half of the Robustness principle is to be conservative in what you do. That means you should always respond to the client using the preferred header casing. You can’t know, for certain, that the consumer of your API is able to properly read both nicely formatted and sloppily formatted headers. And an API should be usable by as many different consumers as possible.

The second half of the principle is to be liberal in what you accept from others. This means that, in the case of HTTP headers, you should normalize each incoming header into a consistent format so that you can read the intended values regardless of casing.
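
Both halves can be sketched in a few lines. The two helper names below are hypothetical. Node’s built-in http module already lowercases incoming header names on req.headers, so the first helper mostly matters when headers arrive from some other source.

```javascript
// Liberal in what we accept: build a lookup keyed by lowercase header name.
function normalizeHeaders(rawHeaders) {
  const normalized = {};
  for (const [name, value] of Object.entries(rawHeaders)) {
    normalized[name.toLowerCase()] = value;
  }
  return normalized;
}

// Conservative in what we send: emit the conventional capitalization.
function toConventionalCase(name) {
  return name
    .split('-')
    .map(word => word.charAt(0).toUpperCase() + word.slice(1).toLowerCase())
    .join('-');
}

// A sloppily cased incoming header can still be read reliably.
const incoming = normalizeHeaders({ 'content-TYPE': 'application/json' });
const contentType = incoming['content-type'];

// Outgoing header names are written the conventional way.
const outgoingName = toConventionalCase('content-TYPE');
```

The simple capitalization rule does not cover every header (ETag, for example, is conventionally written with a capital T), so a real service would likely rely on its framework for outgoing names.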

Whenever possible, as long as there is no introduction of ambiguity, even consider supporting the Robustness Principle with the internals of your API as well. For example, if you expect your API to receive a username property, and you receive a Username property, is there really any harm in accepting the incorrect case? There actually might be! If we accept both Username and username, what do we do when we receive both? HTTP headers do have defined semantics for handling duplicate header entries. JSON, however, does not. Accepting both casings for username might result in hard-to-debug errors.

What should an API do if it receives an attribute of the wrong type, such as a string when a number was expected? Perhaps this isn’t as big of a deal, especially if the provided string is numeric. For example, if your API accepts a numeric width argument, and receives a string of “640”, then it is hard to imagine any ambiguity in this situation. Deciding which fields to coerce from one type to another is a bit of a judgement call. Be sure to document situations where you perform such type coercion.
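
A conservative form of this coercion accepts only strings that are unambiguously integers and rejects everything else. The coerceInteger helper below is a sketch under that assumption, not a recommendation for every field.

```javascript
// Accept an integer, or a string made up only of digits (optionally signed).
function coerceInteger(value, name) {
  if (typeof value === 'number' && Number.isInteger(value)) {
    return value;
  }
  if (typeof value === 'string' && /^-?\d+$/.test(value)) {
    return Number(value);
  }
  throw new TypeError(`${name} must be an integer or a numeric string`);
}

// The unambiguous case from the text: the string "640" becomes the number 640.
const width = coerceInteger('640', 'width');

// Anything ambiguous is rejected rather than guessed at.
let rejectedAmbiguous = false;
try {
  coerceInteger('640px', 'width');
} catch (err) {
  rejectedAmbiguous = err instanceof TypeError;
}
```

Using a strict pattern instead of parseInt() is a deliberate choice here: parseInt('640px') would quietly return 640, which is exactly the kind of guess that leads to hard-to-debug behavior.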

Test all error conditions

When a consumer communicates with a service it expects consistently-formatted responses for all requests. For example, if the consumer regularly transmits and receives JSON, then it’s reasonable to expect that the consumer will take any response that it receives and will parse the content as if it were JSON. If, when an error occurs, the response isn’t formatted as JSON, then this will break the consumer. There are all sorts of interesting edge-cases which need to be tested to prevent this from happening.

Consider a Node.js application written using Express. If the application throws an error within a request handler, then the default Express error handler may respond with a non-JSON body, such as an HTML or plain-text page which contains a stack trace. We’ve now broken the consumer’s JSON parser. This can usually be prevented by writing a middleware which converts any caught errors into nicely formatted JSON responses:

app.get('/', (req, res) => {

res.json({

error: false, // expected JSON response

data: 'Hello World!'

});

});

app.get('/trigger-error', (req, res) => {

// without an error handler this returns a non-JSON stack trace

throw new Error('oh no something broke');

});

// generic error handler middleware

app.use((err, req, res, next) => {

console.log(err.stack); // log the error

res.status(500).json({

error: err.message // respond with JSON error

});

});

If possible, create acceptance tests which invoke various errors and test the responses. Create a secret endpoint in your application which throws an error. Try to upload a file which is too large, send a payload with an incorrect type, send malformed JSON requests, etc. If your API doesn’t use JSON over HTTP, such as a gRPC service, then, of course, an equivalent testing approach will need to be taken.
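
Whatever tool sends the requests, each acceptance test ends up asserting the same few things about an error response. The helper below is a sketch of those checks; the checkErrorResponse name and the shape of its argument are assumptions, and the expected error property mirrors the JSON produced by the error handler middleware above.

```javascript
// Return a list of problems found in an error response; empty means it passed.
function checkErrorResponse({ status, headers, body }) {
  const problems = [];
  if (status < 400) {
    problems.push(`expected an error status, got ${status}`);
  }
  const contentType = String(headers['content-type'] || '');
  if (!contentType.startsWith('application/json')) {
    problems.push(`expected a JSON content type, got "${contentType}"`);
  }
  try {
    const parsed = JSON.parse(body);
    if (parsed === null || typeof parsed !== 'object' || typeof parsed.error !== 'string') {
      problems.push('body lacks a string "error" property');
    }
  } catch (err) {
    problems.push('body is not valid JSON');
  }
  return problems;
}

// A response shaped like the output of the JSON error handler.
const goodResponse = {
  status: 500,
  headers: { 'content-type': 'application/json; charset=utf-8' },
  body: '{"error":"oh no something broke"}'
};

// A response shaped like an unhandled stack trace.
const badResponse = {
  status: 500,
  headers: { 'content-type': 'text/html; charset=utf-8' },
  body: 'Error: oh no something broke\n    at handler (app.js:10:9)'
};

const goodProblems = checkErrorResponse(goodResponse);
const badProblems = checkErrorResponse(badResponse);
```

Running the same check against every failure mode (oversized uploads, wrong types, malformed JSON) makes it obvious when one code path falls back to a non-JSON response.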

Take a step back

Within a corporate environment, it’s very easy to get into the pattern of allowing a complex client library to handle all of the communication with a service. Likewise, it’s easy to allow a complex service library to handle all of the serialization of objects into a client-consumable format. With so much abstraction a company may get to the point where nobody knows what the data being sent over the wire looks like anymore.

When these situations happen the amount of data transmitted over the network may balloon out of control. The risk of transferring Personally Identifiable Information (PII) also increases. And, if your API ever needs to be consumed by the outside world, this may result in lots of painful refactoring to clean up.

It’s important to “take a step back” every now and then. Stop looking at APIs using the organization’s de facto tools. Instead, look at the API using a generic, off-the-shelf product. When working with HTTP APIs, one such product for achieving this is Postman. This tool is useful for viewing the raw HTTP payloads. It even has a convenient interface for generating requests and parsing responses.

While working at a large company I once worked on one service which consumed data from another service. The service would immediately parse the JSON response from the remote service into a POJO and then crawl that data structure. One thing that caught my eye was that another JSON parse was being called conditionally. Such a call was quite out of place since the response had already been parsed, but the code had operated in this manner for years.

I regenerated the request using Postman and discovered that there was a bug in the upstream service. It would, in certain circumstances, double-encode the JSON response. The consumer would then check an attribute; if it were a string it would first parse it into an object then continue on. Such a feat is fairly easy to do with JavaScript but might be a nightmare in a more static language. The first time this bug was encountered by an engineer they probably spent hours debugging it before discovering the problem and adding the conditional. Can you imagine if such an API were public and hundreds of engineers had to go through the same problem?
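
The conditional workaround probably resembled the following reconstruction. This is not the original code; the decodeField name and the profile attribute are invented for illustration. The sketch also returns a flag, so that the double encoding can be logged and reported upstream instead of being silently papered over.

```javascript
// Parse a field that should be an object but is sometimes a JSON string.
function decodeField(value) {
  if (typeof value !== 'string') {
    return { value, doubleEncoded: false };
  }
  try {
    const parsed = JSON.parse(value);
    if (parsed !== null && typeof parsed === 'object') {
      return { value: parsed, doubleEncoded: true };
    }
  } catch (err) {
    // Not JSON at all: treat it as an ordinary string.
  }
  return { value, doubleEncoded: false };
}

// A healthy response and a double-encoded one, both already parsed once.
const healthy = JSON.parse('{"profile":{"name":"Thomas"}}');
const buggy = JSON.parse(
  JSON.stringify({ profile: JSON.stringify({ name: 'Thomas' }) })
);

const fromHealthy = decodeField(healthy.profile);
const fromBuggy = decodeField(buggy.profile);
```

Both calls yield the same profile object, but only fromBuggy.doubleEncoded is true, which gives the consumer something concrete to include in a bug report.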

Conclusion

By following the advice in this article you’ll be sure to avoid some of the most common pitfalls present in modern APIs. While the advice here applies most heavily to dynamic languages like JavaScript, it is generally applicable to every platform.

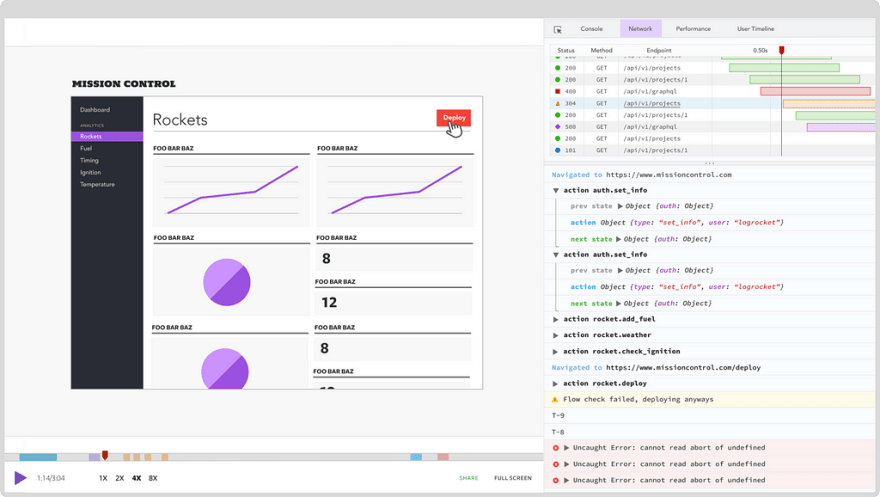

Plug: LogRocket, a DVR for web apps

LogRocket is a frontend logging tool that lets you replay problems as if they happened in your own browser. Instead of guessing why errors happen, or asking users for screenshots and log dumps, LogRocket lets you replay the session to quickly understand what went wrong. It works perfectly with any app, regardless of framework, and has plugins to log additional context from Redux, Vuex, and @ngrx/store.

In addition to logging Redux actions and state, LogRocket records console logs, JavaScript errors, stacktraces, network requests/responses with headers + bodies, browser metadata, and custom logs. It also instruments the DOM to record the HTML and CSS on the page, recreating pixel-perfect videos of even the most complex single page apps.

The post Common API mistakes and how to avoid them appeared first on LogRocket Blog.
