3 ways to manage concurrency in serverless applications

Yan Cui

Posted on March 21, 2023

Many software engineering concepts show up in different contexts. Modularity, the single-responsibility principle and separation of concerns are just a few examples that come to mind. They are equally applicable to how we write code, architect our systems and organize our teams.

Similarly, there are many parallels between multithreaded programming and event-driven architectures. For example, we often see multithreaded programming patterns such as the Thread Pool pattern and Fork Join pattern appear in event-driven architectures too.

Using reserved concurrency

To implement a “thread pool”, you can use reserved concurrency on a Lambda function to process events from EventBridge.

This works because:

- Reserved concurrency also acts as the maximum concurrency on the Lambda function.

- Asynchronous invocations (e.g. EventBridge, SNS) use an internal queue and respect the reserved concurrency setting on the function.

So you’re able to control the concurrency at which events are processed. This is a useful pattern for when you’re dealing with downstream systems that are not as scalable.

This is the simplest way to manage the concurrency of a serverless application. But it’s not without limitations.

Firstly, reserved concurrency is a useful tool when you need to use it for a small number of functions. However, the assigned concurrency units are taken out of the regional pool, leaving less concurrency available for all your other functions in the region. When managed poorly, this can lead to API functions being throttled and directly impacting user experience.

Reserved concurrency is therefore not something that should be used broadly. Alternatively, workloads that require frequent use of reserved concurrency should be moved to their own account to isolate them from workloads that require a lot of on-demand concurrencies, such as user-facing APIs.

Secondly, you can’t guarantee events are processed in the order in which they are received.

Thirdly, asynchronous Lambda invocations have an at-least-once semantic. This means you will occasionally see duplicated invocations. You can work around this problem by keeping track of which events have been processed in a DynamoDB table. For this to work, you will need to include a unique ID with each event and the Lambda function would check the DynamoDB table before processing any event it receives.

Using EventSourceMapping

If you need to process events in the order they’re received, then you should use Kinesis Data Streams or the combination of SNS FIFO and SQS FIFO.

In both cases, you can control the concurrency of your application using the relevant settings on the EventSourceMapping.

Using this approach, you don’t need to use Lambda’s reserved concurrency. Therefore you avoid the problems associated with reserved concurrency when concurrency control is required on a wide range of functions.

With Kinesis, ordering is preserved within a partition key. So if you need to ensure events related to an order are processed in sequence, then you would use the order ID as the partition key.

You can control the concurrency using a number of settings:

- Batch Size : how many messages do you process with each invocation?

- Batch window : how long should the Lambda service wait and gather records before invoking your function?

- Concurrent batches per shard (max 10): the max number of Lambda invocations for each shard in the stream.

- The number of shards in the stream: how many shards are there in the stream? Multiplying this with the concurrent batches per shard gives you the max concurrency for each of the subscriber functions. Please note that this setting is only applicable for Kinesis provisioned mode.

With SNS FIFO, you can’t fan out messages to Lambda functions directly (see official documentation here).

This is why you always need to have SQS FIFO in the mix as well.

Similar to Kinesis, event orders are preserved within a group, identified by the group ID in the messages. And recently, AWS introduced a max concurrency setting for SQS event sources (see here). This solves the long-standing problem of using reserved concurrency for SQS functions.

So, now you can use EventSourceMapping for SQS to control the concurrency of your application. And you don’t have to micro-manage the Lambda concurrency units in the region and worry about not having enough units for other functions to scale into.

Dynamic concurrency control

Both of the approaches we have discussed so far allow us to control the concurrency of our application. This is useful especially when we’re working with downstream systems that are not as scalable as our serverless architecture.

In both cases, we have a ballpark concurrency value in mind. That value is informed by our understanding of what these downstream systems can handle. However, in both cases, these settings are static. To change them, we would likely need to change our code and redeploy our application with the new settings.

But what if the situation calls for a system that can dynamically change its own concurrency based on external conditions?

I have previously written about this approach and how you can implement a “circuit breaker” type system for Kinesis functions. See this post from 2019 for more detail.

Back in 2018, I also wrote about how you can implement “semaphores” for Step Functions (see the post here).

The implementation details from these posts are fairly outdated by now, but the core principles behind them are still relevant today.

Namely…

Metaprogramming : Lambda functions can change their own settings on the fly by making API calls to the Lambda service.

They can react to external conditions such as changes to the response time and error rate from downstream systems and adapt their concurrency settings accordingly.

You would need some additional concurrency control here to make sure only one invocation can change the relevant settings at once.

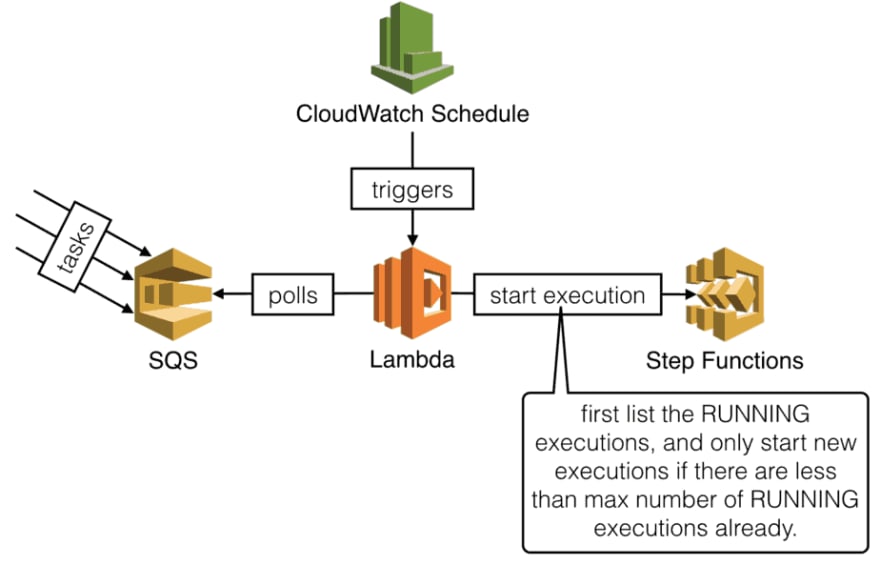

Using a controller process to manage concurrency : instead of the functions changing their own settings on the fly, you can have a separate process to manage them.

Here, you can draw a distinction between “blocking an ongoing execution from entering a critical section” and “don’t start another execution at all”. The trade-offs I discussed in the Step Functions semaphore post are still relevant. Most importantly?—?locking an execution mid-way makes choosing sensible timeouts more difficult.

Luckily, with Step Functions, we can now implement callback patterns using task tokens. No more polling loops in the middle of your state machine!

Outside of the context of Step Functions, you can still apply this approach. For example, you can have a separate (cron-triggered) Lambda function change the concurrency of your main function.

Applications that require this kind of dynamic concurrency control are few and far between. But in the unlikely event that you need something like this, I hope I have given you some food for thought and some ideas on how you might approach the problem!

Wrap up

I hope you have found this article insightful. It’s not the most flashy topic but it’s the sort of real-life problem that people often have to deal with.

I want to thank Tanner King for asking me a related question on LinkedIn, which gave me the inspiration to write this article.

If you want to learn more about building serverless architecture, then check out my upcoming workshop where I would be covering topics such as testing, security, observability and much more.

Hope to see you there.

The post 3 ways to manage concurrency in serverless applications appeared first on theburningmonk.com.

Posted on March 21, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.