A journey with Windows Docker containers

Arnaud Bezançon

Posted on November 28, 2020

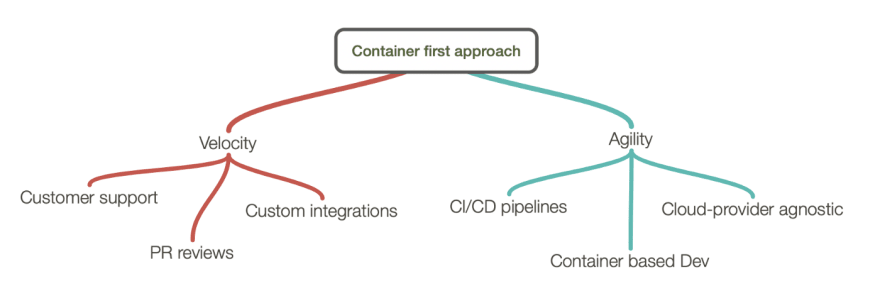

At a glance

- Windows containers can dramatically improve software development and delivery even if they aren’t as agile as their Linux-based counterparts.

- Dockerizing a web-based solution running on top of Windows Server requires some new skills and development efforts, but the benefits are tangible after each phase of the project.

- Containers, while providing modern CI/CD pipelines and tooling, give a second life to Windows Server–based technologies leveraging a mature ecosystem, which for some clients translates to integration cost reductions and reusing existing team skills.

The monolith

WorkflowGen is a web-based solution with server-side services running on Windows Server (IIS). The .NET modules are written in C# and the Node.js modules in TypeScript. Data management relies on Microsoft SQL Server and some file storage. WorkflowGen offers a modern GraphQL API along with webhooks, and supports the OpenID Connect (OIDC) authentication standard.

Even with this hybrid technology approach, all of these server apps are managed in a single repository (the exception being our mobile app, which is developed in React Native and has its own dedicated repo). The monolith approach has its own virtues that fit well with our development process. One quality of a single-repo solution that we’ve appreciated so far is that it simplifies dependency management across the different app modules, as well as communication around GitHub issues.

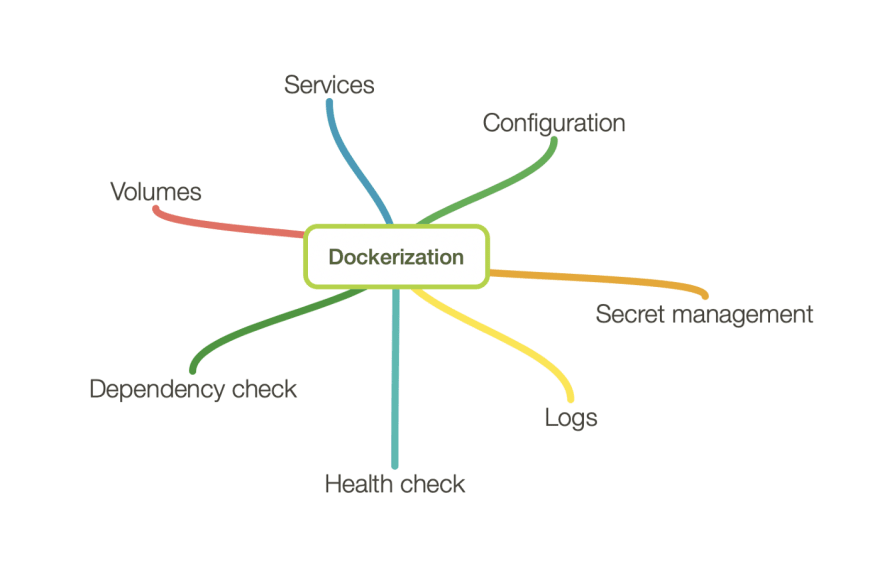

Dockerization phase

A container is not really a “VM lite”. It’s a new piece of software infrastructure with a set of qualities that let it coexist with other containers in different usage scenarios and ecosystems. This means there’s a learning curve: you have to work out how to compose the services and which technical requirements each one must meet.

Fortunately, Docker is a mature technology, and Windows containers now offer a lot of what Linux containers support out of the box. The impact on the existing code base has been minimal, although we did have to write a number of PowerShell scripts.

Our Dockerization process was essentially based on the following features:

Services

We have one database container for the SQL Server database (Windows- or Linux-based) and another WorkflowGen container for the application services. We provide the possibility to run some scalable (such as our web apps) and non-scalable application services (such as user directory synchronization) in dedicated containers using the same WorkflowGen image. This means that our clients can configure a fine-tuned auto-scalable container-based architecture, or have a simple two container–based solution for dev/test usage.

Volumes

Volumes are basically the persistent file storage used by our services (app data, SQL data, etc.).

Configuration

This part is quite important because it defines how flexible your container will be. We provide a set of environment variables to adjust all of our services’ settings. Internally, our Docker entry point runs PowerShell scripts that manage the IIS web.config file and other service configuration files.
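
To make the idea concrete, here is a minimal TypeScript sketch of turning environment variables into web.config-style settings. It is not the PowerShell entry point described above: the `APP_SETTING_` prefix, the setting names and both function names are hypothetical and chosen only for illustration.

```typescript
// Environment as seen by a process: variable name -> value.
type Env = Record<string, string | undefined>;

// Collect every variable that starts with the prefix, stripping the prefix
// from the key. The prefix is a hypothetical convention for this sketch.
function settingsFromEnv(env: Env, prefix: string): Record<string, string> {
  const settings: Record<string, string> = {};
  for (const name of Object.keys(env)) {
    const value = env[name];
    if (name.startsWith(prefix) && value !== undefined) {
      settings[name.slice(prefix.length)] = value;
    }
  }
  return settings;
}

// Escape the characters that are unsafe inside an XML attribute value.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the settings as <add key="..." value="..." /> lines, the shape used
// inside the <appSettings> section of a web.config file.
function toAppSettingsXml(settings: Record<string, string>): string {
  return Object.keys(settings)
    .map((key) => `<add key="${escapeXml(key)}" value="${escapeXml(settings[key])}" />`)
    .join("\n");
}
```

Keeping the mapping mechanical (prefix in, key out) means a new setting needs no new script code, only a new variable on the container.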

Secret management

Some configuration settings are secrets. Docker provides a simple solution based on secret files to handle these cases.
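
A common way to consume secret files is to accept either a plain variable or a companion variable that points at a file. The sketch below assumes a `_FILE` suffix convention, which many container images use; whether WorkflowGen uses that exact convention is not stated here, and `resolveSetting` and `DB_PASSWORD` are hypothetical names. The file reader is injected so the function stays independent of any particular runtime.

```typescript
type Env = Record<string, string | undefined>;
type ReadFile = (path: string) => string;

// Resolve a setting from KEY_FILE (path to a secret file) if present,
// otherwise fall back to the plain KEY variable.
// The _FILE suffix is an assumed convention for this sketch.
function resolveSetting(key: string, env: Env, readFile: ReadFile): string | undefined {
  const filePath = env[`${key}_FILE`];
  if (filePath !== undefined && filePath !== "") {
    // Secret files often end with a newline; strip a single trailing one so
    // it does not leak into passwords or connection strings.
    return readFile(filePath).replace(/\r?\n$/, "");
  }
  return env[key];
}
```

In Node.js, the reader could be as simple as `(p) => fs.readFileSync(p, "utf8")`.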

Logs

This is the visible part of the container iceberg. We decided to expose IIS logs by default. You might decide to consolidate more logs, depending on the services running in the container.

Dependency check

Our app services rely mainly on the SQL Server database, so we just have to check whether SQL Server responds to a few SQL queries before starting them.
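
A dependency check of this kind usually boils down to a retry loop. The following is a minimal sketch, not the actual entry point logic: the `check` callback is injected (in practice it would run a trivial query such as `SELECT 1` through whatever SQL client is available), and the function names and the backoff policy are assumptions.

```typescript
// Delays (in ms) to wait between attempts: doubles each time, capped at maxMs.
function backoffDelays(count: number, baseMs: number, maxMs: number): number[] {
  const delays: number[] = [];
  for (let i = 0; i < count; i++) {
    delays.push(Math.min(baseMs * 2 ** i, maxMs));
  }
  return delays;
}

// Call `check` until it resolves to true or the delays are exhausted.
// Makes delays.length + 1 attempts in total; a rejected check counts as
// "not ready yet" rather than as a fatal error.
async function waitForDependency(
  check: () => Promise<boolean>,
  delays: number[],
  sleep: (ms: number) => Promise<void> = (ms) =>
    new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<boolean> {
  for (const delay of delays) {
    if (await check().catch(() => false)) return true;
    await sleep(delay);
  }
  return check().catch(() => false);
}
```

A caller would typically exit with a non-zero code when `waitForDependency` resolves to `false`, so that the orchestrator can restart the container.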

Health management

We have implemented basic health management by pinging some web app URLs. We’ll consider more sophisticated metrics to evaluate app health status in the future.
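
As an illustration of URL-based health checking, the sketch below aggregates the results of several probes into a single verdict. The types, the function name and the rule "anything outside 2xx is failing" are assumptions made for this example; the probing itself (the HTTP requests) is left out so the logic stays self-contained.

```typescript
interface ProbeResult {
  url: string;
  statusCode: number | null; // null when the request failed or timed out
}

interface HealthReport {
  healthy: boolean;
  failing: string[]; // URLs that did not answer with a 2xx status
}

// The container is considered healthy only if at least one URL was probed
// and every probe returned a 2xx status code.
function summarizeHealth(results: ProbeResult[]): HealthReport {
  const failing = results
    .filter((r) => r.statusCode === null || r.statusCode < 200 || r.statusCode >= 300)
    .map((r) => r.url);
  return { healthy: results.length > 0 && failing.length === 0, failing };
}
```

Returning the list of failing URLs, not just a boolean, makes the health log useful when only one of several web apps is down.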

Pipelines

Our CI/CD stack is based on GitHub and Azure Pipelines.

Our public Docker images are published on Docker Hub with the associated GitHub repo. The public GitHub repo is important for our clients to have the ability to check how the image is built and what components are installed, as well as for security audits.

We provide an image for each WorkflowGen version and Windows Server–based image version supported, for example:

advantys/workflowgen:7.18.1-win-ltsc2019
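
The tag in this example appears to follow a version, OS, base image pattern. That reading is inferred from the single example above, so the pattern, the field names and the `parseImageTag` function in this sketch are assumptions, not a documented tagging scheme.

```typescript
interface ImageTag {
  version: string;   // product version, e.g. "7.18.1"
  os: string;        // e.g. "win"
  baseImage: string; // Windows Server base image, e.g. "ltsc2019"
}

// Split a tag such as "7.18.1-win-ltsc2019" into its parts.
// Returns null for tags that do not match the assumed pattern.
function parseImageTag(tag: string): ImageTag | null {
  const match = /^(\d+\.\d+\.\d+)-([a-z]+)-([a-z0-9]+)$/.exec(tag);
  if (match === null) return null;
  const [, version, os, baseImage] = match;
  return { version, os, baseImage };
}
```

A helper like this is handy in scripts that need to pick the image matching both a client’s product version and their host OS.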

In addition to our official releases, we have some private Docker Hub repos for our development images, and we also use Azure Container Registry for specific image uses.

Our build pipeline relies on containers to build the apps (.NET and TypeScript) and to run the unit and integration tests. To optimize Docker image pull performance, we use a dedicated build machine on AWS with the Azure Pipelines agent installed. A dedicated build machine lets us leverage the Docker image cache, which dramatically improves build times for large Windows container images.

Our build machines are launched on demand by our Azure pipeline to reduce VM costs. We hope that the build machines managed by Azure Pipelines will offer more Docker cache options in the future, so that we no longer have to handle this piece of infrastructure ourselves.

In addition to the product releases, development images are built upon each commit in our develop branch and for each pull request.

Initial benefits of the Dockerization phase

Customer support velocity

Every supported product version has a corresponding Docker image.

This means that when a ticket arrives, the support team can use simple Docker Compose files to set up a fresh product environment, including the database, that matches the client’s version. This takes a few seconds, or a couple of minutes if the image is not in the local cache of the user’s machine.

Multiple product versions can be executed at the same time to compare behaviors across the versions, and fixes can be easily tested.

Thanks to the Docker volumes, multiple product versions can be tested with the same data without having to seed or migrate the data.

In the end, we’re talking about a massive improvement in productivity and quality for the client support process.

Pull request fluid collaboration

As mentioned, an image is generated for each pull request (PR). If the unit or integration tests fail, the image is not built, so reviewers always know the test results associated with a PR.

The review process is streamlined: there’s no need to build the code changes locally to evaluate the version corresponding to a PR.

The PR image is ready to go. It’s easy to test a new feature or a fix, and to compare with other product versions. Non-dev users can also have a look at a new feature or even demonstrate it to other team members or clients without having to wait for a dedicated alpha/beta image.

Industrialized client integration

For each product version, an -onbuild image variant is built. This image allows clients to create a custom image variant with their own requirements and integration points.

For example, a client can install other components, custom DLLs, custom configuration files or a new UI theme. The Docker image variant definition can be managed in a repo and the build can be automated with GitHub Actions, Azure Pipelines or any other CI/CD tool.

For clients, this means project delivery automation and quality improvements by limiting repetitive human tasks (such as environment installation and configuration, manual tests, etc.).

For a project with a lot of iterations, the gains can be measured in days or weeks.

Container-based development phase

Using containers during development makes sense, especially when working on multiple product versions, features or fixes. Sharing one local database across them, for example, can be tricky when the database schema or content has changed between product versions. One solution is to install a database for each product version, but this is error-prone (e.g. forgetting to change a connection string) and hurts productivity.

The ideal solution is to have a fresh product environment including the database corresponding to the branch we are working on. Thanks to the container development approach this is now possible!

For our .NET projects in Visual Studio and our TypeScript/Node.js app in VS Code, the code is built in the local environment, and the distribution files are copied into the development container whenever they change. We have developed a set of PowerShell scripts to automate this process in the development image, but we plan to evaluate Mutagen. Live debugging is also possible thanks to the VS remote debugging agent installed in the development image.
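
The "copy when changed" step can be pictured as comparing two snapshots of the build output. This sketch is only an illustration of that idea and not the PowerShell scripts mentioned above; the `Snapshot` type, the `changedFiles` function and the use of modification times as the change signal are all assumptions.

```typescript
// Relative file path -> last modification time (ms since epoch).
type Snapshot = Record<string, number>;

// Return the files that are new, or that have a newer modification time
// than in the previous snapshot, sorted for stable output.
function changedFiles(previous: Snapshot, current: Snapshot): string[] {
  return Object.keys(current)
    .filter((path) => previous[path] === undefined || current[path] > previous[path])
    .sort();
}
```

Only the returned paths would then need to be copied into the running container, which keeps the feedback loop short for large build outputs.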

Container-based development is a new paradigm in terms of quality and productivity, especially for products with multiple services.

Having a brand new “production grade” environment in development reduces configuration errors and allows the dev team to focus on the new feature or fix to implement and not on local dev environment management.

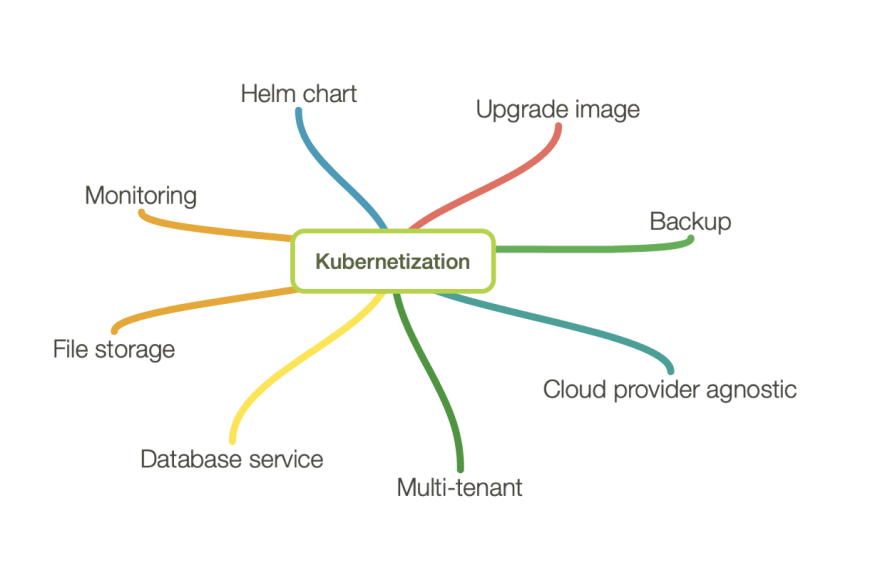

Kubernetization phase

Product Dockerization and container-based development are already game changers in our CI/CD workflows, but connecting those containers in production is the cherry on the cake.

Kubernetes (K8s) is the container orchestration platform designed for this purpose, supported by all the major cloud providers: Azure AKS, Google GKE and AWS EKS.

Windows container adoption is fairly new in K8s, but it’s now ready for prime time with the support of hybrid clusters that run both Windows and Linux containers.

To make WorkflowGen run in production in Kubernetes in an efficient way, we decided to provide the following features:

File storage and database services

File storage uses Azure Files with AKS (to support container scalability). The SQL database can be handled by a SQL Server container (Windows or Linux) or by Azure SQL managed services.

Helm Chart

Helm is a package manager for Kubernetes that allows an application to be easily deployed in a K8s cluster thanks to a chart file definition.

The result is impressive: you can deploy a full-blown WorkflowGen solution including the SQL Server database with this single command line:

helm install --set image.tag=7.18.1-win-ltsc2019 release-name ./chart-path
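
When this command is driven from a script or a pipeline, it helps to build the argument list programmatically. The sketch below reproduces exactly the command shown above; `helmInstallArgs` is a hypothetical helper name, and no chart values other than `image.tag` are assumed.

```typescript
// Build the argument list for:
//   helm install --set image.tag=<imageTag> <releaseName> <chartPath>
// Returning an array (rather than one string) avoids shell quoting issues.
function helmInstallArgs(releaseName: string, chartPath: string, imageTag: string): string[] {
  return ["install", "--set", `image.tag=${imageTag}`, releaseName, chartPath];
}
```

In Node.js, the result can be passed to `execFile("helm", args, callback)` from `node:child_process`.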

Upgrade image

To make WorkflowGen upgrades robust with the level of automation required by K8s, a Docker upgrade image has been created. This WorkflowGen upgrade image is mainly used to upgrade the database schema and/or content when needed by a new version.
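
How the upgrade image decides that work is needed is not described here. One plausible approach, sketched below purely as an assumption, is to compare the version recorded in the database with the version of the image being deployed. The function names are hypothetical, and the sketch only handles three-part numeric versions.

```typescript
// Parse "7.18.1" into [7, 18, 1]; reject anything that is not three numbers.
function parseVersion(version: string): number[] {
  if (!/^\d+\.\d+\.\d+$/.test(version)) {
    throw new Error(`Invalid version: ${version}`);
  }
  return version.split(".").map((part) => Number(part));
}

// Negative if a < b, zero if equal, positive if a > b.
// Parts are compared numerically, so 7.9.0 sorts before 7.18.1.
function compareVersions(a: string, b: string): number {
  const pa = parseVersion(a);
  const pb = parseVersion(b);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] - pb[i];
  }
  return 0;
}

// An upgrade is needed only when the database is older than the image.
function needsUpgrade(databaseVersion: string, imageVersion: string): boolean {
  return compareVersions(databaseVersion, imageVersion) < 0;
}
```

Making the check idempotent in this way means the upgrade step can run on every deployment and simply do nothing when the database is already current.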

Backup

We have successfully tested Velero to manage the database and file data backup, but other strategies and tools are possible.

Monitoring

The Kubernetes ecosystem already has tools to monitor containers, such as Prometheus. With AKS, we use Azure Monitor logs.

Cloud provider agnostic

One of the promises of K8s and Docker in general is portability across different providers and on-premises infrastructures. We have successfully tested our K8s deployments on Azure AKS and Google GKE. We still have to test AWS EKS.

Multi-tenant

Our OEM clients can now offer robust and secure multi-tenancy by providing a dedicated production environment and database for each tenant with the velocity previously offered by shared instances and databases.

Using WorkflowGen in production with Kubernetes offers not only a modern and cost-efficient orchestration platform but also accelerates business process delivery with an automated pipeline.

The agility offered by Kubernetes was once the privilege of Linux-based apps, but Windows Server apps can now experience it. It’s worth noting that creating a K8s app is an opportunity to get the most out of existing technologies before migrating to serverless or to a new framework, either of which requires a complete overhaul of the code base and of the team’s skills.

A bot for conclusion

Docker, Docker Compose, Kubernetes, Helm charts and the like are new technology layers aimed at streamlining app management and deployment, but the price of this efficiency is an accumulation of knowledge and skills that must be mastered. For our internal usage, and to prove that all of our efforts in the container world make sense in our day-to-day operations, we developed a simple Slack bot using the Bolt API and Azure Pipelines. When a team member wants to deploy a WorkflowGen instance for a trial or a POC, they just type a simple command in a Slack channel. A couple of minutes later, a message is posted in the channel to announce that the deployment is ready (an email is also sent to the client when needed).
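
The part of such a bot that is independent of Slack is the parsing of the command text. The sketch below assumes a hypothetical syntax, `deploy <version> [client email]`, which is not the actual command used by our bot; the Bolt wiring and the pipeline trigger are deliberately left out.

```typescript
interface DeployRequest {
  version: string;      // product version to deploy, e.g. "7.18.1"
  notifyEmail?: string; // optional client address to notify when ready
}

// Parse "deploy 7.18.1" or "deploy 7.18.1 client@example.com".
// Returns null when the text does not match the assumed syntax.
function parseDeployCommand(text: string): DeployRequest | null {
  const words = text.trim().split(/\s+/);
  if (words.length < 2 || words.length > 3 || words[0] !== "deploy") return null;
  const [, version, notifyEmail] = words;
  if (!/^\d+\.\d+\.\d+$/.test(version)) return null;
  if (notifyEmail !== undefined && !notifyEmail.includes("@")) return null;
  return notifyEmail === undefined ? { version } : { version, notifyEmail };
}
```

Rejecting malformed input up front lets the bot answer immediately in the channel instead of failing minutes later in the pipeline.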

Complexity should be transparent.
