Saket

Posted on March 18, 2024

Airflow: A Beginner's Guide to Data Orchestration in Data Pipelines.

Data pipelines are the lifeblood of modern data-driven applications. They automate the flow of data from various sources to one or more destinations, transforming it along the way. But managing these pipelines, especially complex ones, can be a challenge. Apache Airflow, an open-source workflow orchestrator, helps overcome this challenge. This is a very basic introduction to Apache Airflow for absolute beginners.

A Brief History of Airflow

Airflow was originally created by engineers at Airbnb in 2014 to manage their ever-growing internal data processing pipelines. Recognizing its potential, Airbnb open-sourced Airflow in 2015, making it available to the wider developer community, and the project entered the Apache Incubator in 2016. Today, Airflow is a mature and widely adopted tool, used by companies of all sizes to orchestrate and automate their data workflows.

How Airflow Works

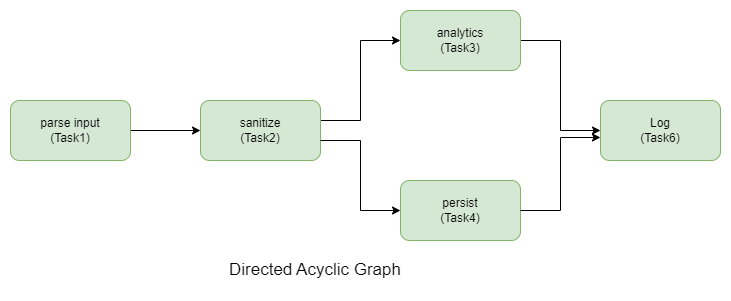

At its core, Airflow is a workflow orchestration tool. It allows you to define complex data pipelines as Directed Acyclic Graphs (DAGs). A DAG is a set of tasks connected by dependencies, which ensures the tasks run in the correct order. "Directed" means each dependency points one way, from an upstream task to a downstream task. "Acyclic" means the graph contains no loops: following the dependencies forward never leads back to a task that has already been visited.
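To make the ordering and "acyclic" ideas concrete, here is a minimal sketch that uses Python's standard-library `graphlib` module rather than Airflow itself. The task names (`extract`, `transform`, `load`) and the `has_cycle` helper are hypothetical and chosen only for illustration. The sketch shows that a valid run order exists when the graph has no loop, and that a graph with a loop is rejected.

```python
from graphlib import CycleError, TopologicalSorter

# Map each (hypothetical) task to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

# static_order() yields tasks so that every dependency comes first.
run_order = list(TopologicalSorter(pipeline).static_order())
print(run_order)


def has_cycle(graph):
    """Return True if the dependency graph contains a cycle."""
    try:
        TopologicalSorter(graph).prepare()
    except CycleError:
        return True
    return False


# Making "extract" depend on "load" closes a loop, so this is not a DAG.
cyclic = {**pipeline, "extract": {"load"}}
print(has_cycle(pipeline), has_cycle(cyclic))
```

Airflow performs a comparable check when it loads a DAG file, which is why a workflow with circular dependencies cannot be scheduled.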

Here's a simplified breakdown of how Airflow works:

- Define Tasks: You define individual tasks within your workflow using Python code. Tasks written in other languages are also supported, through plugins or by running them in containers with the `KubernetesPodOperator`. These tasks could involve data extraction, transformation, loading, analysis, cleaning, or any other operation needed in your pipeline.

- Set Dependencies: You specify dependencies between tasks. For example, a data transformation task might depend on a data extraction task completing successfully before it can run.

- Schedule Workflows: Airflow allows you to schedule your workflows to run at specific times (cron expressions) or at regular intervals.

- Monitoring and Alerting: Airflow provides a web interface to monitor the status of your workflows and tasks. You can also configure alerts to be sent if tasks fail or encounter issues.
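The steps above can be sketched in plain Python. This is a toy model, not Airflow's API or implementation: the `Task` class, `run_pipeline` function, and `on_failure` callback are hypothetical names introduced for illustration. The `>>` operator mirrors the syntax Airflow uses to declare dependencies, and `upstream_failed` borrows the name of the Airflow task state for a task whose dependency did not succeed.

```python
class Task:
    """A toy task: a name, a callable, and the tasks it waits on."""

    def __init__(self, name, func):
        self.name = name
        self.func = func
        self.upstream = []

    def __rshift__(self, other):
        # `a >> b` records that b depends on a, then returns b for chaining.
        other.upstream.append(self)
        return other


def run_pipeline(tasks, on_failure):
    """Run tasks (already listed in dependency order) and report states."""
    state = {}
    for task in tasks:
        if any(state[up.name] != "success" for up in task.upstream):
            # Skip the task: at least one dependency did not succeed.
            state[task.name] = "upstream_failed"
            continue
        try:
            task.func()
            state[task.name] = "success"
        except Exception as exc:
            state[task.name] = "failed"
            on_failure(task.name, exc)  # stand-in for an email or chat alert
    return state


def failing_transform():
    raise ValueError("bad input row")


extract = Task("extract", lambda: None)
transform = Task("transform", failing_transform)
load = Task("load", lambda: None)
extract >> transform >> load

alerts = []
states = run_pipeline(
    [extract, transform, load],
    on_failure=lambda name, exc: alerts.append(f"{name}: {exc}"),
)
print(states)
print(alerts)
```

In this run the extraction succeeds, the transformation fails and triggers one alert, and the load step is never attempted because its upstream task failed. Airflow's web interface surfaces the same kind of per-task status.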

Deploying Airflow in Production

For production environments, proper deployment of Airflow is essential. Here are some considerations:

- Security: Implement role-based access control (RBAC) to restrict access to workflows and resources.

- High Availability: Consider setting up a high-availability configuration with redundant schedulers, web servers, and worker nodes to ensure uptime.

- Monitoring and Logging: Integrate Airflow with monitoring tools to track performance and identify potential issues.

Advantages of Apache Airflow

Here's a breakdown of some key advantages Apache Airflow holds over other commonly available open-source data orchestration tools:

Maturity and Large Community:

- Extensive Resources: Airflow, being a mature project, boasts a vast collection of documentation, tutorials, and readily available solutions for common challenges. This translates to easier learning, faster troubleshooting, and a wealth of resources to tap into.

- Strong Community Support: The active and large Airflow community provides valuable support through forums, discussions, and contributions. This can be immensely helpful when encountering issues or seeking best practices.

Flexibility and Ease of Use:

- Python-Based Approach: Airflow leverages Python for defining tasks, making it accessible to users with varying coding experience. Python is a widely used language familiar to many data professionals.

- User-Friendly Interface: The web interface provides a decent level of user-friendliness for monitoring and managing workflows. It allows for visualization of task dependencies and overall workflow status.

Scalability and Extensibility:

- Horizontal Scaling: Airflow scales horizontally: with a distributed executor such as the CeleryExecutor or KubernetesExecutor, you can add worker nodes to spread the workload. This helps keep complex workflows with numerous tasks running smoothly.

- Rich Plugin Ecosystem: Airflow offers a vast plugin ecosystem. These plugins extend its functionality for various data sources, operators (specific actions within workflows), and integrations with other tools. This allows for customization and tailoring Airflow to fit your specific needs.

DAG Paradigm:

- Clear Visualization: Airflow utilizes Directed Acyclic Graphs (DAGs) to define workflows. This allows for a clear visual representation of task dependencies and execution order. This visual approach promotes maintainability and simplifies debugging, especially for intricate pipelines.

Additional Advantages:

- Robust Scheduling: Airflow provides extensive scheduling capabilities. You can define how often tasks should run using cron expressions, periodic intervals, or custom triggers.

- Monitoring and Alerting: Built-in monitoring and alerting functionalities offer visibility into your workflows and send notifications for potential issues or task failures.

- Security: Airflow supports role-based access control (RBAC). This allows you to define user permissions and secure access to workflows and resources within your data pipelines.
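As a rough illustration of interval-based scheduling, the sketch below computes the run times for a fixed interval using only the standard library. The `scheduled_runs` function and the six-hour schedule are hypothetical; Airflow's real scheduler also handles cron expressions, time zones, and missed runs, none of which are modeled here.

```python
from datetime import datetime, timedelta


def scheduled_runs(start, interval, until):
    """Return every run time from `start` up to and including `until`."""
    runs = []
    current = start
    while current <= until:
        runs.append(current)
        current += interval
    return runs


# Hypothetical schedule: every 6 hours, starting at midnight on March 19, 2024.
runs = scheduled_runs(
    start=datetime(2024, 3, 19),
    interval=timedelta(hours=6),
    until=datetime(2024, 3, 20),
)
for run in runs:
    print(run.isoformat())
```

The same schedule written as a cron expression would be `0 */6 * * *`, meaning minute 0 of every sixth hour.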

Open-Source Alternatives to Airflow

- Luigi: While offering simplicity and Python-based workflows, Luigi might lack the same level of flexibility and scalability as Airflow.

- Prefect: Prefect excels in user-friendliness and visual design, but its in-process execution model might not be suitable for all scenarios compared to Airflow's worker-based approach.

- Dagster: Dagster's emphasis on data lineage is valuable, but it might have a steeper learning curve compared to Airflow.

- Argo Workflows/Kubeflow Pipelines: For Kubernetes-centric environments, these tools offer tight integration, but they might not be as general-purpose as Airflow for broader data orchestration needs.

Here's an example of a simple "Hello World" DAG in Apache Airflow:

    from datetime import datetime

    from airflow import DAG
    # Airflow 2 import path; the older airflow.operators.python_operator is deprecated
    from airflow.operators.python import PythonOperator


    def say_hello():
        """Prints a hello world message"""
        print("Hello World from Airflow!")


    # Define the DAG
    with DAG(
        dag_id='hello_world_dag',
        start_date=datetime(2024, 3, 19),  # Set the start date for the DAG
        schedule_interval=None,  # Don't schedule this DAG to run automatically
    ) as dag:
        # Define a Python task to print the message
        say_hello_task = PythonOperator(
            task_id='say_hello_world',
            python_callable=say_hello,
        )

*Explanation of the above simple DAG*

- Import Libraries: We import the necessary libraries from `airflow`.

- Define Hello World Function: The `say_hello` function simply prints a message.

- Create DAG: We define a DAG using the `DAG` object. Here, we specify:

  - `dag_id`: Unique identifier for the DAG (here, "hello_world_dag").

  - `start_date`: The earliest date from which the DAG can run (here, March 19, 2024).

  - `schedule_interval`: Set to `None` because we don't want this DAG to run automatically (you can trigger it manually in the Airflow UI).

- Define Task: We define a Python task using `PythonOperator`. Here, we specify:

  - `task_id`: Unique identifier for the task (here, "say_hello_world").

  - `python_callable`: The Python function to be executed (here, the `say_hello` function).

Running the DAG:

- Save this code as a Python file (e.g., `hello_world_dag.py`).

- Place this file in your Airflow DAGs directory (usually `$AIRFLOW_HOME/dags`).

- Go to the Airflow UI (usually at `http://localhost:8080`).

- Find your "hello_world_dag" DAG and click on it.

- You'll see the task "say_hello_world". Click the play button to trigger the DAG manually, which runs the task.

If everything is set up correctly and executes without error, you should see the "Hello World from Airflow!" message printed in the task logs. This is a simple example, but it demonstrates the basic structure of defining a DAG and tasks in Airflow.
