Conversational Queries with Elasticsearch

Ajay Krupal K

Posted on April 3, 2024

Elasticsearch is a search and analytics engine designed to search and analyze data quickly and return relevant results. Whether your data is structured or unstructured, numerical or geospatial, Elasticsearch can handle it efficiently. One of its most common uses is ingesting and analyzing log data in near real time.

Each analytics or search engine has its own querying method. Elasticsearch uses its Query DSL (Domain Specific Language), which is written in JSON (JavaScript Object Notation), a key-value format. But imagine not having to learn this rigid syntax at all: this is where conversational queries come in. So what are conversational queries? They simplify the way users interact with data. Users type a question in plain language without following any specific syntax, and with the help of AI chat models such as GPT, Gemini, or Claude, the system responds in natural language. It's like talking to your data: easy, intuitive, and efficient.
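For comparison, here is roughly what a simple question looks like when written in Query DSL. This Python sketch only builds and prints the JSON request body; it does not connect to a cluster. The field names `log.level`, `service.name`, and `@timestamp` are assumptions and depend on how your logs are mapped.

```python
import json

# The question a user might type in plain language...
question = "Show me errors from the payment service in the last hour"

# ...and one way to express it as an Elasticsearch Query DSL request body.
# Field names are hypothetical; they depend on your index mapping.
query_dsl = {
    "query": {
        "bool": {
            "filter": [
                {"term": {"log.level": "error"}},
                {"term": {"service.name": "payment-service"}},
                {"range": {"@timestamp": {"gte": "now-1h"}}},  # date math: last hour
            ]
        }
    },
    "sort": [{"@timestamp": {"order": "desc"}}],  # newest entries first
}

# Print the JSON body you would send to the _search endpoint.
print(json.dumps(query_dsl, indent=2))
```

Even this short query requires knowing the `bool`, `term`, and `range` clauses and the exact field names, which is the syntax a conversational interface hides behind the plain-language question.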

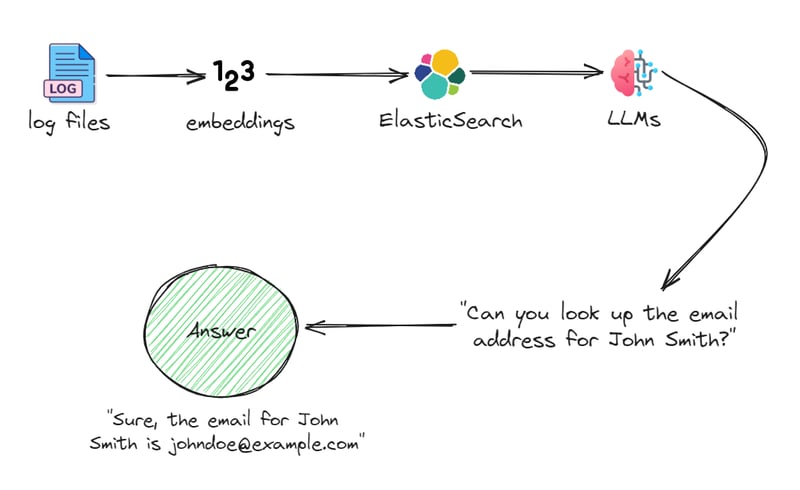

The process for answering conversational queries with Elasticsearch works as follows:

- Your application's logs are shipped to Elasticsearch.

- Each log entry is converted into an embedding: a numeric vector produced by an embedding model so that similar data points map to vectors that lie close together in the vector space, while dissimilar data points map to vectors that lie far apart. This makes it efficient to retrieve related data by similarity.

- Elasticsearch acts as a vector database that stores all of the log embeddings.

- An LLM of your choice can then be integrated with Elasticsearch to answer users' conversational queries.

- When a user asks a conversational query, the query is converted into an embedding in the same way, and the log documents whose embeddings are most similar to it are retrieved from Elasticsearch.

- The LLM uses the retrieved documents as context and generates a response in natural language.
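To make the flow above more concrete, here is a minimal, self-contained Python sketch of the retrieval side of the pipeline. It is not Elasticsearch code: an in-memory list stands in for the Elasticsearch index, a toy term-frequency vector stands in for a real embedding model, and the final LLM call is left out. The names `LOGS`, `embed`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical log lines standing in for documents indexed in Elasticsearch.
LOGS = [
    "ERROR payment-service timeout while calling bank API",
    "INFO user-service user login succeeded",
    "ERROR payment-service database connection refused",
    "WARN cache-service eviction rate high",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


# Toy "embedding model" (assumption): a term-frequency vector over the
# vocabulary of the sample logs. A real setup would call a trained model.
VOCAB = sorted({tok for line in LOGS for tok in tokenize(line)})
INDEX = {tok: i for i, tok in enumerate(VOCAB)}


def embed(text: str) -> list[float]:
    """Map text to an L2-normalised vector, so a dot product is cosine similarity."""
    vec = [0.0] * len(VOCAB)
    for tok, count in Counter(tokenize(text)).items():
        if tok in INDEX:  # tokens outside the vocabulary are ignored
            vec[INDEX[tok]] = float(count)
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


# Embed every log and keep (vector, text) pairs: the vector-store role
# that Elasticsearch plays in the real pipeline.
STORE = [(embed(line), line) for line in LOGS]


def retrieve(query: str, k: int = 2) -> list[tuple[float, str]]:
    """Embed the query and return the k stored logs with the highest cosine similarity."""
    q = embed(query)
    scored = [(sum(a * b for a, b in zip(q, vec)), text) for vec, text in STORE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Compile the retrieved logs into a context block for the LLM."""
    context = "\n".join(f"- {text}" for _, text in retrieve(question, k))
    return (
        "Answer the question using only these log entries:\n"
        f"{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # The resulting prompt would be sent to an LLM of your choice (not shown).
    print(build_prompt("payment-service timeout error"))
```

Running it builds a prompt whose context contains the payment-service timeout line followed by the payment-service database line. In a real deployment, the vectors would be stored in an Elasticsearch `dense_vector` field and the similarity search would be done with Elasticsearch's kNN search instead of the Python loop in `retrieve`. Note that a term-frequency vector only matches shared words; a trained embedding model is what lets differently worded but related log entries land close together.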

This is just one example of how you could use a widely used search engine like Elasticsearch to answer users' queries in natural language by combining vector embeddings with large language models. The combination of vector databases and conversational AI offers exciting possibilities for making data more accessible and user-friendly across various domains and use cases.

