Jordan Plays Pool (multi-threading with a pool queue)

Jordan Hansen

Posted on August 1, 2019

Reddit commenter

I actually love Reddit. I love that I can get feedback from real people on almost ANY subject, and a lot of the time it comes really quickly. I shared my last post on r/node and got an interesting, and accurate, comment.

u/m03geek pointed out that my script wasn't taking full advantage of multiple threads. My with-threads branch sped up link checking a lot, but the way I did it (see below) was to start 10 checks at once (or however many threads I chose) and then wait for all of them to finish before starting the next batch. If 8 of the tasks finished quickly, those threads sat idle while the other two completed.

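```typescript
// Old approach: check links in fixed batches of `amountOfThreads`.
// `i` comes from an enclosing loop over `links` (not shown) that
// moves through the array one batch at a time.
const promises: any[] = [];
const amountOfThreads = 10;

for (let linkToCheckIndex = 0; linkToCheckIndex < amountOfThreads; linkToCheckIndex++) {
  // Skip indexes past the end of the array on the final, partial batch.
  if (links[i + linkToCheckIndex]) {
    promises.push(checkLink(links[i + linkToCheckIndex], domain));
  }
}

// Waits for the slowest check in the batch; finished slots sit idle until then.
const checkLinkResponses = await Promise.all(promises);
```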
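To make that idle time concrete, here's a small simulation. It isn't the link checker: the task durations are made up (eight fast 1-second checks and two slow 9-second checks in each group of ten), and `batchedMakespan` and `pooledMakespan` are hypothetical helpers. It compares the batched approach above with a pool that hands each task to whichever slot frees up first.

```typescript
// Made-up task durations in seconds (not measured data):
// each group of ten has eight fast checks and two slow ones.
const group: number[] = [1, 1, 1, 1, 1, 1, 1, 1, 9, 9];
const durations: number[] = [...group, ...group];

// Batched: start `size` tasks, wait for the slowest one, then start the next batch.
function batchedMakespan(tasks: number[], size: number): number {
  let total = 0;
  for (let i = 0; i < tasks.length; i += size) {
    total += Math.max(...tasks.slice(i, i + size));
  }
  return total;
}

// Pooled: tasks are taken in queue order, and each one goes to
// whichever slot becomes free earliest.
function pooledMakespan(tasks: number[], size: number): number {
  const slotFreeAt: number[] = new Array(size).fill(0);
  for (const task of tasks) {
    let earliest = 0;
    for (let s = 1; s < size; s++) {
      if (slotFreeAt[s] < slotFreeAt[earliest]) earliest = s;
    }
    slotFreeAt[earliest] += task;
  }
  // The run ends when the busiest slot finishes.
  return Math.max(...slotFreeAt);
}

console.log(batchedMakespan(durations, 10)); // 18
console.log(pooledMakespan(durations, 10)); // 11
```

With 10 slots, the batched version takes 18 simulated seconds and the pooled version takes 11, because the fast slots keep pulling new work instead of waiting on the two slow ones.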

Honestly, I had already been looking into getting pools and pool queues to work, but I hadn't thought about this idle-thread problem specifically, and he was spot on. Today's post uses the same link checker library, but with a pool. The results are pretty neat.

It's also worth noting that u/m03geek said: "But links checker is not good example for using workers because node has multithreaded i/o and what you (or author of that topic) need is just a simple queue that will limit maximum number of ongoing requests to 20 for example or 50." Next week I'm hoping to compare the speed of that approach, leaning on Node's multithreaded I/O with a simple request queue, against the pool queue.
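For comparison, here's a minimal sketch of the kind of queue the commenter describes: no worker threads, just a cap on how many async tasks are in flight at once. This is not what I benchmarked in this post. `runWithLimit` is a hypothetical helper, and the demo uses fake timer-based tasks instead of real HTTP requests.

```typescript
// Run `tasks` with at most `limit` of them in flight at once, all on the main thread.
async function runWithLimit<T>(
  tasks: Array<() => Promise<T>>,
  limit: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0; // index of the next task to start

  // Each runner keeps pulling the next task until none are left,
  // so a runner that finishes early immediately picks up more work.
  async function runner(): Promise<void> {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  // Start up to `limit` runners (at least one) and wait for all of them.
  const runnerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Demo: fake "requests" that just wait a few milliseconds,
// tracking how many are in flight at the same time.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
let inFlight = 0;
let peak = 0;
const fakeRequests = Array.from({ length: 50 }, (_, n) => async () => {
  inFlight++;
  peak = Math.max(peak, inFlight);
  await sleep(5 + (n % 7));
  inFlight--;
  return n;
});

runWithLimit(fakeRequests, 20).then((results) => {
  console.log(results.length, peak); // 50 20
});
```

Because this code runs on a single JavaScript thread, `next++` can't race between runners, so no locking is needed. Each runner holds at most one task, which is what caps concurrency at `limit`.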

Results

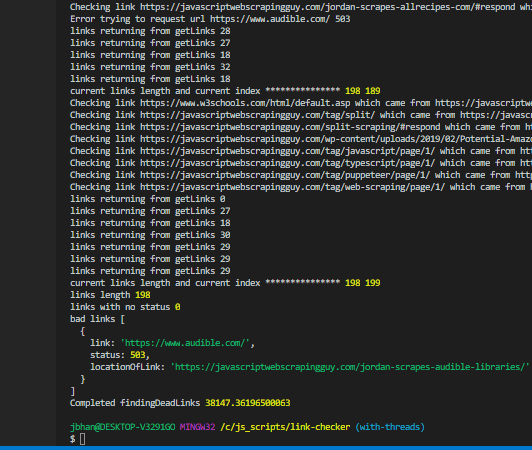

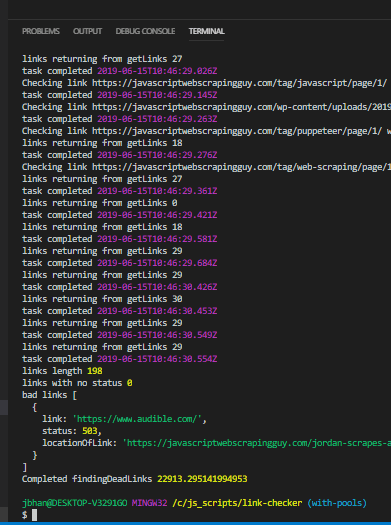

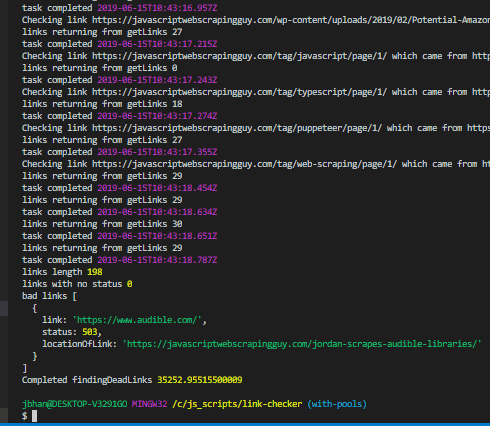

Let's go over the results first. The screenshots are above; here are the same numbers as a list for easier reading. Each run checked 198 links, and every run found the same bad link.

- Normal single thread, 128.492 seconds

- 10 threads the old way (batches of promises awaited with Promise.all), 38.147 seconds

- Pool with a limit of 20 threads, 22.720 seconds

- Pool with a limit of 10 threads, 20.927 seconds

- Pool with a limit of 8 threads, 22.913 seconds

- Pool with a limit of 6 threads, 26.728 seconds

- Pool with a limit of 4 threads, 35.252 seconds

- Pool with a limit of 2 threads, 62.526 seconds
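To put these times side by side, here's a small sketch that turns each one into a speedup over the single-threaded run. The labels are my own shorthand; the numbers are copied from the list above.

```typescript
// Timings in seconds, copied from the results list.
const timings: Array<[string, number]> = [
  ["single thread", 128.492],
  ["10 threads, batched", 38.147],
  ["pool of 20", 22.72],
  ["pool of 10", 20.927],
  ["pool of 8", 22.913],
  ["pool of 6", 26.728],
  ["pool of 4", 35.252],
  ["pool of 2", 62.526],
];

const baseline = timings[0][1];
for (const [label, seconds] of timings) {
  // Speedup = single-thread time / this configuration's time.
  console.log(`${label}: ${(baseline / seconds).toFixed(2)}x`);
}
```

By this measure the pool of 10 is roughly 6.1x faster than a single thread, while the old batched version with the same 10 threads is roughly 3.4x.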

Interestingly, 20 threads performed slightly worse than 10. The gap wasn't large, but I tested each 3-4 times and 20 consistently took longer. Three or four runs isn't a solid sample size, but at the very least, going from 10 to 20 threads gave no improvement.

The differences between pools of 2, 4, 6, and 8 threads are also interesting. I only have four cores, so I expected little difference beyond 4 threads. The gains did shrink after 4, but they were still worthwhile, probably because each check spends most of its time waiting on the network rather than using the CPU. The jump from 2 to 4 is huge.

Code changes

This builds on the code from my previous post, so I'll only focus on the part I changed to use threads in a pool. The full code is in the branch on GitHub. There's also more documentation for the threads.js library, written by Andy, who has been incredibly helpful and quick to respond.

```typescript
import { Pool, spawn, Worker } from 'threads';

// Each pool slot gets its own worker thread running the link-check code.
const spawnLinkChecker = () => {
  return spawn(new Worker('./../../../../dist/checkLinkWorker.js'));
};

// Pool of 10 workers; each queued task runs on the next worker that frees up.
const pool = Pool(spawnLinkChecker, 10);

// Queue every link from the home page that hasn't been checked yet.
for (let i = 0; i < links.length; i++) {
  if (!links[i].status) {
    pool.queue(linkChecker => linkChecker(links[i], domain));
  }
}

pool.events().subscribe((event) => {
  if (event.type === 'taskCompleted' && event.returnValue.links) {
    console.log('task completed', new Date());

    // Replace the link we were checking with the completed object
    const linkToReplaceIndex = links.findIndex(linkObject => linkObject.link === event.returnValue.link.link);
    if (linkToReplaceIndex !== -1) {
      links[linkToReplaceIndex] = event.returnValue.link;
    }

    for (const linkToCheck of event.returnValue.links) {
      // Only queue links we haven't already seen
      if (!links.some(linkObject => linkObject.link === linkToCheck.link)) {
        console.log('pushed in ', linkToCheck.link);
        links.push(linkToCheck);
        pool.queue(linkChecker => linkChecker(linkToCheck, domain));
      }
    }
  }
});
```

Using pools actually cleaned things up quite a bit. I get all the links from the domain's home page like before, then loop through them and put them all into the pool queue. In the example above I set the worker limit to 10, and the pool keeps the work going automatically: as soon as one job finishes, the next queued job starts.

I was really worried about how to update the link I was checking and handle newly found links this way, but subscribing to pool.events() made it a piece of cake. I just watch for the taskCompleted event and handle its returnValue, which includes the link with its updated status and any new links found on that page. I loop through those new links, add any I haven't seen before, and immediately push them into the pool queue so it can continue its magic.
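The deduplication step can be sketched on its own. This version assumes a made-up `siteLinks` map standing in for the links each worker returns, and swaps the `links.filter(...)` scan for a `Set` of already-queued URLs, which keeps each lookup constant-time as the list grows. A plain array stands in for the pool queue so the example runs without threads.js; `crawl` is a hypothetical helper.

```typescript
// Hypothetical site: page -> links found on that page (stand-in for returnValue.links).
const siteLinks = new Map<string, string[]>([
  ["/", ["/about", "/blog", "/contact"]],
  ["/about", ["/", "/team"]],
  ["/blog", ["/", "/blog/post-1", "/about"]],
  ["/contact", []],
  ["/team", ["/about"]],
  ["/blog/post-1", ["/blog", "/missing"]],
]);

function crawl(start: string): string[] {
  const seen = new Set<string>([start]); // every link ever queued
  const queue: string[] = [start];      // links waiting to be checked
  const checkedOrder: string[] = [];

  while (queue.length > 0) {
    const link = queue.shift()!;
    checkedOrder.push(link);
    // Pages with no entry (like a broken link) simply yield no new links.
    for (const found of siteLinks.get(link) ?? []) {
      // Mark as seen when queued, not when checked, so nothing is queued twice.
      if (!seen.has(found)) {
        seen.add(found);
        queue.push(found);
      }
    }
  }
  return checkedOrder;
}

// Checks each page once: /, /about, /blog, /contact, /team, /blog/post-1, /missing
console.log(crawl("/"));
```

Marking a link as seen at the moment it is queued is what prevents the same page from being queued twice; the pool version gets the same effect by pushing into `links` before calling `pool.queue`.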

And it really does almost feel like magic. Andy has done a killer job with this library. I'm really grateful for awesome people like him who make the software community so amazing: people who create things just for the joy of creating them.

The post Jordan Plays Pool (multi-threading with a pool queue) appeared first on JavaScript Web Scraping Guy.
