How To: Build an Augmented Reality Remote Assistance App in Android

ysc1995

Posted on April 10, 2020

This might have happened to many of us at some point: you plugged in that hair dryer or that heater and all of a sudden, all your lights went dark and your TV turned black.

Now, you have to call the customer support of your service provider in desperation. You explain what happened, and they say you might just need to switch on your air circuit-breaker. However, when you open the electric panel, you find yourself wondering: which one of these is the air circuit-breaker? After a 20-minute conversation with the customer support representative trying to locate this switch, you find that you don’t have one at your house in the first place.

Most remote assistance today is done through voice calls or text messages. This is hardly convenient for users trying to describe their issues or understand the new concepts and terminology needed for the troubleshooting.

Luckily, technology has reached a point where we can solve this issue by using Video Chat and Augmented Reality. In this guide, I will show you step by step how to build an Android app that uses ARCore and video chat to create an interactive experience.

Prerequisites

- A basic to intermediate understanding of Java and Android SDK

- Basic understanding of ARCore and Augmented Reality concepts

- Agora.io Developer Account

- Hardware: 2 Android devices running Android API level 24 or higher and originally shipped with Google Play Store

*You can check detailed device requirements here.

Please Note: You can follow along without deep Java/Android knowledge, but certain basic concepts in Java/ARCore won’t be explained along the way.

Overview

In this guide, we are going to build an AR-enabled customer support app. One user creates a channel by entering a channel name and joins it as a streamer (the person who needs support). The other user joins the same channel as an audience member (customer support) by entering the same channel name. When both users are in the channel, the streamer broadcasts their rear camera to the audience. The audience can draw on their own device and have the touch input rendered in augmented reality in the streamer’s world!

Here are all the steps that we’ll be going through in this article:

- Set Up New Project

- Create UI

- Set Up ARCore

- Enable Streamer’s Video Call

- Enable Audience’s Video Call

- Remote Assistance Feature

- Build And Test on Device

You can find my GitHub demo app as a reference for this article.

Set Up New Project

To start, let’s open Android Studio and create a new, blank project.

- Open Android Studio and click Start a new Android Studio project.

- On the Choose your project panel, choose Phone and Tablet > Empty Activity, and click Next.

- Click Finish. Follow the on-screen instructions if you need to install any plug-ins.

Integrate the SDK

Add the following lines to the /app/build.gradle file of your project:

dependencies {

implementation fileTree(dir: 'libs', include: ['*.jar'])

implementation 'androidx.appcompat:appcompat:1.1.0'

implementation 'androidx.constraintlayout:constraintlayout:1.1.3'

testImplementation 'junit:junit:4.12'

androidTestImplementation 'androidx.test.ext:junit:1.1.1'

androidTestImplementation 'androidx.test.espresso:espresso-core:3.2.0'

//ARCore

implementation 'com.google.ar:core:1.0.0'

implementation 'de.javagl:obj:0.2.1'

implementation 'com.google.android.material:material:1.1.0'

implementation 'com.android.support:appcompat-v7:27.0.2'

implementation 'com.android.support:design:27.0.2'

//Video

implementation 'io.agora.rtc:full-sdk:2.9.4'

}

Sync the project after the changes. Add the following project permissions in /app/src/main/AndroidManifest.xml file:

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.BLUETOOTH" />

In order to run ARCore, we also need to add the following in the AndroidManifest.xml file. This indicates that this application requires ARCore.

<uses-feature

android:name="android.hardware.camera.ar"

android:required="true" />

<application

...

<meta-data

android:name="com.google.ar.core"

android:value="required" />

</application>

Create UI

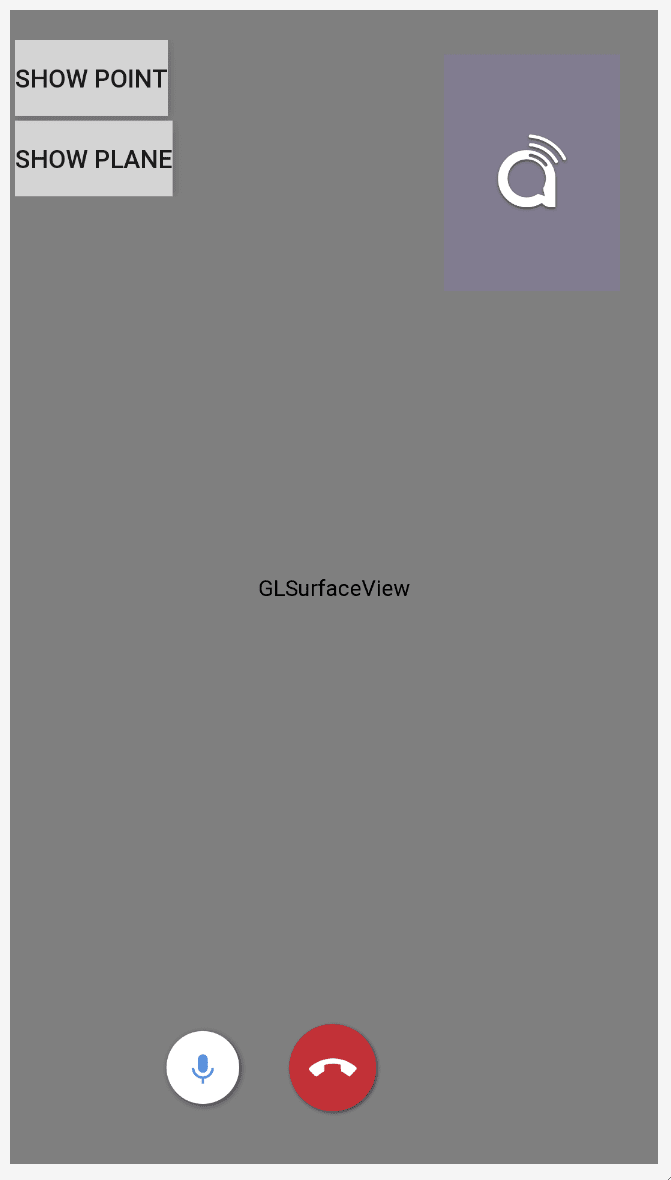

Let’s create the UI components for both users. We will refer to the user who is sharing their world with AR as the “streamer”, and to the other user, who joins the channel to provide support, as the “audience”. Below is an example UI that I will be using:

Please Note: You can find the .xml files for the UI here.

The main difference between the streamer UI and the audience UI is that the streamer UI uses android.opengl.GLSurfaceView to render the AR camera view, whereas the audience UI uses a RelativeLayout to render the video coming from the streamer.

The container in the top right corner of the streamer’s UI is for rendering the remote video coming from the audience’s local camera. The container in the top right corner of the audience’s UI is for rendering the audience’s local camera view.

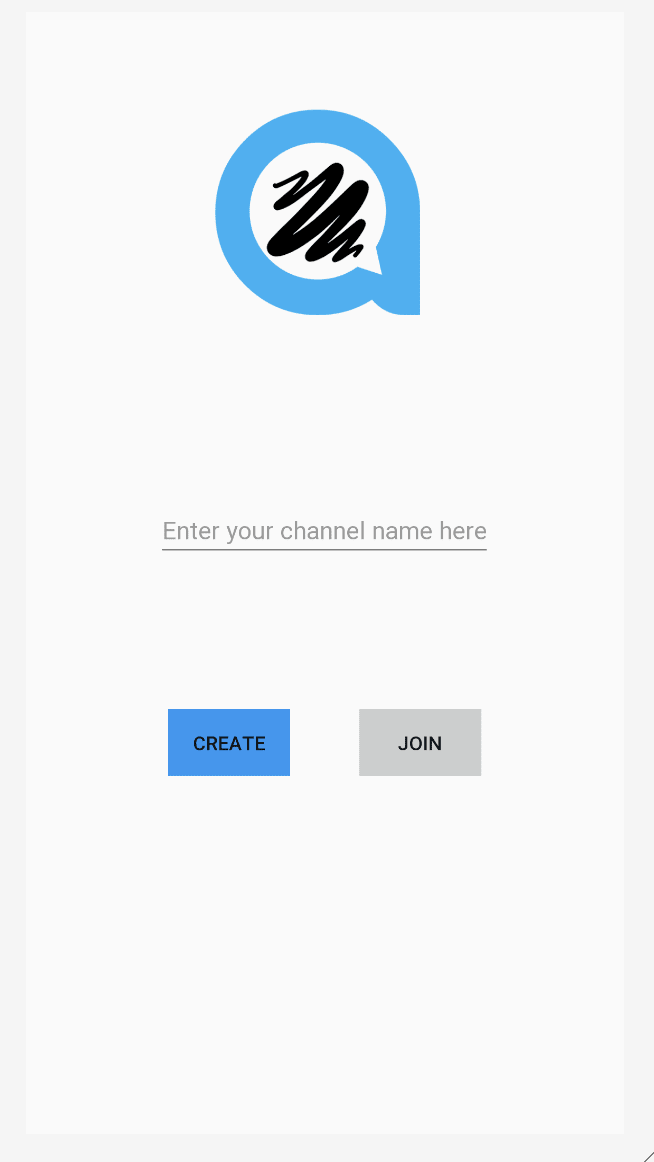

We will also create a screen for the user to enter the channel name and join the channel as a streamer or audience.

Please Note: You can find the .xml files for the Join Channel UI here.

The logic under the Channel UI is very simple. If the user clicks the CREATE button, it will jump to the streamer’s activity page which handles streaming logic. If the user clicks the JOIN button, it will jump to the audience's activity page which handles audience interaction logic. Now, we need to define the logic for both activities.

Set Up ARCore

Setting up ARCore in the project is not as difficult as most people think. In the onResume() method of the streamer activity, we need to create a Session instance. The Session manages the AR system state and handles the lifecycle, and we can use it to receive frames that allow access to camera images. Before creating it, however, we have to check that ARCore is installed.

@Override

protected void onResume() {

super.onResume();

if (mSession == null) {

String message = null;

try {

switch (ArCoreApk.getInstance().requestInstall(this, !installRequested)) {

case INSTALL_REQUESTED:

installRequested = true;

return;

case INSTALLED:

break;

}

// ARCore requires camera permissions to operate. If we did not yet obtain runtime permission on Android M and above, now is a good time to ask the user for it.

if (!CameraPermissionHelper.hasCameraPermission(this)) {

CameraPermissionHelper.requestCameraPermission(this);

return;

}

mSession = new Session(this);

} catch (Exception e) {

...

}

// Create default config and check if supported.

Config config = new Config(mSession);

if (!mSession.isSupported(config)) {

showSnackbarMessage("This device does not support AR", true);

}

...

}

Implement GLSurfaceView.Renderer

We will use the GLSurfaceView to render the AR camera. In order to do that, the streamer activity should implement GLSurfaceView.Renderer. There are three functions that need to be overridden: onSurfaceCreated, onSurfaceChanged and onDrawFrame.

Override onSurfaceCreated

In the method onSurfaceCreated, which is usually called at the beginning of the rendering, we will need to do some initializations for the AR scene.

- Create a BackgroundRenderer instance and pass its texture ID to the session as the camera texture.

- Initialize the 3D object. This 3D object will be rendered in the streamer’s AR world later.

- Initialize the plane detection renderer.

- Initialize the point cloud.

@Override

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

GLES20.glClearColor(0.1f,0.1f,0.1f,1.0f);

// Create the texture and pass it to ARCore session to be filled during update().

mBackgroundRenderer.createOnGlThread(/*context=*/ this);

if (mSession != null) {

mSession.setCameraTextureName(mBackgroundRenderer.getTextureId());

}

// Prepare the other rendering objects.

try {

mVirtualObject.createOnGlThread(/*context=*/this, "andy.obj", "andy.png");

mVirtualObject.setMaterialProperties(0.0f, 3.5f, 1.0f, 6.0f);

mVirtualObjectShadow.createOnGlThread(/*context=*/this,

"andy_shadow.obj", "andy_shadow.png");

mVirtualObjectShadow.setBlendMode(ObjectRenderer.BlendMode.Shadow);

mVirtualObjectShadow.setMaterialProperties(1.0f, 0.0f, 0.0f, 1.0f);

} catch (IOException e) {

...

}

try {

mPlaneRenderer.createOnGlThread(/*context=*/this, "trigrid.png");

} catch (IOException e) {

...

}

mPointCloud.createOnGlThread(/*context=*/this);

}

Override onSurfaceChanged

In the onSurfaceChanged method, which is called after the surface is created and when the surface size changes, we will set our viewport.

@Override

public void onSurfaceChanged(GL10 gl, int width, int height) {

...

GLES20.glViewport(0, 0, width, height);

}

Override onDrawFrame

In the method onDrawFrame, which is called to draw the current frame, we need to implement the render logic for the GLSurfaceView.

- Clear screen.

- Get the latest frame from the ARSession.

- Capture user’s taps and check if any planes in the scene were found. If so, create an anchor at that point.

- Draw the background.

- Draw the point cloud.

- Iterate all the anchors and draw the 3D object on each of the anchors.

@Override

public void onDrawFrame(GL10 gl) {

// Clear screen to notify driver it should not load any pixels from previous frame.

GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT | GLES20.GL_DEPTH_BUFFER_BIT);

...

try {

// Obtain the current frame from ARSession. When the configuration is set to

// UpdateMode.BLOCKING (it is by default), this will throttle the rendering to the camera framerate.

Frame frame = mSession.update();

Camera camera = frame.getCamera();

// Handle taps. Handling only one tap per frame, as taps are usually low frequency

// compared to frame rate.

MotionEvent tap = queuedSingleTaps.poll();

if (tap != null && camera.getTrackingState() == TrackingState.TRACKING) {

for (HitResult hit : frame.hitTest(tap)) {

// Check if any plane was hit, and if it was hit inside the plane polygon

Trackable trackable = hit.getTrackable();

// Creates an anchor if a plane or an oriented point was hit.

if ((trackable instanceof Plane && ((Plane) trackable).isPoseInPolygon(hit.getHitPose()))

|| (trackable instanceof Point

&& ((Point) trackable).getOrientationMode()

== Point.OrientationMode.ESTIMATED_SURFACE_NORMAL)) {

// Hits are sorted by depth. Consider only closest hit on a plane or oriented point.

// Cap the number of objects created. This avoids overloading both the

// rendering system and ARCore.

if (anchors.size() >= 250) {

anchors.get(0).detach();

anchors.remove(0);

}

// Adding an Anchor tells ARCore that it should track this position in

// space. This anchor is created on the Plane to place the 3D model

// in the correct position relative both to the world and to the plane.

anchors.add(hit.createAnchor());

break;

}

}

}

// Draw background.

mBackgroundRenderer.draw(frame);

...

if (isShowPointCloud()) {

// Visualize tracked points.

PointCloud pointCloud = frame.acquirePointCloud();

mPointCloud.update(pointCloud);

mPointCloud.draw(viewmtx, projmtx);

// Application is responsible for releasing the point cloud resources after

// using it.

pointCloud.release();

}

...

if (isShowPlane()) {

// Visualize planes.

mPlaneRenderer.drawPlanes(

mSession.getAllTrackables(Plane.class), camera.getDisplayOrientedPose(), projmtx);

}

// Visualize anchors created by touch.

float scaleFactor = 1.0f;

for (Anchor anchor : anchors) {

if (anchor.getTrackingState() != TrackingState.TRACKING) {

continue;

}

// Get the current pose of an Anchor in world space. The Anchor pose is updated

// during calls to session.update() as ARCore refines its estimate of the world.

anchor.getPose().toMatrix(mAnchorMatrix, 0);

// Update and draw the model and its shadow.

mVirtualObject.updateModelMatrix(mAnchorMatrix, mScaleFactor);

mVirtualObjectShadow.updateModelMatrix(mAnchorMatrix, scaleFactor);

mVirtualObject.draw(viewmtx, projmtx, lightIntensity);

mVirtualObjectShadow.draw(viewmtx, projmtx, lightIntensity);

}

} catch (Throwable t) {

...

}

}

Please Note: Some of the concepts are not explained here. Check the GitHub code for more details.
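The anchor cap inside onDrawFrame can be looked at in isolation. Below is a minimal plain-Java sketch of that rule: once 250 anchors exist, the oldest one is detached and dropped before a new one is added. The FakeAnchor class is a hypothetical stand-in for ARCore's Anchor so the snippet runs without the Android SDK; it is not part of the demo app.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of the "cap the number of anchors" rule from onDrawFrame. */
final class AnchorCapSketch {

    /** Hypothetical stand-in for com.google.ar.core.Anchor. */
    static final class FakeAnchor {
        boolean detached = false;

        void detach() {
            detached = true;
        }
    }

    /** Same limit as the one used in onDrawFrame. */
    static final int MAX_ANCHORS = 250;

    private final List<FakeAnchor> anchors = new ArrayList<>();

    /**
     * Adds an anchor. If the cap is already reached, the oldest anchor is
     * detached and removed first. Returns the evicted anchor, or null.
     */
    FakeAnchor add(FakeAnchor anchor) {
        FakeAnchor evicted = null;
        if (anchors.size() >= MAX_ANCHORS) {
            evicted = anchors.remove(0);
            evicted.detach();
        }
        anchors.add(anchor);
        return evicted;
    }

    int size() {
        return anchors.size();
    }

    public static void main(String[] args) {
        AnchorCapSketch sketch = new AnchorCapSketch();
        for (int i = 0; i < 300; i++) {
            sketch.add(new FakeAnchor());
        }
        System.out.println("anchors kept: " + sketch.size());
    }
}
```

Detaching before dropping the reference matters in the real app because a detached anchor tells ARCore it no longer has to track that position.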

Enable Streamer’s Video Call

Set Up Video Call from Streamer

In the onCreate() method of the AgoraARStreamerActivity, let’s do the following:

- Initialize the GLSurfaceView onTouchListener

- Initialize the Agora RtcEngine

- Set up custom video source

- Join channel

1. Initialize the GLSurfaceView onTouchListener

Setting the onTouchListener for the GLSurfaceView allows us to capture the touch position and set an AR anchor at that position.

mGestureDetector = new GestureDetector(this,

new GestureDetector.SimpleOnGestureListener() {

@Override

public boolean onSingleTapUp(MotionEvent e) {

onSingleTap(e);

return true;

}

@Override

public boolean onDown(MotionEvent e) {

return true;

}

});

mSurfaceView.setOnTouchListener(new View.OnTouchListener() {

@Override

public boolean onTouch(View v, MotionEvent event) {

return mGestureDetector.onTouchEvent(event);

}

});

When the GestureDetector detects a single tap, it will trigger the onSingleTap method. In that method, we add this tap to a single tap queue.

private void onSingleTap(MotionEvent e) {

queuedSingleTaps.offer(e);

}

Remember when we created anchors in onDrawFrame method? We polled the user’s taps from the queuedSingleTaps. This is where the taps are added to the queue.
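The hand-off between the touch listener and the render loop can be sketched in plain Java. This is only an illustration under a few assumptions: queuedSingleTaps is taken to be a bounded ArrayBlockingQueue, the capacity of 16 is illustrative, and the Tap class is a hypothetical stand-in for MotionEvent so that the snippet runs without the Android SDK.

```java
import java.util.concurrent.ArrayBlockingQueue;

/** Plain-Java sketch of the tap hand-off between the UI thread and the render thread. */
final class TapQueueSketch {

    /** Hypothetical stand-in for android.view.MotionEvent. */
    static final class Tap {
        final float x;
        final float y;

        Tap(float x, float y) {
            this.x = x;
            this.y = y;
        }
    }

    // Bounded so that a burst of taps cannot grow without limit (capacity is an assumption).
    private final ArrayBlockingQueue<Tap> queuedSingleTaps = new ArrayBlockingQueue<>(16);

    /** UI thread side: offer() never blocks; it returns false and drops the tap if the queue is full. */
    boolean onSingleTap(Tap tap) {
        return queuedSingleTaps.offer(tap);
    }

    /** Render thread side: handle at most one tap per frame; poll() returns null if none is waiting. */
    Tap nextTapForFrame() {
        return queuedSingleTaps.poll();
    }

    public static void main(String[] args) {
        TapQueueSketch sketch = new TapQueueSketch();
        sketch.onSingleTap(new Tap(10f, 20f));
        sketch.onSingleTap(new Tap(30f, 40f));

        Tap first = sketch.nextTapForFrame();  // first frame handles the oldest tap
        Tap second = sketch.nextTapForFrame(); // second frame handles the next one
        Tap none = sketch.nextTapForFrame();   // third frame finds the queue empty
        System.out.println(first.x + ", " + second.x + ", " + (none == null));
    }
}
```

Neither offer() nor poll() blocks, so the UI thread and the GL thread never wait on each other.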

2. Initialize the Agora RtcEngine

To initialize the Agora video engine, call RtcEngine.create(context, appid, RtcEventHandler) to create an RtcEngine instance.

mRtcEngine = RtcEngine.create(this, getString(R.string.private_broadcasting_app_id), mRtcEventHandler);

In order to get the App ID in the parameter, follow these steps:

- Create an Agora project in the Agora Console.

- Click the Project Management tab on the left navigation panel.

- Click “Create” and follow the on-screen instructions to set the project name, choose an authentication mechanism, and click “Submit”.

- On the Project Management page, find the App ID of your project.

The mRtcEventHandler is a handler to manage different events occurring with the RtcEngine. Let’s implement it with some basic event handlers needed for this application.

private IRtcEngineEventHandler mRtcEventHandler = new IRtcEngineEventHandler() {

@Override

public void onJoinChannelSuccess(final String channel, int uid, int elapsed) {

//when local user joined the channel

...

}

@Override

public void onRemoteVideoStateChanged(final int uid, int state, int reason, int elapsed) {

super.onRemoteVideoStateChanged(uid, state, reason, elapsed);

//when remote user join the channel

if (state == Constants.REMOTE_VIDEO_STATE_STARTING) {

runOnUiThread(new Runnable() {

@Override

public void run() {

addRemoteRender(uid);

}

});

}

}

@Override

public void onUserOffline(int uid, int reason) {

//when remote user leave the channel

runOnUiThread(new Runnable() {

@Override

public void run() {

removeRemoteRender();

}

});

}

@Override

public void onStreamMessage(int uid, int streamId, byte[] data) {

//when received the remote user's stream message data

...

}

};

Check the comments at the top of each event handler method for a better understanding of them. For more RtcEngine event handlers that you can use, check the Agora RTC API documentation.

Please Note: Some of the logic to show video views on the screen is hidden. You can check the Github demo app for a better understanding of how to display and remove video views on the screen dynamically.

3. Set Up custom video source

In our application, we want to let the streamer send their AR world to the audience. So the video they send out is from a custom video source instead of a general camera video stream. Luckily, Agora Video SDK provides an API method to send custom video sources.

Create a class called AgoraVideoSource which implements IVideoSource interface. The IVideoSource interface defines a set of protocols to implement the custom video source and pass it to the underlying media engine to replace the default video source.

public class AgoraVideoSource implements IVideoSource {

private IVideoFrameConsumer mConsumer;

@Override

public boolean onInitialize(IVideoFrameConsumer iVideoFrameConsumer) {

mConsumer = iVideoFrameConsumer;

return true;

}

@Override

public boolean onStart() {

return true;

}

@Override

public void onStop() {

}

@Override

public void onDispose() {

}

@Override

public int getBufferType() {

return MediaIO.BufferType.BYTE_ARRAY.intValue();

}

public IVideoFrameConsumer getConsumer() {

return mConsumer;

}

}

In the onCreate method within the AgoraARStreamerActivity, call the constructor method to create the AgoraVideoSource instance.

mSource = new AgoraVideoSource();

Create a class called AgoraVideoRender which implements IVideoSink interface. The IVideoSink interface defines a set of protocols to create a customized video sink and pass it to the media engine to replace the default video renderer.

public class AgoraVideoRender implements IVideoSink {

private Peer mPeer;

private boolean mIsLocal;

public AgoraVideoRender(int uid, boolean local) {

mPeer = new Peer();

mPeer.uid = uid;

mIsLocal = local;

}

public Peer getPeer() {

return mPeer;

}

@Override

public boolean onInitialize() {

return true;

}

@Override

public boolean onStart() {

return true;

}

@Override

public void onStop() {

}

@Override

public void onDispose() {

}

@Override

public long getEGLContextHandle() {

return 0;

}

@Override

public int getBufferType() {

return MediaIO.BufferType.BYTE_BUFFER.intValue();

}

@Override

public int getPixelFormat() {

return MediaIO.PixelFormat.RGBA.intValue();

}

@Override

public void consumeByteBufferFrame(ByteBuffer buffer, int format, int width, int height, int rotation, long ts) {

if (!mIsLocal) {

mPeer.data = buffer;

mPeer.width = width;

mPeer.height = height;

mPeer.rotation = rotation;

mPeer.ts = ts;

}

}

@Override

public void consumeByteArrayFrame(byte[] data, int format, int width, int height, int rotation, long ts) {

}

@Override

public void consumeTextureFrame(int texId, int format, int width, int height, int rotation, long ts, float[] matrix) {

}

}

Similar to the AgoraVideoSource instance, we create the AgoraVideoRender instance by calling its constructor. Here we pass uid as 0 to represent a local video render.

mRender = new AgoraVideoRender(0, true);

After creating two instances, we call

mRtcEngine.setVideoSource(mSource);

mRtcEngine.setLocalVideoRenderer(mRender);

to set the custom AR video source and local video renderer.

However, the video source we set does not have any data in it yet. We need to pass the AR camera view to our video source. To do that, we are going to add logic at the end of the onDrawFrame method that we overrode earlier.

@Override

public void onDrawFrame(GL10 gl) {

...

final Bitmap outBitmap = Bitmap.createBitmap(mSurfaceView.getWidth(), mSurfaceView.getHeight(), Bitmap.Config.ARGB_8888);

PixelCopy.request(mSurfaceView, outBitmap, new PixelCopy.OnPixelCopyFinishedListener() {

@Override

public void onPixelCopyFinished(int copyResult) {

if (copyResult == PixelCopy.SUCCESS) {

sendARView(outBitmap);

} else {

Toast.makeText(AgoraARCoreActivity.this, "Pixel Copy Failed", Toast.LENGTH_SHORT).show();

}

}

}, mSenderHandler);

}

private void sendARView(Bitmap bitmap) {

if (bitmap == null) return;

if (mSource.getConsumer() == null) return;

//Bitmap bitmap = source.copy(Bitmap.Config.ARGB_8888,true);

int width = bitmap.getWidth();

int height = bitmap.getHeight();

int size = bitmap.getRowBytes() * bitmap.getHeight();

ByteBuffer byteBuffer = ByteBuffer.allocate(size);

bitmap.copyPixelsToBuffer(byteBuffer);

byte[] data = byteBuffer.array();

mSource.getConsumer().consumeByteArrayFrame(data, MediaIO.PixelFormat.RGBA.intValue(), width, height, 0, System.currentTimeMillis());

}

The logic here is to copy the GLSurfaceView to a bitmap and send the bitmap buffer to our custom video source.
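One detail that is easy to miss: the frame is handed to the consumer as MediaIO.PixelFormat.RGBA even though the bitmap config is named ARGB_8888. As far as I understand, this works because an ARGB_8888 bitmap stores each pixel's bytes in memory in R, G, B, A order, and copyPixelsToBuffer copies that memory out as-is. The plain-Java sketch below illustrates the byte layout by converting packed 0xAARRGGBB color ints into an RGBA byte array. The class and method names are hypothetical, and it assumes rows have no padding.

```java
/** Plain-Java sketch of the RGBA byte layout that the video source receives. */
final class RgbaPackingSketch {

    /** Converts packed ARGB color ints (0xAARRGGBB) into a tightly packed RGBA byte array. */
    static byte[] argbToRgba(int[] argbPixels) {
        byte[] out = new byte[argbPixels.length * 4];
        for (int i = 0; i < argbPixels.length; i++) {
            int color = argbPixels[i];
            out[i * 4] = (byte) ((color >> 16) & 0xFF);      // red
            out[i * 4 + 1] = (byte) ((color >> 8) & 0xFF);   // green
            out[i * 4 + 2] = (byte) (color & 0xFF);          // blue
            out[i * 4 + 3] = (byte) ((color >>> 24) & 0xFF); // alpha
        }
        return out;
    }

    /** Bytes needed for one frame when rows are not padded: 4 bytes per pixel. */
    static int frameSize(int width, int height) {
        return width * height * 4;
    }

    public static void main(String[] args) {
        // One opaque pixel with red=0x11, green=0x22, blue=0x33.
        byte[] rgba = argbToRgba(new int[] {0xFF112233});
        System.out.printf("%02X %02X %02X %02X%n", rgba[0], rgba[1], rgba[2], rgba[3]);
        System.out.println("bytes per 1080x1920 frame: " + frameSize(1080, 1920));
    }
}
```

The frame size also shows why this approach is costly: a full-screen frame is several megabytes, and it is allocated and copied on every draw.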

4. Join channel

Now, we are ready to join the channel by calling joinChannel() on the RtcEngine instance by passing the channelName from the previous activity.

mRtcEngine.joinChannel(null, channelName, "", 0);

Please Note: The token in the parameter can be set to null.

When this call succeeds and we join the channel, the RtcEngine triggers the onJoinChannelSuccess() callback in the mRtcEventHandler that we implemented in the previous step. The callback provides a unique uid for the local user, which is generated by the Agora server.

At this point, the streamer can join the channel and broadcast their AR world to the audience.

Enable Audience’s Video Call

Enabling the audience’s video call is very similar to what we have written for the streamer. The only difference is that we set up the local camera video after initializing the RtcEngine.

mRtcEngine.enableVideo();

mLocalView = RtcEngine.CreateRendererView(getBaseContext());

mLocalContainer.addView(mLocalView);

mLocalView.setZOrderMediaOverlay(true);

VideoCanvas localVideoCanvas = new VideoCanvas(mLocalView, VideoCanvas.RENDER_MODE_HIDDEN, 0);

mRtcEngine.setupLocalVideo(localVideoCanvas);

This will put the audience’s local camera view on the top right corner of the screen.

Please Note: See the GitHub code for more details.

We are now able to start a video call between streamer and audience. However, this is still not a feature-complete remote assistance app because the audience cannot interact with streamer’s AR world. We’ll start implementing the audience markup feature through ARCore next.

Remote Assistance Feature

Ideally, the remote technician (audience) should be able to provide assistance when they want to direct the customer (streamer) by drawing on the screen. This markup should be rendered on the customer’s side instantly and should remain in the same position as drawn.

To achieve that, we are going to collect the audience member’s touch positions and send them to the streamer. Once the streamer receives those touch points, we can simulate the touches on the streamer’s screen to create AR objects.

Let’s first collect the audience’s touch positions. In AgoraARAudienceActivity’s onCreate method, set up a touch listener on the remote view container. Collect all the touch point positions in relation to the center of the screen. Send them out to the streamer as a data stream message using the Agora API method, sendStreamMessage. This will trigger the streamer’s onStreamMessage callback. Since a user can only send 6kB of data per second, we send out the touch points whenever we collect 10 of them.

mRemoteContainer.setOnTouchListener(new View.OnTouchListener() {

@Override

public boolean onTouch(View v, MotionEvent event) {

switch (event.getAction()) {

case MotionEvent.ACTION_MOVE:

//get the touch position related to the center of the screen

touchCount++;

float x = event.getRawX() - ((float)mWidth / 2);

float y = event.getRawY() - ((float)mHeight / 2);

floatList.add(x);

floatList.add(y);

if (touchCount == 10) {

//send the touch positions when collected 10 touch points

sendMessage(touchCount, floatList);

touchCount = 0;

floatList.clear();

}

break;

case MotionEvent.ACTION_UP:

//send touch positions after the touch motion

sendMessage(touchCount, floatList);

touchCount = 0;

floatList.clear();

break;

}

return true;

}

});
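The batching rule in the listener can be separated from the Android touch API. The plain-Java sketch below collects points and flushes a batch after every 10 points and once more when the finger lifts. Each point is two 4-byte floats, so a full batch is 80 bytes. The class name and the in-memory list of sent batches are hypothetical stand-ins for sendMessage. Note one deliberate difference from the listener above: the sketch skips the flush when no points are pending, so it never sends an empty message.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of the "send every 10 touch points" batching rule. */
final class TouchBatcherSketch {

    static final int BATCH_SIZE = 10;

    private final List<Float> floatList = new ArrayList<>();
    private final List<List<Float>> sentBatches = new ArrayList<>(); // stands in for sendMessage
    private int touchCount = 0;

    /** Mirrors the ACTION_MOVE branch: record the point and flush once a batch is full. */
    void onMove(float x, float y) {
        touchCount++;
        floatList.add(x);
        floatList.add(y);
        if (touchCount == BATCH_SIZE) {
            flush();
        }
    }

    /** Mirrors the ACTION_UP branch: send whatever is left over. */
    void onUp() {
        flush();
    }

    private void flush() {
        if (touchCount == 0) {
            return; // addition in this sketch: do not send empty messages
        }
        sentBatches.add(new ArrayList<>(floatList));
        touchCount = 0;
        floatList.clear();
    }

    List<List<Float>> sentBatches() {
        return sentBatches;
    }

    public static void main(String[] args) {
        TouchBatcherSketch batcher = new TouchBatcherSketch();
        for (int i = 0; i < 23; i++) {
            batcher.onMove(i, -i);
        }
        batcher.onUp();
        for (List<Float> batch : batcher.sentBatches()) {
            System.out.println("batch with " + (batch.size() / 2) + " points");
        }
    }
}
```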

Here is the sendMessage logic:

/**

* send the touch points as a byte array to Agora sdk

* @param touchCount

* @param floatList

*/

private void sendMessage(int touchCount, List<Float> floatList) {

byte[] motionByteArray = new byte[touchCount * 4 * 2];

for (int i = 0; i < floatList.size(); i++) {

byte[] curr = ByteBuffer.allocate(4).putFloat(floatList.get(i)).array();

for (int j = 0; j < 4; j++) {

motionByteArray[i * 4 + j] = curr[j];

}

}

mRtcEngine.sendStreamMessage(dataChannel, motionByteArray);

}
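The wire format used by sendMessage is simply the floats written one after another, 4 bytes each, in ByteBuffer's default big-endian order. As a sketch, the same bytes can be produced with a single buffer instead of one small buffer per float. The class and method names here are hypothetical, and the snippet leaves out the Agora call so that it runs as plain Java.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch of the touch-point wire format: x0, y0, x1, y1, ... as 4-byte floats. */
final class TouchEncoderSketch {

    /** Writes every float into one buffer; the byte order is ByteBuffer's default (big-endian). */
    static byte[] encode(List<Float> floatList) {
        ByteBuffer buffer = ByteBuffer.allocate(floatList.size() * Float.BYTES);
        for (float value : floatList) {
            buffer.putFloat(value);
        }
        return buffer.array();
    }

    public static void main(String[] args) {
        // Two touch points given as offsets from the screen center.
        List<Float> points = Arrays.asList(-100f, 50f, 12.5f, 0f);
        byte[] payload = encode(points);
        System.out.println("payload bytes: " + payload.length);
    }
}
```

Because the payload length follows from the list size, the sketch does not need a separate touchCount parameter.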

Now, in AgoraARStreamerActivity, we need to override the onStreamMessage callback to receive touch points sent by the audience and simulate the touches on the streamer’s screen.

@Override

public void onStreamMessage(int uid, int streamId, byte[] data) {

//when received the remote user's stream message data

super.onStreamMessage(uid, streamId, data);

int touchCount = data.length / 8; //number of touch points from data array

for (int k = 0; k < touchCount; k++) {

//get the touch point's x,y position related to the center of the screen and calculated the raw position

byte[] xByte = new byte[4];

byte[] yByte = new byte[4];

for (int i = 0; i < 4; i++) {

xByte[i] = data[i + 8 * k];

yByte[i] = data[i + 8 * k + 4];

}

float convertedX = ByteBuffer.wrap(xByte).getFloat();

float convertedY = ByteBuffer.wrap(yByte).getFloat();

float center_X = convertedX + ((float) mWidth / 2);

float center_Y = convertedY + ((float) mHeight / 2);

//simulate the clicks based on the touch position got from the data array

instrumentation.sendPointerSync(MotionEvent.obtain(SystemClock.uptimeMillis(), SystemClock.uptimeMillis(), MotionEvent.ACTION_DOWN, center_X, center_Y, 0));

instrumentation.sendPointerSync(MotionEvent.obtain(SystemClock.uptimeMillis(), SystemClock.uptimeMillis(), MotionEvent.ACTION_UP, center_X, center_Y, 0));

}

}
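The decoding half of the callback can be sketched without the Android classes. The plain-Java snippet below reads the payload as consecutive pairs of 4-byte floats and shifts each pair from center-relative offsets back to screen coordinates. The class and method names are hypothetical, and width and height stand in for the streamer's mWidth and mHeight. Since the offsets are in pixels, the result lines up best when both devices have comparable screen sizes.

```java
import java.nio.ByteBuffer;

/** Plain-Java sketch of decoding touch points from a stream message payload. */
final class TouchDecoderSketch {

    /**
     * Returns one {x, y} pair per touch point, converted from center-relative
     * offsets to screen coordinates. Trailing bytes that do not form a full
     * point (8 bytes) are ignored.
     */
    static float[][] decode(byte[] data, int width, int height) {
        int touchCount = data.length / 8;
        ByteBuffer buffer = ByteBuffer.wrap(data);
        float[][] points = new float[touchCount][2];
        for (int k = 0; k < touchCount; k++) {
            points[k][0] = buffer.getFloat() + width / 2f;
            points[k][1] = buffer.getFloat() + height / 2f;
        }
        return points;
    }

    public static void main(String[] args) {
        // Build a payload with two points: (-100, 50) and (0, 0) relative to the center.
        byte[] payload = ByteBuffer.allocate(16)
                .putFloat(-100f).putFloat(50f)
                .putFloat(0f).putFloat(0f)
                .array();
        for (float[] point : decode(payload, 1080, 1920)) {
            System.out.println(point[0] + ", " + point[1]);
        }
    }
}
```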

Build And Test on Device

That’s all you need to build a remote assistance app. Now let’s run our application!

Go to Android Studio, make sure your Android device is plugged in, and click Run to build the application on your device. Remember to build the application on two devices to start the video call. Both devices must run Android API level 24 or higher and originally ship with Google Play Store.

You can check detailed device requirements here.

Done!

Congratulations! You just built yourself a remote assistance application with augmented reality features!

Thank you for following along. Please leave a comment below! If you have any questions, you can reach us at devrel@agora.io.
