Coding the Jamstack missing parts: databases, crons & background jobs

Vincent Voyer

Posted on August 19, 2020

Summary:

For my current product, I use Next.js deployed on Vercel (if you're using Netlify, this article is also for you), and I use AWS for the other services I need: databases, crons, and background jobs.

The AWS parts are deployed with the AWS Cloud Development Kit (CDK), an Infrastructure as Code tool that lets you, for example, create PostgreSQL databases on AWS using JavaScript files.

I talk about AWS in this article, and that might surprise some readers: because of its apparent complexity, you may not think of AWS as a solution for your Jamstack services needs. I thought the same. Then I discovered how easy it was to deploy AWS services using CDK and jumped right in.

From reading issues and forums, I think we share a common struggle: how do you couple a Jamstack application with the essential infrastructure (databases, crons & background jobs) you need to create great products? This article is here to answer that.

Bonus: this article includes a takeaway example you can deploy on AWS, and at the end, a way to get up to $5,000 in AWS credits.

Discovering the Jamstack

As soon as I started to use platforms like Vercel and Netlify, I thought: what about databases, crons, background jobs...? Jamstack platforms are providers that let you easily deploy static websites and API endpoints (through "lambda functions"), but that's it. If you need more than that, you need to use other services.

Coming from Heroku, I was disappointed because it meant I had to spend time researching other solutions, comparing them, and deciding whether to use them.

And on top of that, it would possibly mean having lots of different moving parts.

Why do we even need crons and background jobs?

At some point you need a database, that's obvious. As for crons and background jobs:

Crons

Let's say you're building a product that monitors Twitter for specific words. For this to work, static generators and API routes won't be enough: you need something that regularly calls your API, which in turn checks Twitter for those words. This is a cron job. You cannot rely on user actions to do this work.

Sure, you could use EasyCron, but that would be another service to configure and pay for.

Background jobs

Now, in your UI, you also have a button that generates a PDF report for specific words and sends it to an email address. This is potentially a long-running job, something like 10 seconds. The right way to handle this is a background job: the work gets processed without your user having to wait in your UI for it to finish.

One could argue that this could be handled by an API route that replies immediately and then continues executing code. Unfortunately: 1. this won't work on AWS Lambda (Vercel and Netlify run on AWS, and they document this limitation), and 2. if the job fails, you'd have to record the failure and tell the user to click again.
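The alternative is to have the API route only enqueue the job and answer right away, leaving the slow part to a separate worker. Here is a minimal sketch of that route, shaped like a Next.js API route handler (`res.status(...).json(...)`). The `publishJob` function is a hypothetical stand-in for whatever actually queues the job (for example, publishing to an SNS topic); it is injected so the route can be exercised without AWS.

```javascript
// Sketch of the "enqueue, then reply immediately" pattern for a Next.js API route.
// `publishJob` is hypothetical: in the AWS setup it would wrap a call that
// publishes the job to a queue or topic (e.g. SNS).
function createReportRoute(publishJob) {
  return async function reportRoute(req, res) {
    const { words, email } = req.body || {};

    // Reject bad input before anything gets queued.
    if (!Array.isArray(words) || words.length === 0 || typeof email !== "string") {
      res.status(400).json({ error: "words (non-empty array) and email are required" });
      return;
    }

    // Hand the slow PDF generation to the background worker...
    await publishJob({ type: "generate-pdf-report", words, email });

    // ...and answer right away: 202 Accepted means "queued, not done yet".
    res.status(202).json({ queued: true });
  };
}
```

Because the route never waits for the PDF itself, its latency is only the time it takes to publish the job; if the worker fails, retrying is the queue's responsibility rather than the user's.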

Jamstack platforms like Vercel and Netlify won't help you (for now) whenever you need some code to be executed on behalf of users.

Once I knew that, and knew what I needed, I went solution hunting.

Requirements

For the most part, the solution I was looking for should:

- Be a single solution: I don't want to maintain a job queue hosted on x.com and cron jobs hosted on y.com. That would be a mess. This is the case on Heroku, which has in-house solutions (databases) but also third-party solution providers (queues) with different UIs.

- Be cheap: I am cheap.

- Be on AWS, because I had credits. Plus, most of Vercel's infrastructure is hosted on AWS (same for Netlify), so I expected the network between my Vercel API routes and my database to be good.

- Be easy to administrate, modify, and deploy. Ideally with code, not YAML files, and without requiring me to create separate projects.

Solution

After multiple searches, I settled on using AWS this way:

- database: RDS with the latest PostgreSQL engine

- cron jobs: EventBridge coupled with Lambda

- background jobs: SNS coupled with Lambda. One could also use SQS, which is a real queue system, or a more complex pattern. But I have yet to gain more AWS experience before diving into SQS and its configuration.

For anyone experienced with AWS, this may be the usual stack. It took me days to find and understand those solutions.

Deploying the infrastructure

Great! Now, how do I easily deploy and maintain all of that? As a new user, I found the AWS console pretty good. You can create your infrastructure in just a few clicks and even code your lambda functions inside your browser: amazing.

But I said I needed the solution to "Be easy to administrate, modify and deploy. Ideally with code.".

After more research, I understood that what I was looking for was Infrastructure as Code (IaC) tools to control AWS services. IaC tools range from Python files that configure servers to gigantic YAML files that deploy AWS services.

The right tool for the job: AWS Cloud Development Kit

I found the AWS Cloud Development Kit (CDK) while randomly browsing IaC tools: it is mentioned on the "Pulumi vs. Other Solutions" page (Pulumi is another IaC tool).

Here's an example CDK stack creating a lambda that will get called every two minutes:

```javascript
import * as cdk from "@aws-cdk/core";
import * as events from "@aws-cdk/aws-events";
import * as targets from "@aws-cdk/aws-events-targets";
import { NodejsFunction } from "aws-lambda-nodejs-webpack";

export default class ExampleStack extends cdk.Stack {
  constructor(scope, id, props) {
    super(scope, id, props);

    // we define the lambda, it's a local file that exports a `handler` function
    const checkTwitterLambda = new NodejsFunction(this, "checkTwitterLambda", {
      entry: "jobs/checkTwitter.js",
      timeout: cdk.Duration.seconds(30),
    });

    // run jobs/checkTwitter.js every 2 minutes
    const rule = new events.Rule(this, "ScheduleRule", {
      schedule: events.Schedule.cron({ minute: "*/2" }),
    });

    rule.addTarget(new targets.LambdaFunction(checkTwitterLambda));
  }
}
```

Reading this code, as a JavaScript developer, I immediately thought it was the right tool for me. After using JavaScript on the backend (Node.js) and on the frontend (Next.js), I can now define my infrastructure needs in JavaScript too. CDK is also available for TypeScript, Python, Java and C#/.NET (and they do this through code generation with https://github.com/aws/jsii. Mind, blown.).

Note: it's amazing how good CDK is as a tool, and how poorly it does, for now, SEO-wise. It's extremely hard to stumble on CDK if you're searching for infrastructure tools.

If you're interested in how CDK works, the only issue I had with it, or the other IaC tools I tried, you can read the sections below.

Otherwise, feel free to jump to the next part about the full application example!

The only issue I had with CDK

I hit a single issue with CDK: they run Parcel inside Docker, after first mounting your whole JavaScript project as a Docker volume. Unfortunately, on macOS, mounting big volumes with Docker is notoriously slow. Even with the latest updates to Docker volumes (Mutagen-based file synchronization), it is still slow: mounting a small Next.js project would take between 20 seconds and 2 minutes.

I spent a LOT of time on this issue, and I was lucky to be able to discuss it at length with CDK maintainers like Jonathan Goldwasser. At some point they will provide a Node.js construct that does not use Docker (this is almost released, see: https://github.com/aws/aws-cdk/pull/9632). In the meantime, I created my own construct with webpack, which works pretty well: https://github.com/vvo/aws-lambda-nodejs-webpack.

Other IaC tools I tried: Architect and Pulumi

Architect

Architect looked like what I needed: control AWS services via YAML files while also getting a local environment replicating your AWS infrastructure. I played with it a little and asked some questions on their Slack, only to discover that once you use Architect, your whole codebase should be controlled and deployed by it. You can't easily mix a Next.js codebase deployed on Vercel with cron jobs hosted on AWS and controlled by Architect. Next!

Pulumi

The first real Infrastructure as Code tool (code as in JavaScript code, not YAML files) I tried was Pulumi. Pulumi is multi-cloud. I was happy to find out you could easily create Lambda functions on AWS that would get called regularly, just like cron jobs, while also using SQS, the AWS queue, and linking it to Lambdas as well. I had dumb issues with Pulumi: I couldn't easily use import statements without TypeScript, and all dependencies from my package.json would be installed on AWS instead of only the dependencies my jobs use. Pulumi was the first IaC tool I really tried, and my experience most probably suffered from my lack of investment in it. I am sure it's a wonderful tool, but I needed to try another one. Next!

A full application example

Here's the first takeaway. I spent some time creating an example CDK stack you can reuse: https://github.com/vvo/nextjs-vercel-aws-cdk-example.

If you need a database, crons, or background jobs for your Jamstack application and you do not know where to start: start here and use this example. It will save you time and money. I guarantee that in just an afternoon of work you'll get it working.

The example provides:

- An AWS stack ready to be deployed with CDK, including a PostgreSQL database, sample crons and background jobs as lambdas

- A Next.js application you can deploy on Vercel, with a button to trigger the sample background job on AWS

- Local equivalents to the AWS services for your development environment

You can pick whatever interests you in this example: even if you're using a different framework (Nuxt) or a different Jamstack platform (Netlify), you can still fully benefit from the CDK example stack.

The example also contains more information on how to run it and links to the right documentation you may need.

AWS Cost

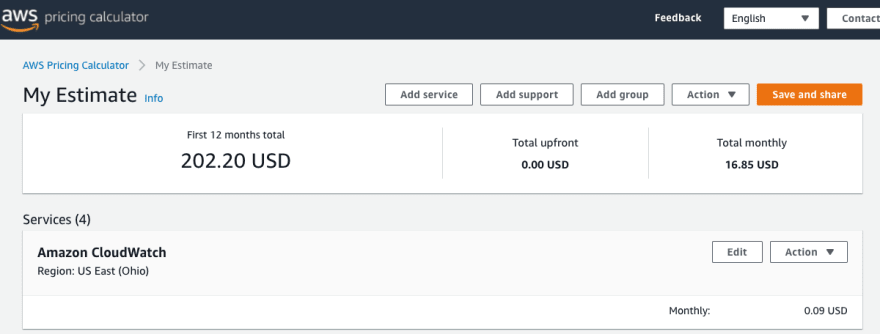

I am still new to AWS, and there are free tiers and credits, but I still tried to estimate the cost of the example application using the AWS Pricing Calculator.

Hypothesis:

- we use a t3.micro, 5GB SSD on-demand instance for the database

- we have 10 cron jobs that run every 5 minutes, for 5 seconds each, and use 300MB of memory each (that's ~90,000 lambda requests)

- we have one background job that runs 10,000 times a month, for 5 seconds each, using 300MB of memory (that's ~10,000 lambda requests)
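The request counts in this hypothesis can be checked with a few lines of arithmetic. This sketch assumes a 30-day month and converts 300MB to GB by dividing by 1024; it computes invocations and GB-seconds (invocations × duration × memory in GB), the two quantities Lambda usage is measured in, without using any prices.

```javascript
// Back-of-the-envelope Lambda usage for the cost hypothesis.
// Assumptions: a 30-day month, and 300MB counted as 300/1024 GB.
const MINUTES_PER_MONTH = 30 * 24 * 60; // 43,200 minutes

// GB-seconds = invocations * seconds per run * memory in GB
function monthlyLambdaUsage({ invocations, seconds, memoryMb }) {
  return { invocations, gbSeconds: invocations * seconds * (memoryMb / 1024) };
}

// 10 cron jobs, each running every 5 minutes: 86,400 invocations (~90,000)
const cronInvocations = 10 * (MINUTES_PER_MONTH / 5);
const cron = monthlyLambdaUsage({ invocations: cronInvocations, seconds: 5, memoryMb: 300 });

// One background job running 10,000 times a month
const background = monthlyLambdaUsage({ invocations: 10000, seconds: 5, memoryMb: 300 });

const total = {
  invocations: cron.invocations + background.invocations,
  gbSeconds: cron.gbSeconds + background.gbSeconds,
};

console.log(total); // { invocations: 96400, gbSeconds: 141210.9375 }
```

That's roughly 96,400 requests and about 141,000 GB-seconds a month. For scale, the Lambda free tier covers 1 million requests and 400,000 GB-seconds per month, so the Lambda side of this hypothesis fits within it.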

The AWS cost would be $16.85/month without the free tier. You can find the computation here: https://calculator.aws/#/estimate?id=8c37f67bc09a5142d821c25ae3478959ea8ce6f3

And if we switch the t3.micro for a t2.micro and use the free tier, then the monthly AWS cost is $0. I've used the t2.micro and, frankly, it is performant enough for a product launch.

Next steps

This setup is good enough, but far from perfect for sure. I expect AWS experts to roast me when they see it. I see multiple next steps and will probably write more blog posts as I make progress in my AWS usage. Here's a list of things that could be better, or that I don't yet know how to solve:

Secrets handling: as soon as you use both Vercel and AWS, you will have "secrets" like database credentials that live both in AWS Secrets Manager and in Vercel environment variables. Ideally they should be in a single place (AWS Secrets Manager) and then read from Vercel. I am not sure yet what the right path is.

Dual, manual deployments: right now, whenever I push my code to GitHub, Vercel deploys my application to its servers, fine! But for AWS, I need to manually run `yarn cdk deploy` to bring my lambdas and infrastructure up to date. Ideally everything (infrastructure, lambdas, migrations, frontend) should be deployed automatically when I push to GitHub.

Pull request handling: if I open a pull request on GitHub, the whole infrastructure (database, frontend) should be deployed in an isolated way. This is not done yet either.

Error handling and retries: right now, if a cron or a background job fails, it will be retried, but only up to a certain point (Lambda retries). I know this could bite me in the future, so we'll see.
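One way to lean less on Lambda's invocation-level retries is to retry transient failures (a flaky API call, a dropped database connection) inside the job itself, with exponential backoff. This is a minimal sketch of that technique, not part of the example stack. It assumes the retried step is safe to repeat, and that the total waiting time stays well under the Lambda timeout (30 seconds in the CDK example above).

```javascript
// Sketch: retry a flaky step inside a job, with exponential backoff.
// Assumptions: the step is safe to run more than once, and the total delay
// stays well under the Lambda timeout.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function withRetries(step, { attempts = 3, baseDelayMs = 200 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await step(attempt);
    } catch (err) {
      lastError = err;
      // Wait baseDelayMs, then 2x, 4x, ... between attempts
      // (with the defaults: 200ms, then 400ms; no wait after the last attempt).
      if (attempt < attempts) await sleep(baseDelayMs * 2 ** (attempt - 1));
    }
  }
  // Out of attempts: rethrow so the invocation fails and Lambda's own retries apply.
  throw lastError;
}
```

Inside a handler, a step such as a network call would be wrapped as `await withRetries(() => someFlakyCall())`, where `someFlakyCall` stands for whatever operation tends to fail transiently. Rethrowing at the end keeps Lambda's retries as the outer safety net.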

PS: AWS credits 💰💰💰

I promised you you could get up to $5,000 in AWS credits, here's how:

👉 Secret has more than 150 offers for credits on Algolia, Twilio, Airtable, Notion... You can save thousands of dollars, immediately. Use my promo link: https://www.joinsecret.com/?via=vincentvoyer to get a 20% discount on the Platinum or any other Secret plan.

You can also get $1,000 in AWS credits with AWS Activate Founders, directly on aws.com/activate. And if you've already done that, you can still get $4,000 from Secret!

You don't need to be inside a startup incubator to benefit from those offers.

Thanks for reading!

If you enjoyed this post, follow me on Twitter @vvoyer, share the article, and discuss on Hacker News.
