What the heck is GPT-3 anyway?

Vitor Paladini

Posted on July 20, 2020

If you were even minimally active on the internet last week, you've probably seen the term "GPT-3" all over the place.

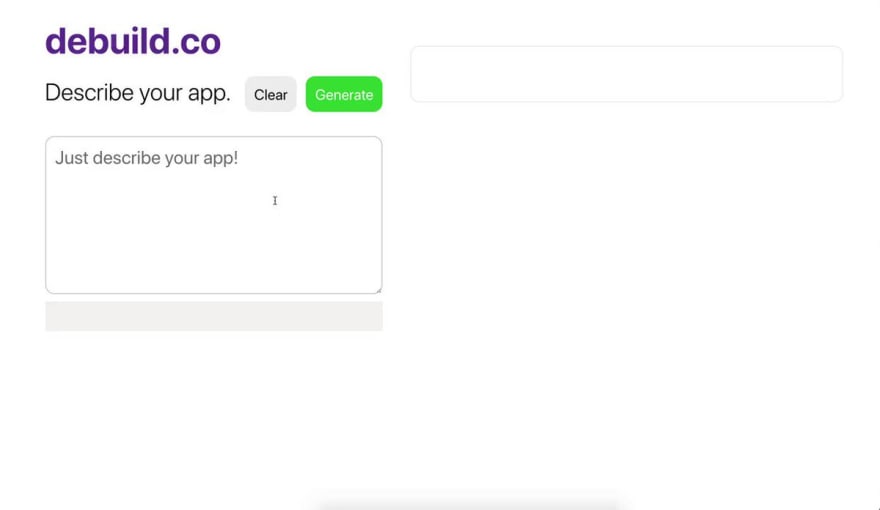

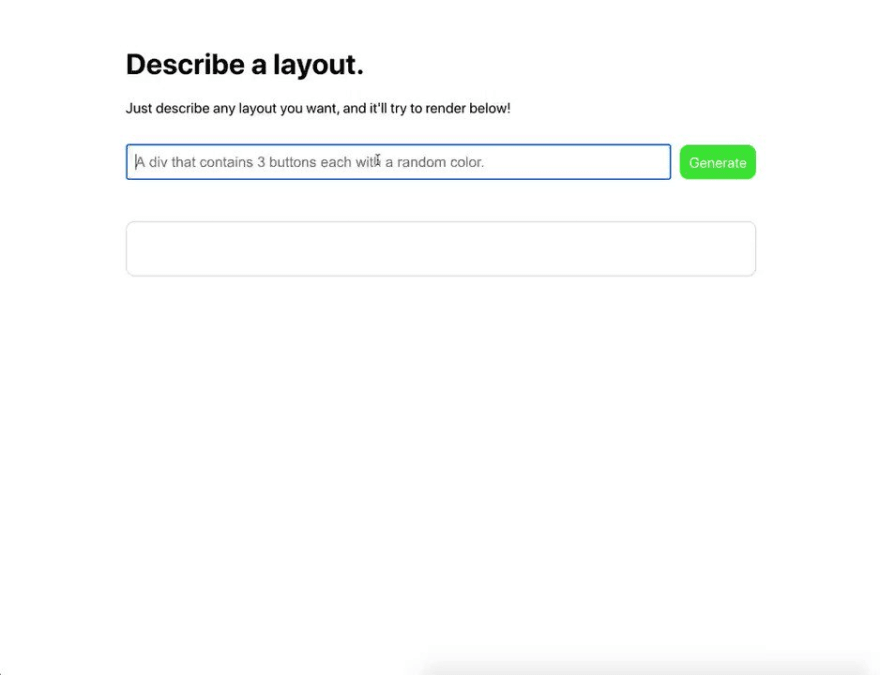

Be it in a JSX code generator, an essay generator, or a text-to-UI Figma plugin, the results GPT-3 seems to provide are impressive, if a little bit scary.

But most of the tweets promoting it don't dwell much on what it is or how it works, so I'm writing this article to satisfy my curiosity, and hopefully yours.

Please note that I'm a complete layman on the subject, so I'm not trying to draw any conclusions here. I'm only patching together bits of info about it so you don't have to.

So, yeah, what is this GPT-3 thing anyway?

GPT stands for Generative Pretrained Transformer, and the "3" means it's the third generation. You can think of it as a tool that, given an initial sample of text, tries to predict what should come next.
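To make the "predict what comes next" idea a bit more concrete, here's a toy sketch in Python. It's a huge simplification and an assumption on my part that it helps: GPT-3 is a giant Transformer neural network, not a table of word counts like this one. The helpers `train_bigrams`, `predict_next` and `generate` are hypothetical names made up for illustration. They count which word follows which in a tiny made-up corpus, then repeatedly pick the most frequent follower.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Toy illustration only: GPT-3 uses a Transformer neural network,
# not simple word-pair counts like this hypothetical model.


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word seen most often after `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily extend the prompt one predicted word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:  # no data about this word, so stop
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))
print(generate(model, "the dog", max_words=4))
```

The first call prints `cat`, since "cat" follows "the" twice in the corpus, and the second prints `the dog sat on the cat`, which is grammatical-ish nonsense. Real language models look at far more context than just the previous word, which is part of why GPT-3's output is so much more coherent.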

GPT-3 was created by OpenAI, "an AI research and deployment company based in San Francisco, California" whose mission is "to ensure that artificial general intelligence benefits all of humanity", as per their about page.

Also, OpenAI has people like Sam Altman and Elon Musk, plus some impressive researchers like Ilya Sutskever and John Schulman behind it. So, yeah, they're pretty big.

Can I play with it right now?

That's highly unlikely. OpenAI's API (which I presume is where GPT-3 runs) is in a free private beta while they work out their pricing strategy.

Their sign-up form also mentions academic access, so you may try your luck, but it seems you'd need to provide a solid use case or a good reason for needing it.

How does it work?

I'm not qualified to answer this question, sorry.

But I've found a very in-depth article about GPT-2, which, I'm assuming, might share its architecture and core concepts with GPT-3.

There's also an article titled "OpenAI's GPT-3 Language Model: A Technical Overview", where the author covers the hardware and training side of it.

And finally, Delian Asparouhov, a principal at Founders Fund, also shared his "Quick thoughts on GPT-3", where he states:

The simplest way to explain how it works is that it analyzes a massive sample of text on the internet, and learns to predict what words come next in a sentence given prior context. Based on the context you give it, it responds to you with what it believes is the statistically most likely thing based on learning from all this text data

Also, he seems to be losing his mind a little bit.

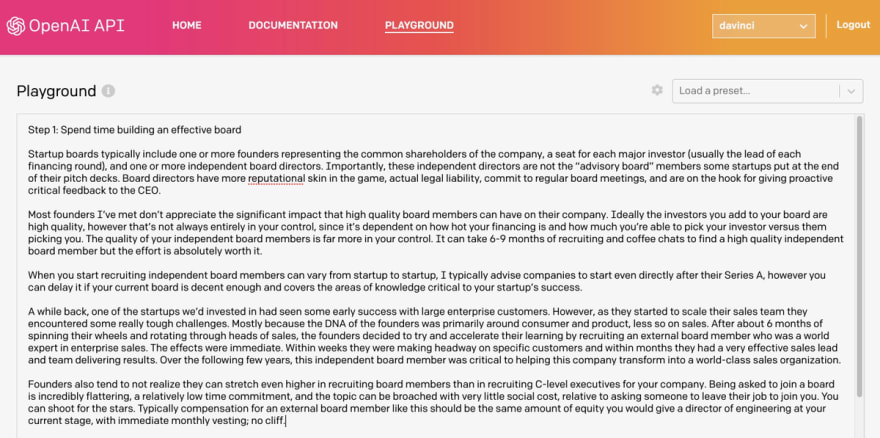

delian (@zebulgar), 00:52 AM - 17 Jul 2020:

Omfg, ok so I fed GPT3 the first half of my "How to run an Effective Board Meeting" (first screenshot)

AND IT FUCKIN WROTE UP A 3-STEP PROCESS ON HOW TO RECRUIT BOARD MEMBERS THAT I SHOULD HONESTLY NOW PUT INTO MY DAMN ESSAY (second/third screenshot)

IM LOSING MY MIND

Oh, and if you want to dive really deep (I didn't), you can go ahead and check out the GPT-3 paper itself: "Language Models are Few-Shot Learners".

I'm a front-end developer, is it going to steal my job?

I don't think so, at least not right now.

It certainly raises some interesting questions about automation and which hurdles "coding" in English might help remove. It may make coding easier for beginners, or completely replace the boring parts of coding, leaving us more time and energy for actually productive work. Who knows?

And I know those tweets can be a bit frightening at first glance, but I'm excited to see how this type of technology acts as fuel for new development tools.

So, what do you think of it? I'd love to hear insights from AI/ML people about it.

Hey, let's connect 👋

Follow me on Twitter and let me know if you liked this article!

And if you really liked it, make sure to share it with your friends, that'll help me a lot 😄
