Dog Breed Classifier Using CNN Algorithms

usamnet000

Posted on July 4, 2020

Over thousands of years, dogs have helped humans hunt and manage livestock, guarded homes and farms, and played critical roles in major wars. Differences in ability and appearance, along with the emotional bond between dogs and humans, have produced more than 350 distinct breeds, each of which is a closed breeding population that reflects a specific set of characteristics. In this project, I build a convolutional neural network (CNN) that can classify the breed of a dog from any user-supplied image. If the image is of a human and not a dog, the algorithm provides an estimate of the dog breed that the person most resembles.

Principal Objectives

- Classify dog breeds correctly and accurately

- Save the user time in determining the breed, whether the image shows a human or a dog

- Choose the best classification algorithms to achieve the two previous goals

Your city is hosting a citywide dog show and you have volunteered to help the organizing committee with contestant registration. Every participant that registers must submit an image of their dog along with biographical information about their dog. The registration system tags the images based upon the biographical information.

Some people are planning on registering pets that aren’t actual dogs.

You need to use an already developed Python classifier to check whether each submitted image shows a dog or a human.

Using your Python skills, you will determine which image classification algorithm works "best" at classifying images as "dogs" or "not dogs".

Determine how well the "best" classification algorithm works on correctly identifying a dog's breed.

If you are confused by the term image classifier, look at it simply as a tool that has an input and an output. The input is an image. The output determines what the image depicts (for example, a dog). Be mindful of the fact that image classifiers do not always categorize images correctly.

I tried several algorithms to solve the classification problem. With computational tasks, there is often a trade-off between accuracy and runtime: the more accurate an algorithm is, the more likely it is to take longer to run and to use more computational resources.
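One way to make this trade-off concrete is to measure accuracy and wall-clock time side by side for each candidate. The sketch below is only an illustration: `evaluate`, `always_dog`, and `by_name` are hypothetical names, and the "images" are plain filename strings standing in for real image data.

```python
import time

def evaluate(classifier, images, labels):
    """Return (accuracy, elapsed_seconds) for a classifier on labelled images."""
    start = time.perf_counter()
    predictions = [classifier(image) for image in images]
    elapsed = time.perf_counter() - start
    correct = sum(pred == label for pred, label in zip(predictions, labels))
    return correct / len(labels), elapsed

# Hypothetical stand-in classifiers (assumptions, not the models from this project).
def always_dog(image):
    return "dog"

def by_name(image):
    return "dog" if "dog" in image else "not dog"

images = ["dog_01.jpg", "cat_01.jpg", "dog_02.jpg", "teapot.jpg"]
labels = ["dog", "not dog", "dog", "not dog"]

results = {
    name: evaluate(fn, images, labels)
    for name, fn in [("always_dog", always_dog), ("by_name", by_name)]
}

for name, (accuracy, seconds) in results.items():
    print(f"{name}: accuracy={accuracy:.2f}, runtime={seconds:.6f}s")
```

With real CNN architectures, the same loop would call each model's prediction function instead of the toy classifiers.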

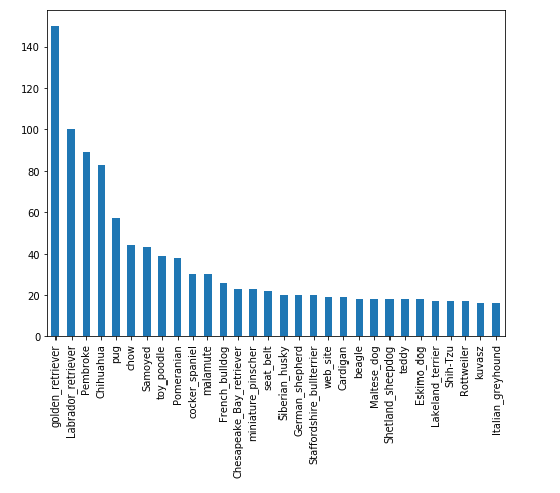

For this image classification task you will use an image classification application built on a deep learning model called a convolutional neural network (often abbreviated as CNN). CNNs work particularly well for detecting features in images, such as colors, textures, and edges, and then using these features to identify objects in the images. You'll use a CNN that has already learned its features from ImageNet, a giant dataset of 1.2 million images. There are different types of CNNs with different structures (architectures) that work better or worse depending on your criteria. In this project you'll explore four different architectures (VGG19, ResNet50, InceptionV3, and Xception) and determine which is best for your application.

Certain breeds of dog look very similar. The more images of two similar looking dog breeds that the algorithm has learned from, the more likely the algorithm will be able to distinguish between those two breeds. We have found the following breeds to look very similar: Great Pyrenees and Kuvasz, German Shepherd and Malinois, Beagle and Walker Hound, amongst others.

The rare and widespread origins of the breed of dogs

What is a CNN (Convolutional Neural Network)?

Convolutional neural networks are deep artificial neural networks that are used primarily to classify images, cluster them by similarity (photo search), and perform object recognition within scenes. They are algorithms that can identify faces, individuals, street signs, tumors, platypuses and many other aspects of visual data.

Convolutional networks perform optical character recognition (OCR) to digitize text and make natural-language processing possible on analog and hand-written documents, where the images are symbols to be transcribed. CNNs can also be applied to sound when it is represented visually as a spectrogram. More recently, convolutional networks have been applied directly to text analytics as well as graph data with graph convolutional networks.

The Convolution operation

The model learns by sliding a small filter (a window, or kernel) over the full image and learning the specific patterns that the filter responds to. In the early convolutional layers, the model learns small details of the image such as lines, curves, and object edges. As it goes deeper into the layers, the model learns more complex shapes and parts of images.
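The sliding-window idea can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used by any deep learning library: it assumes a single-channel image stored as a list of lists, no padding, and a stride of 1. Like most deep learning code, it computes a cross-correlation (the kernel is not flipped). The name `convolve2d` and the hand-written vertical-edge kernel are chosen for this example only; in a real CNN the kernel values are learned.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the window whose top-left corner is at (i, j).
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# A tiny image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The response is zero over the flat regions and positive only where the window straddles the edge, which is the sense in which a filter "detects" a pattern.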

How do the layers work?

A convolutional neural network consists of an input layer and an output layer, as well as multiple hidden layers. The hidden layers of a CNN typically consist of convolutional layers, ReLU layers (the activation function), pooling layers, fully connected layers, and normalization layers.

- Convolutional: Convolutional layers consist of a rectangular grid of neurons. They require that the previous layer also be a rectangular grid of neurons. Each neuron takes inputs from a rectangular section of the previous layer; the weights for this rectangular section are the same for each neuron in the convolutional layer.

- Max-Pooling: After each convolutional layer, there may be a pooling layer. The pooling layer takes small rectangular blocks from the convolutional layer and subsamples each one to produce a single output per block. There are several ways to do this pooling, such as taking the average, the maximum, or a learned linear combination of the neurons in the block.

- Fully-Connected: Finally, after several convolutional and max-pooling layers, the high-level reasoning in the neural network is done via fully connected layers. A fully connected layer takes all neurons in the previous layer (be it fully connected, pooling, or convolutional) and connects them to every one of its own neurons.
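The layer types described above can be illustrated with a short plain-Python sketch. It is a simplification under stated assumptions: a single-channel feature map stored as a list of lists, non-overlapping 2x2 max pooling, and hand-picked weights and biases (in a trained network these are learned). The function names `relu`, `max_pool`, and `fully_connected` are chosen for this example.

```python
def relu(feature_map):
    """Apply the ReLU activation: negative values become zero."""
    return [[max(0, value) for value in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size block."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            block = [feature_map[i + di][j + dj]
                     for di in range(size) for dj in range(size)]
            row.append(max(block))
        pooled.append(row)
    return pooled

def fully_connected(inputs, weights, biases):
    """Each output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
            for neuron_weights, bias in zip(weights, biases)]

feature_map = [
    [1, -2, 3, 0],
    [4, 5, -6, 2],
    [-1, 0, 2, 2],
    [3, 1, 0, -4],
]

activated = relu(feature_map)
pooled = max_pool(activated)                    # 4x4 -> 2x2
flat = [value for row in pooled for value in row]

# Illustrative weights for two output neurons (assumed values, not learned).
weights = [[1, 0, 0, 0], [0.5, 0.5, 0.5, 0.5]]
biases = [0, -1]
scores = fully_connected(flat, weights, biases)

print(pooled)  # [[5, 3], [3, 2]]
print(scores)  # [5, 5.5]
```

Pooling shrinks the 4x4 map to 2x2 while keeping the strongest response in each block, and the fully connected layer then mixes every pooled value into each output score.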

Building the CNN algorithm’s architecture

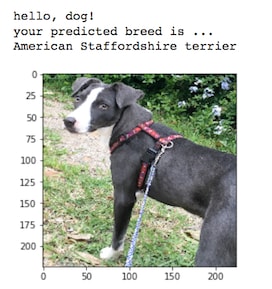

In the notebook, you will take the first steps towards developing an algorithm that could be used as part of a mobile or web app. At the end of this project, your code will accept any user-supplied image as input. If a dog is detected in the image, it will provide an estimate of the dog's breed. If a human is detected, it will provide an estimate of the dog breed that the person most resembles. The accompanying image displays potential sample output of your finished project (... but we expect that each student's algorithm will behave differently!).

In this real-world setting, you will need to piece together a series of models to perform different tasks; for instance, the algorithm that detects humans in an image will be different from the CNN that infers dog breed. There are many points of possible failure, and no perfect algorithm exists. Your imperfect solution will nonetheless create a fun user experience!
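The way these models fit together can be sketched as a small dispatch function. This is only an outline under assumptions: `describe_image`, `is_dog`, `is_human`, and `predict_breed` are hypothetical names, the order of the checks is a design choice made for this example, and the stub detectors below stand in for the real human detector and CNNs.

```python
def describe_image(image, is_dog, is_human, predict_breed):
    """Combine two detectors and a breed classifier into one response."""
    if is_dog(image):
        return f"Dog detected. Estimated breed: {predict_breed(image)}"
    if is_human(image):
        return f"Human detected. Most resembling breed: {predict_breed(image)}"
    return "Neither a dog nor a human was detected."

# Stub models keyed on the file name, for illustration only.
def stub_is_dog(image):
    return image.startswith("dog")

def stub_is_human(image):
    return image.startswith("human")

def stub_predict_breed(image):
    return "Beagle"

for path in ["dog_01.jpg", "human_01.jpg", "teapot.jpg"]:
    print(path, "->", describe_image(path, stub_is_dog, stub_is_human, stub_predict_breed))
```

Passing the models in as arguments keeps each one replaceable, which is convenient when comparing several architectures for the breed classifier.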

The Road Ahead

We break the notebook into separate steps. Feel free to use the links below to navigate the notebook.

- Step 0: Import Datasets

- Step 1: Detect Humans

- Step 2: Detect Dogs

- Step 3: Create a CNN to Classify Dog Breeds (from Scratch)

- Step 4: Use a CNN to Classify Dog Breeds (using Transfer Learning)

- Step 5: Create a CNN to Classify Dog Breeds (using Transfer Learning)

- Step 6: Write your Algorithm

- Step 7: Test Your Algorithm

You can find the code on my GitHub.

Conclusion

To further increase the accuracy of the model, we can apply data augmentation to the training data. In real images, the dog can be positioned anywhere in the frame, vertically or horizontally, and it may also appear at an angle or be only partially visible. Augmenting the training set with such variations can therefore improve the model's accuracy.
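As a rough illustration of what augmentation does, the sketch below applies a random horizontal flip and a small horizontal shift to an image stored as a list of lists. It is a toy version under assumptions: the function names are made up for this example, the shift is limited to one pixel, and a real training pipeline would normally use a library's augmentation utilities and also include rotations and vertical shifts.

```python
import random

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def shift_right(image, pixels, fill=0):
    """Shift every row right by `pixels` (0 <= pixels <= width), padding with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in image]

def augment(image, rng):
    """Return a randomly flipped and shifted copy; the input is left unchanged."""
    out = horizontal_flip(image) if rng.random() < 0.5 else [row[:] for row in image]
    return shift_right(out, rng.randint(0, 1))

image = [
    [1, 2, 3],
    [4, 5, 6],
]

rng = random.Random(0)  # seeded so that runs are repeatable
augmented_batch = [augment(image, rng) for _ in range(4)]
for variant in augmented_batch:
    print(variant)
```

Each variant keeps the original label, so the model sees the same dog in several positions and orientations without any new photos being collected.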
