Simple Model: Naive Bayes

Timothy Cummins

Posted on September 9, 2020

When starting a Machine Learning project it is wise to run a "simple" model first so that you can find important features in your dataset, have something to compare your more complicated models to and you might even get a surprise of getting some good results right off the bat. For classification problems I would recommend running Naive Bayes. Since Naive Bayes is a very uncomplicated and very fast classification algorithm, it is probably the best thing to run when you have a large amount of data and few features.

In this blog I will go through what Bayes Theorem is, what makes Naive Bayes naive and then give a short tutorial on using the Naive Bayes Algorithm with "sklearn".

Bayes Theorem

Bayes Theorem describes the probability of something happening, given that something else has occurred. This conditional probability uses prior knowledge of an event to give us the probability of the event occuring. The formula is:

P(A|B) The probability of A given that B is True.

P(B|A) The probability of B given that A is True.

P(A) The probability of A being True.

P(B) The probability of B being True.

Naive

Naive Bayes expands Bayes Theorem to account for multiple variables by making assumptions that these features are independent of one another. Though when using this model these features may not actually be independent of one another and that is where we get the naivety, though it can still produce strong results with clean and normalized datasets. Letting us expand the formula like so:

Now that we have made these assumptions we can estimate an overall probability by multiplying the conditional probabilities for each of the independent features.

Model

So let's see the Naive Bayes in action. To make this example easy to follow along with if you are trying it at home I will be using the wine dataset from sklearn. So first lets get all of the packages we need imported.

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import GaussianNB

from sklearn.datasets import load_wine

from sklearn import metrics

import pandas as pd

import numpy as np

Now we can load in the dataset and personally I prefer the layout of pandas so I am going to format it as well.

wine = load_wine()

data = pd.DataFrame(data= np.c_[wine['data'], wine['target']],

columns= wine['feature_names'] + ['target'])

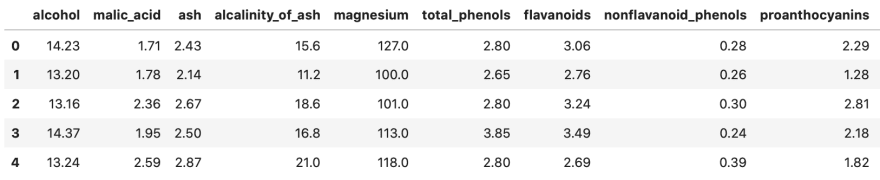

So if we take a look at what we have it should be looking like this with a few more features:

Then from here it is just splitting the data into training and testing groups then fitting it into the model.

X=data.drop('target',axis=1)

y=data['target']

Xtrain, Xtest, ytrain, ytest = train_test_split(X,y)

model = GaussianNB()

model.fit(Xtrain,ytrain)

Now lets have it predict our values and see how the model did.

ypred = model.predict(Xtest)

print(metrics.classification_report(ytest, ypred))

print(metrics.confusion_matrix(ytest, ypred))

Wow pretty awesome for almost instant processing!

Conclusion

I hope this helps you understand a little more about Naive Bayes and how it is useful. It is very fast for classification and as a result very good for large amounts of data. Naive Bayes is actually used in most junk mail applications!

Posted on September 9, 2020

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.