How to Build a Perfect API Description

Vedran Cindrić

Posted on February 23, 2024

This article originally appeared on the Treblle blog and has been republished here with permission.

This article looks at improving the overall quality of your OpenAPI descriptions, focusing on both keywords and tools. The emphasis is on tools, because we also want to introduce our new API testing app.

You're starting out describing your API with OpenAPI, you've read the OpenAPI Documentation, you've even read the OpenAPI Specification, now you want to make sure you're doing it well. How can you know?

There are a few quick bits of advice to start with.

- Use YAML over JSON for your OpenAPI. Nobody wants to mess with brackets. Convert it automatically somewhere in your pipeline if you need JSON for a specific tool.

- Name your main file openapi.yaml. This is recommended by the OpenAPI Specification, and using .yml will cause confusion over and over for years.

- Use OpenAPI v3.1.0. It's the best version yet by far. If you find any tools that do not support it, either use another tool, or chip in some sponsorship money to get them there.
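To make that advice concrete, here is a minimal sketch of what an openapi.yaml following it might start out as. The title, version, path, and operationId are placeholders invented for illustration, not taken from any real API.

```yaml
# openapi.yaml (illustrative skeleton; every name below is a placeholder)
openapi: 3.1.0
info:
  title: Example API
  version: 1.0.0
paths:
  /stations:
    get:
      operationId: get-stations
      summary: List stations
      responses:
        "200":
          description: A list of stations.
```

Everything else in this article builds on a file shaped like this one.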

Getting a Jump Start

Opening up an empty text editor and trying to build a whole OpenAPI structure is really hard. There are a few ways you can get a jump start.

Graphical Editors

There are a few visual editors out there that can help you click buttons and type into forms, building out the OpenAPI structure as you go.

- ApiBldr - Free Online Visual API Designer for OpenAPI and AsyncAPI Specifications

- Stoplight Platform - A hosted editing experience that connects to existing Git repos, or real-time collaborative web projects.

- Hackolade - Polyglot data modeling for NoSQL databases, storage formats, REST APIs, and more.

AI

ChatGPT and Copilot both understand OpenAPI perfectly, and I use both regularly.

ChatGPT is a handy one to get started with. Try writing a prompt like:

Write an OpenAPI description for an API game of tic tac toe where multiple games can be played at once, with endpoints for starting a new game, making a move, and seeing the status of the game. Use OAuth 2 authentication and add errors using the RFC 7807 format.

The OpenAPI you get back will not be perfect, but it's a great start. I usually follow up with a few extra requests.

Add schema for 200 responses, make examples pure YAML, and add descriptions to properties.

Copy and paste the output into your text editor, and give it a tweak. You might find it's made a few mistakes like putting the OAuth 2 security schemes and schemas into two different components keys which will trigger YAML errors, but give it a quick tidy up and you'll be fine.
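As a rough sketch of that tidy up, the security schemes and schemas should end up under a single components key. The scheme name, URLs, scope, and Game schema below are assumptions made up for the tic tac toe example, not actual ChatGPT output.

```yaml
# One components key holding both sections (all names and URLs are hypothetical)
components:
  securitySchemes:
    oauth2:
      type: oauth2
      flows:
        authorizationCode:
          authorizationUrl: https://example.com/oauth/authorize
          tokenUrl: https://example.com/oauth/token
          scopes:
            "games:write": Start games and make moves
  schemas:
    Game:
      type: object
      properties:
        id:
          type: string
          description: Unique identifier for the game.
        status:
          type: string
          description: Current state of the game.
```

If the generated document has a second top-level components key further down, move its children up into this one and delete the duplicate.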

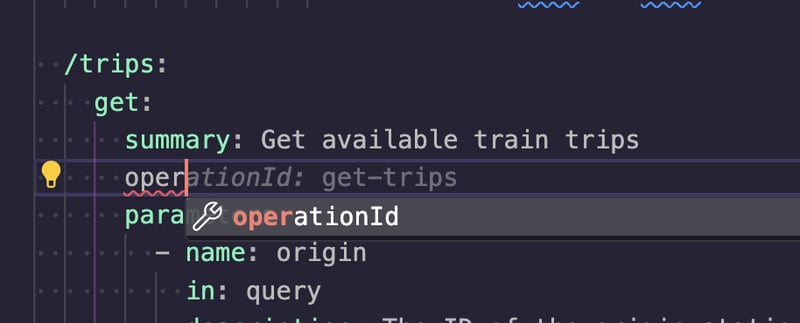

Once I've got the guts of it, I switch to the GitHub Copilot VS Code extension, which really speeds up text-based OpenAPI editing. I can start writing oper and it will know I need to add an operationId, and come up with a good name for it based on the conventions used elsewhere.

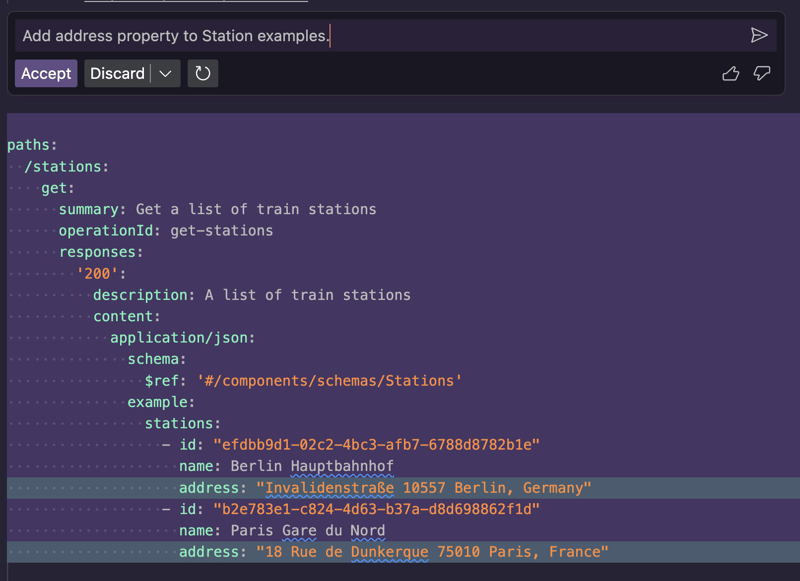

The autocomplete is handy, but there's a GitHub Copilot Chat too. This lets you ask questions, have it update your document, and generate code as it goes.

I would not trust this or rely on it every day, but I do think it's helpful for rapidly creating big chunks of API description.

Aspen

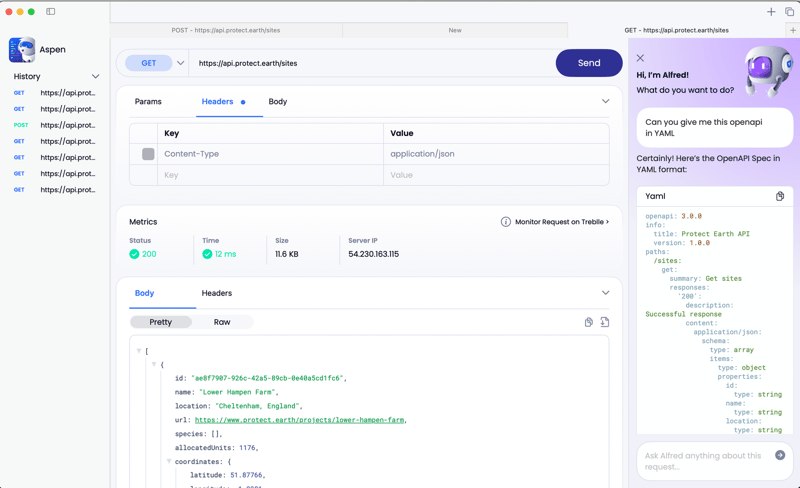

If you've already built your API, you can produce OpenAPI by hitting it with Aspen, the brand new desktop API testing tool from Treblle.

Just like other API clients, you can enter a URL, headers, and parameters, and make a request. Unlike those other clients, Aspen has a built-in AI butler called Alfred who will offer some handy options. One of those is to produce OpenAPI from the response, as seen in the screenshot above.

Learn more about what Aspen can do in our announcement.

Validation & Linting

Whether you're writing your API descriptions manually, using a GUI, or putting robots to work, you're going to want to make sure the OpenAPI you have is technically valid, and excellent. Spectral can help you with both.

```
$ npm install -g @stoplight/spectral-cli
$ echo 'extends: ["spectral:oas"]' > .spectral.yaml
```

Now you can get feedback on your OpenAPI description, either via the CLI or via extensions like VS Code Spectral, which give you feedback as you type. If you set up a Git hook, you can even make sure your OpenAPI is good before you commit.

```
$ spectral lint openapi.yaml

   2:6   warning  info-contact            Info object must have "contact" object.  info
  11:9   warning  operation-description   Operation "description" must be present and non-empty string.  paths./stations.get
  11:9   warning  operation-tags          Operation must have non-empty "tags" array.  paths./stations.get
 20:23     error  invalid-ref             '#/components/schemas/Stations' does not exist  paths./stations.get.responses[200].content.application/json.schema.$ref
  33:9   warning  operation-description   Operation "description" must be present and non-empty string.  paths./trips.get
  33:9   warning  operation-tags          Operation must have non-empty "tags" array.  paths./trips.get
 82:10   warning  operation-description   Operation "description" must be present and non-empty string.  paths./bookings.post
 82:10   warning  operation-operationId   Operation must have "operationId".  paths./bookings.post
 82:10   warning  operation-tags          Operation must have non-empty "tags" array.  paths./bookings.post
126:13   warning  oas3-unused-component   Potentially unused component has been detected.  components.schemas.Station
```

This is letting me know to add some contact information, and pointing out that I've made some mistakes referencing #/components/schemas/Stations when I should have referenced #/components/schemas/Station, which is sitting there unused.
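A sketch of what the corrected parts of the document might look like is below. The contact details, description text, tag name, and Station properties are assumptions for illustration, and the array wrapper around Station is a guess at the intended response shape.

```yaml
# Excerpt of openapi.yaml after the fixes (values are illustrative)
openapi: 3.1.0
info:
  title: Example API
  version: 1.0.0
  contact:                      # satisfies info-contact
    name: API Support
    email: support@example.com
paths:
  /stations:
    get:
      operationId: get-stations
      description: Returns the stations that trips can start or end at.
      tags:
        - Stations
      responses:
        "200":
          description: A list of stations.
          content:
            application/json:
              schema:
                type: array
                items:
                  # was '#/components/schemas/Stations', which does not exist
                  $ref: "#/components/schemas/Station"
components:
  schemas:
    Station:
      type: object
      properties:
        id:
          type: string
        name:
          type: string
```

With the reference pointing at Station, both the invalid-ref error and the unused component warning should go away.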

Once I've fixed all of these problems and made the OpenAPI valid, the next step is to enable some linting rules to spot other issues.

Security Linting

For starters, let's make sure there are no obvious security mistakes showing up in our API design using the Spectral OWASP ruleset.

```
$ npm install --save -D @stoplight/spectral-owasp-ruleset
```

To enable it, open up .spectral.yaml and add the line to your extends.

```yaml
# .spectral.yaml
extends:
  - "spectral:oas"
  - "@stoplight/spectral-owasp-ruleset"
```

Now run Spectral again.

```
$ spectral lint openapi.yaml

 15:9   information  owasp:api2:2019-protection-global-safe      This operation is not protected by any security scheme.  paths./stations.get
19:17       warning  owasp:api3:2019-define-error-responses-401  Operation is missing responses[401].  paths./stations.get.responses
19:17       warning  owasp:api3:2019-define-error-responses-401  Operation is missing responses[401].content.  paths./stations.get.responses
19:17       warning  owasp:api3:2019-define-error-responses-500  Operation is missing responses[500].  paths./stations.get.responses
19:17       warning  owasp:api3:2019-define-error-responses-500  Operation is missing responses[500].content.  paths./stations.get.responses
19:17       warning  owasp:api3:2019-define-error-validation     Missing error response of either 400, 422 or 4XX.  paths./stations.get.responses
19:17       warning  owasp:api4:2019-rate-limit-responses-429    Operation is missing rate limiting response in responses[429].  paths./stations.get.responses
19:17       warning  owasp:api4:2019-rate-limit-responses-429    Operation is missing rate limiting response in responses[429].content.  paths./stations.get.responses
20:15         error  owasp:api4:2019-rate-limit                  All 2XX and 4XX responses should define rate limiting headers.  paths./stations.get.responses[200]
24:22         error  owasp:api4:2019-array-limit                 Schema of type array must specify maxItems.  paths./stations.get.responses[200].content.application/json.schema
```

Quite a few problems detected! Various error responses are missing, which is something OWASP specifically recommends defining to help with contract testing tools. If a contract testing tool knows what the error is supposed to look like, it is easy to spot when the API implementation is doing something else, like leaking implementation details in a backtrace.
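One way to describe those missing errors, sketched as a fragment rather than a complete document, is to define shared responses once and reference them from each operation. The component names and the Problem schema (loosely modelled on RFC 7807) are assumptions for illustration.

```yaml
# Fragment: shared error responses referenced from an operation (names are hypothetical)
paths:
  /stations:
    get:
      responses:
        "401":
          $ref: "#/components/responses/Unauthorized"
        "500":
          $ref: "#/components/responses/InternalServerError"
components:
  responses:
    Unauthorized:
      description: Credentials are missing or invalid.
      content:
        application/problem+json:
          schema:
            $ref: "#/components/schemas/Problem"
    InternalServerError:
      description: Something went wrong on the server.
      content:
        application/problem+json:
          schema:
            $ref: "#/components/schemas/Problem"
  schemas:
    Problem:
      type: object
      properties:
        type:
          type: string
        title:
          type: string
        status:
          type: integer
        detail:
          type: string
```

Defining the responses under components keeps every operation's error list short and makes the error format consistent across the API.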

It's also reminding me to describe the rate limiting strategy used and define the headers used in responses. If my API does not have rate limiting I should probably add it to avoid my API being taken down by malicious or misconfigured clients.
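As a hedged sketch, describing rate limiting in OpenAPI could look like the fragment below. The header names are an assumption (check which names your API actually sends and which ones the ruleset accepts), and the Station and Problem schemas are assumed to be defined elsewhere under components.

```yaml
# Fragment: rate limit headers, an array limit, and a 429 response (header names are assumptions)
paths:
  /stations:
    get:
      responses:
        "200":
          description: A list of stations.
          headers:
            RateLimit-Limit:
              description: Requests allowed in the current window.
              schema:
                type: integer
            RateLimit-Reset:
              description: Seconds until the current window resets.
              schema:
                type: integer
          content:
            application/json:
              schema:
                type: array
                maxItems: 100   # addresses the array-limit error
                items:
                  $ref: "#/components/schemas/Station"
        "429":
          description: Too many requests.
          headers:
            Retry-After:
              description: Seconds to wait before retrying.
              schema:
                type: integer
          content:
            application/problem+json:
              schema:
                $ref: "#/components/schemas/Problem"
```

Other rules in the ruleset may still flag parts of this fragment, so treat it as a starting point and rerun Spectral after each change.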

API Style Guides

Organizations with multiple APIs face a problem: how to keep their APIs consistent. Consistency makes integration easier for clients, allows for code sharing, and generally looks smarter than a random collection of mismatched APIs.

Some companies have dedicated API Governance teams who will create an API Style Guide. In days of old this was a large PDF or Wiki which everyone would have to constantly reread to remember it all and spot changes, or it would just be ignored.

Modern best practice is to create an [automated API Style Guide](https://apisyouwonthate.com/blog/automated-style-guides-for-rest-graphql-grpc/), defining naming conventions, preferred authentication strategies, which data formats are used (JSON:API, Siren, etc.), and everything else, all of which can be put through Spectral using GitHub Actions or other CI/CD to make sure the OpenAPI matches the style guide before a pull request can be merged.
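A minimal sketch of the GitHub Actions side might look like this, assuming the description lives at openapi.yaml in the repository root next to a .spectral.yaml. The workflow filename, job name, and Node version are arbitrary choices.

```yaml
# .github/workflows/lint-openapi.yaml (hypothetical filename)
name: Lint OpenAPI
on:
  pull_request:
jobs:
  spectral:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 20
      # Install the Spectral CLI, then lint using the rules in .spectral.yaml
      - run: npm install -g @stoplight/spectral-cli
      - run: spectral lint openapi.yaml
```

Spectral exits with a non-zero status when it reports errors, which fails the job. If your ruleset extends npm packages, install those in an extra step before linting.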

You can build your own automated API style guide as a custom Spectral ruleset, or you can start from one of the existing public rulesets.
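For the build-your-own route, a custom ruleset is just more YAML in .spectral.yaml. The rule below is an illustrative sketch of a naming convention: the rule name and regular expression are assumptions chosen for this example, and your own style guide may want something different.

```yaml
# .spectral.yaml with one custom style guide rule (rule name and regex are illustrative)
extends:
  - "spectral:oas"
rules:
  paths-kebab-case:
    description: Path segments should be lowercase and hyphen-separated.
    message: "{{property}} should be kebab-case."
    severity: warn
    # The trailing ~ selects the keys of the paths object, i.e. the path strings
    given: "$.paths[*]~"
    then:
      function: pattern
      functionOptions:
        match: '^(\/([a-z0-9-]+|\{[a-zA-Z0-9_]+\}))+$'
```

With this in place, a path like /widgets/{id} passes, while /Widgets or /widget_types would produce a warning.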

Contract Testing

Once you've got your OpenAPI describing a wonderful API with no obvious security issues or style guide violations, the next priority is to make sure your API actually matches that description.

Contract testing can be complicated, and in the past it required brand new special tooling, but these days it's easy to work with regular unit/integration test suites like PHPUnit, RSpec, or Jest. You make them aware of OpenAPI by pointing them to your openapi.yaml and running an assertion to see if the test HTTP response matches the OpenAPI.

For example, working with Laravel PHP, you can configure the popular testing tool Pest (or PHPUnit) to contract test against OpenAPI using Spectator.

```php
<?php
// tests/Pest.php

use Illuminate\Foundation\Testing\RefreshDatabase;
use Spectator\Spectator;

uses()->beforeEach(fn () => Spectator::using('openapi.yaml'))->in('Feature');
```

Once Spectator knows where your OpenAPI lives in the filesystem, it can then use it as the basis for its contract testing assertions.

```php
<?php
// tests/Feature/WidgetTest.php

use App\Models\Widget;

describe('POST /widgets', function () {
    it('returns expected response when request is valid', function () {
        $this
            ->postJson("/api/widgets", [
                'name' => 'Test Widget',
                'description' => 'This is a test widget',
            ])
            ->assertValidResponse(201);
    });

    it('returns a 400 for invalid request', function () {
        $this
            ->postJson("/api/widgets", [
                'name' => 'Missing a Description',
            ])
            ->assertValidResponse(400);
    });
});

describe('GET /widgets/{id}', function () {
    it('returns 200 for record that exists', function () {
        $widget = Widget::factory()->create();

        $this
            ->getJson("/api/widgets/{$widget->id}")
            ->assertValidResponse(200);
    });

    it('returns a 404 for missing record', function () {
        Widget::factory()->create();

        $this
            ->getJson("/api/widgets/12345")
            ->assertValidResponse(404);
    });
});
```

All the magic is happening in assertValidResponse(), which looks at the OpenAPI description, sees which HTTP method and endpoint are being called, then compares the HTTP response coming from postJson() or getJson() against the response schema in the OpenAPI description.

If the status code is not described in OpenAPI, you'll see something like this:

```
FAILED  Tests\Feature\WidgetTest > `GET /widgets/{id}` → it returns a 404 for missing record
No response object matching returned status code [404].
```
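The fix for that failure is to describe the 404 in the OpenAPI document. A sketch of what that could look like follows, assuming the API returns errors as application/json with a message property; adjust the media type and schema to whatever your API really sends.

```yaml
# Fragment: adding the missing 404 to GET /widgets/{id} (error body shape is an assumption)
paths:
  /widgets/{id}:
    get:
      parameters:
        - name: id
          in: path
          required: true
          schema:
            type: string
      responses:
        "200":
          description: The requested widget.
        "404":
          description: No widget exists with the given ID.
          content:
            application/json:
              schema:
                type: object
                required:
                  - message
                properties:
                  message:
                    type: string
```

Once the status code is described, the same test starts checking the 404 body against this schema instead of failing outright.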

If there is a mismatch between the response body and the schema in the OpenAPI, you'll see something like this:

```
The properties must match schema: data
All array items must match schema
The required properties (name) are missing

object++ <== The properties must match schema: data
    status*: string
    data*: array <== All array items must match schema
        object <== The required properties (name) are missing
            id*: string
            name*: string
            slug: string?
```
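For reference, a schema that would produce a tree like that could be sketched as follows. This assumes the asterisk marks a required property and the question mark marks a nullable one, and the WidgetCollection name is made up for illustration.

```yaml
# Fragment: a schema matching the tree in the error output (schema name is hypothetical)
components:
  schemas:
    WidgetCollection:
      type: object
      required:
        - status
        - data
      properties:
        status:
          type: string
        data:
          type: array
          items:
            type: object
            required:
              - id
              - name
            properties:
              id:
                type: string
              name:
                type: string
              slug:
                # OpenAPI 3.1 expresses nullable values as a type array
                type: ["string", "null"]
```

Here the failure means at least one item in data came back without a name, so either the API has a bug or name should not be listed as required.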

Testing tools for many popular languages have something like this:

- JavaScript: jest-openapi works with Jest.

- Ruby on Rails: openapi_contracts works with RSpec.

- Laravel (PHP): Spectator works with PHPUnit/Pest.

If you cannot find something to integrate with your existing test suite, consider using Wiretap which can run as a proxy, and handle contract testing of the requests/responses that come through it in testing or staging environments.

API Insights

Once you've got some great OpenAPI, made sure it is valid, made sure it's consistent, avoided common security mistakes, and then made certain the API and OpenAPI fully agree with each other, what else is there to do to make your OpenAPI excellent?

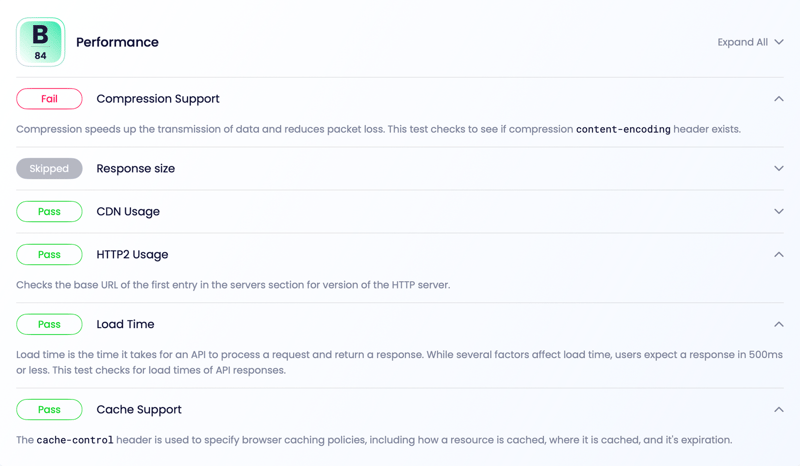

Treblle has just the tool for you: API Insights. This web/desktop app is an API rating tool, which can give you an A-F rating that scores you on all sorts of things you almost certainly forgot about, covering Design, Performance, and Security.

Spectral was looking out for problems, but API Insights is going to let you know what you've done well. It's better to use this tool once the API has been built, because many of the rules will look at the actual API implementation based on the URLs it finds in the OpenAPI servers array, to give a more complete picture of your API quality.
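If your description does not list any servers yet, the entry is short. The URLs below are placeholders, and exactly how API Insights uses each one is not covered here.

```yaml
# Fragment: top-level servers array in openapi.yaml (URLs are placeholders)
servers:
  - url: https://api.example.com
    description: Production
  - url: https://staging-api.example.com
    description: Staging
```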

Summary

These tools all help focus on consistency, security, performance, completeness, and generally putting in enough descriptions and examples so that OpenAPI can be used effectively throughout the API lifecycle.

Top quality OpenAPI helps with producing excellent documentation, mock servers, testing, SDK generation, server-side validation, API consoles, and developer portals, but as the use-cases are constantly evolving, your quest for excellence will continue to evolve.

Let us know what you prioritize in your OpenAPI descriptions, and if we missed any ideas or tools that help you out.
