How Cloud Run changes Cloud Architecture

Tom Larkworthy

Posted on May 3, 2021

Cloud Run is interesting. It's a general-purpose elastic container hosting service, a bit like Fargate or Azure-Containers but with a few critical differences.

Most interesting is that it scales to zero and auto-scales horizontally, making it very cost-effective for low-traffic jobs (e.g. overnight batch processes).

It also runs arbitrary Docker containers and can serve requests concurrently, meaning that for modest traffic you don't usually need more than one instance running (and you save money).

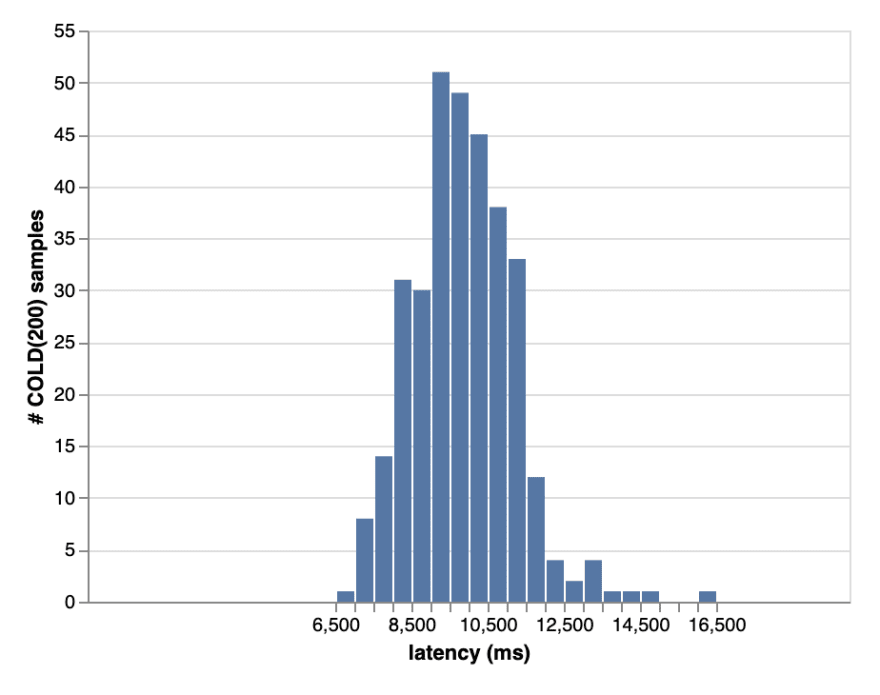

Its flexibility comes at the cost of higher cold start latency, though. Take a look at our cold start latencies for an on-demand puppeteer service in a low-traffic region:

We are seeing cold start latencies of around 10 seconds to boot up a 400MB container and start Chrome. This was annoyingly slow.

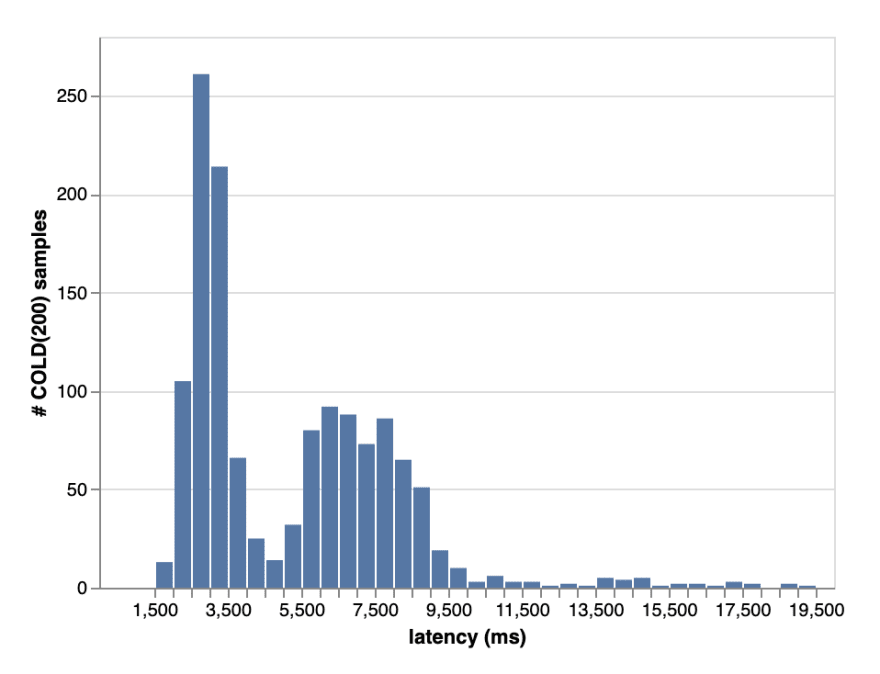

Not all our regions were that slow, though. In one of the busier regions we saw a bimodal latency graph:

This suggests that 2.5 seconds is spent booting up a puppeteer instance and serving the request, and 5-7 seconds is spent booting the container. In busier regions a container is often already running, which is why the cold latencies are sometimes much lower. (For completeness, a warm latency measurement is 1.5 seconds, so probably 1 second is booting Chrome and 1.5 seconds is serving the request.)
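The breakdown above is simple arithmetic on the measured figures. Here is a minimal sketch of it, assuming (this is an interpretation, not something measured separately) that a full cold start is the container boot time added to the faster cold mode. The variable names are made up for illustration.

```python
# Figures quoted in the post, in seconds.
WARM_REQUEST_S = 1.5            # warm request latency
FAST_COLD_MODE_S = 2.5          # lower mode of the bimodal graph
CONTAINER_BOOT_S = (5.0, 7.0)   # estimated container startup range

# Chrome boot is what the fast cold mode adds on top of a warm request.
chrome_boot_s = FAST_COLD_MODE_S - WARM_REQUEST_S

# Assumption: a full cold start is container boot plus the fast cold mode.
full_cold_s = tuple(boot + FAST_COLD_MODE_S for boot in CONTAINER_BOOT_S)

print(f"Chrome boot: {chrome_boot_s:.1f} s")
print(f"Full cold start: {full_cold_s[0]:.1f} to {full_cold_s[1]:.1f} s")
```

Under that assumption the estimate lands in the same ballpark as the roughly 10 seconds seen in the low-traffic region, where a container is rarely already running.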

So... how could we speed things up? 5-7 seconds is spent on container startup. It's the biggest consumer of our latency budget, so that's what we should concentrate on reducing.

One solution is to run a dedicated VM, though that loses the horizontal elasticity. Even so, let's do the numbers.

A 2 vCPU, 2GB RAM machine (e2-highcpu-2) costs $36.11 per month.

Now Cloud Run has a relatively new feature called min-instances.

This keeps some containers idle with no CPU budget, so they can be switched on more quickly. Idle instances are still charged, but at roughly a tenth of the active rate. An idle 2 vCPU, 2GB RAM Cloud Run instance costs $26.28 per month.

This gets pretty close to having your cake and eating it. You get the low latency of a dedicated machine while staying horizontally elastic. It may even cost less.
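To put the two monthly figures side by side, here is a minimal sketch that uses only the prices quoted above. It compares a dedicated VM against a Cloud Run instance that stays idle all month. Time spent actually serving requests is billed at the higher active rate, so this is a lower bound on the Cloud Run bill, not a forecast.

```python
# Monthly prices quoted in the post, in USD.
VM_MONTHLY_USD = 36.11         # e2-highcpu-2 dedicated VM
IDLE_RUN_MONTHLY_USD = 26.28   # idle 2 vCPU, 2GB Cloud Run instance

saving_usd = VM_MONTHLY_USD - IDLE_RUN_MONTHLY_USD
saving_pct = 100 * saving_usd / VM_MONTHLY_USD

print(f"Idle Cloud Run instance is ${saving_usd:.2f}/month cheaper "
      f"({saving_pct:.0f}% less than the VM)")
```

How much of that margin survives depends on how busy the instance is, which is why the comparison is "may even cost less" and not a guarantee.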

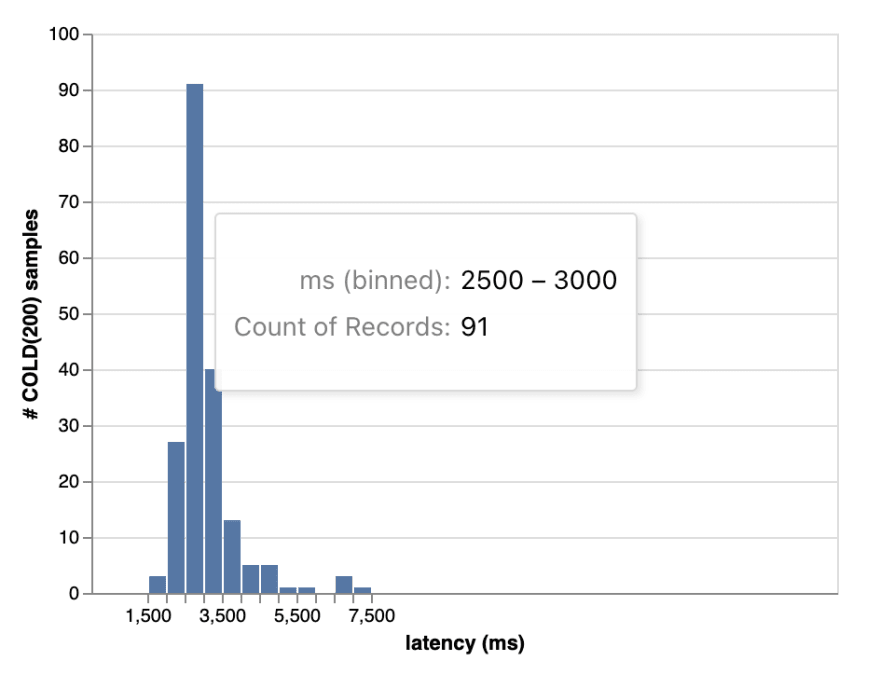

For our application, we set min-instances to 1 and this was the result.

Our cold start latencies from container startup are decimated! We did not have to change any code.
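Because the setting lives on the service and not in the application, it can be applied from the command line. Here is a minimal sketch that builds the corresponding `gcloud` command. The helper function, service name and region are hypothetical placeholders, not our actual configuration. Check `gcloud run services update --help` for the exact flags available in your gcloud version.

```python
import shlex


def min_instances_command(service: str, region: str, min_instances: int) -> list[str]:
    """Build a gcloud command that sets min-instances on an existing service."""
    if min_instances < 0:
        raise ValueError("min_instances must be non-negative")
    return [
        "gcloud", "run", "services", "update", service,
        f"--region={region}",
        f"--min-instances={min_instances}",
    ]


# Hypothetical service name and region, for illustration only.
cmd = min_instances_command("puppeteer-service", "europe-west1", 1)
print(shlex.join(cmd))
```

The list form can be passed to `subprocess.run(cmd, check=True)` if you want to apply the change from a deployment script.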

I think this min-instances feature is a game-changer for cloud architecture. You can now get the latency benefits of dedicated VMs at a comparable price, but with elasticity and image-based deployments. The new min-instances feature broadens the range of applications that serverless compute can address.

Our latency monitoring infrastructure and data are public.
