How to Proxy and Modify OpenAI Stream Responses for Enhanced User Experience

Tech Tim (@TechTim42)

Posted on January 20, 2024

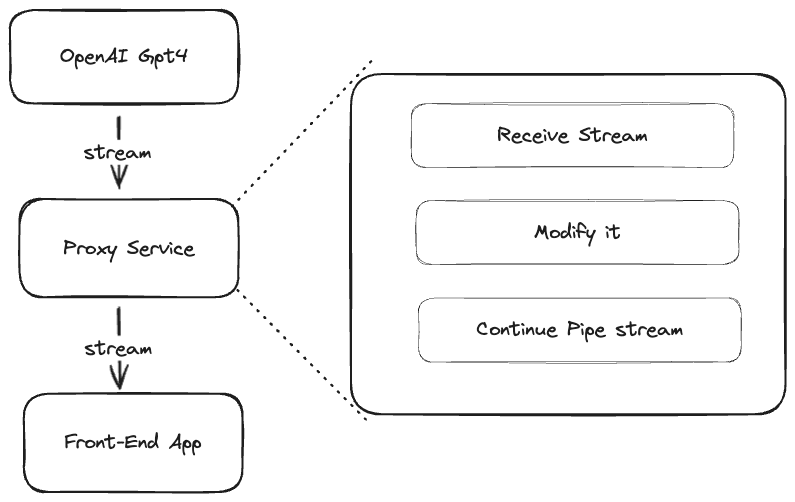

Recently, we had a requirement to build a feature that proxies and modifies a stream from OpenAI. Essentially, we wanted to receive the GPT-4 response as a stream, but instead of returning that stream directly to the front-end, modify the values within it and return the modified content to the front-end application.

Is this possible? The answer is yes. This article shows how to pipe a stream in Node.js/Express to achieve a better FCP (First Contentful Paint), and how to modify the stream content along the way.

Why use a stream as the response?

The reason is simple and straightforward: a better UX.

It is similar to FCP (First Contentful Paint), a concept from front-end development and UX: streaming reduces the time before users see the first content, which gives them a better UX.

[Figure: two animated GIFs compared side by side, "Move from this" vs. "To this". The table and GIFs are from Mike Borozdin.]

How to achieve it in Node.js?

It is very simple and straightforward too.

With Axios, it can be done in a few lines using `pipe`:

```typescript
import axios from 'axios';

// Inside an async Express route handler; sourceApiUrl simulates the OpenAI GPT-4 streaming response
const streamResponse = await axios.get(sourceApiUrl, { responseType: 'stream' });
res.writeHead(200, { 'Content-Type': 'text/plain' });
streamResponse.data.pipe(res);
```
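One caveat worth knowing: `pipe()` on its own does not forward errors, so if the upstream stream fails mid-response, the destination is not closed automatically. Node's `pipeline()` from `node:stream/promises` connects the streams and destroys all of them on error. Below is a minimal, self-contained sketch; `Readable.from` is a hypothetical stand-in for the OpenAI/Axios response and a collecting `Writable` stands in for Express's `res`. The helper names are illustrative only.

```typescript
import { Readable, Writable } from "node:stream";
import { pipeline } from "node:stream/promises";

// Hypothetical stand-in for the upstream OpenAI/Axios stream.
function fakeUpstream(chunks: string[]): Readable {
  return Readable.from(chunks);
}

// Hypothetical stand-in for the Express `res` object: collects everything written.
function collectingSink(): { sink: Writable; text: () => string } {
  const parts: string[] = [];
  const sink = new Writable({
    write(chunk: Buffer | string, _encoding, callback) {
      parts.push(chunk.toString());
      callback();
    },
  });
  return { sink, text: () => parts.join("") };
}

// Proxy upstream into the sink; pipeline() rejects and destroys both streams on error.
async function proxy(chunks: string[]): Promise<string> {
  const { sink, text } = collectingSink();
  await pipeline(fakeUpstream(chunks), sink);
  return text();
}
```

In a real route handler the equivalent would be `await pipeline(streamResponse.data, res)`, wrapped in a `try`/`catch` that decides what to do when the upstream fails.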

So what is the challenge here?

If the requirement were only to proxy the stream, `pipe` would be all we need, and the whole job would amount to building a proxy service for the OpenAI API. Unfortunately, the challenge lies in the modification part.

Modification

In Node.js, the `Transform` class is used to modify streaming chunks, so we can update the code like this:

```typescript
import axios from 'axios';
import { Transform, TransformCallback } from 'stream';

const response = await axios.get(sourceApiUrl, { responseType: 'stream' });
res.setHeader('Content-Type', 'application/json');

const modifyStream = new Transform({
  transform(chunk: Buffer, encoding: BufferEncoding, callback: TransformCallback) {
    const modifiedChunk = chunk.toString().toUpperCase(); // modify the content to uppercase
    callback(null, modifiedChunk);
  },
});

response.data.pipe(modifyStream).pipe(res);
```

The `modifyStream` transformer sits between the upstream response and the Express response, so the content is modified before it is returned to consumers.
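A subtle point about `chunk.toString()`: chunks arrive as raw bytes, and a multi-byte UTF-8 character (for example `é`, or most CJK text) can be split across two chunks, which turns it into replacement characters. A minimal sketch, assuming UTF-8 input, that uses Node's `StringDecoder` to hold back incomplete bytes until the rest arrive. `createUppercaseTransform` and `runThrough` are hypothetical names used only for illustration.

```typescript
import { Readable, Transform, TransformCallback } from "node:stream";
import { StringDecoder } from "node:string_decoder";

// Uppercases text without corrupting multi-byte UTF-8 characters that are
// split across chunk boundaries: StringDecoder buffers incomplete byte sequences.
function createUppercaseTransform(): Transform {
  const decoder = new StringDecoder("utf8");
  return new Transform({
    transform(chunk: Buffer, _encoding: BufferEncoding, callback: TransformCallback) {
      callback(null, decoder.write(chunk).toUpperCase());
    },
    flush(callback: TransformCallback) {
      // Emit whatever the decoder still holds when the stream ends.
      callback(null, decoder.end().toUpperCase());
    },
  });
}

// Run a list of raw byte chunks through the transform and collect the result.
async function runThrough(chunks: Buffer[]): Promise<string> {
  const output = Readable.from(chunks).pipe(createUppercaseTransform());
  output.setEncoding("utf8");
  let text = "";
  for await (const piece of output) text += piece;
  return text;
}
```

In the proxy, it would slot in where `modifyStream` is used: `response.data.pipe(createUppercaseTransform()).pipe(res)`.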

What if the stream response is not plain text?

We have to consider this case as well. Many people now use function calling and JSON responses with GPT-4, which means a simple transformer will not work as expected for modifying the stream content.

For example, to modify an unfinished JSON response in a stream:

- We need to make sure the content is valid JSON.

- At the same time, we need to apply our changes on top of it and return the modified content to consumers as early as possible.

But JSON is a strictly structured format: a document is not valid until it is closed, for example with its final curly bracket `}`.
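A quick way to see this is to feed every prefix of a small JSON document to `JSON.parse` and record which ones it accepts. `acceptedPrefixLengths` is a hypothetical helper written just for this demonstration.

```typescript
// Feed every prefix of a JSON document to JSON.parse and
// return the lengths of the prefixes it accepts.
function acceptedPrefixLengths(json: string): number[] {
  const accepted: number[] = [];
  for (let len = 1; len <= json.length; len++) {
    try {
      JSON.parse(json.slice(0, len));
      accepted.push(len);
    } catch {
      // Not valid JSON yet: the object is still open.
    }
  }
  return accepted;
}

const doc = '{"name": "Joe"}';
console.log(acceptedPrefixLengths(doc), doc.length); // [ 15 ] 15
```

Only the complete 15-character string parses, so a plain `JSON.parse` on each chunk (or on the text received so far) would fail until the very end of the stream.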

There is a Node package, `best-effort-json-parser`, that parses partial JSON:

```typescript
import { parse } from 'best-effort-json-parser'

const data = parse(`[1, 2, {"a": "apple`)

console.log(data) // [1, 2, { a: 'apple' }]
```
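To illustrate the idea, here is a deliberately naive sketch of how a partial-JSON parser could work, assuming the input is a truncated prefix of valid JSON: track open strings and brackets, close them, and hand the result to `JSON.parse`. `parsePartialJson` is a hypothetical helper; it is not how `best-effort-json-parser` is implemented, and it simply gives up (returns `undefined`) when the input stops in an awkward spot, such as right after a key and colon, inside a key, or in the middle of `true`.

```typescript
// Hypothetical, minimal partial-JSON parser (NOT the algorithm used by
// best-effort-json-parser): close any open string, drop a trailing comma,
// then append the missing closing brackets and try JSON.parse.
function parsePartialJson(text: string): unknown {
  const closers: string[] = [];
  let inString = false;
  let escaped = false;

  for (const ch of text) {
    if (inString) {
      if (escaped) escaped = false;
      else if (ch === "\\") escaped = true;
      else if (ch === '"') inString = false;
      continue;
    }
    if (ch === '"') inString = true;
    else if (ch === "{") closers.push("}");
    else if (ch === "[") closers.push("]");
    else if (ch === "}" || ch === "]") closers.pop();
  }

  let repaired = text;
  if (escaped) repaired = repaired.slice(0, -1); // drop a dangling backslash
  if (inString) repaired += '"'; // close an unterminated string
  else repaired = repaired.replace(/[,\s]+$/, ""); // drop a trailing comma
  repaired += closers.reverse().join(""); // close innermost brackets first

  try {
    return JSON.parse(repaired);
  } catch {
    return undefined; // e.g. cut off right after a key: {"a":
  }
}
```

For the example above, `parsePartialJson('[1, 2, {"a": "apple')` repairs the text to `[1, 2, {"a": "apple"}]` before parsing it.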

On top of that, there is an even simpler option, `http-streaming-request`:

```typescript
import { makeStreamingJsonRequest } from "http-streaming-request";

const stream = makeStreamingJsonRequest({
  url: "/some-api",
  method: "POST",
});

for await (const data of stream) {
  // if the API has only returned [{"name": "Joe so far,
  // the line below will print `[{ name: "Joe" }]`
  console.log(data);
}
```

We can then update our code to use it to modify the streaming content:

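```typescript
import { makeStreamingJsonRequest } from "http-streaming-request";

const stream = makeStreamingJsonRequest<any>({
  url: sourceApiUrl,
  method: "GET",
});

// Each iteration yields the whole partial object so far, so every snapshot
// is written on its own line (newline-delimited JSON) for the client to split.
res.setHeader('Content-Type', 'application/x-ndjson');

for await (const data of stream) {
  const modifiedData = { ...data, modified: true }; // add more modifications here
  res.write(JSON.stringify(modifiedData) + '\n');
}

res.end();
```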
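Because each iteration carries the whole partial object so far, the consumer receives a sequence of snapshots rather than one JSON document. A sketch, assuming newline-delimited JSON is acceptable to the client, that pulls the modify-and-serialize step out of Express so it can be exercised with any async source. `modifySnapshots` is a hypothetical name.

```typescript
// Hypothetical helper: the modify-and-serialize step of the loop above,
// separated from Express so it can be driven by any async source.
async function* modifySnapshots(
  source: AsyncIterable<Record<string, unknown>>,
): AsyncGenerator<string> {
  for await (const snapshot of source) {
    // Copy rather than mutate, so the parser's own object is left untouched.
    const modified = { ...snapshot, modified: true };
    // One JSON document per line (NDJSON), so a client can split the snapshots.
    yield JSON.stringify(modified) + "\n";
  }
}
```

In the route handler, each yielded line would be passed to `res.write`, and a client can split the body on `\n` and keep only the latest complete line.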

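Performance will not be as good as with the original stream pipe, since each partial snapshot has to be parsed and re-serialized instead of being passed straight through, but it meets all the requirements for this case.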

Please note that when using this library, you may need to add some debouncing logic on top of it, since updates may arrive as often as every chunk.
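The article does not show its debouncing logic, so here is a minimal sketch of one option, assuming a time-based throttle is acceptable. A throttle is a close relative of debouncing: a pure debounce would wait for a pause in the stream (possibly until the very end), whereas a throttle still forwards updates while the stream is flowing. `createThrottledWriter` is a hypothetical helper, and the clock is injectable so its behaviour can be tested without real timers.

```typescript
// Hypothetical helper: forwards at most one snapshot per `intervalMs`.
// Snapshots that arrive in between replace each other; only the newest is kept.
function createThrottledWriter(
  write: (snapshot: string) => void,
  intervalMs: number,
  now: () => number = Date.now,
) {
  let lastWrite = -Infinity;
  let pending: string | undefined;

  return {
    push(snapshot: string): void {
      const t = now();
      if (t - lastWrite >= intervalMs) {
        write(snapshot);
        lastWrite = t;
        pending = undefined;
      } else {
        pending = snapshot; // superseded by any later snapshot
      }
    },
    // Call once the upstream stream ends so the final snapshot is never lost.
    flush(): void {
      if (pending !== undefined) {
        write(pending);
        pending = undefined;
      }
    },
  };
}
```

In the Express loop, `res.write(...)` would become `throttled.push(...)`, followed by `throttled.flush()` once the loop finishes and before ending the response. A held-back snapshot waits for the next `push` or the final `flush`; adding a timer would cover long pauses in the upstream.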

Summary

The overall impact depends on your modification/transformation code: unless the modification logic is very expensive, it will not noticeably slow down the streaming process as a whole. And the FCP is reduced compared with waiting for the whole JSON response to complete.

The GitHub repo with the sample code is attached below.

Thanks for reading.
