Serverless latency avoided with MongoDB connection pool

Jake Page

Posted on December 2, 2022

There are many benefits to building apps using Serverless frameworks. Apart from offloading a large amount of the heavy lifting to a cloud-managed service, it is much easier to configure and scale deployments. Logic can be placed closer to the end user, and environments can be easily created by aliasing functions. You are billed only for the memory you configure and the execution time you consume, so the cost is usually lower than with other architectures.

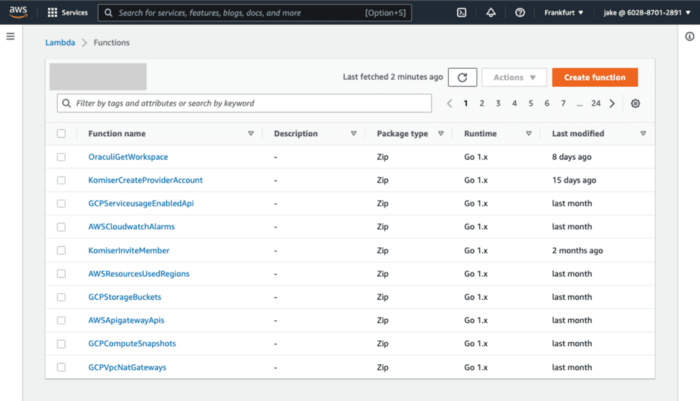

Due to these and many other benefits, Tailwarden is built as a Serverless platform. It is made up of hundreds of Lambda functions with an aliased API Gateway in front of them that directs traffic to the functions in our three environments (Sandbox, Staging, and Production).

On paper that all sounds great, but what happens when hundreds of Lambda functions simultaneously connect to a MongoDB cluster on a regular basis? A new database connection had to be created for every query, and we quickly saw latency increase considerably as a result. We had to avoid creating a new connection every time the database was queried.

This is what we did to fix it.

The connection pool

A peculiar but valid way of thinking about a database connection pool is the example of a nightclub. Usually, when you go to a nightclub, you wait in line, pay your entry fee and then you might get a side-eye glance from the bouncer but you are allowed to enter. If you want to briefly leave, the bouncer will either stamp your hand or give you a bracelet which means you can now freely leave and come back without having to get in a queue and do all of the steps you did the first time to get in.

A connection pool is similar in the sense that it’s a pool of available connections that can be used without having to go through all the motions of creating a new connection every time. When a request is complete the connection is returned to the pool and even though it’s not used it’s still available. If you leave the nightclub for some fresh air, you can walk back in as long as you have a bracelet. You can also re-use MongoDB connections, as long as the driver can handle connection pools, and you specify the configuration through your connection method. In our case, we used the MONGO_URI environment variable to carry the connection string.

By appending the option ?poolSize=5 (the bracelet) to the value of MONGO_URI, each function keeps a pool of up to 5 reusable connections, which gave us a huge increase in performance.
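As a minimal sketch of that change, the helper below adds the option to a connection string. The function name append_pool_size and the example URI are hypothetical and not taken from our codebase, and the sketch assumes the URI already ends in a database name, as ours did.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a poolSize option to a MongoDB connection string.
# Assumes the URI already contains a database path (e.g. ".../test").
append_pool_size() {
  local uri="$1"
  local size="${2:-5}"
  case "$uri" in
    *poolSize=*) printf '%s\n' "$uri" ;;                      # already set, leave untouched
    *\?*)        printf '%s&poolSize=%s\n' "$uri" "$size" ;;  # other options exist, join with &
    *)           printf '%s?poolSize=%s\n' "$uri" "$size" ;;  # first option, start with ?
  esac
}

# Example with a made-up URI:
append_pool_size "mongodb+srv://user:pass@cluster.example.net/test"
# prints mongodb+srv://user:pass@cluster.example.net/test?poolSize=5
```

The check for an existing query string matters because a second ? in the URI would not be parsed as an option separator.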

The Tailwarden stack

First, we had to pick out all the Lambda functions that connect to our MongoDB cluster by writing a bash script that looks up functions with a MONGO_URI environment variable.

The lambda functions connect to the database with a connection string similar to this one.

In the code snippet below you can see how we pass in the connection credentials with the MONGO_URI

A few best practices to keep in mind when handling AWS Lambda connections to MongoDB Atlas:

- The client to the MongoDB server needs to be outside of the AWS Lambda handler function.

- If you place it inside the function, the driver will create a new database connection for each function call, which can become expensive and impact performance.

- If your handler takes a callback as its last argument, set the callbackWaitsForEmptyEventLoop property on the AWS Lambda Context object to false.

- This allows a Lambda function to return its result to the caller without requiring that the MongoDB database connection be closed.

We had to retrieve the functions that have the MONGO_URI environment variable and then append ?poolSize=5 to the end of its value, without deleting the other existing environment variables.

The script

There are multiple strategies we could have gone with, but we decided to keep the script as simple as possible. We interface with AWS directly using the AWS CLI in combination with jq to parse the values of our variables, which are in JSON format.

This is the script that got the job done, called updateLambdaVars.sh:

Let’s break it down line by line:

First, we grab the functions that have the MONGO_URI environment variable present using jq and store the filteredFunctions as a list.

We then start a for loop which updates the MONGO_URI for each Lambda function in three steps and ends when it has gone through the full filteredFunctions list.

In the first step of the loop, we store the function name in the functionName variable, filtering it first with jq.
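The filtering and name extraction can be sketched roughly as follows. This is an illustration rather than the original script: the helper names filter_mongo_functions and function_name_of are hypothetical, and the jq filters assume the JSON shape returned by aws lambda list-functions (a Functions array whose items carry FunctionName and, optionally, Environment.Variables).

```shell
#!/usr/bin/env bash
# Emit one compact JSON object per Lambda function that defines MONGO_URI.
# Input on stdin: the JSON document printed by `aws lambda list-functions`.
filter_mongo_functions() {
  jq -c '.Functions[] | select(.Environment.Variables.MONGO_URI != null)'
}

# Extract the function name from one of those JSON objects.
function_name_of() {
  printf '%s' "$1" | jq -r '.FunctionName'
}

# Usage (requires AWS credentials and a configured region):
#   aws lambda list-functions --output json | filter_mongo_functions |
#     while IFS= read -r fn; do
#       functionName=$(function_name_of "$fn")
#       echo "$functionName"
#     done
```

Functions without any environment variables simply evaluate to null in the select and are skipped, so no extra existence check is needed.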

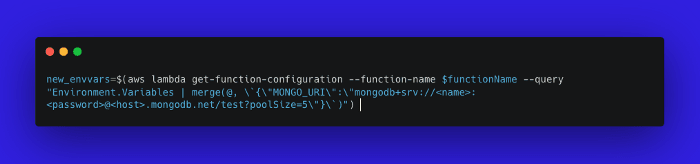

Next, we get the current values of the configured environment variables, append the value test?poolSize=5 to the end of the MONGO_URI value, and store the result in new_envvars.
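One way to express this merge with jq is sketched below. The helper name build_new_envvars is hypothetical, and the sketch assumes the input is the JSON printed by aws lambda get-function-configuration, whose Environment.Variables object holds the current values.

```shell
#!/usr/bin/env bash
# Print the Environment payload with MONGO_URI extended by a suffix.
# All other variables pass through unchanged.
# Input on stdin: JSON from `aws lambda get-function-configuration`.
# $1: suffix to append (defaults to "?poolSize=5").
build_new_envvars() {
  local suffix="${1:-?poolSize=5}"
  jq -c --arg suffix "$suffix" '.Environment | .Variables.MONGO_URI += $suffix'
}

# Usage (requires AWS credentials):
#   new_envvars=$(aws lambda get-function-configuration \
#     --function-name "$functionName" --output json |
#     build_new_envvars 'test?poolSize=5')
```

Because the whole Variables object is carried over and only MONGO_URI is modified, the result can be passed back to Lambda without losing the other variables.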

Finally, we update the Lambda configuration with the update-function-configuration command, passing the function name variable and the new list of environment variables.
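A hedged sketch of this last step is shown below. The wrapper update_function_env and the DRY_RUN switch are assumptions added for illustration. The aws lambda update-function-configuration command and its --function-name and --environment options are part of the AWS CLI.

```shell
#!/usr/bin/env bash
# Apply a new environment payload to one Lambda function.
# $1: function name
# $2: JSON payload of the form {"Variables":{...}}
# Set DRY_RUN to any non-empty value to print the change instead of applying it.
update_function_env() {
  local function_name="$1"
  local new_envvars="$2"
  if [ -n "${DRY_RUN:-}" ]; then
    printf 'would update %s with %s\n' "$function_name" "$new_envvars"
    return 0
  fi
  aws lambda update-function-configuration \
    --function-name "$function_name" \
    --environment "$new_envvars" >/dev/null
}

# Usage:
#   DRY_RUN=1 update_function_env "$functionName" "$new_envvars"   # preview
#   update_function_env "$functionName" "$new_envvars"             # apply
```

The --environment option replaces the function's full set of variables, which is why the payload has to contain every existing variable and not only MONGO_URI.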

Give the script permission to execute and run it. The script will go through all Lambda functions in the specified AWS region and add the poolSize parameter to each MongoDB connection string.

Conclusion

When it comes to Serverless frameworks, any issue that affects latency can quickly trigger a chain reaction and snowball, turning your application into something your users don’t want to use. With some sorts of latency our hands are tied, like service integration latency. But that’s not the case with inefficiencies around database connections. Use connection pools to ease the load on the database. Doing so will improve performance, and you won’t have to be afraid of the bouncer kicking you out.

Whether you are a Developer, DevOps, or Cloud engineer, dealing with the cloud can be tough at times, especially on your own. If you are using Tailwarden or Komiser and want to share your thoughts, doubts, and insights with other cloud practitioners, feel free to join our Tailwarden Discord server, where you will find tips, community calls, and much more.
