Supervised Learning: VC Dimensions

swyx

Posted on February 15, 2019

This is the 9th in a series of class notes as I go through the Georgia Tech/Udacity Machine Learning course. The class textbook is Machine Learning by Tom Mitchell.

⚠️ This chapter is a LOT more theoretical than the others but set up the theoretical foundations for machine learning - skip or persevere, your choice but be warned

The Core Question

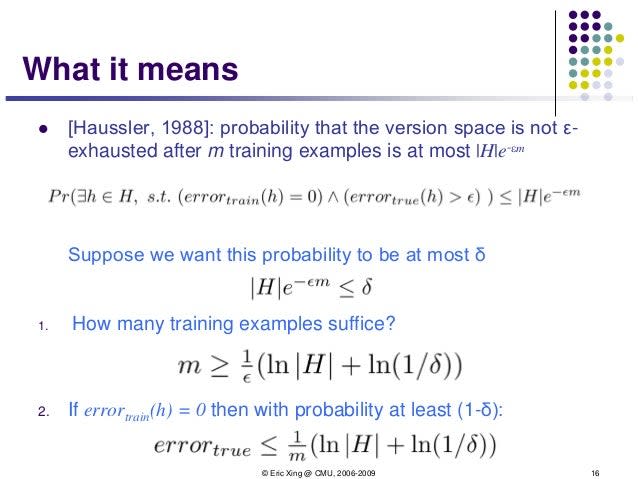

In our prior chapter we learned to use Haussler's Theorem to bound our true error as a function of the number of examples drawn.

However this formula does rely on the hypothesis space being finite (i.e. risking that the "true" hypothesis is something we aren't considering). In this chapter we consider the question of having infinite hypothesis spaces.

Infinite Spaces Everywhere

If the previous chapter got you excited, this is going to get you down. Infinite spaces are everywhere. Not every problem has binary input spaces. Anything with continuous values has infinite spaces.

However we can make the distinction between a syntactic hypothesis space (all the things you could possibly guess) and a semantic hypothesis space (all the functions you can possibly represent) and collapse infinite spaces down to discrete ones. We do this with Decision Trees on continuous values, where we pick a split point for a continuous value based on having a meaningful difference despite the theoretically infinite possible values.

Picking two points on a number line to split on doesn't significantly increase the complexity of the hypothesis space, because one number is necessarily greater than the other and vice versa. You can be either less than or greater than 5, but it is impossible to be less than 3 AND greater than 7. So the number line is a fairly "weak" hypothesis space.

VC Dimension and the Power of a Hypothesis Space

In fact, picking a split point on a continuous number line is such an easy split that we can generalize this idea and ask:

What is the largest set of inputs that the hypothesis class can label in all possible ways (aka shattering)?

In other words, what is the largest number of variables you can use to semantically express the same hypothesis space as the real (infinite) hypothesis space? This quantity is called the VC dimension.

VC stands for Vapnik (the same guy that invented SVMs) and Chervonenkis. VC theory relates the VC Dimension of a class to the amount of data you need to be able to learn effectively in that class.

VC Dimension of intervals

This is a very simple example just for learning.

If H: The set of all intervals from [a,b]:

- and X is the set of Real Numbers in one dimension

- So this is an infinite hypothesis space

- VC dimension asks: what is the largest set of inputs that the hypothesis class can label in all possible ways using hypotheses from H.

- You can fit an interval with just one input point (just fit around or outside it)

- You can fit an interval with two input points (just fit around one or either or both or neither)

- But you can't fit an interval with three input points that have a

+ - +sequence since the interval has to be contiguous - therefore the VC dimension is 2.

Shattering is a small but critical concept and denotes assigning different labels to points. For example, if the points are all on top of each other they could not be shattered.

VC Dimension of linear separators

Linear separators, like the ones we have looked at with the most basic SVM example, separate a plane into a positive and a negative side. We've already established these are infinite hypothesis spaces.

If H: The set of all separators (lines) from y=mx+b:

- and X is the set of Real Numbers in two dimensions

- So this is an infinite hypothesis space

- VC dimension asks: what is the largest set of inputs that the hypothesis class can label in all possible ways using hypotheses from H.

- You can fit a line with just one input point

- You can fit a line with two input points (just fit around one or either or both or neither)

- You can fit a line with three input points (except if they're all on the same line, aka not shatterable)

- But you can't fit a line with four input points that are in a

+ - + -2x2 matrix since the lines split a plane that has to be contiguous - therefore the VC dimension is 3.

More dimensions

There's a pattern here:

| separator | VC Dimension | Inputs |

|---|---|---|

| 0d point | 1 | theta |

| 1d line | 2 | a,b |

| 2d surface | 3 | w1,w2,theta |

| 3d space | 4 | w1,w2,w3,theta |

In fact VC dimension often is the dimensionality of the space in which the hypothesis inputs live. For any d-dimensional hyperplane hypothesis class, the VC dimension is d+1 - the weights for each dimension + the theta (for greater than or equal to overlap)

More separators and their VC dimension examples and the reasoning behind them can be found here.

We can have nonlinear separators as well - 2d circles have a VC Dimension of 3 for example.

Infinite VC Dimension

Despite recasting the conversation from infinite spaces to dimensionality, it is still possible to form hypotheses with infinite dimension. A trivial example is a hypothesis class for points inside a convex polygon:

(best watched to be understood)

So it is that a circle has a VC Dimension of 3 but a convex polygon has infinite VC dimension (because it can have arbitrarily many input points)

Sample Complexity (infinite case) and VC Dimension

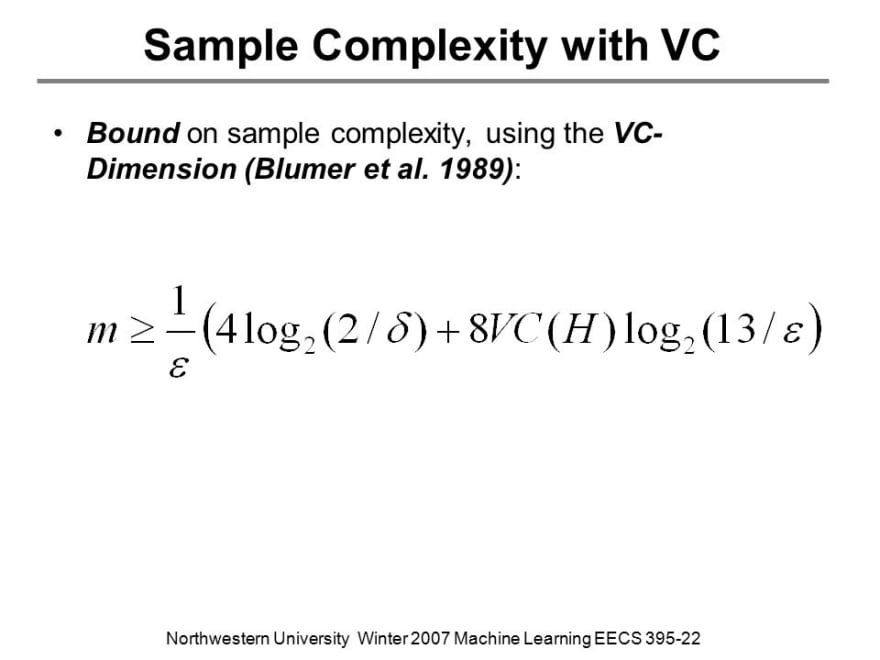

Now we have developed a concept of VC Dimension, we can connect it back to our former formula for Sample Complexity (completely skipping the proof...):

The VC of Finite Hypothesis Space

If we denote the VC of Finite Hypothesis Space by d, there has to be 2^d distinct concepts (as each different labelling can be captured by a different hypothesis in a class) - therefore 2^d is less than or equal to the number of hyptheses |H|.

Rearranging, d <= log2 (|H|). So a finite hypothesis class gives us finite VC dimensions, and therefore make things "PAC Learnable". There is a further proof that this goes the other way - H is PAC Learnable if and only iff the VC Dimension is finite. VC Dimension captures the notion of PAC Learnability in a single concept.

Next in our series

Further notes on this topic:

Hopefully that was a good introduction to VC Dimensions. I am planning more primers and would love your feedback and questions on:

- Overview

- Supervised Learning

- Unsupervised Learning

- Randomized Optimization

- Information Theory

- Clustering - week of Feb 25

- Feature Selection - week of Mar 4

- Feature Transformation - week of Mar 11

- Reinforcement Learning

- Markov Decision Processes - week of Mar 25

- "True" RL - week of Apr 1

- Game Theory - week of Apr 15

Posted on February 15, 2019

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.

Related

January 11, 2024