Why Is Performance Testing Important? All You Need to Know

Arek Krysik

Posted on January 25, 2023

One of the things we look into when searching for a new car is its performance. How much horsepower does it have? How much torque does the powertrain produce?

These stats are crucial to know as they directly translate into the value of the vehicle. But there’s one more place where performance really matters – software development.

Why should developers care about application performance? How can it impact the KPIs of a business? All of that and more in today’s post on our blog. Keep reading!

What is performance testing?

Performance testing is a software testing technique that determines how stable, scalable, and responsive a given piece of software is under a specific load. It helps developers locate and eliminate performance bottlenecks.

A key thing to understand is that for different types of applications, performance might mean different things.

For services aimed at ordinary users, what matters most is how quickly they respond to inputs and how many simultaneous users they can handle. This is also probably the first thing that comes to mind when we think about software performance.

Enterprise-grade software systems used for internal company processing often deal with batches of data that can run to tens of gigabytes. In this case, a much more important performance metric is how quickly the software can transfer and process each batch of data.

The takeaway is that performance testing is not uniform. Performance tests should first and foremost focus on one of two things: latency or throughput, depending on the type of application we are dealing with. Let’s now take a closer look at both of these terms.

Latency vs. Throughput

Let’s say that we have a shipping enterprise owner who wants his company to be more efficient.

There are two ways he can do it: he can invest in faster trucks or make them carry more cargo at once.

This, in a nutshell, is how latency and throughput work.

Latency is like the speed of a truck. It is the time it takes for a data packet to reach the end user. Throughput, on the other hand, is represented in this example by the cargo capacity of each vehicle. It is the number of data packets successfully delivered per second.

Both terms are related as they both refer to data transfer and speed. You can say that they are two sides of the same coin, even though they are different metrics. Performance tests and optimizations aim to maximize throughput and minimize latency.
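To make the difference concrete, here is a minimal Python sketch that measures both numbers for the same workload. The `fake_request` function is a hypothetical stand-in for a real call to your system, and the 1 ms sleep is an arbitrary assumption.

```python
import time
from statistics import mean

def measure(operation, requests):
    """Call `operation` `requests` times and report latency and throughput."""
    latencies = []
    started = time.perf_counter()
    for _ in range(requests):
        t0 = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - t0)  # latency of this single call
    elapsed = time.perf_counter() - started
    return {
        "avg_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
        "throughput_per_s": requests / elapsed,  # completed calls per second
    }

def fake_request():
    # Hypothetical stand-in for a real request (e.g. an HTTP call)
    time.sleep(0.001)

if __name__ == "__main__":
    print(measure(fake_request, 50))
```

Latency is reported per call, while throughput is computed over the whole run. With concurrent callers the two can move independently, which is why they are tracked as separate metrics.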

Performance testing vs. QA testing

Let’s also briefly touch on the differences between QA and performance tests, as these are often confused by less technical people.

Quality assurance testing, or QA for short, is the process of ensuring that the developed software meets all of the product requirements set at the start. QA testing includes many standards and procedures aimed at making sure that software meets certain specifications before it’s released to the public.

If we go back to our shipping company analogy, QA testing would mean making sure that each cargo payload arrived at the destination intact, just as the company had promised.

Alright, but which is more important, QA testing or performance testing?

Neither, as every software development project needs both.

QA tests are intended to show that software does what it is supposed to do. Performance tests, on the other hand, show that it does so quickly and reliably enough under load. In other words, they verify non-functional product requirements.

Both categories are designed to fulfill their own unique roles, and neither can be neglected.

What are the types of performance tests?

We can differentiate many types of performance tests, but generally, they fall into two main categories:

Tests that simulate web traffic – This type is pretty much self-explanatory. These tests use virtual users in a dedicated test environment to check how well the system performs under load. They include stress, load, endurance and spike tests.

Tests that simulate data processing – This type checks how the system handles a constant influx of new data batches. These include soak and scalability tests.

Let’s now go into a little more detail about each of these subtypes, as these are the main performance indicators for most business scenarios:

Load tests

Load testing simulates the number of virtual users expected to use an application. By reproducing realistic usage and load conditions and measuring response times, this test can help identify potential bottlenecks.
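As a rough illustration, a load test boils down to running many virtual users at once and collecting their response times. The sketch below uses threads from the Python standard library. `handle_request` is a hypothetical placeholder for a call to the system under test, and the user counts are made up. Real projects usually rely on a dedicated load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request():
    # Hypothetical stand-in for one request to the system under test
    time.sleep(0.005)

def virtual_user(requests_per_user):
    """One simulated user issuing requests back to back; returns response times."""
    timings = []
    for _ in range(requests_per_user):
        t0 = time.perf_counter()
        handle_request()
        timings.append(time.perf_counter() - t0)
    return timings

def run_load_test(users, requests_per_user):
    """Run `users` virtual users concurrently and collect all response times."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        results = pool.map(virtual_user, [requests_per_user] * users)
        return [t for timings in results for t in timings]

if __name__ == "__main__":
    times = run_load_test(users=20, requests_per_user=10)
    print(f"{len(times)} requests, slowest took {max(times):.3f} s")
```

Comparing the collected response times across runs with different user counts is one simple way to spot where the system starts to slow down.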

Stress tests

Stress testing checks how well the system performs during peak activity. These tests significantly and continuously increase the number of concurrent users to find the limits of the system.

Spike tests

During spike tests, an application receives an extreme increase or decrease in load. Their main goal is to test how well the software handles sudden changes in load and whether it influences user experience.

Soak tests

Soak tests gradually ramp up the number of virtual users and then keep the system under that load for an extended period. The main objective of these tests is to check whether sustained high user activity over longer time periods degrades performance.

Endurance testing

Endurance testing is yet another type of performance test. It checks whether an application can handle its expected load for a long period of time. During endurance testing, memory utilization is the main metric to watch, since problems such as memory leaks only become visible over time.
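The traffic-based test types described so far differ mainly in how the load changes over time. The sketch below expresses each one as a function returning the target number of virtual users at a given minute. All numbers (baseline of 100 users, peak of 1000, a 60-minute run, a 5-minute spike, a 10-minute ramp) are illustrative assumptions, not recommendations.

```python
def load_profile(kind, minute, baseline=100, peak=1000, duration=60):
    """Target number of virtual users at `minute` for a given test type."""
    if kind == "load":
        # Steady, expected traffic for the whole run
        return baseline
    if kind == "stress":
        # Keep increasing the number of users linearly from baseline to peak
        return baseline + (peak - baseline) * minute // duration
    if kind == "spike":
        # Sudden 5-minute burst in the middle of the run
        middle = duration // 2
        return peak if middle <= minute < middle + 5 else baseline
    if kind == "soak":
        # Ramp up over the first 10 minutes, then hold the load
        return min(baseline, baseline * (minute + 1) // 10)
    raise ValueError(f"unknown profile: {kind}")

if __name__ == "__main__":
    for kind in ("load", "stress", "spike", "soak"):
        print(kind, [load_profile(kind, m) for m in (0, 15, 30, 45)])
```

A soak or endurance run would use the same shape as the `soak` profile but stretch the hold phase to many hours or days.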

Scalability testing

A key thing to know for CTOs is whether their system is capable of scaling out horizontally.

Let’s say that we have a system capable of processing a set batch of data in 100 seconds. Our intuition tells us that doubling its hardware capabilities should enable it to process twice as much data in the same time period.

Unfortunately, this logic cannot be applied universally to all software systems, because some parts of the work may not benefit from additional hardware at all.

Scalability tests are designed to test just that – the ability of the system to process more data the more hardware resources are dedicated to it.
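One common way to reason about this limit is Amdahl's law, which this article does not name but which fits the example well. It estimates the speedup when only part of the work can make use of extra resources. The parallel fractions below are assumed values chosen purely for illustration.

```python
def amdahl_speedup(parallel_fraction, resources):
    """Theoretical speedup when only `parallel_fraction` of the work scales with resources."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / resources)

if __name__ == "__main__":
    baseline_seconds = 100  # the batch time from the example above
    for fraction in (1.0, 0.9, 0.5):  # assumed share of work that can be parallelized
        speedup = amdahl_speedup(fraction, resources=2)
        print(f"parallel part {fraction:.0%}: "
              f"{baseline_seconds / speedup:.1f} s with doubled hardware")
```

Only the fully parallel case gets the batch down to 50 seconds. With half of the work stuck in a serial section, doubling the hardware still leaves it at 75 seconds, which is exactly the kind of gap a scalability test is meant to reveal.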

Why do you need performance testing?

Wojtek, a senior developer at Stratoflow, puts it this way:

“It is important for the sake of family life. If you don’t test your code, something is bound to cause performance issues sooner or later. And in that situation, you will be forced to sacrifice your free time to solve dumpster fires on the production”.

On a more serious note, though, software performance has a profound influence on businesses regardless of their type.

We can go back to our two main types of application performance. For B2C applications, poor performance translates to subpar user experience. That, in turn, leads to frustrated customers and lost revenue.

This is especially true for businesses like online stores, where users interact with multiple content-rich subpages. According to the latest data, a seemingly unnoticeable 100 ms decrease in page load time can lead to a 1.11% increase in session-based conversion. In the case of large e-commerce companies, this translates to hundreds of thousands of dollars of additional revenue.

B2B clients are less sensitive to load times. After all, you won’t see an employee quit their job because a company’s internal system takes half a second too long to load.

For them, it is much more important to transfer and process large batches of data in a reasonable time. In the case of some large corporate-grade internal software architectures, the difference between optimized and unoptimized systems can be measured in hours of data processing times.

One thing is for sure – performance issues can significantly hinder a company’s KPIs, regardless of the type of business it runs.

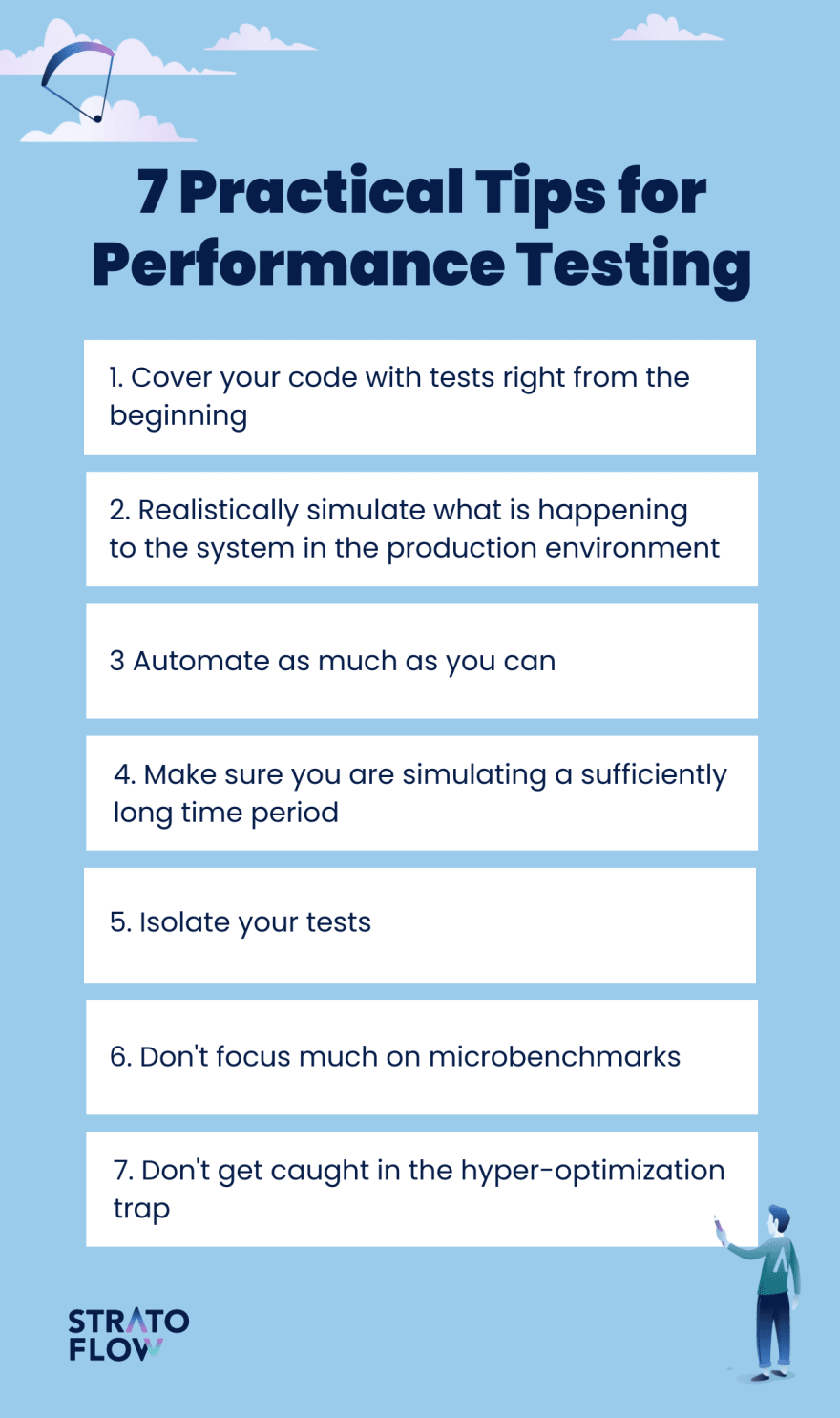

What to remember when doing performance testing – 7 tips from the experts

So what should you look out for when designing and setting up performance testing for your application? Here are 7 tips from senior software developers:

Cover your code with tests right from the beginning

If you know that one of the main objectives of the system you are designing is outstanding performance, testing should start at the earliest stages of the development process.

This approach minimizes the risk of having to rebuild large parts of the architecture later, when performance issues become more apparent.

Realistically simulate what’s happening to the system in the production environment

In order for performance tests to yield tangible results, it is important to run them on data sets and hardware as close to their production counterparts as possible.

Especially in the case of enterprise systems, it is sometimes hard to obtain information about the expected “shape and size” of the data that the system will have to deal with in production. These criteria have to be established beforehand with the client in order to properly set up the testing environment.

Automate as much as you can

Time is arguably the most valuable resource in software development.

By leveraging automated performance testing, you can test virtually all aspects of your code faster. Nevertheless, some human intervention in the performance engineering process is still necessary, especially when setting up sophisticated testing tools on assembled applications.
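One simple form of automation is a check that fails the build when latency exceeds an agreed budget. Here is a minimal sketch, assuming latency samples have already been collected by some test run. The function names and the budget values are hypothetical.

```python
from statistics import quantiles

def p95(samples):
    """95th percentile of a list of latency samples (in seconds)."""
    # quantiles(..., n=20) returns 19 cut points; the last one is the 95th percentile
    return quantiles(samples, n=20)[-1]

def check_latency_budget(samples, budget_s):
    """Raise AssertionError if the p95 latency exceeds the agreed budget."""
    observed = p95(samples)
    if observed > budget_s:
        raise AssertionError(
            f"p95 latency {observed:.3f} s exceeds budget {budget_s:.3f} s"
        )
    return observed

if __name__ == "__main__":
    # Made-up samples: most requests are fast, a few are slow
    samples = [0.10] * 95 + [0.40] * 5
    print(f"p95 = {check_latency_budget(samples, budget_s=0.50):.3f} s")
```

A check like this can run in a CI pipeline after every change, so that a performance regression is caught as early as a failing unit test would be.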

Make sure you are simulating a sufficiently long time period

If your service is based on serving content to your users (like an e-commerce site or hotel booking service), you will probably be fine with simulating only a single day of the system’s operation.

Systems that process batch data are a different story, as their performance can change drastically after they have ingested hundreds of gigabytes of data. It is thus crucial to simulate multiple days in the life of the system in order to properly test its performance over time and how well it can “soak” subsequent data batches.

Isolate your tests

If you have heard of multitenant software architecture, you are probably no stranger to the noisy neighbor problem.

A similar thing can happen when conducting performance tests. Results can be skewed if the tests run on the same hardware as other processes working in the background.

Don’t focus much on microbenchmarks

When performance testing and optimizing your code, you should always use the top-down analysis method.

What do we mean by that?

You should always focus first on those parts of the code that have the biggest influence on overall performance.

Let’s imagine that out of a big process that takes 2 hours to complete, you are working on a smaller part that finishes in roughly 2 seconds. Even if you manage to speed it up a thousand-fold, it won’t make much of a difference in the grand scheme of things. It’s much wiser to test and optimize the parts of the code that take the longest to complete rather than focus on microbenchmarks.
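The arithmetic behind this example is easy to check. The short sketch below uses the numbers from the paragraph above. The function name is just an illustrative choice.

```python
def time_saved(total_s, part_s, speedup):
    """Return (seconds saved, share of the whole run) when one part gets `speedup` times faster."""
    saved = part_s - part_s / speedup
    return saved, saved / total_s

if __name__ == "__main__":
    total = 2 * 60 * 60  # the whole process: 2 hours, in seconds
    saved, share = time_saved(total, part_s=2, speedup=1000)
    print(f"saved {saved:.3f} s out of {total} s ({share:.4%} of the run)")
```

A thousand-fold speedup of the 2-second part saves just under 2 seconds, which is well below a tenth of a percent of the 2-hour process.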

Don’t get caught in the hyper-optimization trap

Optimization of your code is important, but probably an even more important question is when to stop.

Developers often find themselves in a situation where they spend days working on a piece of code that will improve the overall latency and throughput by only a fraction of a percent. In most cases, this is a futile effort.

Before diving even deeper into fine-tuning your code, you should do a quick cost-benefit analysis.

How much time will I need to optimize this section of the code? What benefits in terms of time savings will it bring? Always look for the golden mean. Maybe your code is optimized enough, and you’d be better off devoting your time and effort elsewhere.
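A back-of-the-envelope version of this analysis can be a one-line calculation. The sketch below assumes, for simplicity, that an hour of developer time and an hour of saved run time are worth the same, which is rarely true in practice. The input numbers are hypothetical.

```python
def break_even_runs(dev_hours, seconds_saved_per_run):
    """Number of runs after which the saved run time equals the time spent optimizing."""
    return dev_hours * 3600 / seconds_saved_per_run

if __name__ == "__main__":
    # Hypothetical case: two working days spent to shave 2 seconds off each run
    runs = break_even_runs(dev_hours=16, seconds_saved_per_run=2)
    print(f"the optimization pays for itself after {runs:.0f} runs")
```

If the process only runs once a day, a result in the tens of thousands of runs is a strong hint that the effort is better spent elsewhere.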

Why do you need performance testing – summary

Moore’s law might be dead, but the pace of development in the IT industry shows no sign of slowing down. End-users expect their web applications to load almost instantaneously, and many large enterprises have to transfer and process hundreds of gigabytes of raw data on a daily basis. All of that fuels the pursuit of top-class performance in software systems of all types.

If your company needs a high-performance software system, get in touch with us! Our Java developers have a lot of experience with software systems in which throughput and latency matter above all else.
