Delegating control with an advanced multi-tenant setup in Kubernetes

Sander Rodenhuis

Posted on March 1, 2022

Introduction

Kubernetes is still a relatively new technology, but it is being adopted at high speed. Be aware, though, that Kubernetes is only a general-purpose cluster operating system kernel: it requires additional applications and configuration to safely run and manage your containerized business applications. Kubernetes is also not the holy grail for everything. If you're just running a couple of containers, Kubernetes might not be the ideal go-to technology because of the risk of underutilization. This makes sharing a K8s cluster between multiple teams an interesting option.

But allowing multiple tenants on a shared cluster comes with challenges. For instance, how do you make sure the tenants can work independently without interfering with one another? On its own, this is not that hard to implement. It becomes more complicated if you also want these tenants to share generic platform applications, and if you want to give one tenant more control than another. Delegating control within the whole stack requires a lot of integration and custom engineering.

In this article, I’ll explain how delegation of control can be easily implemented using the Otomi open source project.

What is delegation of control?

From a management perspective, the best definition of delegation is when an administrator gives a user or group of users the responsibility and authority to complete specific tasks. In IT, delegated administration or delegation of control is about the decentralization of role-based access control. In Kubernetes, this model scales poorly because access control can only be done on the API level using Kubernetes RBAC. Allowing one tenant more access than another tenant can result in a very complex configuration. On top of that, RBAC on the application level has to be implemented separately for each application.
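To make the RBAC point concrete, here is a minimal sketch in Python that builds a Kubernetes Role and RoleBinding per tenant as plain dictionaries. The namespace and group names (`team-a`, `team-b`), the object name `tenant-access`, and the choice of network policies as the delegated resource are assumptions for illustration only. This is not how Otomi generates its RBAC.

```python
READ_ONLY = ["get", "list", "watch"]
READ_WRITE = READ_ONLY + ["create", "update", "patch", "delete"]


def tenant_rbac(namespace: str, group: str, verbs: list[str]) -> list[dict]:
    """Build a Role and RoleBinding that grant `group` the given verbs
    on NetworkPolicy objects inside `namespace`."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "tenant-access", "namespace": namespace},
        "rules": [
            {
                "apiGroups": ["networking.k8s.io"],
                "resources": ["networkpolicies"],
                "verbs": verbs,
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "tenant-access", "namespace": namespace},
        "subjects": [
            {
                "kind": "Group",
                "name": group,
                "apiGroup": "rbac.authorization.k8s.io",
            }
        ],
        "roleRef": {
            "kind": "Role",
            "name": "tenant-access",
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [role, binding]


# Hypothetical tenants: team A may only read, team B may also change policies.
manifests = tenant_rbac("team-a", "team-a", READ_ONLY) + tenant_rbac(
    "team-b", "team-b", READ_WRITE
)
```

Even this small difference between two tenants already takes four objects, and it covers only one resource type. Every additional resource, tenant, or exception adds more rules to maintain.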

As a result, operations teams become burdened with lots of tasks. Most of these tasks are not automated, so they can take a long time to complete or lead to poor security practices.

Suppose you would like to:

- Enforce a specific resource quota on team A and offer team B the ability to adjust its configured resource quota

- Allow team B to be able to configure network policies while the network policies of team A can only be implemented by the admin

- Allow access to a team (tenant) on the platform based on an LDAP group membership and allow team B to change the group mapping, while the group mapping of team A can only be changed by the admin

- Allow access to shared Kubernetes apps like HashiCorp Vault and/or Harbor per team/tenant

How much time would you think it takes to support this?
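To give a feeling for just the first item, here is a minimal sketch of the ResourceQuota object that would have to exist in each team namespace. The namespace name, the object name `team-quota`, and the quota values are assumptions I made up for the example.

```python
def resource_quota(namespace: str, hard: dict[str, str]) -> dict:
    """Build a ResourceQuota manifest that caps what a namespace may consume."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {"hard": dict(hard)},
    }


# Hypothetical limits for team A.
quota_a = resource_quota(
    "team-a",
    {"requests.cpu": "4", "requests.memory": "8Gi", "pods": "20"},
)
```

The object itself is simple. Deciding who may change it is the hard part: team B needs write access to its own quota and team A does not, which again has to be expressed in RBAC for each tenant.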

What is Otomi?

Otomi is an application configuration and automation platform for Kubernetes that can be installed in one run on a Kubernetes cluster in Azure, AWS, or GCP. Otomi consists of a suite of pre-configured and integrated Kubernetes apps, combined with self-service and automation. The teams feature in Otomi offers an advanced multi-tenancy setup, where teams (tenants) get access to a web UI with self-service tasks and shared applications. Administrators can create teams and delegate control by configuring what a team is allowed to do and access.

How Otomi supports delegation

Otomi can run in multi-tenant mode, allowing the creation of tenants (called Teams in Otomi). The foundation of a team is a Kubernetes namespace, combined with a default RBAC policy. Access to a team is controlled based on group membership. You can use Keycloak as an IdP or configure Keycloak to act as an identity broker using an external IdP (like Azure AD). A user who is a member of the group mapped to the team will automatically get access to a project in Harbor, a shared space in Vault, the logs of all pods running in the team namespace, and much more.
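The idea of group-based access can be sketched in a few lines of Python. I assume here that the identity provider puts the user's groups in a `groups` claim of the token and that team groups follow a `team-<name>` naming pattern. Both are assumptions for this example, and `teams_for` is a hypothetical helper, not part of Otomi.

```python
TEAM_GROUP_PREFIX = "team-"  # assumed naming convention for team groups


def teams_for(claims: dict) -> list[str]:
    """Return the names of the teams a user belongs to, derived from the
    group names found in the (already verified) token claims."""
    groups = claims.get("groups", [])
    return sorted(
        group[len(TEAM_GROUP_PREFIX):]
        for group in groups
        if group.startswith(TEAM_GROUP_PREFIX) and len(group) > len(TEAM_GROUP_PREFIX)
    )


# Hypothetical claims of a user who is a member of one team group.
claims = {"preferred_username": "alice", "groups": ["team-demo", "developers"]}
print(teams_for(claims))  # → ['demo']
```

The point of this design is that the group membership is the single source of truth. Everything else a user can reach (namespace, Harbor project, Vault space, logs) follows from the team that the group maps to.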

Teams have access to self-service tasks to add pre-deployed K8s and Knative services to the service mesh (based on Istio), configure public exposure, create K8s Jobs and CronJobs, and configure ingress/egress network policies. An admin can decide which self-service tasks are available to a team.

An admin can delegate control based on the following self-service flags:

Services

- Configure ingress: allow the use of the self-service feature to publicly expose services

- NetworkPolicy: allow the use of the self-service feature to configure ingress/egress network policies

Team

- Alerts: grant the team permission to configure Alerts for the team

- OIDC: grant the team permission to configure OIDC for the team

- Resource Quota: grant the team permission to configure Resource Quota for the team

- DownloadKubeConfig: grant the team permission to download the KubeConfig file

- Network policy: grant the team permission to enable/disable network policies for the team
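The flags above boil down to a simple permission check. Below is a minimal sketch of that model in Python. The flag identifiers, the `Team` class, and the `may` function are hypothetical names I chose for the example and do not reflect Otomi's actual configuration schema.

```python
from dataclasses import dataclass, field

# Hypothetical identifiers for the flags listed above.
SERVICE_FLAGS = {"ingress", "networkPolicy"}
TEAM_FLAGS = {"alerts", "oidc", "resourceQuota", "downloadKubeConfig", "networkPolicy"}


@dataclass
class Team:
    name: str
    service_flags: set[str] = field(default_factory=set)
    team_flags: set[str] = field(default_factory=set)


def may(team: Team, scope: str, flag: str, is_admin: bool = False) -> bool:
    """Return True if a member of `team` may use the self-service feature
    identified by `flag` in the given scope ("service" or "team")."""
    known = {"service": SERVICE_FLAGS, "team": TEAM_FLAGS}
    if scope not in known or flag not in known[scope]:
        raise ValueError(f"unknown flag {scope}/{flag}")
    if is_admin:
        return True  # the admin is never restricted by the flags
    granted = team.service_flags if scope == "service" else team.team_flags
    return flag in granted


# A team that may only expose services publicly.
demo = Team("demo", service_flags={"ingress"})
print(may(demo, "service", "ingress"))     # → True
print(may(demo, "team", "resourceQuota"))  # → False
```

Everything is denied unless the admin explicitly grants the flag, so a newly created team starts with the least amount of control.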

Delegation in action

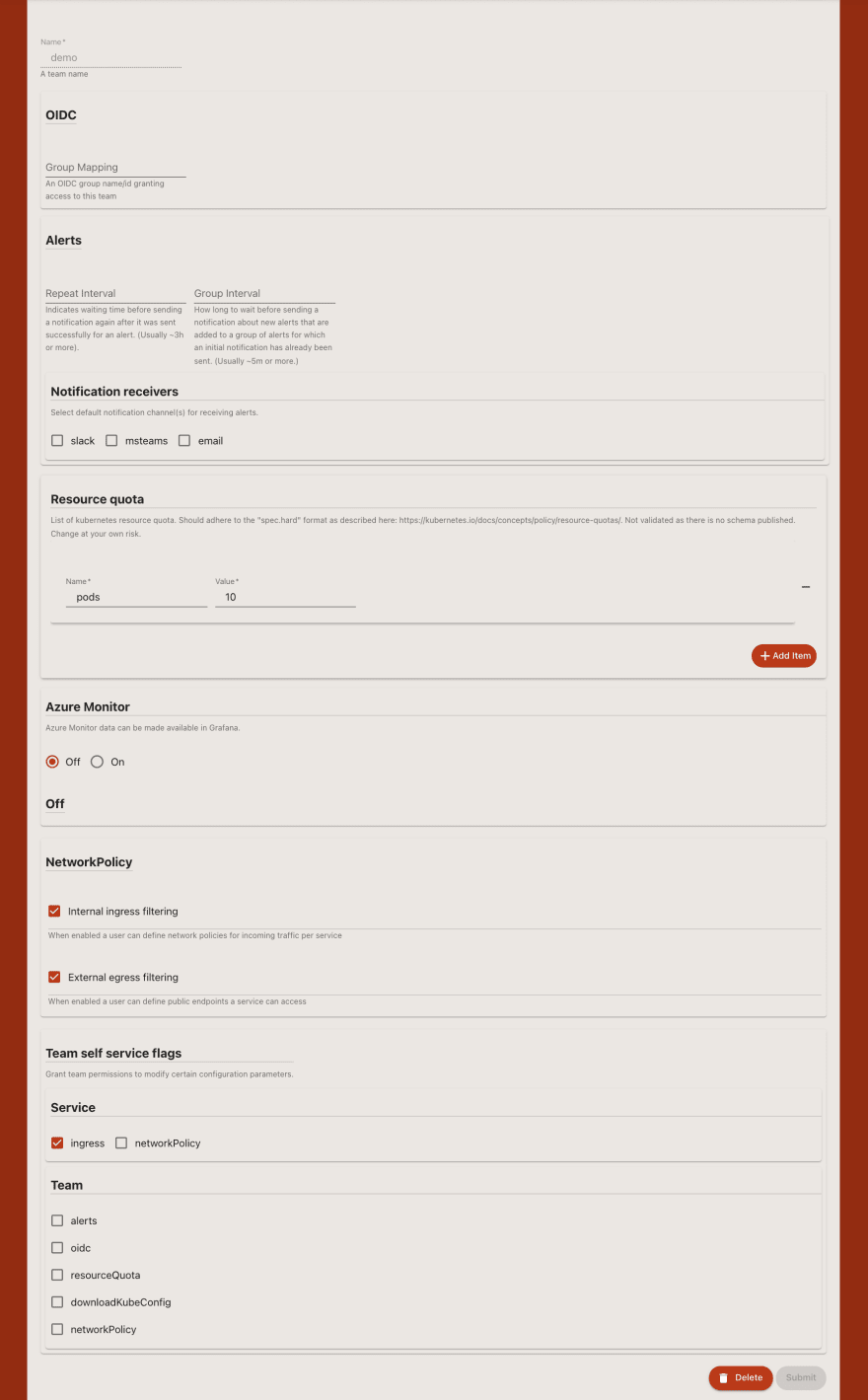

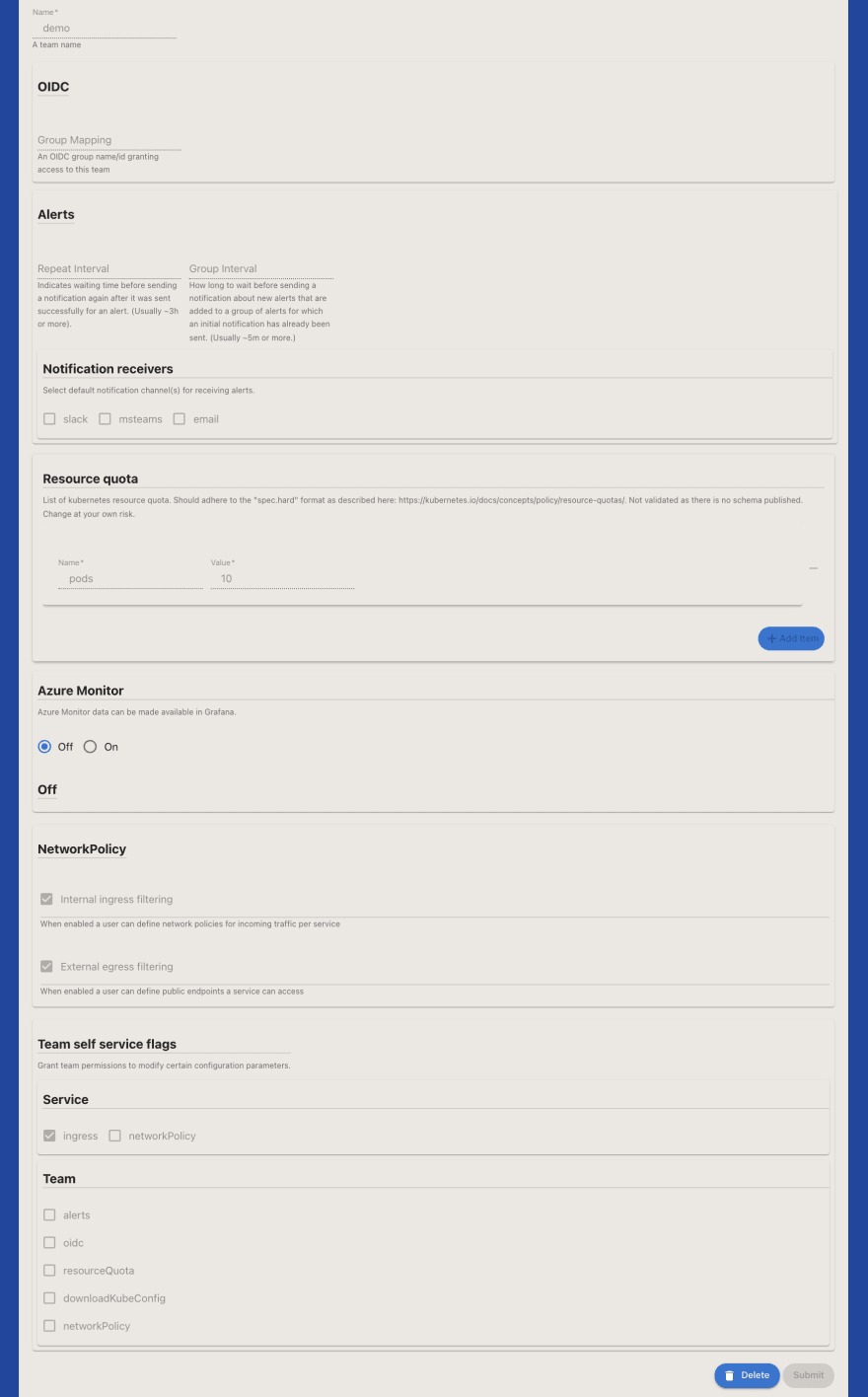

Sign in to the web UI (Otomi console) and create a new team. Provide a name for the team, specify a resource quota, enable network policies, and select only the service/ingress self-service flag.

Now sign out as the administrator and sign in to the console with a user who is only part of the team-demo group. Go to the Settings of the team (in the left pane) and notice that you cannot change any settings. The only allowed self-service task is to create services and configure public exposure for a service.

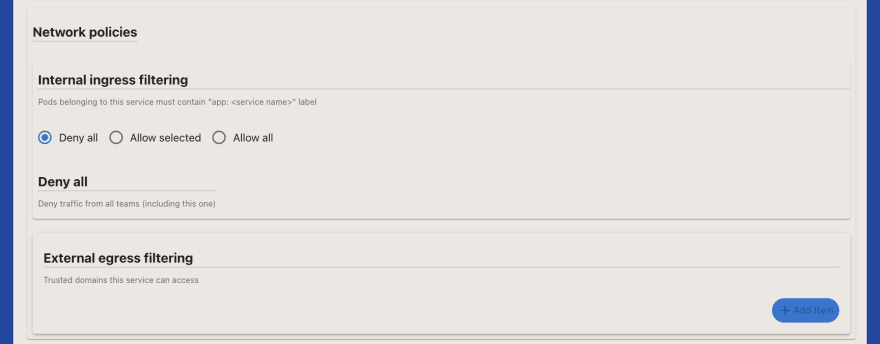

Now create a new Service. Go to the Service section in the left pane and click New Service. Notice that you can create a new service and configure public exposure, but you cannot modify the default network policies. By default, all access other than public access is denied, and the service is not allowed to access any external resources.
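For readers who want to see what such a default could look like in plain Kubernetes terms, here is a minimal sketch in Python that builds two NetworkPolicy manifests: one that denies all ingress and egress in a namespace, and one that allows ingress from a single other namespace. The namespace names are assumptions, and this is not necessarily how Otomi implements its defaults.

```python
def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress traffic for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny", "namespace": namespace},
        # An empty podSelector selects all pods; listing both policy types
        # without any rules blocks traffic in both directions.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def allow_ingress_from(namespace: str, source_namespace: str) -> dict:
    """Allow ingress to all pods in `namespace` from pods in `source_namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-from-{source_namespace}", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            "namespaceSelector": {
                                "matchLabels": {
                                    "kubernetes.io/metadata.name": source_namespace
                                }
                            }
                        }
                    ]
                }
            ],
        },
    }


# Hypothetical names: the team namespace and the namespace of the ingress gateway.
policies = [default_deny("team-demo"), allow_ingress_from("team-demo", "ingress")]
```

Keep in mind that a policy denying all egress also blocks DNS lookups from the pods, so a real setup usually needs an extra rule for that.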

Wrapping up

Providing self-service for repeatable tasks on Kubernetes, and controlling who can execute these tasks from a simple web UI, is something that, as far as I know, no other product on the market offers today.

If you would like to have more control over what users of your Kubernetes clusters can and cannot do, while at the same time offering them a standardized way of working, automation, self-service, and role-based access to shared applications in an advanced multi-tenant setup, then go and try out Otomi. Go to the Otomi project on GitHub to get started or visit otomi.io for more information.
