Serverless is one of the new trends everyone has been looking forward to. The concept is quite simple: instead of building the API as a stateful server whose state we have to monitor all the time, we split it into its most basic primitives, called "functions". Each function executes once and shuts itself down as soon as the request has been fulfilled.

The problem is that I haven't seen anyone do a writeup on how serverless weighs against stateful APIs in a production-scale setting, so I decided to help one of my friends with one of the most daring projects I've ever worked on.

The Beginning

About a year ago, my friend Nathan built an API with a simple goal: to act as a data source for third-party integrations with a game called Azur Lane. The idea is simple, but we have to pull from a JSON dataset to do it. The API was written in Express, and its bottlenecks were these:

It could not handle many concurrent requests, because the endpoints needed a huge amount of resources: each one had to pull the source once, deserialize it, and filter it according to the user's filters.

The backend was composed of a resolver, a GitHub puller and a primitive form of cache. The problem is that pulling the files once and caching them inside the backend's working set caused memory issues, since that memory is shared with the main loop.

Response times were horrendously slow. To fulfill a request, you had to wait 5000ms to actually get a response.

Every time we wanted to add a new feature, we had to code a new DI (dependency injection) for it. This was rather unacceptable.

Seeing that the code itself was pretty good but had the response time of a hot pile of garbage, I gave Nathan a call and said: "Hey, I think we can fix this, but we might need to do it in serverless."
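To make the first bottleneck concrete, here is a minimal sketch of the "deserialize, then filter" step that ran for each request. The original Express code is not shown in this post, so `ShipRecord`, its fields and `queryShips` are all hypothetical names made up for illustration.

```typescript
// Hypothetical shape of one dataset entry; the real dataset has many more fields.
interface ShipRecord {
  name: string;
  rarity: string;
  hullType: string;
}

// Filters are optional exact-match values for any field.
type ShipFilters = Partial<ShipRecord>;

// Deserialize the whole dataset, then keep only the entries
// that match every filter the caller supplied.
function queryShips(rawJson: string, filters: ShipFilters): ShipRecord[] {
  const ships = JSON.parse(rawJson) as ShipRecord[];
  const wanted = Object.entries(filters).filter(
    ([, value]) => value !== undefined
  ) as [keyof ShipRecord, string][];
  return ships.filter((ship) =>
    wanted.every(([key, value]) => ship[key] === value)
  );
}
```

Parsing the full dataset is the expensive part here, which is why doing it inside a shared process for many concurrent requests hurts.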

Why Serverless?

Based on my analysis of the stack, it boils down to this:

Debugging can be narrowed down to one component and one lambda. In stateful applications you get extra noise from the framework, because components call each other and pass state between each other.

Each endpoint's cache can be isolated to one function instead of sharing the same working set. You could argue that stateful servers can do the same, but they simply don't have the resources.

It allows much cleaner code. The DI performed so inefficiently that just resolving it and returning data created a bottleneck of around 500ms.

We wanted to keep costs low. That was the entire point as well: we wanted to scale without committing a large sum of money to an API built mainly by broke college students in their free time.

We wanted to make the API easy to extend. The previous backend was so inflexible that its developer admitted they had basically built a server they couldn't maintain properly anymore.

And so, my goal was set: redo the entire f***ing thing from scratch in Next.js and code it in TypeScript, an industry-tested, type-safe, scalable JavaScript superset.

The Journey

The work began by porting everything to Next.js and TypeScript. I started by redoing the Mixin models we use, then ported a few of my own handlers. Keep in mind that at this point Nathan, the original author, wasn't on board yet; he only decided to join a week later, after some convincing.

PS: to give you some perspective, he argued that Next.js wasn't scalable, but I argued that if it weren't scalable, Netflix and GitHub wouldn't be using it. Let's just say that was also the reason I picked Next.

Of course, porting what is known to be a monolithic Express application is very daunting. Not all of the Express stuff applied: we had to code our own middleware and our own equivalents for other parts of the backend. Next is a very minimal framework at its best: it takes care of the routing and some of the React plumbing, but you still have to write a lot of your components on your own. That is a good thing, since too much abstraction just leads to unused code.
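To give an idea of what "coding our own middleware" can look like without Express, here is a minimal sketch using plain higher-order functions that wrap a handler. This is not the project's actual code: `allowMethods`, `withErrorHandling` and `shipsHandler` are hypothetical names, and `ApiRequest` / `ApiResponse` are small local stand-ins for Next.js's `NextApiRequest` / `NextApiResponse` so the snippet runs on its own.

```typescript
// Local stand-ins for NextApiRequest / NextApiResponse (assumption: only
// the few members used below), so the sketch runs without Next.js.
interface ApiRequest {
  method?: string;
  query: Record<string, string | string[] | undefined>;
}

interface ApiResponse {
  statusCode: number;
  body?: unknown;
  status(code: number): ApiResponse;
  json(payload: unknown): void;
}

type Handler = (req: ApiRequest, res: ApiResponse) => void | Promise<void>;

// Hypothetical middleware: reject requests that use a disallowed HTTP method.
function allowMethods(methods: string[], handler: Handler): Handler {
  return async (req, res) => {
    if (!req.method || !methods.includes(req.method)) {
      res.status(405).json({ error: "Method not allowed" });
      return;
    }
    await handler(req, res);
  };
}

// Hypothetical middleware: turn thrown errors into a JSON 500 response.
function withErrorHandling(handler: Handler): Handler {
  return async (req, res) => {
    try {
      await handler(req, res);
    } catch (err) {
      const message = err instanceof Error ? err.message : "Unknown error";
      res.status(500).json({ error: message });
    }
  };
}

// Tiny in-memory response object, used only to exercise the sketch.
function createResponse(): ApiResponse {
  return {
    statusCode: 200,
    body: undefined,
    status(code) {
      this.statusCode = code;
      return this;
    },
    json(payload) {
      this.body = payload;
    },
  };
}

// Middleware composes by nesting; the innermost function is the endpoint.
const shipsHandler: Handler = withErrorHandling(
  allowMethods(["GET"], (req, res) => {
    res.status(200).json({ name: req.query.name ?? null });
  })
);
```

In a real Next.js API route, the composed handler would be the default export of a file under `pages/api`, and each such file is deployed as its own function.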

Two weeks passed and the API had already reached feature parity. DI was no longer performed by abusing require(); we were using actual Mixin patterns with ECMAScript decorators, and boy, that experience made Nathan like the rewrite. Eventually we got a third person on board, and they helped us write tests for it.

Another week passed. We weren't focusing on parity anymore; we were just adding features. After a while, we decided to add MongoDB support and local function caching using a Map, and finally, in a historic moment, we merged everything to master.
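For readers who haven't seen the Mixin pattern, here is a rough sketch of the idea. It is not our actual code: `mixin`, `Filterable`, `Countable`, `ShipResolver` and the `Ship` fields are hypothetical. A class decorator is just a function that receives the class, so the sketch applies it with an ordinary call to stay runnable without any compiler flags; with decorator support enabled, the same function could be written as `@mixin(Filterable, Countable)` above the class.

```typescript
type Constructor<T = object> = new (...args: any[]) => T;

// Class-decorator factory: copies every prototype method of each
// mixin class onto the target class's prototype.
function mixin(...sources: Constructor[]) {
  return function <T extends Constructor>(target: T): T {
    for (const source of sources) {
      for (const name of Object.getOwnPropertyNames(source.prototype)) {
        if (name === "constructor") continue;
        const descriptor = Object.getOwnPropertyDescriptor(source.prototype, name);
        if (descriptor) Object.defineProperty(target.prototype, name, descriptor);
      }
    }
    return target;
  };
}

// Hypothetical data shape, for illustration only.
interface Ship {
  name: string;
  rarity: string;
}

// Hypothetical mixin: adds exact-match filtering to any class with `items`.
class Filterable {
  declare items: Ship[]; // supplied by the host class, not by the mixin
  filterBy(key: keyof Ship, value: string): Ship[] {
    return this.items.filter((item) => item[key] === value);
  }
}

// Hypothetical mixin: adds a simple count.
class Countable {
  declare items: Ship[]; // supplied by the host class, not by the mixin
  count(): number {
    return this.items.length;
  }
}

class ShipResolver {
  constructor(public items: Ship[]) {}
}

// Declaration merging tells the type checker about the mixed-in methods.
interface ShipResolver extends Filterable, Countable {}

// Equivalent to decorating the class with @mixin(Filterable, Countable).
mixin(Filterable, Countable)(ShipResolver);
```

The nice part compared to wiring things up through require() is that each capability lives in one small class, and a resolver opts in to exactly the ones it needs.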
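As a rough illustration of local function caching with a Map, here is a minimal sketch. The names (`functionCache`, `cached`) and the time-to-live expiry are assumptions for the example, not the project's real implementation. The idea is that module-level state typically survives for as long as a function instance stays warm, so repeated requests hitting the same instance can skip the expensive load.

```typescript
interface CacheEntry<T> {
  value: T;
  expiresAt: number; // epoch milliseconds
}

// Module-level Map: private to this function's instance, so one
// endpoint's cache never shares a working set with another's.
const functionCache = new Map<string, CacheEntry<unknown>>();

// Return the cached value for `key` if it is still fresh; otherwise call
// `load`, store the result for `ttlMs` milliseconds, and return it.
// `now` is injectable so the expiry logic can be tested without waiting.
async function cached<T>(
  key: string,
  ttlMs: number,
  load: () => Promise<T>,
  now: () => number = Date.now
): Promise<T> {
  const hit = functionCache.get(key);
  if (hit && hit.expiresAt > now()) {
    return hit.value as T;
  }
  const value = await load();
  functionCache.set(key, { value, expiresAt: now() + ttlMs });
  return value;
}
```

A handler would then wrap its data source call, for example `cached("ships", 60_000, loadShips)`, where `loadShips` is a placeholder for whatever pulls the dataset. A cold start simply begins with an empty Map, so the cache only ever saves work and is never required for correctness.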

And the API was never the same again.

Key Takeaways

The API is more dynamic and, thanks to Vercel, an API made by 3 volunteers now runs at global scale without any extra effort on our end.

One of the things we also improved is how we do Mixins and DI, and believe me, considering how it looked before:

The new Next.js and TypeScript code is much better. Next.js is hard to adapt to at first, but once you finish, adding features and maintaining the API becomes easier than before.

What's next

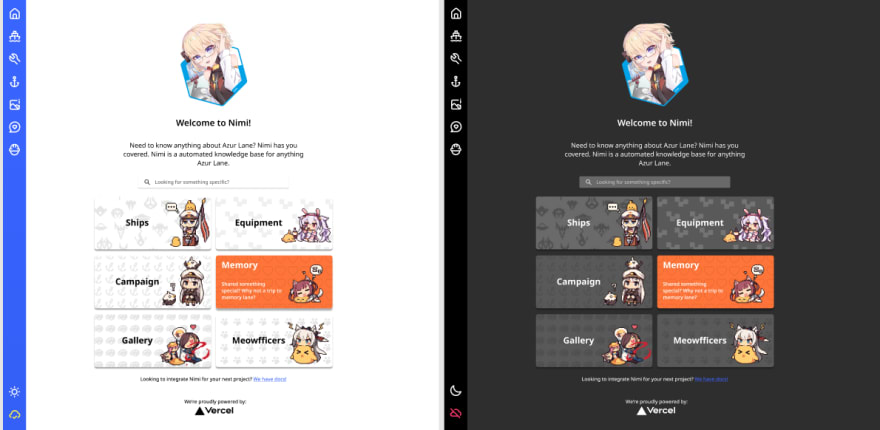

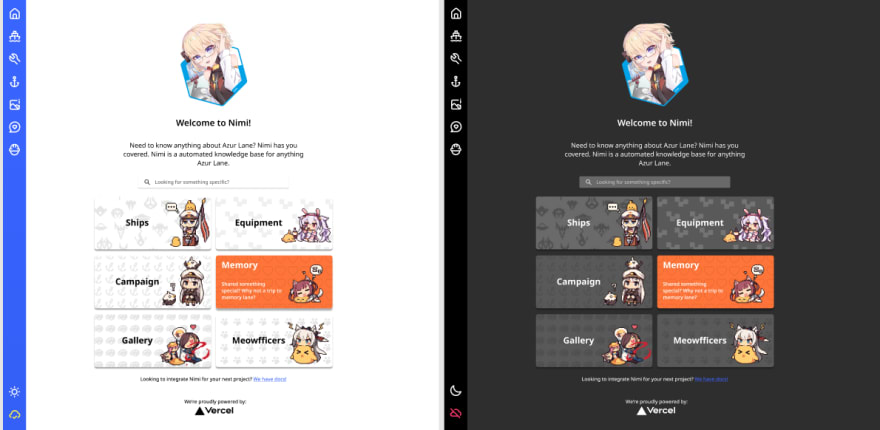

Of course we're not stopping there. Now that we have the API done, Nathan decided we should make a viewer, and here's a sneak peek of how it looks:

Interested in helping us out? Our repository is here:

The unofficial API for Azur Lane

Nimi

Welcome aboard! Nimi is the unofficial API that returns JSON data from Azur Lane and is always updated to the latest game version. Unlike most projects with the same mission, we're using a serverless approach to provide this data. However, since we're iterating rapidly, there might be some bugs, and of course we change some stuff really fast, so beware of maelstroms and icebergs, Captain!

Compared to previous version

Thanks to the stateless architecture of the new API, we are able to reach a much wider audience than before. And thanks to the new architecture and Vercel, the service is now accessible anywhere in the world; we're no longer isolated in one region! You're always 80ms or 160ms away from the service.

What's next?

We're just getting started. We have a viewer coming up (and it's fully automated unlike the Azur Lane Wiki), and…

Conclusion

It really shows that serverless, when done right, can replace a regular stateful server in cases like this. However, before you jump on a stack, make sure you do a stack analysis and see what works for your team and for your client.