Effortlessly explore the nuScenes dataset with SiaSearch

SiaSearch

Posted on April 12, 2021

A guide to better access, explore, and understand unstructured sensor data for autonomous driving development

To accelerate autonomous vehicle adoption, an increasing number of organizations and individuals are making their projects available to the public. Open data is fueling commercial and technological advancement in autonomous driving, and one of the most well-known resources is the nuScenes dataset.

Developed by the team at Motional (formerly nuTonomy), nuScenes is one of the most popular open-source datasets for autonomous driving. The nuScenes dataset enables researchers to study a wide range of urban driving situations using data captured by the full sensor suite of a self-driving car. The first dataset of its kind, nuScenes was a key player in cultivating a culture of data sharing and collaboration within the mobility industry.

To further advance this mission, Motional recently partnered with Berlin-based startup SiaSearch to introduce a completely new way to interact with nuScenes—by using a data curation platform to delve deeper into the data than ever before.

This guide will walk you through how to better access, explore and understand the nuScenes dataset with the SiaSearch platform.

What is the nuScenes Dataset?

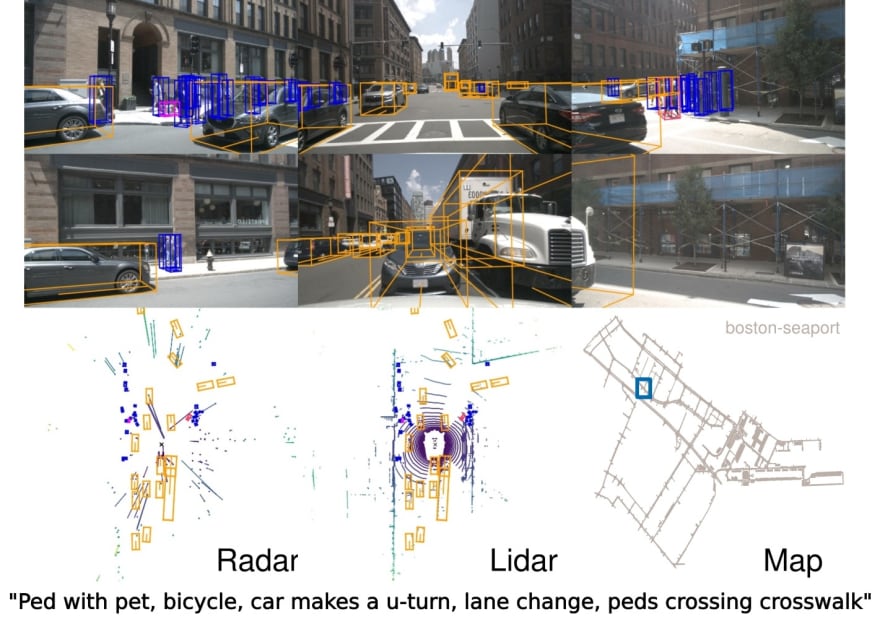

The nuScenes dataset is one of the largest public datasets for autonomous driving. The dataset contains a rich library of meticulously hand-annotated scenes collected by real self-driving cars. Recorded in Boston and Singapore, nuScenes features a diverse range of traffic situations, driving maneuvers, and unexpected behaviors.

The dataset includes:

- Full sensor suite: 32-beam LiDAR, 6 cameras, and 5 radars with complete 360° coverage

- 1000 urban street scenes, 20 seconds each

- 1,440,000 camera images

- 23 classes and 8 attributes
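To put these figures in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only the numbers from the list above and assumes, for simplicity, that images are spread evenly across cameras and scenes.

```python
# Figures quoted in the list above.
NUM_SCENES = 1000
SCENE_SECONDS = 20
NUM_CAMERAS = 6
NUM_IMAGES = 1_440_000

total_seconds = NUM_SCENES * SCENE_SECONDS      # total recorded driving time
total_hours = total_seconds / 3600
images_per_camera = NUM_IMAGES // NUM_CAMERAS

# Assumption: images are spread evenly across cameras and scenes.
frames_per_second = images_per_camera / total_seconds

print(f"{total_hours:.1f} hours of driving")
print(f"{images_per_camera} images per camera")
print(f"{frames_per_second:.0f} images per second per camera")
```

In other words, the dataset covers roughly five and a half hours of driving, with each camera contributing about 12 images per second.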

Accessing nuScenes data in SiaSearch

To access the data yourself, you’ll need to sign up for a free account on SiaSearch. After creating an account, you can immediately load and visualize nuScenes data within the web-based GUI.

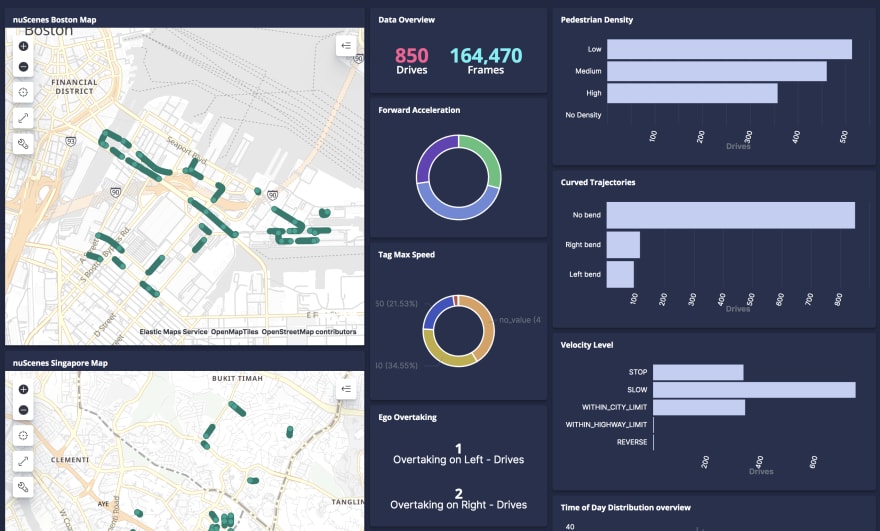

Now that we’re all set up, let’s start exploring! Upon loading nuScenes in SiaSearch, you’ll see the main dashboard, which captures key features of the dataset. This view lets you quickly understand the overall dataset composition, as well as identify any gaps in data distribution.
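If you want to reproduce this kind of gap check outside the GUI, the idea can be sketched in a few lines of Python. This is an illustration only: the `underrepresented` helper, the 10% threshold, and the weather labels are made up for the example and are not part of SiaSearch or nuScenes.

```python
from collections import Counter


def underrepresented(values, min_share=0.1):
    """Return {value: share} for values that make up less than min_share of the records."""
    counts = Counter(values)
    total = sum(counts.values())
    return {v: c / total for v, c in counts.items() if c / total < min_share}


# Hypothetical per-scene weather labels, for illustration only.
weather = ["clear"] * 14 + ["rain"] * 5 + ["snow"] * 1

print(underrepresented(weather))
```

Any label that falls below the chosen share is a candidate gap worth investigating before training.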

Querying the nuScenes Dataset

Having a holistic view of the dataset, while useful, is not enough. The ability to drill into specific subsets can uncover insights and imbalances in the data—a critical step in model building and validation.

SiaSearch makes every piece of nuScenes data searchable against all available and auto-extracted dimensions using its search interface. The platform offers two ways to search for the exact sequences you want: a visual interface and a code interface. The visual query lets you select extractors from a list of semantic attributes and driving situations, while the code query lets you type the same conditions as a text expression, much as you would in an API call.

For example, to search for rainy scenes with at least three cars in view, further narrowed by the vehicle-following, road-joint, and forward-velocity attributes, you could submit the following query using the code interface:

vehicle_following = 'True' AND precip_type = 'RAIN' AND
road_joint = 'True' AND forward_velocity >= 5 AND num_cars >= 3

Within seconds, the API returns clips that match the query attributes. This makes it easy to search the entire data lake to return situations that fit the case in question. From the search interface, specific clips can be selected by clicking into them to reveal more details, such as charts for forward velocity and acceleration profiles.
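When the same search needs to be repeated with different thresholds, it can help to assemble the query text programmatically. The sketch below rebuilds the example query from a list of conditions. Note that `build_query` is a hypothetical helper written for this guide, not a SiaSearch function, and how the resulting string is submitted to the platform is not covered here.

```python
def build_query(conditions):
    """Join (attribute, operator, value) triples into one AND-ed query string."""
    parts = []
    for attribute, operator, value in conditions:
        # String values are single-quoted, matching the example query's style.
        rendered = f"'{value}'" if isinstance(value, str) else str(value)
        parts.append(f"{attribute} {operator} {rendered}")
    return " AND ".join(parts)


# The attribute names and values are taken from the example query in this guide.
conditions = [
    ("vehicle_following", "=", "True"),
    ("precip_type", "=", "RAIN"),
    ("road_joint", "=", "True"),
    ("forward_velocity", ">=", 5),
    ("num_cars", ">=", 3),
]

query = build_query(conditions)
print(query)
```

Keeping the conditions in a list makes it easy to change one threshold, such as `num_cars`, and rerun the search.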

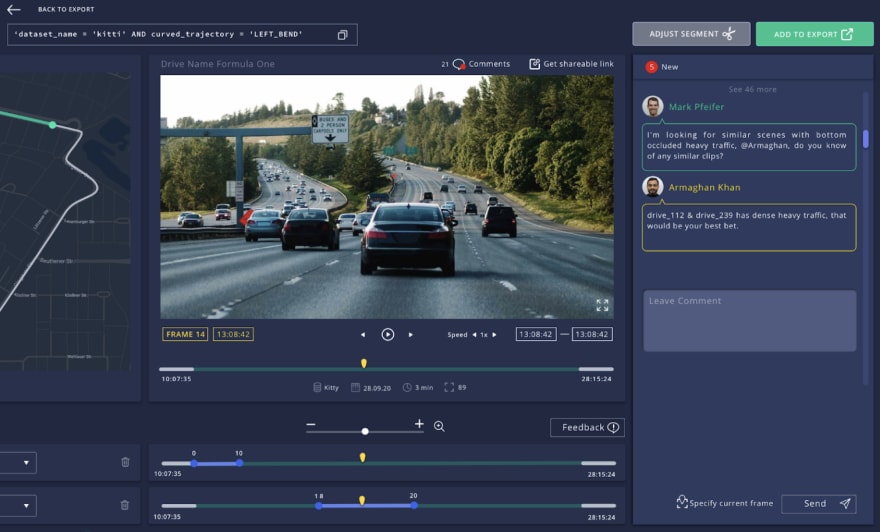

Curating custom training datasets from nuScenes

Another key benefit of using the platform is the ability to transform data to produce custom training datasets. The flexibility of the SiaSearch platform allows you to fine-tune the data you use for training and testing, so you can measure the effect on your models.

For example, you may want to isolate the data right before or after an unprotected left turn in order to contextualize vehicle behavior. The SiaSearch interface gives you the ability to adjust the length of snippets to match your requirements in just a few clicks.

Here are just a few ways you can use SiaSearch to transform data:

- Adjust clip length by clicking the adjust clip button in the replay viewer, then trim or expand snippets according to your needs.

- Add custom tags to selected segments to produce new subsets of data.

- Leave comments for collaborators on a frame or sequence level to notify them of any questions or feedback.
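To make the clip adjustment concrete, here is a minimal Python sketch of the underlying arithmetic: widen a clip by a few seconds on either side of an event and clamp the result to the 20-second scene. The `adjust_clip` function and the timestamps are hypothetical and only illustrate the idea; in SiaSearch the adjustment is done through the replay viewer.

```python
def adjust_clip(start, end, pad_before=0.0, pad_after=0.0, scene_length=20.0):
    """Widen (or, with negative padding, trim) a clip and clamp it to the scene bounds."""
    new_start = max(0.0, start - pad_before)
    new_end = min(scene_length, end + pad_after)
    if new_start >= new_end:
        raise ValueError("adjusted clip would be empty")
    return new_start, new_end


# Hypothetical left turn between 8.0 s and 11.5 s of a 20-second scene,
# with 3 seconds of context added on each side.
print(adjust_clip(8.0, 11.5, pad_before=3.0, pad_after=3.0))

# A clip near the scene edges is clamped to the scene itself.
print(adjust_clip(1.0, 18.5, pad_before=3.0, pad_after=3.0))
```

Negative padding values trim the clip instead of expanding it.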

Now that we’ve selected our training samples, we need to find a way to feed the data back into the computer vision model.

Exporting data from SiaSearch

The final step is to export your data into a format you can import directly into your model. To access the export page, add one or more snippets to your export list from either the search or playback pages.

On the export page, you can review a breakdown of the selected snippets based on their associated queries, as well as basic statistics to get an idea of the quantity and contents of the export. When you’re happy with your results, you can export the raw data and/or specific metadata attributes as a Parquet file to feed back into your model or validation pipeline.
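As a rough sketch of what the next step might look like, the Python below reads an exported Parquet file with pandas and splits the rows into training and validation sets. The exact columns in a SiaSearch export are not described in this guide, so the `scene_id` column, the file name, and both helper functions are assumptions for illustration. Reading Parquet with pandas also requires an engine such as pyarrow.

```python
import random


def load_export(path):
    """Read an exported Parquet file into a list of row dicts (needs pandas and pyarrow)."""
    import pandas as pd  # imported lazily so the split below works without pandas

    return pd.read_parquet(path).to_dict("records")


def split_train_val(rows, key="scene_id", val_fraction=0.2, seed=0):
    """Split rows so that all rows sharing the same `key` land on the same side."""
    groups = sorted({row[key] for row in rows})
    random.Random(seed).shuffle(groups)
    n_val = max(1, round(len(groups) * val_fraction))
    val_groups = set(groups[:n_val])
    train = [row for row in rows if row[key] not in val_groups]
    val = [row for row in rows if row[key] in val_groups]
    return train, val


# Usage, assuming a hypothetical export file and a "scene_id" column:
# rows = load_export("siasearch_export.parquet")
# train_rows, val_rows = split_train_val(rows)
```

Splitting by scene rather than by row keeps frames from the same drive out of both sets at once, which avoids overly optimistic validation results.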

Carefully curated data is critical in the development of any great computer vision model. At SiaSearch, we are determined to accelerate ADAS development by empowering engineers with full access and visibility into their data.

If you found this article interesting, please leave a comment and follow us for similar content. For more information about the SiaSearch platform, please check out our website at https://www.siasearch.io/.

nuScenes is available for commercial use under our commercial license agreement. Please reach out to nuScenes@motional.com to learn more.
