Web scraping Google Play App Reviews with Node.js

Mikhail Zub

Posted on November 8, 2022

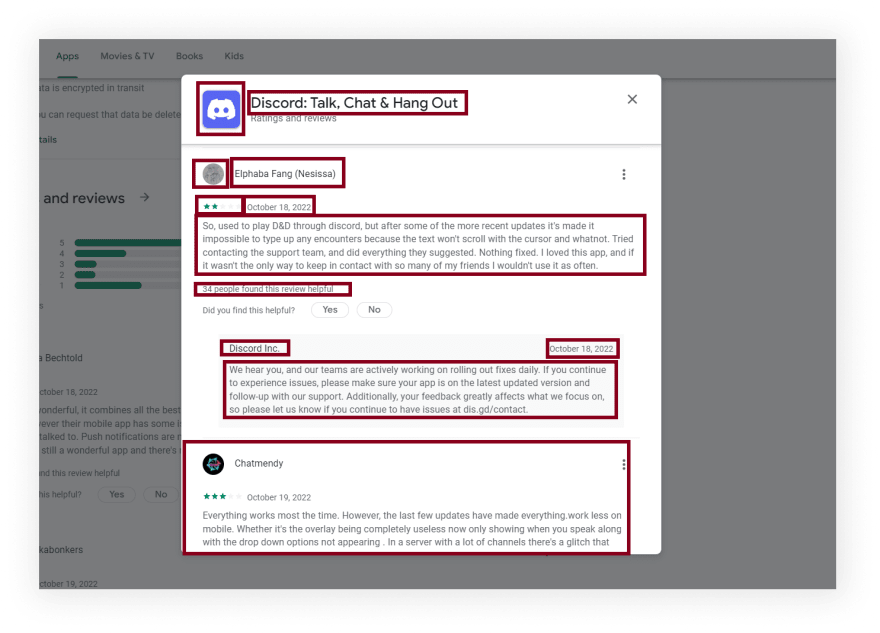

What will be scraped

📌Note: You can use the official Google Play Developer API, which has a default limit of 200,000 requests per day, to retrieve the list of reviews and individual reviews.

You can also use google-play-scraper, a complete third-party Google Play Store App scraping solution. Third-party solutions are usually used to get around the quota limit.

This blog post gives an idea and step-by-step examples of how to scrape Google Play Store App Reviews using Puppeteer, so you can create something of your own.

Full code

If you don't need an explanation, have a look at the full code example in the online IDE:

const puppeteer = require("puppeteer-extra");
const StealthPlugin = require("puppeteer-extra-plugin-stealth");

puppeteer.use(StealthPlugin());

const reviewsLimit = 100; // hardcoded limit for demonstration purpose

const searchParams = {
  id: "com.discord", // Parameter defines the ID of a product you want to get the results for
  hl: "en", // Parameter defines the language to use for the Google search
  gl: "us", // parameter defines the country to use for the Google search
};

const URL = `https://play.google.com/store/apps/details?id=${searchParams.id}&hl=${searchParams.hl}&gl=${searchParams.gl}`;

async function scrollPage(page, clickElement, scrollContainer) {
  let lastHeight = await page.evaluate(`document.querySelector("${scrollContainer}").scrollHeight`);
  while (true) {
    await page.click(clickElement);
    await page.waitForTimeout(500);
    await page.keyboard.press("End");
    await page.waitForTimeout(2000);
    let newHeight = await page.evaluate(`document.querySelector("${scrollContainer}").scrollHeight`);
    const reviews = await page.$$(".RHo1pe");
    if (newHeight === lastHeight || reviews.length > reviewsLimit) {
      break;
    }
    lastHeight = newHeight;
  }
}

async function getReviewsFromPage(page) {
  return await page.evaluate(() => ({
    reviews: Array.from(document.querySelectorAll(".RHo1pe")).map((el) => ({
      title: el.querySelector(".X5PpBb")?.textContent.trim(),
      avatar: el.querySelector(".gSGphe > img")?.getAttribute("srcset")?.slice(0, -3),
      rating: parseInt(el.querySelector(".Jx4nYe > div")?.getAttribute("aria-label")?.slice(6)),
      snippet: el.querySelector(".h3YV2d")?.textContent.trim(),
      likes: parseInt(el.querySelector(".AJTPZc")?.textContent.trim()) || "No likes",
      date: el.querySelector(".bp9Aid")?.textContent.trim(),
      response: {
        title: el.querySelector(".ocpBU .I6j64d")?.textContent.trim(),
        snippet: el.querySelector(".ocpBU .ras4vb")?.textContent.trim(),
        date: el.querySelector(".ocpBU .I9Jtec")?.textContent.trim(),
      },
    })),
  }));
}

async function getAppReviews() {
  const browser = await puppeteer.launch({
    headless: true, // if you want to see what the browser is doing, you need to change this option to "false"
    args: ["--no-sandbox", "--disable-setuid-sandbox"],
  });

  const page = await browser.newPage();

  await page.setDefaultNavigationTimeout(60000);
  await page.goto(URL);

  await page.waitForSelector(".qZmL0");

  const moreReviewButton = await page.$("c-wiz[jsrenderer='C7s1K'] .VMq4uf button");

  if (moreReviewButton) {
    await page.click("c-wiz[jsrenderer='C7s1K'] .VMq4uf button");
    await page.waitForSelector(".RHo1pe .h3YV2d");
    await scrollPage(page, ".RHo1pe .h3YV2d", ".odk6He");
  }

  const reviews = await getReviewsFromPage(page);

  await browser.close();

  return reviews;
}

getAppReviews().then((result) => console.dir(result, { depth: null }));

Preparation

First, we need to create a Node.js* project and add the npm packages puppeteer, puppeteer-extra and puppeteer-extra-plugin-stealth to control Chromium (or Chrome, or Firefox, but here we work only with Chromium, which is used by default) over the DevTools Protocol in headless or non-headless mode.

To do this, in the directory with our project, open the command line and enter:

$ npm init -y

And then:

$ npm i puppeteer puppeteer-extra puppeteer-extra-plugin-stealth

*If you don't have Node.js installed, you can download it from nodejs.org and follow the installation documentation.

📌Note: You can also use puppeteer without any extensions, but I strongly recommend using it with puppeteer-extra and puppeteer-extra-plugin-stealth to prevent websites from detecting that you are using headless Chromium or a web driver. You can check this on the Chrome headless tests website. The screenshot below shows the difference.

Process

First of all, we need to scroll through all the reviews until no more reviews load, which is the difficult part described below.

The next step is to extract data from HTML elements after scrolling is finished. Getting the right CSS selectors is fairly easy with the SelectorGadget Chrome extension, which lets us grab CSS selectors by clicking on the desired element in the browser. However, it does not always work perfectly, especially when the website relies heavily on JavaScript.

We have a dedicated Web Scraping with CSS Selectors blog post at SerpApi if you want to know a little bit more about them.

The GIF below illustrates the approach of selecting different parts of the results using SelectorGadget.

Code explanation

Declare puppeteer from the puppeteer-extra library to control the Chromium browser, and StealthPlugin from the puppeteer-extra-plugin-stealth library to prevent websites from detecting that you are using a web driver:

const puppeteer = require("puppeteer-extra");
const StealthPlugin = require("puppeteer-extra-plugin-stealth");

Next, we tell puppeteer to use StealthPlugin, write the necessary request parameters and the search URL, and set how many reviews we want to receive (reviewsLimit constant):

puppeteer.use(StealthPlugin());

const reviewsLimit = 100; // hardcoded limit for demonstration purpose

const searchParams = {
  id: "com.discord", // Parameter defines the ID of a product you want to get the results for
  hl: "en", // Parameter defines the language to use for the Google search
  gl: "us", // parameter defines the country to use for the Google search
};

const URL = `https://play.google.com/store/apps/details?id=${searchParams.id}&hl=${searchParams.hl}&gl=${searchParams.gl}`;
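The template literal above works because the app ID and locale codes contain no characters that need escaping. As a minimal sketch, assuming you want the same URL built from arbitrary parameter values, the standard URLSearchParams class can take care of the encoding. The buildAppUrl helper name is hypothetical and not part of the original code:

```javascript
// Hypothetical helper (not in the original post): builds the app page URL
// and lets URLSearchParams handle percent-encoding of the values.
const PLAY_DETAILS_URL = "https://play.google.com/store/apps/details";

function buildAppUrl({ id, hl, gl }) {
  const query = new URLSearchParams({ id, hl, gl });
  return `${PLAY_DETAILS_URL}?${query.toString()}`;
}

console.log(buildAppUrl({ id: "com.discord", hl: "en", gl: "us" }));
```

For the parameters used in this post, the result is the same string as the URL constant above.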

Next, we write a function to scroll the page to load all reviews:

async function scrollPage(page, clickElement, scrollContainer) {
  ...
}

In this function, we first get the scrollContainer height (using the evaluate() method).

Then we use a while loop in which we click (click() method) on a review element to keep focus inside the container, wait 0.5 seconds (using the waitForTimeout() method), press the "End" key to scroll to the last review element, wait 2 seconds, and get the new scrollContainer height.

Next, if newHeight is equal to lastHeight or the number of loaded reviews is greater than reviewsLimit, we stop the loop. Otherwise, we assign the newHeight value to the lastHeight variable and repeat until the page is scrolled down to the end:

  let lastHeight = await page.evaluate(`document.querySelector("${scrollContainer}").scrollHeight`);
  while (true) {
    await page.click(clickElement);
    await page.waitForTimeout(500);
    await page.keyboard.press("End");
    await page.waitForTimeout(2000);
    let newHeight = await page.evaluate(`document.querySelector("${scrollContainer}").scrollHeight`);
    const reviews = await page.$$(".RHo1pe");
    if (newHeight === lastHeight || reviews.length > reviewsLimit) {
      break;
    }
    lastHeight = newHeight;
  }
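The exit condition of the loop can be pulled out into a small pure function, which makes it easy to check without a browser. The sketch below is one way to do that; shouldStopScrolling and sleep are hypothetical names, not part of the original code. The sleep helper is a plain setTimeout wrapper that could stand in for page.waitForTimeout(), which, as far as I know, was deprecated and later removed in newer Puppeteer releases:

```javascript
// Hypothetical helpers, not part of the original code.

// Resolves after `ms` milliseconds; a simple pause for use inside async code.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Mirrors the check in the loop above: stop when the container stopped
// growing or when more than `limit` reviews are already loaded.
function shouldStopScrolling(lastHeight, newHeight, loadedCount, limit) {
  return newHeight === lastHeight || loadedCount > limit;
}

console.log(shouldStopScrolling(1200, 1200, 40, 100)); // height unchanged
console.log(shouldStopScrolling(1200, 2400, 40, 100)); // still loading
console.log(shouldStopScrolling(1200, 2400, 120, 100)); // limit exceeded
```

Inside scrollPage, `await sleep(500)` would then take the place of `await page.waitForTimeout(500)`.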

Next, we write a function to get reviews data from the page:

async function getReviewsFromPage(page) {
  ...
}

In this function, we get information from the page context and save it in the returned object. First, we get all HTML elements matching the ".RHo1pe" selector (querySelectorAll() method).

Then we use the map() method to iterate over an array built with the Array.from() method:

  return await page.evaluate(() => ({
    reviews: Array.from(document.querySelectorAll(".RHo1pe")).map((el) => ({
      ...
    })),
  }));

And finally, we need to get all the data using the following methods:

      title: el.querySelector(".X5PpBb")?.textContent.trim(),
      avatar: el.querySelector(".gSGphe > img")?.getAttribute("srcset")?.slice(0, -3),
      rating: parseInt(el.querySelector(".Jx4nYe > div")?.getAttribute("aria-label")?.slice(6)),
      snippet: el.querySelector(".h3YV2d")?.textContent.trim(),
      likes: parseInt(el.querySelector(".AJTPZc")?.textContent.trim()) || "No likes",
      date: el.querySelector(".bp9Aid")?.textContent.trim(),
      response: {
        title: el.querySelector(".ocpBU .I6j64d")?.textContent.trim(),
        snippet: el.querySelector(".ocpBU .ras4vb")?.textContent.trim(),
        date: el.querySelector(".ocpBU .I9Jtec")?.textContent.trim(),
      },
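The rating, likes and avatar expressions rely on the exact shape of the text on the page. As a hedged sketch, the same parsing can be written as standalone functions that tolerate missing values. The text formats used here (for example "Rated 4 stars out of five stars" and "29 people found this review helpful") are assumptions inferred from the slice(6), parseInt() and slice(0, -3) calls above, and the helper names are hypothetical:

```javascript
// Hypothetical helpers; the text formats are assumptions, not confirmed markup.

// Extracts the first integer from a string, or returns null if there is none.
function firstInteger(text) {
  const match = /\d+/.exec(text ?? "");
  return match ? Number.parseInt(match[0], 10) : null;
}

// "Rated 4 stars out of five stars" -> 4
const parseRating = (ariaLabel) => firstInteger(ariaLabel);

// "29 people found this review helpful" -> 29; missing element -> 0
const parseLikes = (text) => firstInteger(text) ?? 0;

// Keeps only the URL part of a srcset entry such as "<url> 2x".
const parseAvatar = (srcset) => srcset?.trim().split(/\s+/)[0] ?? null;

console.log(parseRating("Rated 4 stars out of five stars"));
console.log(parseLikes(undefined));
console.log(parseAvatar("https://example.com/avatar=s64-rw 2x"));
```

Returning 0 instead of the "No likes" string keeps the likes field numeric for every review. Because page.evaluate() runs its callback in the browser context, helpers like these would need to be defined inside that callback, or applied in Node.js to the raw strings it returns.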

Next, we write a function to control the browser and get the information:

async function getAppReviews() {
  ...
}

In this function, we first define browser using the puppeteer.launch({options}) method with the options headless: true and args: ["--no-sandbox", "--disable-setuid-sandbox"].

These options mean that we use headless mode and pass an array of arguments that allows the browser process to launch in the online IDE. Then we open a new page:

  const browser = await puppeteer.launch({
    headless: true, // if you want to see what the browser is doing, you need to change this option to "false"
    args: ["--no-sandbox", "--disable-setuid-sandbox"],
  });

  const page = await browser.newPage();

Next, we change the default navigation timeout from 30 sec to 60000 ms (1 min) for slow internet connections with the .setDefaultNavigationTimeout() method, go to URL with the .goto() method, and use the .waitForSelector() method to wait until the selector is loaded:

  await page.setDefaultNavigationTimeout(60000);
  await page.goto(URL);

  await page.waitForSelector(".qZmL0");
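Note that setDefaultNavigationTimeout() only affects navigation methods such as goto(); the wait used by waitForSelector() is governed by setDefaultTimeout(). A minimal sketch of setting both follows, assuming a hypothetical configureTimeouts helper and a stand-in page object so that it runs without launching a browser:

```javascript
// Hypothetical helper, not part of the original code. `page` is expected to be
// a Puppeteer Page; both methods called here exist on it.
function configureTimeouts(page, { navigationMs = 60000, selectorMs = 60000 } = {}) {
  page.setDefaultNavigationTimeout(navigationMs); // goto() and other navigation
  page.setDefaultTimeout(selectorMs); // waitForSelector() and other waits
  return page;
}

// Stand-in object that only records the calls made on it.
const fakePage = {
  calls: [],
  setDefaultNavigationTimeout(ms) {
    this.calls.push(["navigation", ms]);
  },
  setDefaultTimeout(ms) {
    this.calls.push(["default", ms]);
  },
};

configureTimeouts(fakePage, { navigationMs: 60000, selectorMs: 45000 });
console.log(fakePage.calls);
```

In getAppReviews, the call would be `configureTimeouts(page)` right after `browser.newPage()`.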

And finally, we check whether the "show all reviews" button is present on the page (using the $() method). If it is, we click it and wait until the page is scrolled. Then we save the reviews data from the page in the reviews constant, close the browser, and return the received data:

  const moreReviewButton = await page.$("c-wiz[jsrenderer='C7s1K'] .VMq4uf button");

  if (moreReviewButton) {
    await page.click("c-wiz[jsrenderer='C7s1K'] .VMq4uf button");
    await page.waitForSelector(".RHo1pe .h3YV2d");
    await scrollPage(page, ".RHo1pe .h3YV2d", ".odk6He");
  }

  const reviews = await getReviewsFromPage(page);

  await browser.close();

  return reviews;
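If scrolling or parsing throws, the code above never reaches browser.close(), and the Chromium process may keep running. One common way to guard against that is a try/finally wrapper. The sketch below is an assumption about how that could look, with a hypothetical withBrowser helper that takes the launch function as an argument so it can be exercised without Puppeteer:

```javascript
// Hypothetical helper, not part of the original code.
// `launch` is any async function returning an object with an async close(),
// e.g. () => puppeteer.launch({ headless: true }).
async function withBrowser(launch, task) {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    await browser.close(); // runs whether the task resolved or threw
  }
}

// Stand-in browser so the sketch runs on its own.
const closed = [];
const fakeLaunch = async () => ({ close: async () => closed.push("closed") });

withBrowser(fakeLaunch, async () => {
  throw new Error("scraping failed");
}).catch((error) => {
  console.log(error.message, closed);
});
```

The error still reaches the caller, but only after the browser has been closed.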

Now we can launch our parser:

$ node YOUR_FILE_NAME # YOUR_FILE_NAME is the name of your .js file

Output

{

"reviews":[

{

"title":"Faera Rathion",

"avatar":"https://play-lh.googleusercontent.com/a-/ACNPEu_jb8bwx7nBMUAm6ogXkSy2udBVV7GYnygiESuv=s64-rw",

"rating":1,

"snippet":"I would've given this 5 stars a few months ago, being a long time user, but these recent updates have made the app extremely frustrating to use. I get randomly put into channels when I open the app, they scroll me back sometimes hundreds of messages, it's impossible to see all the channels in some Discords, doesn't clear notifications without having to try to fully scroll through a channel I was mentioned in to the point of having to refresh it multiple times and many more consistent issues.",

"likes":2,

"date":"October 19, 2022",

"response":{

"title":"Discord Inc.",

"snippet":"We're sorry for the inconvenience. We hear you and our teams are actively working on rolling out fixes daily. If you continue to experience issues, please make sure your app is on the latest updated version. Also, your feedback greatly affects what we focus on so please let us know if you continue to have issues at dis.gd/contact.",

"date":"October 19, 2022"

}

},

{

"title":"Avoxx Nepps",

"avatar":"https://play-lh.googleusercontent.com/a-/ACNPEu_WAW8BQ6SiTqR2gFzjxXpjSjFiAEx3E3cMKGQ1w5o=s64-rw",

"rating":2,

"snippet":"The new update has made it borderline unusable. It is extremely glitchy and a lot of times doesn't even work properly. Can't even join a voice call without it leaving and rejoining by itself or muting me for unknown reason. The new video system absolutely sucks. All of the minor inconveniences the previous version had is nothing compared to this update which looks like it was thrown together by a team of teenagers in Middle School in a month for a school project.",

"likes":"No likes",

"date":"October 20, 2022",

"response":{

"title":"Discord Inc.",

"snippet":"We'd like to know more about the issues you've encountered after the recent update. Could you please submit a support ticket so we can look into the issue?: dis.gd/contact If you have any suggestions about what should be changed or improved, please share them on our Feedback page here: dis.gd/feedback",

"date":"October 21, 2022"

}

},

...and other reviews

]

}

Using Google Play Product API from SerpApi

This section compares the DIY solution with our solution.

The biggest difference is that you don't need to create the parser from scratch and maintain it.

There's also a chance that Google will block the request at some point. We handle that on our backend, so there's no need to figure out how to do it yourself or which CAPTCHA solver or proxy provider to use.

First, we need to install google-search-results-nodejs:

$ npm i google-search-results-nodejs

Here's the full code example, if you don't need an explanation:

const SerpApi = require("google-search-results-nodejs");
const search = new SerpApi.GoogleSearch(process.env.API_KEY); //your API key from serpapi.com

const reviewsLimit = 100; // hardcoded limit for demonstration purpose

const params = {
  engine: "google_play_product", // search engine
  gl: "us", // parameter defines the country to use for the Google search
  hl: "en", // parameter defines the language to use for the Google search
  store: "apps", // parameter defines the type of Google Play store
  product_id: "com.discord", // Parameter defines the ID of a product you want to get the results for.
  all_reviews: "true", // Parameter is used for retrieving all reviews of a product
};

const getJson = () => {
  return new Promise((resolve) => {
    search.json(params, resolve);
  });
};

const getResults = async () => {
  const allReviews = [];
  while (true) {
    const json = await getJson();
    if (json.reviews) {
      allReviews.push(...json.reviews);
    } else break;
    if (json.serpapi_pagination?.next_page_token) {
      params.next_page_token = json.serpapi_pagination?.next_page_token;
    } else break;
    if (allReviews.length > reviewsLimit) break;
  }
  return allReviews;
};

getResults().then((result) => console.dir(result, { depth: null }));

Code explanation

First, we need to declare SerpApi from the google-search-results-nodejs library and define a new search instance with your API key from SerpApi:

const SerpApi = require("google-search-results-nodejs");
const search = new SerpApi.GoogleSearch(process.env.API_KEY);

Next, we write how many reviews we want to receive (reviewsLimit constant) and the necessary parameters for making a request:

const reviewsLimit = 100; // hardcoded limit for demonstration purpose

const params = {
  engine: "google_play_product", // search engine
  gl: "us", // parameter defines the country to use for the Google search
  hl: "en", // parameter defines the language to use for the Google search
  store: "apps", // parameter defines the type of Google Play store
  product_id: "com.discord", // Parameter defines the ID of a product you want to get the results for.
  all_reviews: "true", // Parameter is used for retrieving all reviews of a product
};

Next, we wrap the search method from the SerpApi library in a promise to further work with the search results:

const getJson = () => {
  return new Promise((resolve) => {
    search.json(params, resolve);
  });
};
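The wrapper above closes over the module-level search and params. As a small sketch, the same idea can take both as arguments, which makes it reusable and lets it be tried out with a stub. The stub object below is a stand-in for the SerpApi client and only imitates the json(params, callback) call shape used in this post; getJsonFrom is a hypothetical name:

```javascript
// Same wrapper as above, but with `search` and `params` passed in explicitly.
const getJsonFrom = (search, params) =>
  new Promise((resolve) => {
    search.json(params, resolve);
  });

// Stand-in for the SerpApi client (an assumption for illustration only):
// it calls the callback with a fixed object instead of making a request.
const stubSearch = {
  json(params, callback) {
    callback({ engine: params.engine, reviews: [{ title: "Example user" }] });
  },
};

getJsonFrom(stubSearch, { engine: "google_play_product" }).then((json) => {
  console.log(json.reviews.length);
});
```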

And finally, we declare the function getResults that gets data from the page and returns it:

const getResults = async () => {
  ...
};

In this function, we first declare an array allReviews to hold the results data:

  const allReviews = [];

Next, we use a while loop. In this loop we get the JSON with results, check whether reviews are present on the page, push them (push() method) to the allReviews array (using spread syntax), set next_page_token in the params object, and repeat the loop until no more results are present or the number of received reviews is greater than reviewsLimit:

  while (true) {
    const json = await getJson();
    if (json.reviews) {
      allReviews.push(...json.reviews);
    } else break;
    if (json.serpapi_pagination?.next_page_token) {
      params.next_page_token = json.serpapi_pagination?.next_page_token;
    } else break;
    if (allReviews.length > reviewsLimit) break;
  }
  return allReviews;
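Because the limit is checked only after a whole page of reviews has been pushed, the loop above can return more than reviewsLimit items. A sketch of the same token-based loop, with the page fetcher injected and the result trimmed to the limit, could look like this. collectReviews and the fake pages are hypothetical and only imitate the reviews and serpapi_pagination.next_page_token fields used above:

```javascript
// Hypothetical variant of the loop above. `fetchPage` receives the current
// page token (undefined for the first page) and resolves to a response object.
async function collectReviews(fetchPage, limit) {
  const all = [];
  let token;
  while (all.length < limit) {
    const json = await fetchPage(token);
    if (!json.reviews?.length) break; // no reviews on this page
    all.push(...json.reviews);
    token = json.serpapi_pagination?.next_page_token;
    if (!token) break; // last page reached
  }
  return all.slice(0, limit); // never return more than `limit`
}

// Fake three-page API so the sketch runs without an API key.
const fakePages = {
  first: { reviews: [1, 2, 3], serpapi_pagination: { next_page_token: "p2" } },
  p2: { reviews: [4, 5, 6], serpapi_pagination: { next_page_token: "p3" } },
  p3: { reviews: [7, 8] },
};
const fakeFetch = async (token) => fakePages[token ?? "first"];

collectReviews(fakeFetch, 5).then((reviews) => console.log(reviews));
```

With the SerpApi client, fetchPage could be a function that sets params.next_page_token when a token is given and then calls getJson().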

After that, we run the getResults function and print all the received information in the console with the console.dir method, which allows you to use an object with the necessary parameters to change default output options:

getResults().then((result) => console.dir(result, { depth: null }));

Output

[

{

"title":"Johnathan Kamuda",

"avatar":"https://play-lh.googleusercontent.com/a-/ACNPEu9QaKcoysS5G21Q5DQxs5nm2pg07GfJa-M_ezvOWfU",

"rating":5,

"snippet":"Been using Discord for many, many years. They are always making it better. It's become so much more robust and feature filled since I first started using it. And it's platform to pay for extras is great. You don't NEED to, but it's nice to have that kind of service a available if we wanted some perks. I think some of the options could be laid out better. Personal example - changing individuals volume in a call, not an intuitive option to find at first. Things like that fixed, would be perfect.",

"likes":29,

"date":"October 19, 2022"

},

{

"title":"Lark Reid",

"avatar":"https://play-lh.googleusercontent.com/a-/ACNPEu-RDynxDvoH-8_jnUj48AbZvXYrrafsLP3WT0fyTA",

"rating":1,

"snippet":"Ever since the new update me and other people that I know have completely lost the ability to upload more than one image/video at a time. It freezes on 70-100% when uploading multiple at a time. Now the audio on videos that I upload turn into static. I played the videos on my phone to make sure they weren't corrupted, and they are just fine. Sometimes when I open the app it gets stuck connecting and I have to restart it. Please fix your app asap. It's just not my phone that is effected.",

"likes":84,

"date":"October 21, 2022",

"response":{

"title":"Discord Inc.",

"snippet":"We're sorry for the inconvenience. We hear you and our teams are actively working on rolling out fixes daily. If you continue to experience issues, please make sure your app is on the latest updated version. Also, your feedback greatly affects what we focus on so please let us know if you continue to have issues at dis.gd/contact.",

"date":"October 21, 2022"

}

},

... and other reviews

]

Links

If you want to see some projects made with SerpApi, write me a message.

Add a Feature Request💫 or a Bug🐞
