Using Yelp Reviews API from SerpApi with Python

Artur Chukhrai

Posted on January 28, 2023

Intro

In this blog post, we'll go through the process of extracting reviews from the Yelp Place search page using the Yelp Reviews API and the Python programming language. You can look at the complete code in the online IDE (Replit).

In order to successfully extract Yelp Reviews, you will need to pass the place_id parameter, which identifies the specific place whose reviews are returned. You can extract this parameter from the organic results. Have a look at the Scrape Yelp Filters, Ad and Organic Results with Python blog post, in which I described in detail how to extract all the needed data.

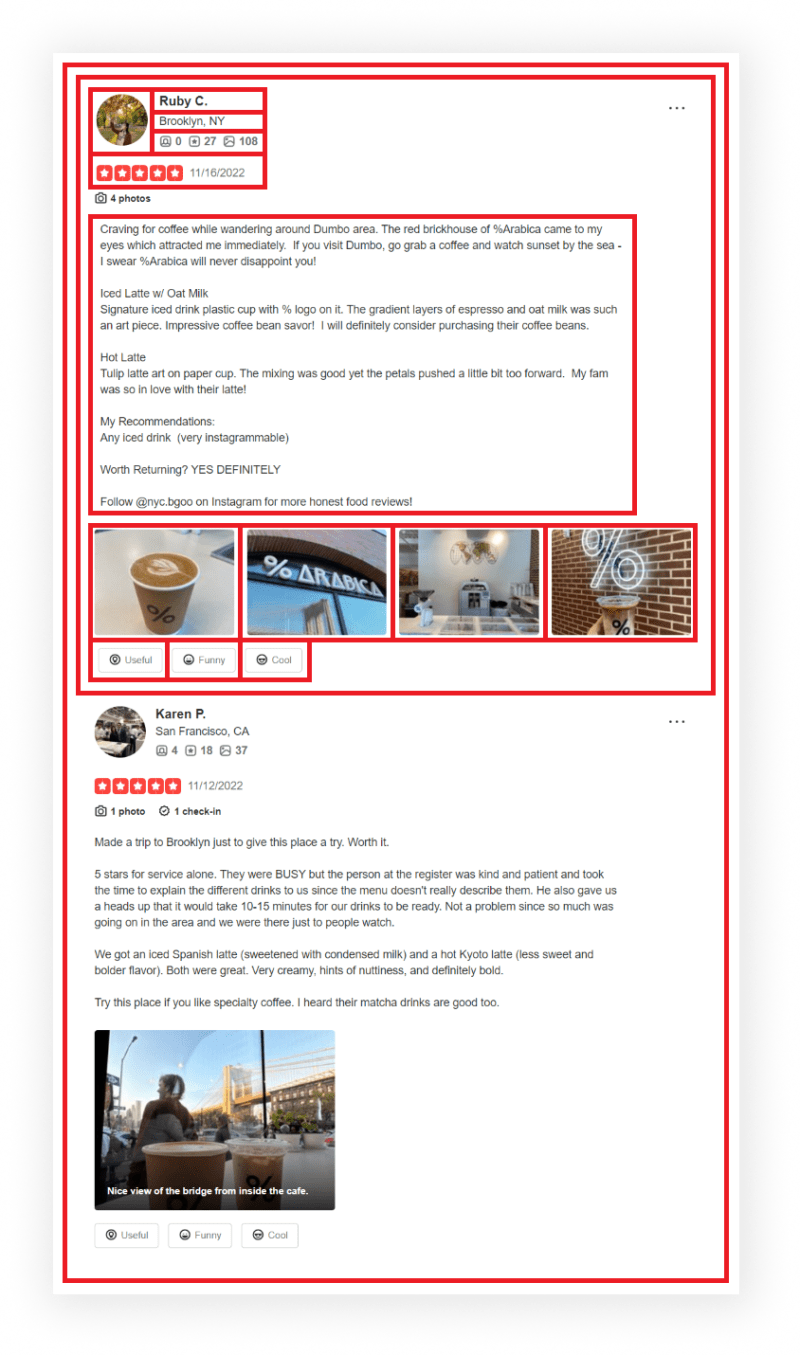

What will be scraped

Why use an API?

There are a couple of reasons to use an API, ours in particular:

- No need to create a parser from scratch and maintain it.
- Bypass blocks: solve CAPTCHAs or IP blocks.
- No need to pay for proxies and CAPTCHA solvers.
- No need to use browser automation.

SerpApi handles everything on the backend with fast response times under ~2.5 seconds (~1.2 seconds with Ludicrous speed) per request, and without browser automation, which makes it much faster. Response times and status rates are shown on the SerpApi Status page.

Full Code

This code retrieves all reviews from each place, with pagination:

from serpapi import GoogleSearch
import os, json

params = {
    # https://docs.python.org/3/library/os.html#os.getenv
    'api_key': os.getenv('API_KEY'),        # your SerpApi API key
    'engine': 'yelp',                       # SerpApi search engine
    'find_desc': 'Coffee',                  # query
    'find_loc': 'New York, NY, USA',        # location
    'start': 0                              # pagination
}

search = GoogleSearch(params)               # where data extraction happens on the SerpApi backend
results = search.get_dict()                 # JSON -> Python dict

organic_results_data = [
    (result['title'], result['place_ids'][0])
    for result in results['organic_results']
]

yelp_reviews = []

for title, place_id in organic_results_data:
    reviews_params = {
        # https://docs.python.org/3/library/os.html#os.getenv
        'api_key': os.getenv('API_KEY'),    # your SerpApi API key
        'engine': 'yelp_reviews',           # SerpApi search engine
        'place_id': place_id,               # Yelp ID of a place
        'start': 0                          # pagination
    }

    reviews_search = GoogleSearch(reviews_params)

    reviews = []

    # pagination
    while True:
        new_reviews_page_results = reviews_search.get_dict()

        if 'error' in new_reviews_page_results:
            break

        reviews.extend(new_reviews_page_results['reviews'])

        reviews_params['start'] += 10

    yelp_reviews.append({
        'title': title,
        'reviews': reviews
    })

print(json.dumps(yelp_reviews, indent=2, ensure_ascii=False))

Preparation

Install library:

pip install google-search-results

google-search-results is a SerpApi API package.

Code Explanation

Import libraries:

from serpapi import GoogleSearch
import os, json

| Library | Purpose |
|---|---|
| GoogleSearch | to scrape and parse search results (here, from Yelp) using the SerpApi web scraping library. |
| os | to return the value of an environment variable (the SerpApi API key). |
| json | to convert the extracted data to a JSON string. |
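os.getenv returns None when the variable isn't set, so a missing key only surfaces later as a failed request. A minimal sketch that fails early instead; the helper name get_serpapi_key is hypothetical, and the variable name API_KEY matches the code in this post.

```python
import os
from typing import Mapping, Optional


def get_serpapi_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the SerpApi key from the API_KEY environment variable.

    get_serpapi_key is a hypothetical helper, not part of the
    google-search-results package. Passing a mapping makes it easy to test.
    """
    source = os.environ if env is None else env
    key = source.get('API_KEY')
    if not key:
        # Fail early instead of sending a request with api_key=None
        raise RuntimeError('Set the API_KEY environment variable to your SerpApi API key')
    return key


# Usage with an explicit mapping instead of the real environment:
print(get_serpapi_key({'API_KEY': 'secret123'}))  # secret123
```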

At the beginning of the code, you need to make a request to get the organic results. The titles and IDs of the places are then extracted from them.

The parameters are defined for generating the URL. If you want to pass other parameters to the URL, you can do so using the params dictionary:

params = {
    # https://docs.python.org/3/library/os.html#os.getenv
    'api_key': os.getenv('API_KEY'),        # your SerpApi API key
    'engine': 'yelp',                       # SerpApi search engine
    'find_desc': 'Coffee',                  # query
    'find_loc': 'New York, NY, USA',        # location
    'start': 0                              # pagination
}
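To see what "generating the URL" means, here is a sketch that encodes a params dictionary as a query string with urllib.parse.urlencode. The base URL https://serpapi.com/search is an assumption about the endpoint the library calls; the library builds and sends the real request for you, so this is only for inspection.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Assumed SerpApi endpoint; the google-search-results package builds the real URL itself
BASE_URL = 'https://serpapi.com/search'

params = {
    'api_key': 'YOUR_API_KEY',   # placeholder, never hard-code a real key
    'engine': 'yelp',
    'find_desc': 'Coffee',
    'find_loc': 'New York, NY, USA',
    'start': 0,
}

# urlencode percent-encodes values: spaces become '+' and commas '%2C'
url = f'{BASE_URL}?{urlencode(params)}'
print(url)

# Round-trip check: parsing the query string gives the same values back (as strings)
parsed = parse_qs(urlparse(url).query)
print(parsed['find_loc'])  # ['New York, NY, USA']
```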

Then, we create a search object that retrieves the data from the SerpApi backend. The results dictionary holds the JSON response converted to a Python dict:

search = GoogleSearch(params)   # data extraction on the SerpApi backend
results = search.get_dict()     # JSON -> Python dict
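get_dict() hands back the JSON response as a plain Python dict. The sketch below mimics that step offline with json.loads on a trimmed, made-up response, which is handy for testing parsing code without spending API credits. The keys follow the fields used in this post; the top-level 'error' check mirrors the one the pagination loop relies on.

```python
import json

# Trimmed, made-up response shaped like the fields this post uses
raw_response = '''
{
  "organic_results": [
    {"title": "% Arabica", "place_ids": ["ED7A7vDdg8yLNKJTSVHHmg", "arabica-brooklyn"]}
  ]
}
'''

results = json.loads(raw_response)  # JSON text -> Python dict

# Check for a top-level 'error' key before reading the results
if 'error' in results:
    print('Search failed:', results['error'])
else:
    print(len(results['organic_results']))  # 1
```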

At this point, the first 10 organic results are stored in the results dictionary. If you are interested in all organic results with pagination, check out the Scrape Yelp Filters, Ad and Organic Results with Python blog post.

The organic_results_data list stores the title and place_id extracted from each organic result. This data will be needed later:

organic_results_data = [
    (result['title'], result['place_ids'][0])
    for result in results['organic_results']
]
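The list comprehension above raises KeyError if the response has no organic_results or a result lacks place_ids. A slightly more defensive sketch using dict.get; the function name extract_places is hypothetical and the sample data is invented.

```python
from typing import Any, Dict, List, Tuple


def extract_places(results: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (title, place_id) pairs, skipping results without place IDs.

    extract_places is a hypothetical helper, not part of SerpApi's library.
    """
    places = []
    for result in results.get('organic_results', []):
        place_ids = result.get('place_ids') or []
        if place_ids:  # skip results that carry no Yelp ID
            places.append((result.get('title', ''), place_ids[0]))
    return places


sample = {
    'organic_results': [
        {'title': '% Arabica', 'place_ids': ['ED7A7vDdg8yLNKJTSVHHmg', 'arabica-brooklyn']},
        {'title': 'No IDs here'},  # made-up entry to show the skip
    ]
}
print(extract_places(sample))  # [('% Arabica', 'ED7A7vDdg8yLNKJTSVHHmg')]
```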

📌Note: There are two unique identifiers in the result['place_ids'] list. You can use either of them by accessing index 0 or 1. For example:

"place_ids": [
  "ED7A7vDdg8yLNKJTSVHHmg",
  "arabica-brooklyn"
]
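In the example above, index 0 holds the encoded ID and index 1 a human-readable alias. A small sketch that picks one or the other; it assumes that order holds for every result, which this post only shows for one example, and the helper name pick_place_id is hypothetical.

```python
from typing import List


def pick_place_id(place_ids: List[str], prefer_alias: bool = False) -> str:
    """Pick a Yelp place ID from result['place_ids'].

    Hypothetical helper. Assumes index 0 is the encoded ID and index 1 the
    alias, as in the example above; falls back to index 0 if no alias exists.
    """
    if not place_ids:
        raise ValueError('place_ids is empty')
    if prefer_alias and len(place_ids) > 1:
        return place_ids[1]
    return place_ids[0]


ids = ['ED7A7vDdg8yLNKJTSVHHmg', 'arabica-brooklyn']
print(pick_place_id(ids))                     # ED7A7vDdg8yLNKJTSVHHmg
print(pick_place_id(ids, prefer_alias=True))  # arabica-brooklyn
```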

Declaring the yelp_reviews list where the extracted data will be added:

yelp_reviews = []

Next, you need to access each place's reviews separately by iterating over the organic_results_data list:

for title, place_id in organic_results_data:
    # data extraction will be here

These parameters are defined for generating the URL for reviews about the current place. If you want to pass other parameters to the URL, you can do so using the reviews_params dictionary:

reviews_params = {
    # https://docs.python.org/3/library/os.html#os.getenv
    'api_key': os.getenv('API_KEY'),    # your SerpApi API key
    'engine': 'yelp_reviews',           # SerpApi search engine
    'place_id': place_id,               # Yelp ID of a place
    'start': 0                          # pagination
}

| Parameters | Explanation |
|---|---|
| api_key | Parameter defines the SerpApi private key to use. |
| engine | Set parameter to yelp_reviews to use the Yelp Reviews API engine. |
| place_id | Parameter defines the Yelp ID of a place. Each place has two unique IDs (e.g. ED7A7vDdg8yLNKJTSVHHmg and arabica-brooklyn) and you can use either of them as a value of the place_id. To extract the IDs of a place you can use our Yelp Search API. |
| start | Parameter defines the result offset. It skips the given number of results. It's used for pagination. (e.g., 0 (default) is the first page of results, 10 is the 2nd page of results, 20 is the 3rd page of results, etc.). |

📌Note: You can also add other API Parameters.
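Since start is an offset in steps of 10, page n maps to start = (n - 1) * 10. A tiny sketch of that arithmetic, with the page size of 10 taken from the start parameter description above; the function name start_for_page is hypothetical.

```python
PAGE_SIZE = 10  # reviews per page, per the start parameter description


def start_for_page(page: int) -> int:
    """Return the 'start' offset for a 1-based page number (hypothetical helper)."""
    if page < 1:
        raise ValueError('page numbers start at 1')
    return (page - 1) * PAGE_SIZE


print([start_for_page(p) for p in (1, 2, 3)])  # [0, 10, 20]
```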

Then, we create a reviews_search object where the data is retrieved from the SerpApi backend:

reviews_search = GoogleSearch(reviews_params)
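The pagination loop later updates reviews_params['start'] but never rebuilds reviews_search. That only works if the search object keeps a reference to the same dictionary rather than a copy, which is how the google-search-results client appears to behave; treat that as an assumption and verify it against your installed version. The sketch below uses two hypothetical stand-in classes to show the difference between holding a reference and holding a copy.

```python
import copy


class ReferenceSearch:
    """Hypothetical stand-in that stores the caller's params dict itself."""

    def __init__(self, params):
        self.params = params  # same object as the caller's dict

    def current_start(self):
        return self.params['start']


class CopySearch:
    """Hypothetical stand-in that stores a copy of the params dict."""

    def __init__(self, params):
        self.params = copy.deepcopy(params)  # later changes by the caller are not seen

    def current_start(self):
        return self.params['start']


reviews_params = {'start': 0}
by_ref = ReferenceSearch(reviews_params)
by_copy = CopySearch(reviews_params)

reviews_params['start'] += 10  # what the loop does after each page

print(by_ref.current_start())   # 10: the next request would fetch page 2
print(by_copy.current_start())  # 0: the loop would keep requesting page 1
```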

Declaring the reviews list where the extracted data from the current place will be added:

reviews = []

In order to get all reviews from a specific place, you need to apply pagination. Therefore, an endless loop is created:

while True:
    # pagination from current page

The new_reviews_page_results dictionary receives the next page of data, converted from JSON:

new_reviews_page_results = reviews_search.get_dict()

Next, the following check is performed: if there is an error in the new_reviews_page_results object of the current page, we exit the loop. This is necessary so that the loop stops when there are no more reviews:

if 'error' in new_reviews_page_results:
    break
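Relying only on the error key assumes the API always reports the end of the reviews that way. A slightly more defensive stop check, sketched below, also stops when a page comes back without any reviews; the helper name is_last_page is hypothetical and the sample pages are made up.

```python
from typing import Any, Dict


def is_last_page(page: Dict[str, Any]) -> bool:
    """Return True when pagination should stop (hypothetical helper).

    Stops on an 'error' key, as in the loop above, and also on a page
    with a missing or empty 'reviews' list, which would otherwise raise
    KeyError or keep paginating without adding anything.
    """
    return 'error' in page or not page.get('reviews')


print(is_last_page({'reviews': [{'rating': 5}]}))        # False
print(is_last_page({'error': 'made-up error message'}))  # True
print(is_last_page({'reviews': []}))                     # True
```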

Expanding the reviews list with new data from this page:

reviews.extend(new_reviews_page_results['reviews'])

# total_reviews = new_reviews_page_results['search_information']['total_results']
# user_name = new_reviews_page_results['reviews'][0]['user']['name']
# user_comment = new_reviews_page_results['reviews'][0]['comment']['text']
# review_date = new_reviews_page_results['reviews'][0]['date']

📌Note: In the comments above, I showed how to extract specific fields. You may have noticed new_reviews_page_results['reviews'][0]: the [0] is the index of a review, which means that we are extracting data from the first review. new_reviews_page_results['reviews'][1] refers to the second review, and so on.
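Instead of indexing a single review with [0], you can loop over every review on the page and pull out the fields shown in the comments. A sketch using dict.get so a missing field yields None instead of a KeyError; the field paths come from the commented lines and the sample output in this post, and summarize_review is a hypothetical name.

```python
from typing import Any, Dict, List


def summarize_review(review: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten one review into the fields shown above (hypothetical helper)."""
    return {
        'user_name': review.get('user', {}).get('name'),
        'comment': review.get('comment', {}).get('text'),
        'date': review.get('date'),
        'rating': review.get('rating'),
    }


# One review trimmed from the sample output in this post
page_reviews: List[Dict[str, Any]] = [
    {
        'user': {'name': 'Ruby C.'},
        'comment': {'text': 'Craving for coffee while wandering around Dumbo area.', 'language': 'en'},
        'date': '11/16/2022',
        'rating': 5,
    },
]

for review in page_reviews:
    print(summarize_review(review))
```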

The start parameter is incremented by 10 to get results from the next page:

reviews_params['start'] += 10
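Putting the loop together: the while True loop has no upper bound, so a response that never contains an error would keep it running. The sketch below adds a max_pages cap and takes the fetch step as a function argument, so it can run offline against made-up pages; in real use you would pass a function that calls GoogleSearch(...).get_dict(). collect_reviews, fetch_page, and max_pages are hypothetical names.

```python
from typing import Any, Callable, Dict, List

Page = Dict[str, Any]


def collect_reviews(fetch_page: Callable[[int], Page],
                    page_size: int = 10,
                    max_pages: int = 50) -> List[Dict[str, Any]]:
    """Collect reviews page by page until an error, an empty page, or max_pages.

    fetch_page(start) should return one response dict for that offset.
    """
    reviews: List[Dict[str, Any]] = []
    start = 0
    for _ in range(max_pages):
        page = fetch_page(start)
        if 'error' in page or not page.get('reviews'):
            break
        reviews.extend(page['reviews'])
        start += page_size  # same step as reviews_params['start'] += 10
    return reviews


# Real use (not run here), assuming reviews_params as defined in this post:
# def serpapi_fetch(start):
#     return GoogleSearch({**reviews_params, 'start': start}).get_dict()

# Offline stand-in: 25 made-up reviews served 10 at a time
fake_reviews = [{'rating': i % 5 + 1} for i in range(25)]


def fake_fetch(start: int) -> Page:
    chunk = fake_reviews[start:start + 10]
    return {'reviews': chunk} if chunk else {'error': 'no more results (made up)'}


print(len(collect_reviews(fake_fetch)))  # 25
```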

After pagination, we append the title and reviews of this place to the yelp_reviews list:

yelp_reviews.append({
    'title': title,
    'reviews': reviews
})

After all the data is retrieved, it is output in JSON format:

print(json.dumps(yelp_reviews, indent=2, ensure_ascii=False))
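ensure_ascii=False keeps non-ASCII characters readable instead of escaping them, which matters for names and review text. A quick comparison using made-up data, plus writing the same data to a file; the filename yelp_reviews.json is an arbitrary choice.

```python
import json

data = [{'title': 'Café Example', 'reviews': []}]  # made-up data

print(json.dumps(data))                      # [{"title": "Caf\u00e9 Example", "reviews": []}]
print(json.dumps(data, ensure_ascii=False))  # [{"title": "Café Example", "reviews": []}]

# When writing to a file, set the encoding explicitly so the text is stored as UTF-8
with open('yelp_reviews.json', 'w', encoding='utf-8') as f:
    json.dump(data, f, indent=2, ensure_ascii=False)
```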

Output

[
  {
    "title": "% Arabica",
    "reviews": [
      {
        "user": {
          "name": "Ruby C.",
          "user_id": "i7pw5JmqEFi_ooWZ-r9AzA",
          "link": "https://www.yelp.com/user_details?userid=i7pw5JmqEFi_ooWZ-r9AzA",
          "thumbnail": "https://s3-media0.fl.yelpcdn.com/photo/CccPdSignI0J5ThhwDJHAw/60s.jpg",
          "address": "Brooklyn, NY",
          "photos": 108,
          "reviews": 27
        },
        "comment": {
          "text": "Craving for coffee while wandering around Dumbo area. The red brickhouse of %Arabica came to my eyes which attracted me immediately. If you visit Dumbo, go grab a coffee and watch sunset by the sea - I swear %Arabica will never disappoint you! Iced Latte w/ Oat MilkSignature iced drink plastic cup with % logo on it. The gradient layers of espresso and oat milk was such an art piece. Impressive coffee bean savor! I will definitely consider purchasing their coffee beans. Hot LatteTulip latte art on paper cup. The mixing was good yet the petals pushed a little bit too forward. My fam was so in love with their latte! My Recommendations:Any iced drink (very instagrammable) Worth Returning? YES DEFINITELYFollow @nyc.bgoo on Instagram for more honest food reviews!",
          "language": "en"
        },
        "date": "11/16/2022",
        "rating": 5,
        "tags": [
          "4 photos"
        ],
        "photos": [
          {
            "link": "https://s3-media0.fl.yelpcdn.com/bphoto/dPT4qVtR030f-Tefnm4U-g/o.jpg",
            "caption": "Hot Latte",
            "uploaded": "November 16, 2022"
          },
          {
            "link": "https://s3-media0.fl.yelpcdn.com/bphoto/jQSW785FG28q0GA12xhr0g/o.jpg",
            "uploaded": "November 16, 2022"
          },
          {
            "link": "https://s3-media0.fl.yelpcdn.com/bphoto/vSTYMIMoyIJ3ucUmWi6bNA/o.jpg",
            "uploaded": "November 16, 2022"
          },
          {
            "link": "https://s3-media0.fl.yelpcdn.com/bphoto/_85T0OKir1l1VeEWeSpQvw/o.jpg",
            "caption": "Iced Latte w/ Oat Milk",
            "uploaded": "November 16, 2022"
          }
        ]
      },
      ... other reviews
    ]
  },
  ... other places
]
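Once yelp_reviews is built, a quick summary helps confirm that pagination collected what you expected. A sketch that counts reviews and averages the rating field per place, skipping reviews without a rating; summarize_places is a hypothetical name and the data is made up in the same shape as the output above.

```python
from statistics import mean
from typing import Any, Dict, List


def summarize_places(yelp_reviews: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return review count and average rating per place (hypothetical helper)."""
    summary = []
    for place in yelp_reviews:
        ratings = [r['rating'] for r in place['reviews'] if 'rating' in r]
        summary.append({
            'title': place['title'],
            'review_count': len(place['reviews']),
            'average_rating': round(mean(ratings), 2) if ratings else None,
        })
    return summary


# Made-up data in the same shape as the output above
yelp_reviews = [
    {'title': '% Arabica', 'reviews': [{'rating': 5}, {'rating': 4}]},
    {'title': 'Empty Place', 'reviews': []},
]
for row in summarize_places(yelp_reviews):
    print(row)
```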

📌Note: Head to the playground for a live and interactive demo.

