SeattleDataGuy

Posted on December 3, 2019

Photo by tian kuan on Unsplash

We recently wrote about ETLs and why they're important. We wanted to provide an outline for what ETL tools are. You could refer to these ETL tools as workflow tools that help manage moving data from point A to point B.

Two of these popular workflow tools are Luigi by Spotify and Airflow by Airbnb. Both of these workflow engines have been developed to help in the design and execution of computationally heavy workflows that are used for data analysis.

What Is a DAG?

Before comparing Airflow to Luigi, we need to understand a concept both libraries have in common. Both, essentially, build what is known as a directed acyclic graph (DAG). A DAG is a collection of tasks that run in a specific order, with each task depending on the tasks before it and no task ever depending, directly or indirectly, on itself.

For example, if we had three tasks named Foo, Bar, and FooBar, it might be the case that Foo runs first and Bar and FooBar depend on Foo finishing.

This would create a basic graph with a clear path: Foo runs first, and then Bar and FooBar can run. Now imagine this with tens or hundreds of tasks.
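To make the Foo, Bar, and FooBar example concrete, here is a minimal sketch in plain Python. It is not Luigi or Airflow code; it assumes Python 3.9+ and uses the standard library's `graphlib` module, and the `dependencies` dictionary and `execution_order` helper are names made up for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Map each task to the set of tasks it depends on.
dependencies = {
    "Foo": set(),
    "Bar": {"Foo"},
    "FooBar": {"Foo"},
}

def execution_order(deps):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())

order = execution_order(dependencies)
print(order)  # Foo always comes first; Bar and FooBar may appear in either order
```

If the dictionary contained a cycle (say, Foo depending on FooBar as well), `static_order()` would raise `graphlib.CycleError`, which is the "acyclic" part of the name at work.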

Large data organizations have massive DAGs with dependencies on dependencies. Having clear access to the DAG allows companies to track where things are going wrong, and it helps keep bad data out of their data ecosystems: if something fails, the downstream tasks are usually forced to wait until their dependencies complete.
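The way a failure holds back downstream tasks can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how either tool is implemented; `run_dag`, the status strings, and the toy tasks are all assumptions chosen for the example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def run_dag(deps, actions):
    """Run tasks in dependency order; skip any task whose upstream did not succeed."""
    status = {}
    for task in TopologicalSorter(deps).static_order():
        if any(status[up] != "success" for up in deps.get(task, ())):
            status[task] = "upstream_failed"  # never runs, so bad data stops here
            continue
        try:
            actions[task]()
            status[task] = "success"
        except Exception:
            status[task] = "failed"
    return status

def ok():
    pass

def broken():
    raise ValueError("bad data")

# A linear chain this time: Foo -> Bar -> FooBar, with Bar failing.
deps = {"Foo": set(), "Bar": {"Foo"}, "FooBar": {"Bar"}}
status = run_dag(deps, {"Foo": ok, "Bar": broken, "FooBar": ok})
print(status)
```

Because Bar raises, FooBar is never executed, which is the behavior that keeps a bad intermediate result from flowing further down the pipeline.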

This is where tools like Airflow and Luigi come in handy.

What's Luigi?

Luigi is an execution framework that allows you to write data pipelines in Python.

This workflow engine supports task dependencies and includes a central scheduler. It also ships with a detailed library of helpers for building data pipelines on MySQL, AWS, and Hadoop. Not only is it easy to depend on the tasks defined in its repos, it's also very convenient for code reuse; you can easily fork execution paths and use the output of one task as the input of the next.

This framework was written by Spotify and became open source in 2012. Many popular companies such as Stripe, Foursquare, and Asana use the Luigi workflow engine.

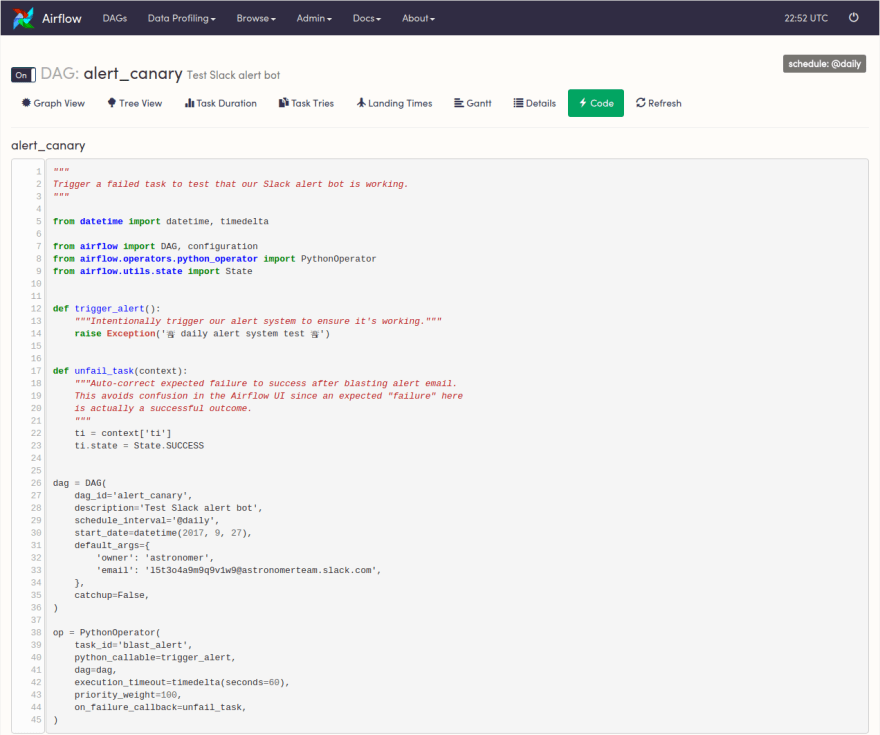

What's Airflow?

This workflow scheduler supports both task definitions and dependencies in Python.

It was written by Airbnb in 2014 to execute, schedule, and distribute tasks across a number of worker nodes. Open-sourced in 2015 and accepted into the Apache Incubator in 2016, Airflow not only supports calendar scheduling but is also equipped with a nice web dashboard that lets users view current and past task states.

Designed to store and persist its state, this workflow engine supports relational databases. Owing to its web dashboard-visualization feature, Airflow can also be used as a starting point for traditional ETL.

Some of the processes fueled by Airflow at its parent company, Airbnb, include: data warehousing, experimentation, growth analytics, and email targeting.

Comparison Between Both Open-Source Workflow Engines

Both of these workflow management systems (WMS) are great, but each has its own pros and cons. This section will give you a brief comparison between Airflow and Luigi.

Commonalities in Luigi and Airflow

Before we get to the strengths and weaknesses of both these tools, let's discuss the commonalities both have:

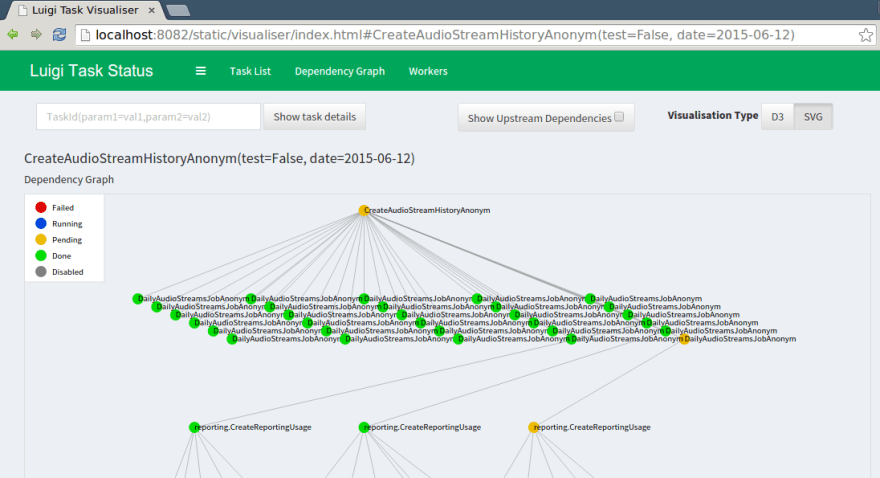

● Visualization tools

● Tasks represent a single unit of work

● Data-structure standards

● One node for a directed graph

● Option to specify synchronous/asynchronous tasks, conditional paths, and parallel commands

What's more, both of these workflow engines are written in Python. This makes it very easy to adopt these libraries in most companies, as Python is pretty ubiquitous.

Pros and cons of Luigi

Pros:

● Custom calendar scheduling: Although most ETL systems have tasks that need to run every hour, Luigi has no concept of calendar scheduling. Thus, it's up to the user to trigger tasks on whatever schedule suits them. The central scheduler contains a task history feature that logs task completion to a relational database and exposes it on the main dashboard.

● Impressive stock library: One of the best parts of Luigi is its library of stock tasks and target data systems, both SQL- and NoSQL-based. Each library includes a number of functionalities baked in as helper methods. These helper classes support Hadoop, Hive queries, scaling, Redshift, PostgreSQL, Google BigQuery, and much more.

● Targets files and data sets: Luigi targets files and data sets directly as inputs or outputs for tasks, which makes it easier to restore the historic state of an ETL system --- even if the state database is lost.
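The idea behind targeting files directly can be sketched without Luigi itself. The following plain-Python model is an assumption-laden illustration, not Luigi's API: `FileTask` and `build` are hypothetical names, and the only point is that a task counts as complete exactly when its output file exists, so no separate state database is needed to know what has already run.

```python
import tempfile
from pathlib import Path

class FileTask:
    """Hypothetical stand-in for a pipeline task whose state lives in its output file."""

    def __init__(self, output_path, upstream=None):
        self.output_path = Path(output_path)
        self.upstream = upstream

    def complete(self):
        # No state database: the task is done if and only if its output exists.
        return self.output_path.exists()

    def run(self):
        text = self.upstream.output_path.read_text() if self.upstream else "raw"
        self.output_path.write_text(text + " -> " + self.output_path.stem)

def build(task, executed):
    """Run `task` after its upstream, skipping anything already complete."""
    if task.complete():
        return
    if task.upstream is not None:
        build(task.upstream, executed)
    task.run()
    executed.append(task.output_path.name)

workdir = Path(tempfile.mkdtemp())
extract = FileTask(workdir / "extract.txt")
load = FileTask(workdir / "load.txt", upstream=extract)

first_run, second_run = [], []
build(load, first_run)    # both outputs are missing, so both tasks run
build(load, second_run)   # outputs already exist, so nothing reruns
print(first_run, second_run)
```

Deleting `load.txt` and calling `build(load, ...)` again would rerun only the load step, since the historic state is recovered entirely from the files on disk.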

Cons:

● Task creation and testing: When it comes to creating and testing a task, the Luigi API is quite difficult. In the creation process, the task state is tightly coupled with the data that the task is actually meant to produce. Because testing is so counterintuitive, engineers tend to simply push tasks straight to production.

● Not very scalable: Since Luigi and crons are tightly coupled, the number of worker processes in Luigi is bound by the number of cron workers assigned to the job. What's even worse is the fact that a worker can only run a task that's been uploaded to the central scheduler. Thus, if you wish to parallelize tasks in a massive pipeline, you'll have to split them into different sub-pipelines.

● Hard to use: There's no easy way to see task logs and failures. Every single time, you'll have to look at the logs of the cron worker and then find the task log, which is itself very time consuming. Moreover, the DAG of tasks cannot be viewed before execution. Thus, you wouldn't know what code is running in the corresponding tasks during deployment.

Pros and cons of Airflow

Pros:

- Easy-to-use user interface: With Airflow, you can easily view task logs, hierarchy, statuses, and code runs. This user interface also allows you to easily change task statuses, rerun historical tasks, and even force a task to run.

- Independent scheduler: Airflow comes with its own scheduler, which allows you to separate the tasks from crons and easily scale them independently. Furthermore, Airflow supports multiple DAGs simultaneously. These DAGs work with tasks of two categories: sensors and operators.

- Active open-source community: With its strong community, Airflow actively gets features like PagerDuty and Slack integrations and SLAs. The chatroom itself is very active, so newbies can get their questions answered within a few hours.
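The split between sensors and operators mentioned above can be illustrated with a small conceptual sketch. This is plain Python rather than Airflow's classes: a sensor waits for a condition to become true, and an operator performs a unit of work. The function names, the `poke_interval` and `timeout` parameters, and the fake `data_is_ready` check are all assumptions made for the example.

```python
import time

def sensor(condition, poke_interval=0.01, timeout=1.0):
    """Poll `condition` until it returns True; raise if `timeout` seconds pass first."""
    deadline = time.monotonic() + timeout
    while not condition():
        if time.monotonic() >= deadline:
            raise TimeoutError("sensor timed out")
        time.sleep(poke_interval)
    return True

def operator(rows):
    """Do one unit of work: here, upper-case every row."""
    return [row.upper() for row in rows]

# Hypothetical data source that becomes "ready" on the third check.
checks = {"count": 0}

def data_is_ready():
    checks["count"] += 1
    return checks["count"] >= 3

sensor(data_is_ready)              # waits until the upstream data appears
result = operator(["foo", "bar"])  # then the real work runs
print(result)
```

In a real pipeline the condition would typically be something like "the file has landed" or "the partition exists", and the scheduler, not a blocking loop, would decide when to check again.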

Cons:

- Task optimization: For any medium-sized company, there are multiple tasks in the pipeline, and with Airflow it's sometimes unclear how to organize these tasks into one massive pipeline.

- No direct dealing with tasks: Apart from the special sensor operators, Airflow doesn't deal with data sets or files as inputs of tasks directly. In Airflow, the state database only stores the state of tasks and not the data set, so if the database is lost, it's harder to restore the historic state of the ETL. Moreover, this makes it harder to deal with tasks that appear to succeed but don't produce an output.

- Less flexibility: With Airflow, workers cannot start tasks independently or pick tasks flexibly according to custom scheduling. Since tasks can only be picked by the central scheduler, Airflow starts workers with central scheduling.

Which Workflow Engine Is Better?

If you ask me, I would recommend Airflow over Luigi. Why? Not only is it easier to test pipelines with Airflow, but it also has an independent task state.

When it comes to scheduling, Luigi runs tasks from cron jobs, while Airflow has its own scheduler, which allows users to scale the tasks independently. Furthermore, Airflow supports multiple DAGs, while Luigi doesn't allow users to view the tasks of a DAG before pipeline execution.

Another huge point is the user interface. While Luigi offers a minimal UI, Airflow comes with a detailed, easy-to-use interface that allows you to view and run task commands simply.

Furthermore, the niceties of Airflow --- community contributions --- include service-level agreements, trigger rules, charts, and XComs, while Luigi has none of these.

Final Words

Both these open-source workflow systems have gained a lot of popularity owing to their core designs and functionalities.

In terms of communities, Airflow wins the game, but if you plan to use Hadoop or Google BigQuery, take a look at Luigi.

Although Luigi is easier to get started with, Airflow offers more expressive visualization and support. At the end of the day, it's up to your team to figure out what your technical needs are.

