Jumping into Big O

rwparrish

Posted on November 22, 2020

Not all programmers are math people and that is OK. Big O notation can be a little intimidating to approach if you are one of those people. In this blog, I would like to gently introduce you to the concept of Big O notation.

Here is a link to an awesome widget that Colt Steele created. I highly recommend playing around with it to become more familiar with the concept of Big O. I will be using the same code snippets from the widget in this blog. Check it out!

So what is Big O Notation?

It is simply a way for us to talk about the runtime of an algorithm as its input grows. That's it. Big O is expressed as O(n) where n is the size of the input. When input grows, how will that affect the time it takes for the algorithm to run? When discussing Big O notation, we are talking in very broad terms and considering the worst-case scenario - the upper bound for runtime. So what does it mean to consider the worst-case scenario?

Simplifying Big O

When considering the worst-case scenario we are thinking about what would happen when the input(n) becomes insanely large. This means constants don't matter and operations like addition, subtraction, multiplication, and division become basically irrelevant. In most cases, we can omit all constants and ignore lower powers of n - for example:

- O(1) - O(500), O(10,000), and O(1,000,000,000) all simplify to O(1).

- O(n) - O(25n + n), O((57n / 3) * n), and O(1,000n) all simplify to O(n).

- O(n²) - O(35n + n²) simplifies to O(n²).

- O(n³) - O(35n + n² + n³) simplifies to O(n³).

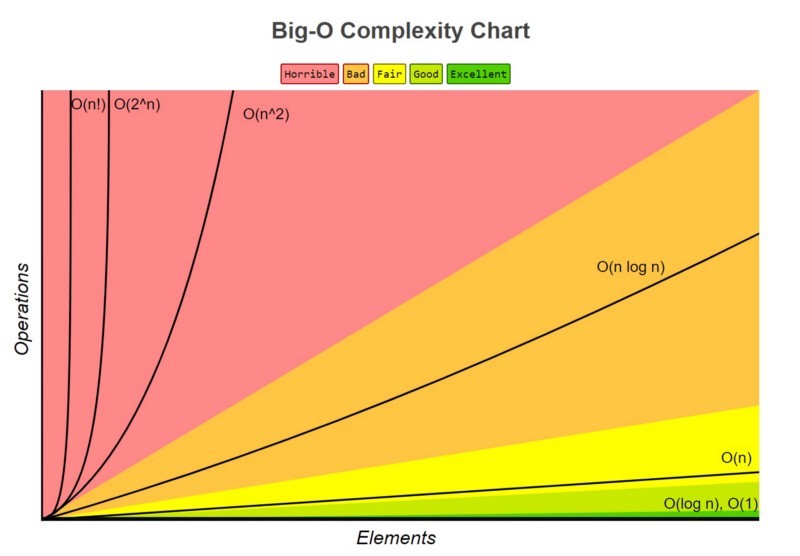

Take a look at the graph below. As programmers, we want to stay out of the "bad" and "horrible" zone.

- O(1) - as you can see in the graphic above, as the input grows, runtime remains constant. This is awesome. The algorithm runs quickly regardless of the size of the input. This is the ideal and most operations that perform a single operation fall into this complexity (in Computer Science the performance or runtime of an algorithm is also referred to as its complexity). Pushing to an array, getting an item at a particular index, adding a child element, etc, will take the same amount of time regardless of the array length. Algorithms with O(1) are ideal in their Big O complexity:

function addUpToSecond(n) {

return n * (n + 1) / 2;

}

- O(n) - as input(n) grows, broadly speaking, runtime increases at a linear rate. This is because there is a 1:1 relationship between data size (input) and runtime. All loops fall into this big O complexity:

function addUpToFirst(n) {

var total = 0;

for (var i = 0; i <= n; i++) {

total += i;

}

return total;

}

Look closely at the two functions above - addUpToSecond and addUpToFirst. They accomplish the same thing but have different Big O complexity.

- O(n²) - from a worst-case perspective, an algorithm with this complexity will not perform well. As input(n) increases the runtime will increase exponentially - check out the graphic above again. Stay away from nested loops:

function printAllPairs(n) {

for (var i = 0; i < n; i++) {

for (var j = 0; j < n; j++) {

console.log(i, j);

}

}

}

Recap

In this post, we dipped our toes into the Big O complexity pool. We learned that Big O has everything to do with "measuring" the time it takes an algorithm to run in the worst-case scenario. It is best practice to avoid writing code that includes nested loops and O(1) is the ideal.

I hope this intro into Big O notation and complexity was gentle, clear, and helpful. There is more to the concept than was covered in this post, but this is a good start.

Please feel free to school me if you think you can explain something more clearly. Leave feedback and ask questions! Happy coding!

Posted on November 22, 2020

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.

Related

April 25, 2024

December 5, 2023

November 25, 2023