Managed Kubernetes Comparison: EKS vs GKE

Romaric P.

Posted on January 14, 2022

Kubernetes is changing the tech space as it becomes increasingly prominent across various industries and environments. Kubernetes can now be found in on-premise data centers, cloud environments, edge solutions, and even space.

As a container orchestration system, Kubernetes automatically manages the availability and scalability of your containerized applications. Its architecture consists of a control plane and a set of worker nodes, which together make up what is known as a cluster. This cluster can be implemented (or deployed) in various ways, including adopting a CNCF-certified hosted or managed Kubernetes cluster.

This article explores and contrasts two of the most popular hosted clusters: Amazon Elastic Kubernetes Service (EKS) and Google Kubernetes Engine (GKE). You’ll compare the two in terms of ease of setup and management, compatibility with Kubernetes version releases, support for government cloud, support for hybrid cloud models, cost, and developer community adoption.

Overview of Managed Kubernetes Solution

A managed Kubernetes solution involves a third party, such as a cloud vendor, taking on some or full responsibility for the setup, configuration, support, and operations of the cluster. Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service, and IBM Cloud Kubernetes Service are examples of managed Kubernetes clusters.

Managed Kubernetes solutions are useful for software teams that want to focus on the development, deployment, and optimization of their workloads. The process of managing and configuring clusters is complex, time-consuming, and requires proficient Kubernetes administration, especially for production environments.

Overview of GKE

Let’s look at the qualities that your organization should consider before choosing GKE as its hosted cluster solution:

Cluster Configurations

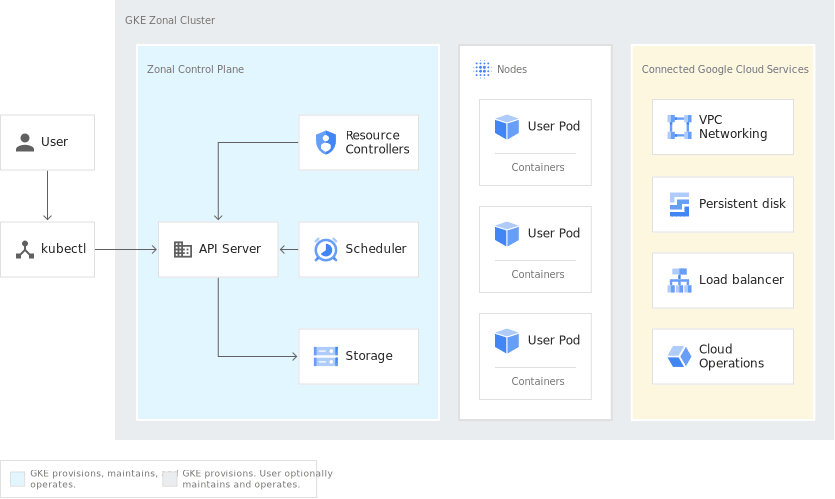

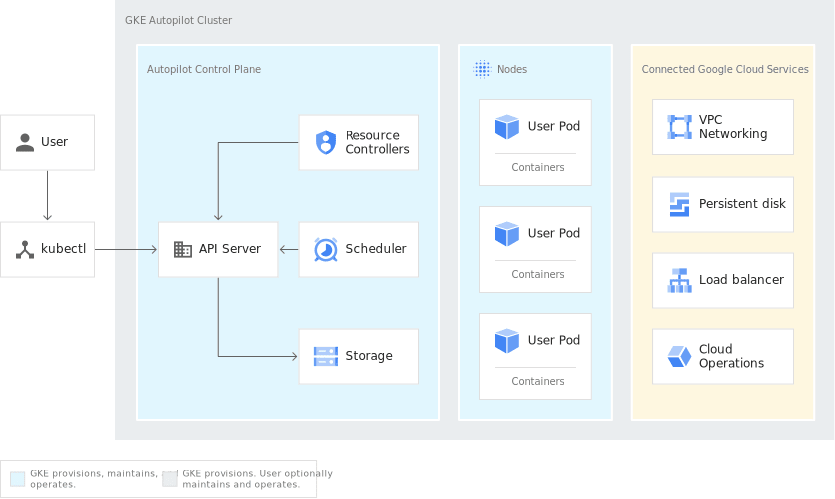

GKE has two cluster configuration options (or modes, as they are called): Standard and Autopilot.

- Standard mode: This mode allows software teams to manage the underlying infrastructure (node configurations) of their clusters.

- Autopilot mode: This mode offers software teams a hands-off experience of a Kubernetes cluster. GKE manages the provisioning and optimization of the cluster and its node pools.
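The two modes map to different gcloud subcommands. The following is a minimal sketch, assuming gcloud is installed and authenticated; the helper name gke_create_command and the cluster name and locations are hypothetical, and the function only builds the command rather than running it.

```python
from typing import List


def gke_create_command(name: str, mode: str, location: str) -> List[str]:
    """Build (but do not run) a gcloud command that creates a GKE cluster."""
    if mode == "standard":
        # Standard mode: you manage the node configuration yourself.
        return ["gcloud", "container", "clusters", "create", name,
                "--zone", location]
    if mode == "autopilot":
        # Autopilot mode: GKE provisions and manages the nodes for you.
        return ["gcloud", "container", "clusters", "create-auto", name,
                "--region", location]
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical cluster names and locations, for illustration only.
print(" ".join(gke_create_command("demo-standard", "standard", "us-central1-a")))
print(" ".join(gke_create_command("demo-autopilot", "autopilot", "us-central1")))
```

Returning the argument list instead of executing it keeps the sketch safe to run anywhere; you could pass the list to subprocess.run once you are happy with it.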

Setup and Configuration Management

Cluster setup and configuration can be a time-consuming and arduous process. In a cloud environment, you must also understand networking topologies since they form the backbone of cluster deployments.

For teams and operators looking for a solution with less operational overhead, GKE has the automated capabilities you’re looking for. This includes automated health checks and repairs on nodes, as well as automatic cluster and node upgrades for new version releases.

Service Mesh

Software teams deploying applications based on microservice architectures quickly find out that Kubernetes service level capabilities are insufficient in a number of ways.

Service meshes are dedicated infrastructure layers that address network and security issues at an application service level and help complement large complex workloads.

GKE supports Istio as an optional add-on rather than installing it by default. Istio is an open-source service mesh implementation that can help organizations secure large and critical workloads.

Kubernetes Versions and Upgrades

In comparison to EKS, GKE offers a wide variety of release versions depending on the release channel you select (stable, regular, or rapid). The rapid channel includes the latest version of Kubernetes (v1.22 at the time of this post).

GKE also has auto-upgrade capabilities for both clusters and nodes in Standard and Autopilot cluster modes.
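As a rough illustration of the release channels mentioned above, the sketch below appends the gcloud --release-channel flag to a cluster creation command. The helper name with_release_channel and the cluster name are hypothetical, and the command is only built, not executed.

```python
from typing import List, Sequence

# Channel names taken from the article: stable, regular, and rapid.
RELEASE_CHANNELS = ("rapid", "regular", "stable")


def with_release_channel(base_command: Sequence[str], channel: str) -> List[str]:
    """Return a copy of base_command with a --release-channel flag appended."""
    if channel not in RELEASE_CHANNELS:
        raise ValueError(f"channel must be one of {RELEASE_CHANNELS}, got {channel!r}")
    return [*base_command, "--release-channel", channel]


# Hypothetical cluster name and zone, for illustration only.
base = ["gcloud", "container", "clusters", "create", "demo-cluster",
        "--zone", "us-central1-a"]
print(" ".join(with_release_channel(base, "rapid")))
```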

No Government Cloud Support

Unlike AWS, Google doesn’t offer a dedicated government cloud solution for hosted clusters. Any software solution that must meet the security posture and regulatory stringency required by government agencies will have to be built on Google’s standard regional offerings.

Exclusive to Cloud VMs

A majority of enterprises prefer a hybrid model over other cloud strategies; however, GKE only offers cluster architecture models that consist of Virtual Machines (VMs) in a cloud environment.

For organizations looking to distribute their workloads between nodes in on-premise data centers and the cloud, EKS would be more suitable.

Conditional Service Level Agreement (SLA)

When you use a single zonal cluster, GKE is the more affordable solution, as the free tier covers the cost of managing the control plane. However, a zonal cluster comes with a lower Service Level Agreement (SLA) than a regional cluster, which costs ten cents per hour for control plane management.

EKS offers SLA coverage at 99.95 percent, whereas GKE only offers 99.5 percent for its zonal clusters and 99.95 percent for its regional clusters.
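To make these percentages more concrete, here is a small sketch that converts an SLA figure into the downtime it permits. It assumes a 30-day month, and the function name is made up for illustration. Under that assumption, 99.5 percent allows about 216 minutes of downtime per month, while 99.95 percent allows about 21.6 minutes.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime an SLA permits over a period of `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


# SLA figures quoted in the article.
for label, sla in [("GKE zonal", 99.5), ("GKE regional", 99.95), ("EKS", 99.95)]:
    print(f"{label}: {allowed_downtime_minutes(sla):.1f} minutes per 30 days")
```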

CLI Support

The GKE CLI is a sub-module of the official GCP CLI (gcloud). Once a user has installed gcloud and authenticated with gcloud init, they can proceed to perform lifecycle activities on their GKE clusters.

Pricing

GKE clusters can be launched either in Standard mode or Autopilot mode. Both modes have an hourly charge of ten cents per cluster after the free tier.

From a pricing perspective, GKE differs from EKS because it has a free tier with monthly credits that, if applied to a single zonal cluster or Autopilot cluster, will completely cover the operational costs involved in running the cluster.
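The effect of the free tier can be sketched with a little arithmetic. The hourly fee comes from the article; the credit amount is an assumption chosen so that it exactly covers one cluster for a 730-hour month, so check the current GKE pricing page for the real figure. Amounts are kept in whole cents to avoid floating-point rounding.

```python
HOURLY_FEE_CENTS = 10  # from the article: ten cents per cluster per hour


def monthly_management_fee_usd(clusters: int, hours: int, credit_cents: int) -> float:
    """Cluster management fee in USD after subtracting a monthly credit."""
    gross_cents = clusters * hours * HOURLY_FEE_CENTS
    return max(0, gross_cents - credit_cents) / 100


HOURS = 730  # assumed average number of hours in a month
CREDIT_CENTS = 1 * HOURS * HOURLY_FEE_CENTS  # assumption: credit covers one cluster

print(monthly_management_fee_usd(1, HOURS, CREDIT_CENTS))
print(monthly_management_fee_usd(3, HOURS, CREDIT_CENTS))
```

This only models the management fee; the worker nodes (or Autopilot pod resources) are billed separately.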

Use Cases

Based on the characteristics outlined above, GKE works particularly well in the following scenarios:

- Minimal management overhead

- High degree of operational automation

- Wide support of Kubernetes versions (including an option for latest versions)

- Cost-effective model for small clusters

- Out-of-the-box service mesh integration (with Istio)

Overview of EKS

Now let’s take a look at EKS and the factors you should consider before using it as your hosted cluster solution.

Cluster Configurations

EKS has three cluster configuration options for launching or deploying your managed Kubernetes cluster in AWS. These three configurations are managed node groups, self-managed nodes, and Fargate.

Managed Node Groups

This launch configuration automates the provisioning and lifecycle management of the EC2 worker nodes for your EKS cluster. In this mode, AWS manages running and updating the EKS AMI on your nodes, applying labels to node resources, and draining nodes.

Self-managed Worker Nodes

As the name implies, this option gives teams and operators the most flexibility for configuring and managing their nodes. It’s the DIY option among the different launch configurations.

You can either launch Auto Scaling groups or individual EC2 instances and register them as worker nodes to your EKS cluster. This approach requires that all underlying nodes have the same instance type, the same Amazon Machine Image (AMI), and the same Amazon EKS node IAM role.

Serverless Worker Nodes with Fargate

AWS Fargate is a serverless engine that allows you to focus on optimizing your container workloads while it takes care of the provisioning and configuration of the infrastructure for your containers to run on.

EKS Anywhere

Businesses recognize the cloud as a great enabler and are using it to meet their needs in combination with on-premise data centers.

Amazon EKS recently launched Amazon EKS Anywhere, which enables businesses to deploy Kubernetes clusters on their own infrastructure (using VMware vSphere) while still being supported by AWS automated cluster management.

This deployment supports the hybrid cloud model, which in turn enables businesses to have operational consistency in their workloads, both on-premises and in the cloud. At this point in time, EKS doesn’t offer the option for using bare metal nodes, but AWS has stated that this feature is expected in 2022.

Integration with AWS Ecosystem

For years, AWS has been the leading cloud compute services provider. EKS can easily integrate with other AWS services, allowing enterprises to make use of other cloud compute resources that meet their requirements. If your business’ cloud strategy consists of resources in the AWS landscape, your Kubernetes workloads can be seamlessly integrated using EKS.

Developer Community

EKS has a vast developer community with the highest adoption and usage rate among the Kubernetes managed cluster solutions. Because configuring and optimizing Kubernetes is complex, this community offers you a great deal of value: it provides established patterns for common use cases, forms a knowledge base for you to query as you face problems, and offers examples from others using similar technologies.

Government Cloud Solution

AWS has a government cloud solution that enables you to run sensitive workloads securely while meeting the relevant compliance requirements. As a result, the power of Kubernetes can be used in the AWS ecosystem to support operations that must meet these requirements.

Setup and Configuration Management

Compared to GKE, operating EKS from the console requires additional manual steps and configuration in order to provision the cluster. Software teams need to understand the underlying networking components of AWS and how they affect the cluster to be provisioned.

Furthermore, components like the Calico CNI have to be installed manually, the AWS VPC CNI has to be upgraded manually, and EKS doesn’t support automatic node health checks and repairs.

Kubernetes Versions and Upgrades

EKS supports three or more minor Kubernetes version releases, not including the most recent Kubernetes release. In addition, when using EKS, Kubernetes version upgrades have to be done manually.

For software teams that want to stay on top of the latest security patches as well as work with the latest features, the limited options that EKS offers can make meeting certain requirements challenging.

CLI Support

Similar to GKE, EKS has full CLI support in the form of a sub-module of the official AWS CLI tool. Once a software developer has configured an AWS profile with the right permissions for the CLI, they can proceed to perform operations on their EKS cluster.

Updating the local kube config file to contain the credentials for the Kubernetes cluster API endpoint can be done with the following command: aws eks update-kubeconfig --region <region> --name <cluster-name>.
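If you want to script this step, the command above can be wrapped as in the following sketch. It assumes the AWS CLI is installed and a profile is configured; the function names and the example region and cluster name are hypothetical.

```python
import subprocess
from typing import List


def update_kubeconfig_command(region: str, cluster_name: str) -> List[str]:
    """Build the aws eks update-kubeconfig command from the article."""
    return ["aws", "eks", "update-kubeconfig",
            "--region", region, "--name", cluster_name]


def update_kubeconfig(region: str, cluster_name: str) -> None:
    """Run the command; raises CalledProcessError if the AWS CLI reports failure."""
    subprocess.run(update_kubeconfig_command(region, cluster_name), check=True)


# Only print the command here, so the sketch runs without AWS credentials.
print(" ".join(update_kubeconfig_command("eu-west-1", "demo-cluster")))
```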

In addition, the team from Weaveworks produced an EKS CLI tool called eksctl, which is used to implement and manage the lifecycle of EKS clusters in the form of infrastructure-as-code.
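As a rough sketch of how eksctl relates to the three node options described earlier, the helper below builds an eksctl create cluster command for each one. The helper name, cluster name, and node count are hypothetical; the command is only built, not executed, and a real setup would more likely use an eksctl config file.

```python
from typing import List


def eksctl_create_command(name: str, region: str, node_option: str,
                          nodes: int = 2) -> List[str]:
    """Build (but do not run) an eksctl command for one EKS node option."""
    base = ["eksctl", "create", "cluster", "--name", name, "--region", region]
    if node_option == "managed":
        # Managed node group: AWS handles node provisioning and lifecycle.
        return base + ["--managed", "--nodes", str(nodes)]
    if node_option == "self-managed":
        # Self-managed nodes: you own the node configuration and updates.
        return base + ["--managed=false", "--nodes", str(nodes)]
    if node_option == "fargate":
        # Fargate: no EC2 worker nodes to manage.
        return base + ["--fargate"]
    raise ValueError(f"unknown node option: {node_option!r}")


for option in ("managed", "self-managed", "fargate"):
    print(" ".join(eksctl_create_command("demo-cluster", "eu-west-1", option)))
```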

Pricing

Amazon EKS charges ten cents per hour for the management of the control plane. Any additional charges are incurred based on the standard prices for other AWS resources (e.g., EC2 instances for worker nodes).

When Amazon EKS is run on AWS Fargate (serverless engine), the additional pricing (outside of the hourly rate for the control plane) is calculated based on the memory and vCPU usage of the underlying resources used to run the container workloads.
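The shape of that calculation can be sketched as follows. The control plane fee comes from the article, but the per-vCPU and per-GB rates used in the example are placeholders and not actual AWS prices, which vary by region; the function name is also made up for illustration.

```python
CONTROL_PLANE_HOURLY_FEE_USD = 0.10  # from the article


def eks_fargate_cost_usd(vcpu: float, memory_gb: float, hours: float,
                         vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Estimated cost: control plane fee plus vCPU and memory usage."""
    workload = hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)
    control_plane = hours * CONTROL_PLANE_HOURLY_FEE_USD
    return workload + control_plane


# Placeholder rates for illustration only; look up the real Fargate prices.
estimate = eks_fargate_cost_usd(vcpu=1, memory_gb=2, hours=100,
                                vcpu_hour_rate=0.05, gb_hour_rate=0.01)
print(f"{estimate:.2f} USD")
```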

Unlike GKE, AWS doesn’t offer a limited free tier service for EKS.

Use Cases

Based on the characteristics outlined above, EKS works particularly well in the following scenarios:

- Running workloads in a hybrid cloud model

- Integrating workloads with AWS ecosystem

- Desired support from a large community of practitioners

- Running workloads in a dedicated government cloud environment

Conclusion

By design, managed Kubernetes solutions like EKS and GKE reduce the operational overhead and complexities that come with managing a Kubernetes cluster. Each cluster solution has pros and cons that organizations need to consider against their needs and workload requirements.

Software teams also need to consider an optimal way of deploying their infrastructure and application workloads. In this case, Qovery can help your teams become more autonomous and efficient. Qovery is a cloud-agnostic deployment platform that can help teams with Kubernetes cluster management, whether EKS or GKE, in a scalable way.
