Azure Spring Clean: AI to the edge with Azure Percept

Ron Dagdag

Posted on March 14, 2022

This post is part of Azure Spring Clean, a community event focused on Azure management topics that runs from March 14-18, 2022. It is an amazing community initiative dedicated to promoting well-managed Azure tenants. You can catch all the updates at https://www.azurespringclean.com/.

In this blog post, I will share how to manage Azure Percept devices: how to capture image data for training a custom object detection model, deploy a model, and push telemetry data to IoT Hub.

See no evil, hear no evil, speak no evil

These are the three wise monkeys.

What it means is that a person shouldn’t dwell on evil thoughts. Do not listen to, look at or say anything bad. Another interpretation is to ignore bad behavior by pretending not to hear or see it.

Looking at these three monkeys, I got to thinking: what is “evil” for machines?

What is evil that we humans want our machines to ignore?

If we’re building these AI machines, how can we make them smarter than these wise monkeys? How can we train our devices to be wise enough that they won’t collect “evil”?

I do not want to be philosophical here. You may ask, how is this connected to Azure Percept?

Let’s get started.

A surveillance camera captures everything and records everything, night or day. Once it starts recording, it doesn’t filter what it sees. It’s always watching, always recording. In my opinion this is not always a good thing; it’s “evil” in a way.

See no evil.

What we really want is Intelligent Video Analytics: cameras that provide valuable insights and support informed business decisions. Such a camera can limit what is recorded by processing video locally. Rather than sending recorded data to the cloud or outside of its local network, it processes the video on the device, filters frames accordingly, and sends only processed telemetry data to the cloud.
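To make the idea of filtering locally more concrete, here is a minimal sketch in Python. It is not Azure Percept code: the `Detection` class, the allowed labels and the confidence threshold are all hypothetical, and it assumes some on-device model has already produced a list of detections for a frame. The point is only that a frame's raw content never leaves the device; at most a small summary does.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object found in a frame by a (hypothetical) on-device model."""
    label: str
    confidence: float


# Hypothetical policy values, chosen only for illustration.
ALLOWED_LABELS = {"package", "vehicle"}
MIN_CONFIDENCE = 0.6


def filter_detections(detections):
    """Keep detections with an allowed label and a high enough confidence."""
    return [
        d for d in detections
        if d.label in ALLOWED_LABELS and d.confidence >= MIN_CONFIDENCE
    ]


def build_telemetry(detections):
    """Return a small summary for one frame, or None if nothing should be sent."""
    kept = filter_detections(detections)
    if not kept:
        return None  # the frame is dropped locally; nothing leaves the device
    return {
        "labels": sorted({d.label for d in kept}),
        "count": len(kept),
    }


frame = [
    Detection("package", 0.97),
    Detection("person", 0.91),   # not an allowed label, so it is filtered out
    Detection("vehicle", 0.40),  # below the confidence threshold
]
print(build_telemetry(frame))  # {'labels': ['package'], 'count': 1}
```

Only the summary dictionary would be sent upstream in this sketch; the image itself and the filtered-out detections stay on the device.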

Azure Percept Vision is capable of Intelligent Video Analytics. It uses an Intel Movidius Vision Processing Unit (VPU) for AI acceleration and has an 8-megapixel RGB camera running at 30 frames per second. It can recognize common objects without requiring any coding. We’ll demonstrate how to train an ML model using Azure Custom Vision and deploy it to Azure Percept.

Every time we see this “on-air” sign, it typically means someone is broadcasting and sound is being recorded. It alerts everyone to be quiet! At this point, the microphone is always listening and recording every little sound around it.

Hear no evil.

What we really want is devices that are not always listening but are smart enough to listen and respond when we need them. These devices should be context-aware and limit their listening by activating only when they hear the “wake” keyword. That’s the premise of smart assistant devices. Appliances these days, including TVs and refrigerators, are becoming smart assistants capable of voice commands.
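The wake-keyword behavior can be pictured as a tiny state machine. The sketch below is a toy, not how Azure Percept Audio is implemented: plain strings stand in for audio chunks, the wake word `computer` is made up, and a real device would use a keyword-spotting model instead of string comparison. It only illustrates that nothing is stored until the keyword is heard.

```python
WAKE_WORD = "computer"  # hypothetical keyword, for illustration only


class WakeWordGate:
    """Discard input until the wake word is heard, then capture one command."""

    def __init__(self, wake_word=WAKE_WORD):
        self.wake_word = wake_word
        self.listening = False
        self.captured = []

    def feed(self, chunk):
        """Process one chunk; return True if the chunk was kept."""
        if not self.listening:
            # Idle: nothing is stored, we only check for the wake word.
            if chunk.lower() == self.wake_word:
                self.listening = True
            return False
        # Active: keep exactly one chunk (the command), then go idle again.
        self.captured.append(chunk)
        self.listening = False
        return True


gate = WakeWordGate()
for chunk in ["private chatter", "computer", "turn on the lights", "more chatter"]:
    gate.feed(chunk)

print(gate.captured)  # ['turn on the lights']
```

Everything said before the wake word, and everything after the single captured command, is dropped without being recorded.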

Azure Percept Audio has the capability to become a smart assistant. It has a 180-degree far-field linear microphone array and visual indicators. It adds speech AI capability to the Azure Percept dev kit and contains a preconfigured audio processor. Once the keyword is detected, it starts recording and sends the audio to the cloud for natural language processing.

Browsers can track our activities, and that activity can be monitored with or without consent. Websites and advertisers team up to gather browsing data and build a detailed profile for commercial gain. Sometimes it feels like being watched and followed around by salespeople in a store.

Speak no evil.

What we really want is devices that are intelligent enough to limit the information sent to the cloud. These devices should process data closer to the user and limit their tracking capabilities. That’s what we mean by the Intelligent Edge: the analysis of data happens where the data is generated. The intelligent edge reduces latency, costs, and security risks, yielding better efficiency.

Azure Percept Trust Module can run AI at the edge. With built-in hardware acceleration, it can run AI models without a connection to the cloud. It’s powerful enough for developing vision and audio AI solutions. It has Wi-Fi and Bluetooth connectivity, plus Ethernet, USB-A and USB-C ports for connecting peripheral devices. Its processor is fast enough to filter and process data.

Azure Percept Device Kit

Azure Percept device kit has 3 components: Vision, Audio and Trust Module.

See no evil. Hear no evil. Speak no evil.

Azure Percept Studio is the management tool for Azure Percept devices. It makes it easy to integrate edge AI-capable hardware with Azure IoT cloud services, and it is used for creating edge AI models and solutions. Azure Percept Studio integrates with IoT Hub, Custom Vision, Speech Studio and Azure ML, so it can be used to create AI solutions without significant IoT and AI experience.

Each Azure Percept device has endpoints for collecting initial and ongoing training data. Percept Studio handles the deployment of AI models to devices, allowing rapid prototyping and iterative model development for vision and speech scenarios.

Here are the unboxing and assembly instructions and the setup and onboarding instructions for the Azure Percept DK device. They walk through how to connect to Wi-Fi and complete the initial device setup. An Azure subscription is required for setup and for a proper connection to Azure Percept Studio and IoT Hub. The onboarding process lets you name the device; in this case, I named it “percept-device-01”.

Once the device is set up, check in Azure Percept Studio whether the device is online. If it is, the status is set to “Connected”. If it’s offline, the status is set to “Disconnected”.

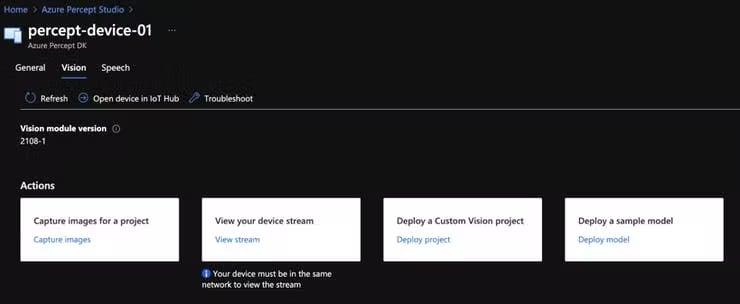

Click on the device “percept-device-01”, and it will describe the device details. Go to the “Vision” tab to manage AI models and view the device stream. Let’s walk through each action.

“View stream” - Use this to view the live video stream from the device. Note that your computer must be on the same network as the device to view the stream; this is an added security measure in Azure Percept.

“Deploy a sample model” - The easiest way to test the capabilities of Azure Percept is to deploy a sample model. In this case I used the General object detection model and deployed it to the device. Azure Percept Studio automatically connects to the device and tells it where to download the AI model from. The device then downloads the model from Azure storage.

The web stream video shows a bounding box around each item that’s detected. In this case, the Orangutan figurine is being detected as a teddy bear.

Back to the topic of monkeys. Here’s a better view of those Orangutans. I bought them on Amazon.

Since the sample object detection model couldn’t detect these objects, let’s train a new AI model using Azure Custom Vision.

I followed this tutorial to create an Azure Custom Vision project.

Before we can train, we need sample images. That’s where the “Capture images” action becomes useful. Azure Percept Studio can send a message to the device to capture an image from the current video stream and send it to Azure Custom Vision. First, select the Azure Custom Vision project, then click “Take photo”.

Once the photo has been taken, click on “View Custom Vision Project”. Click on “Untagged” and select the photo that needs annotation. Draw a bounding box around each object and tag it.

After annotating, click the “Train” button at the top of the screen. In this case we’re doing “Quick Training”. You can also choose “Advanced Training” and specify the number of hours to train. Click the “Train” button to start the process.

After training, click the “Performance” tab to view the results. The goal is to get close to 100%, but not exactly 100%. Sometimes the model may need more images to be effective.

Go back to Azure Percept Studio and click on “Deploy project”. This deploys a model from Azure Custom Vision to the Percept device. Select the project and model iteration, then click the “Deploy Model” button below.

Go back to the device stream and wait a few minutes until the model is updated. Verify that the model is working. In this case, it can detect “speak”, “hear” and “see” with corresponding bounding boxes.

To view the data sent by the Azure Percept device, click “General” -> “View live telemetry”. Wait a few seconds until it starts receiving the data stream.

The data sent to IoT Hub looks something like this:

[{
  "body": {
    "NEURAL_NETWORK": [
      {
        "bbox": [0.32, 0, 0.592, 0.834],
        "label": "speak",
        "confidence": "0.970703",
        "timestamp": "1633275502757261752"
      }
    ]
  },
  "enqueuedTime": "2021-10-03T15:38:24.365Z"
}]
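Here is a minimal sketch of how a downstream consumer might read a message shaped like the sample above, using only the Python standard library. Several things are assumptions on my part rather than documented behavior: that `bbox` holds normalized `[x_min, y_min, x_max, y_max]` values, that `timestamp` is nanoseconds since the Unix epoch (which lines up with the `enqueuedTime` in the sample), and the 1920x1080 frame size, which is purely illustrative. The function name and the confidence threshold are hypothetical too.

```python
import json
from datetime import datetime, timezone

# The sample message from this post, as a JSON string.
SAMPLE = """
[{
  "body": {
    "NEURAL_NETWORK": [
      {
        "bbox": [0.32, 0, 0.592, 0.834],
        "label": "speak",
        "confidence": "0.970703",
        "timestamp": "1633275502757261752"
      }
    ]
  },
  "enqueuedTime": "2021-10-03T15:38:24.365Z"
}]
"""


def parse_detections(raw, frame_width=1920, frame_height=1080, min_confidence=0.5):
    """Turn raw telemetry JSON into a list of simplified detection dicts."""
    results = []
    for message in json.loads(raw):
        for det in message["body"].get("NEURAL_NETWORK", []):
            confidence = float(det["confidence"])  # sent as a string
            if confidence < min_confidence:
                continue
            # Assumption: bbox is normalized [x_min, y_min, x_max, y_max].
            x1, y1, x2, y2 = det["bbox"]
            # Assumption: timestamp is nanoseconds since the Unix epoch.
            seconds = int(det["timestamp"]) // 1_000_000_000
            results.append({
                "label": det["label"],
                "confidence": confidence,
                "box_px": (
                    round(x1 * frame_width), round(y1 * frame_height),
                    round(x2 * frame_width), round(y2 * frame_height),
                ),
                "captured_at": datetime.fromtimestamp(seconds, tz=timezone.utc).isoformat(),
            })
    return results


for detection in parse_detections(SAMPLE):
    print(detection)
```

Note that `confidence` and `timestamp` arrive as strings in the sample, so they need to be converted before they can be compared or formatted.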

Summary

Azure Percept is an easy way to prototype and deploy AI to the Edge. Azure Percept Studio makes it easy to manage models for Azure Percept Devices.

We deployed a sample model to the device, captured images for training, trained a new machine learning model using Azure Custom Vision, then deployed that model to the Azure Percept device and verified the data stream.

Getting Started With Azure Percept:

https://docs.microsoft.com/azure/azure-percept/

Join the adventure, Get your own Percept here

Get Azure https://www.azure.com/account/free
