Crafting Efficient MongoDB Queries

Roman Right

Posted on November 30, 2023

MongoDB, a leading database in the NoSQL domain, is not just about storing data—it's about harnessing it efficiently. Dive into the heart of its query system, understand its inner workings, and discover strategies to transform the way you interact with and retrieve your data.

MongoDB's Response Mechanism: The Power of the explain() Method.

Imagine sending a message out into the void, hoping for an answer but never quite knowing if your message was received, let alone understood. That's what querying without feedback feels like. With MongoDB's explain() method, we get to peek behind the curtain, understanding how our queries fare in the grand scheme of things.

What's this explain() magic?

At its core, explain() provides insights into the execution of MongoDB operations. By analyzing the output, developers can decode the database's decision-making process, getting a step-by-step walkthrough of how a query is executed. This doesn't just make you feel like a database wizard, but it also arms you with the knowledge to optimize queries for better performance.

Deciphering the Talkback

From the troves of information that explain() returns, a few fields stand out in their importance:

executionStats: This provides an overview of how your query was executed. Here you'll see details about the number of documents scanned, the number of documents returned, and how long the query took.

executionStages: Nested inside executionStats, this gives a closer look at the mechanics of your query's execution. It reveals whether your query did a full collection scan (COLLSCAN) or used an index (IXSCAN).

queryPlanner: Offers insight into MongoDB's thought process. What strategy did it decide was best for your query (winningPlan)? Did it consider other strategies before choosing one (rejectedPlans)?
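To make these fields concrete, here is a small JavaScript sketch that summarizes an executionStats object of the kind returned by explain("executionStats"). It assumes the standard field names totalDocsExamined, nReturned and executionTimeMillis, plus the nested executionStages tree, where each stage has a stage name and either an inputStage or an inputStages array. The helper names collectStages and summarizeExecutionStats are hypothetical.

```javascript
// Hypothetical helpers for reading the executionStats section of
// explain("executionStats") output.

// Walk the stage tree and collect stage names from the root down
function collectStages(stage, out = []) {
  if (!stage) return out;
  out.push(stage.stage);
  if (stage.inputStage) collectStages(stage.inputStage, out); // e.g. FETCH -> IXSCAN
  for (const child of stage.inputStages || []) collectStages(child, out); // e.g. OR
  return out;
}

function summarizeExecutionStats(stats) {
  const stages = collectStages(stats.executionStages);
  return {
    stages,
    usedCollectionScan: stages.includes("COLLSCAN"),
    usedIndex: stages.includes("IXSCAN"),
    // Documents examined per document returned; values near 1 are ideal
    docsExaminedPerReturned: stats.totalDocsExamined / Math.max(stats.nReturned, 1),
    executionTimeMillis: stats.executionTimeMillis,
  };
}
```

A docsExaminedPerReturned value far above 1 means MongoDB is reading many documents only to discard them. That often points to a missing or poorly chosen index.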

To bring these abstract concepts to life, let's consider a simple query where we wish to fetch all products in category "A" from our fictional collection:

collection.find({"category": "A"})

When we run this query with explain("executionStats") and there is no index on the "category" field, the output shows that MongoDB had to scan every single document in the collection to find matches. This is evident from the COLLSCAN stage in executionStages and from totalDocsExamined being 1,000,000.

However, when an index on the category field is introduced and the same query is analyzed with explain(), the difference is night and day. Instead of COLLSCAN, executionStages now shows an IXSCAN stage (feeding a FETCH stage that retrieves the matching documents), indicating that the database used the index. Moreover, totalDocsExamined drops to 199,916, showcasing the immense power of indexing. It's important to remember why such improvements in query performance are valuable: querying less data means spending less time doing it. This efficiency frees up compute cycles, allowing other work to proceed more smoothly. Consequently, there's a direct impact on database hosting costs, as reduced processing time and resource usage can lead to significant cost savings.
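The drop in documents examined can be illustrated without a database. The JavaScript sketch below is only an analogy, not how MongoDB stores indexes. It compares a full scan that examines every document with a lookup through a Map from category to matching documents, and it counts how many documents each approach touches. The data set and function names are made up for illustration.

```javascript
// Illustrative analogy only: an in-memory "collection" and a Map-based "index".
function makeProducts(count) {
  const categories = ["A", "B", "C", "D", "E"];
  const docs = [];
  for (let i = 0; i < count; i++) {
    docs.push({ _id: i, category: categories[i % categories.length] });
  }
  return docs;
}

// COLLSCAN analogue: every document is examined
function collectionScan(docs, category) {
  let examined = 0;
  const matches = [];
  for (const doc of docs) {
    examined++;
    if (doc.category === category) matches.push(doc);
  }
  return { matches, examined };
}

// Build a category -> documents map once, as an index is maintained up front
function buildCategoryIndex(docs) {
  const index = new Map();
  for (const doc of docs) {
    if (!index.has(doc.category)) index.set(doc.category, []);
    index.get(doc.category).push(doc);
  }
  return index;
}

// IXSCAN + FETCH analogue: only the documents the index points to are examined
function indexLookup(index, category) {
  const matches = index.get(category) || [];
  return { matches, examined: matches.length };
}

const products = makeProducts(1000);
const scan = collectionScan(products, "A");
const viaIndex = indexLookup(buildCategoryIndex(products), "A");
console.log(scan.examined, viaIndex.examined); // 1000 200
```

Both approaches return the same 200 documents. The difference lies entirely in how many documents had to be looked at to find them.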

Bridging Understanding for Efficiency

Understanding the feedback from MongoDB is akin to listening intently in a conversation. By focusing on what MongoDB is 'saying', we can adjust our questions (queries) to be more in tune with the database's strengths. Indexing, for instance, becomes a clear pathway to optimization once we've grasped how powerful it can be from the explain() outputs.

Mastering Indexes in MongoDB: Balancing Adaptability and Performance.

Imagine trying to find a particular book in a colossal library. Without any categorization or system, the task would be daunting and time-consuming. Similarly, in the grand library of MongoDB data, indexes serve as our guiding system, directing our queries with precision and speed. We've just witnessed how a well-placed index can turn an exhaustive COLLSCAN into a much more performant IXSCAN. But what's behind this magic? Let's journey into the heart of MongoDB indexing.

A Deep Dive into Indexing's Role

In essence, an index in MongoDB is a data structure that holds a subset of the collection's data in an easy-to-traverse form. This subset, ordered by the value of a specific field or set of fields, allows MongoDB to avoid scanning every document in a collection when searching for data. As seen in our earlier example with product categories, indexing can greatly enhance search efficiency.
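Because index entries are kept in key order, MongoDB can jump to the first matching key instead of starting at the beginning. MongoDB's indexes are B-trees. The sketch below deliberately simplifies that structure to a sorted array of { key, id } entries searched with binary search, which is enough to show why ordering makes equality and range lookups cheap. The names buildSortedIndex, lowerBound and rangeLookup are hypothetical.

```javascript
// Simplified model of an ordered index: a sorted array of { key, id } entries.
// Real MongoDB indexes are B-trees; this only illustrates the ordering idea.
function buildSortedIndex(docs, field) {
  return docs
    .map((doc) => ({ key: doc[field], id: doc._id }))
    // Sort by key, breaking ties by numeric document id
    .sort((a, b) => (a.key < b.key ? -1 : a.key > b.key ? 1 : a.id - b.id));
}

// Binary search for the position of the first entry whose key is >= target
function lowerBound(index, target) {
  let lo = 0;
  let hi = index.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (index[mid].key < target) lo = mid + 1;
    else hi = mid;
  }
  return lo;
}

// Return ids with min <= key <= max, reading only the entries inside the range
function rangeLookup(index, min, max) {
  const ids = [];
  for (let i = lowerBound(index, min); i < index.length && index[i].key <= max; i++) {
    ids.push(index[i].id);
  }
  return ids;
}

const docs = [
  { _id: 1, price: 700 },
  { _id: 2, price: 150 },
  { _id: 3, price: 450 },
  { _id: 4, price: 500 },
  { _id: 5, price: 90 },
];
const priceIndex = buildSortedIndex(docs, "price");
console.log(rangeLookup(priceIndex, 100, 500)); // [ 2, 3, 4 ]
```

The lookup never reads the entries for prices 90 or 700 beyond a single comparison. That is the property that lets an index avoid scanning the whole collection.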

The Colorful Palette of Indexes in MongoDB

MongoDB offers a rich set of index types and options for anyone serious about query tuning. Each index type is designed for specific use cases, ensuring efficient querying and data retrieval. Here's a rundown of these index types, explaining their purpose, how they work internally, and their primary use cases:

Single Field:

- Purpose: To support efficient searches on individual fields.

- How it works: This index type creates a B-tree structure on a single field, enabling fast lookups.

- Use-case: Suited for situations where queries primarily focus on one field, like looking up users by their username.

Compound:

- Purpose: To handle queries that combine multiple fields.

- How it works: This index spans multiple fields within a document. A B-tree structure is maintained for the combination of these fields.

- Use-case: Useful when querying on conditions that involve multiple fields, like finding orders based on both customer ID and product ID.

Multikey:

- Purpose: To index array data effectively.

- How it works: Indexes each individual element of an array.

- Use-case: Suited for situations where queries target specific array values, like filtering products by tags.

Text:

- Purpose: To provide efficient full-text search capabilities.

- How it works: It tokenizes strings and stores them as an inverted index, making text searches faster.

- Use-case: Suitable for applications that require text search functionality, like searching for articles containing specific words or phrases.

Geospatial:

- Purpose: To facilitate queries based on geographical locations.

- How it works: MongoDB provides 2dsphere indexes for GeoJSON data on an Earth-like sphere and 2d indexes for legacy coordinate pairs on a flat plane, both of which support efficient location-based queries.

- Use-case: Ideal for location-based services, like finding nearby restaurants or calculating distances between points.

Hashed:

- Purpose: Primarily to support sharding.

- How it works: It hashes the field's value and then indexes this hash, ensuring an even distribution of data.

- Use-case: Suitable for databases that use sharding to distribute data across multiple servers or clusters.

Wildcard:

- Purpose: To offer flexibility by indexing fields dynamically.

- How it works: Using the $** key pattern, it indexes every field in each document (or every field under a chosen path), so the fields do not need to be specified in advance.

- Use-case: Useful in scenarios where the structure of documents is dynamic or not well-defined in advance.

Sparse:

- Purpose: To keep the index small, saving disk space and memory.

- How it works: Only includes documents where the indexed field is present, ensuring the index remains lightweight.

- Use-case: Ideal for collections where only some documents contain the indexed field, like optional attributes in user profiles.

TTL (Time-to-Live):

- Purpose: To automate data pruning.

- How it works: A background process periodically removes documents once a specified number of seconds (expireAfterSeconds) has passed since the date stored in the indexed field.

- Use-case: Perfect for data with a natural expiration, such as session data or temporary logs.

Partial:

- Purpose: To ensure resource efficiency.

- How it works: Only indexes a subset of documents, based on a defined filter condition.

- Use-case: Designed for large collections where only a specific fraction of documents are relevant for indexing, like indexing only 'active' user accounts.
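For reference, each index type in the list above corresponds to a key pattern and, for the option-based types, an index option. The sketch below collects them as plain JavaScript objects and passes each one to createIndex(keys, options), a method exposed both by mongosh collections and by the Node.js driver's Collection class. The field names (username, customerId, productId, tags, body, location, userId, nickname, createdAt, lastLogin, status) and the applyIndexes helper are hypothetical. In practice you would create only the indexes your queries actually need.

```javascript
// Hypothetical index definitions, one per type described above.
// Each entry is [keys, options] as accepted by createIndex(keys, options).
const indexSpecs = [
  // Single field
  [{ username: 1 }, {}],
  // Compound: field order matters for which queries can use it
  [{ customerId: 1, productId: 1 }, {}],
  // Multikey: no special syntax; MongoDB marks the index multikey
  // automatically when an indexed document holds an array in that field
  [{ tags: 1 }, {}],
  // Text (a collection can have at most one text index)
  [{ body: "text" }, {}],
  // Geospatial on GeoJSON data
  [{ location: "2dsphere" }, {}],
  // Hashed, e.g. for use as a hashed shard key
  [{ userId: "hashed" }, {}],
  // Wildcard over every field
  [{ "$**": 1 }, {}],
  // Sparse: skips documents that lack the field
  [{ nickname: 1 }, { sparse: true }],
  // TTL: documents expire about an hour after their createdAt date
  [{ createdAt: 1 }, { expireAfterSeconds: 3600 }],
  // Partial: index only documents matching the filter
  [{ lastLogin: 1 }, { partialFilterExpression: { status: "active" } }],
];

// Works with any object exposing createIndex(keys, options), such as a
// mongosh collection or a Node.js driver Collection (which returns a Promise).
async function applyIndexes(collection, specs) {
  const names = [];
  for (const [keys, options] of specs) {
    names.push(await collection.createIndex(keys, options));
  }
  return names;
}
```

Keeping the definitions as data makes it easy to review them against your actual query patterns before creating anything.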

Each of these indexes plays a crucial role in ensuring MongoDB's versatility and performance. By understanding the strengths and ideal use-cases for each type, developers can craft optimized, responsive, and resource-efficient applications.

The Delicate Balance: Tradeoffs of Indexing

However, with great power comes great responsibility. Indexes aren't a silver bullet: while they speed up read operations, they introduce overhead:

Write Operation Delays: Every time data is written or updated in the collection, the corresponding index also needs an update. This can introduce latency, especially if multiple indexes exist for a collection.

Space Overheads: Indexes consume disk space. In some scenarios, especially with multiple compound indexes, the indexes' size can exceed the size of the data itself.

In the world of databases, there's always a balancing act between read and write efficiency and the resource footprint. The art lies in strategically creating indexes based on the specific needs of the application and the query patterns, ensuring that the benefits outweigh the costs.

MongoDB's Best Practices: Streamlining Data Operations with Native Techniques

Paging Through Your Data: From Clunky Steps to Graceful Strides

Pagination is one of those ubiquitous data operations that sounds simple but can be tricky to implement efficiently, especially when dealing with copious amounts of data.

Traditional Approach: Skip and Limit

A conventional method involves using skip() and limit():

collection.find({}).sort({"_id": 1}).skip(50000).limit(100) // sorting keeps page order stable

This method skips the first 50,000 documents and then returns the next 100. It works well for smaller datasets, but performance degrades as the offset grows, because the server still has to walk past every skipped document or index entry before it can return anything. It's like trying to jump to the last chapter of a book but having to flip through every single page to get there.

The Efficient Turn: Range Queries

A more efficient strategy involves using range queries. Instead of skipping records, you position your query based on a certain field's range.

Let's say we're working with a timestamp. Instead of skipping over documents, we'd pull records that have a timestamp greater than the last one we've processed:

collection.find({"timestamp": {"$gt": lastKnownTimestamp}}).sort({"timestamp": 1}).limit(100) // ascending, so each page continues where the last ended

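Here, we've bypassed the clunky skip and are now taking graceful strides through the data. With an index on timestamp, MongoDB can seek straight to the first entry after lastKnownTimestamp, so each page costs roughly the same no matter how deep into the data it is. If several documents can share a timestamp, add a unique tiebreaker such as _id to both the sort and the range condition so that none are skipped.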
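The difference can be simulated in memory. The JavaScript sketch below pages through a list sorted by (timestamp, _id) in two ways. The first uses skip/limit semantics, where reaching a deep page means walking past every earlier entry. The second uses a range ("keyset") condition that resumes after the last entry of the previous page. Using _id as a tiebreaker is an assumption added to the timestamp-only example above; it keeps documents that share a timestamp from being skipped. The helper names are hypothetical. nextPageFilter only builds an ordinary filter object with $or, $gt and equality conditions.

```javascript
// In-memory simulation of skip/limit versus range-based (keyset) pagination.
// Entries are assumed sorted by (timestamp, _id), as an index on both would be.
function compareKeys(a, b) {
  if (a.timestamp !== b.timestamp) return a.timestamp - b.timestamp;
  return a._id - b._id;
}

// skip/limit: entries before the page are walked over, not just the page itself
function pageWithSkip(sorted, skip, limit) {
  const walked = Math.min(skip + limit, sorted.length);
  return { page: sorted.slice(skip, skip + limit), walked };
}

// Keyset: binary-search past `last`, then read `limit` entries.
// `walked` counts only the entries read after the seek.
function pageAfter(sorted, last, limit) {
  let lo = 0;
  let hi = sorted.length;
  while (last && lo < hi) {
    const mid = (lo + hi) >> 1;
    if (compareKeys(sorted[mid], last) <= 0) lo = mid + 1;
    else hi = mid;
  }
  const page = sorted.slice(lo, lo + limit);
  return { page, walked: page.length };
}

// Filter a real query could use for the next page, with _id breaking ties
function nextPageFilter(last) {
  return {
    $or: [
      { timestamp: { $gt: last.timestamp } },
      { timestamp: last.timestamp, _id: { $gt: last._id } },
    ],
  };
}

// Two documents per timestamp, so ties are present
const sorted = Array.from({ length: 1000 }, (_, i) => ({ _id: i, timestamp: Math.floor(i / 2) }));
const bySkip = pageWithSkip(sorted, 500, 100);
const byKey = pageAfter(sorted, sorted[499], 100);
console.log(bySkip.walked, byKey.walked); // 600 100
console.log(bySkip.page[0]._id === byKey.page[0]._id); // true
```

Both strategies return the same page, but the skip-based one walks through six times as many entries to get there. That gap keeps widening as the offset grows.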

Textual Battles: Regex vs. Text Search

Text searches are pivotal for any database, and MongoDB offers two primary tools: regular expressions (regex) and text search.

Original Approach: Text Search

MongoDB's text search is designed to be nuanced, taking into account language-specific stop words and stemming. It requires a text index, and when it is used in an aggregation pipeline, the $match stage containing $text must be the first stage:

collection.find({$text: {$search: "coffee"}})

The Efficient Route: Regex

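If a regex can replace the text search in your use case, it can be the more efficient choice, particularly when an earlier match step has already reduced the number of documents to be scanned. Unlike $text, a regex condition does not have to come first in the pipeline, so it can be applied after that match step. Keep in mind that the two are not interchangeable: a regex matches raw substrings, while text search applies stemming and stop words. Also, an unanchored, case-insensitive regex like the one below cannot use an index efficiently on its own: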

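collection.aggregate([
  // A selective filter (ideally backed by an index) narrows the documents first
  {$match: {"category": "A"}},
  // The case-insensitive regex then runs only on the remaining documents
  {$match: {"productName": /coffee/i}}
])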

Here, the regex operates on a reduced set of documents due to the preceding match step, resulting in a more efficient query.
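A rough in-memory illustration of why the preceding match step matters follows. The sketch counts how many times the regex is evaluated with and without a cheap equality filter (here a hypothetical category check) in front of it. It is only an analogy for what happens inside the pipeline, and the product data is made up. It also shows the substring behavior mentioned above: /coffee/i matches "Coffeemaker", whereas $text works on whole, stemmed terms.

```javascript
// Analogy only: count regex evaluations with and without a prior filter.
const products = [
  { category: "A", productName: "Coffee Beans" },
  { category: "A", productName: "Green Tea" },
  { category: "B", productName: "Coffeemaker" },
  { category: "B", productName: "Coffee Mug" },
  { category: "C", productName: "Cocoa" },
];

function regexMatch(docs, pattern) {
  let evaluations = 0;
  const matches = docs.filter((doc) => {
    evaluations++;
    return pattern.test(doc.productName);
  });
  return { matches, evaluations };
}

const pattern = /coffee/i; // no g flag, so test() keeps no state between calls

// Regex alone: every document is tested
const regexOnly = regexMatch(products, pattern);

// Equality filter first (in MongoDB this part could use an index), then regex
const prefiltered = regexMatch(
  products.filter((doc) => doc.category === "B"),
  pattern
);

console.log(regexOnly.evaluations, prefiltered.evaluations); // 5 2
console.log(prefiltered.matches.map((d) => d.productName)); // [ 'Coffeemaker', 'Coffee Mug' ]
```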

Choosing Wisely: The $in Operator vs. the $or Operator

When we're dealing with selecting documents based on multiple criteria, MongoDB presents us with two operators: $in and $or. Both serve a purpose, but there's a marked difference in how they impact performance. Let's embark on a journey to understand when and why you might favor one over the other.

Original Approach: Using $or

Consider this scenario: you want to fetch products from categories 'A' and 'B' but only those priced at $500 or less. Using the $or operator, your query would resemble:

db.getCollection("products").find({
  "$or": [
    {"category": "A", "price": {"$lte": 500}},
    {"category": "B", "price": {"$lte": 500}}
  ]
}).explain()

Here, MongoDB treats the two clauses as separate conditions. When every clause is supported by an index, it plans each clause of the $or independently, runs a separate index scan for each, and merges the results, discarding duplicates. If any clause lacks a supporting index, it falls back to a collection scan that evaluates the whole $or expression against every document. For modest datasets this hardly matters, but as the volume of data grows, the repeated per-clause work can consume more time and resources.

The Streamlined Method: Using $in

Enter the more succinct $in operator:

db.getCollection("products").find({
  "category": {"$in": ["A", "B"]},
  "price": {"$lte": 500}
}).explain()

This refined query expresses the same conditions as the previous $or. What sets $in apart is how it uses the index: when an index exists on the category field, MongoDB can cover both values with a single index scan whose bounds include "A" and "B", and then apply the price condition once while fetching the matching documents. There is no need to plan and run a separate scan for each value, which makes retrieval more streamlined.

The choice between $in and $or might appear nuanced at first glance, but these subtleties can profoundly influence database performance. In our endeavor to master the MongoDB vernacular, recognizing such distinctions enables us to converse more efficiently with our data repositories. Knowledge is power, and in this context, it's also speed.
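To check that the two forms really ask the same question, the sketch below evaluates both filters against a few sample documents using a tiny hand-written matcher. It also includes a helper that rewrites an $or whose clauses differ only in the value of one equality field into the $in form. The matcher supports only the operators used here ($in, $lte, a top-level $or and plain equality), and it is not how MongoDB evaluates queries. The function names and sample documents are hypothetical.

```javascript
// Tiny matcher supporting only plain equality, $in, $lte and a top-level $or.
function matchesCondition(value, cond) {
  if (cond !== null && typeof cond === "object") {
    if ("$in" in cond && !cond.$in.includes(value)) return false;
    if ("$lte" in cond && !(value <= cond.$lte)) return false;
    return true;
  }
  return value === cond; // plain equality
}

function matches(doc, filter) {
  return Object.entries(filter).every(([field, cond]) =>
    field === "$or"
      ? cond.some((clause) => matches(doc, clause))
      : matchesCondition(doc[field], cond)
  );
}

// Rewrite { $or: [{ f: v1, ...rest }, { f: v2, ...rest }] } into
// { f: { $in: [v1, v2] }, ...rest } when every clause shares the same rest.
// The remaining conditions are compared by JSON form, so key order must match.
function orToIn(orFilter, field) {
  const clauses = orFilter.$or;
  const restOf = (clause) => {
    const { [field]: _ignored, ...rest } = clause;
    return JSON.stringify(rest);
  };
  const sharedRest = restOf(clauses[0]);
  if (!clauses.every((c) => field in c && restOf(c) === sharedRest)) return null;
  return { [field]: { $in: clauses.map((c) => c[field]) }, ...JSON.parse(sharedRest) };
}

const orFilter = {
  $or: [
    { category: "A", price: { $lte: 500 } },
    { category: "B", price: { $lte: 500 } },
  ],
};
const inFilter = orToIn(orFilter, "category");
console.log(JSON.stringify(inFilter));
// {"category":{"$in":["A","B"]},"price":{"$lte":500}}

const sampleDocs = [
  { category: "A", price: 300 },
  { category: "B", price: 900 },
  { category: "C", price: 100 },
  { category: "B", price: 500 },
];
console.log(sampleDocs.every((d) => matches(d, orFilter) === matches(d, inFilter))); // true
```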

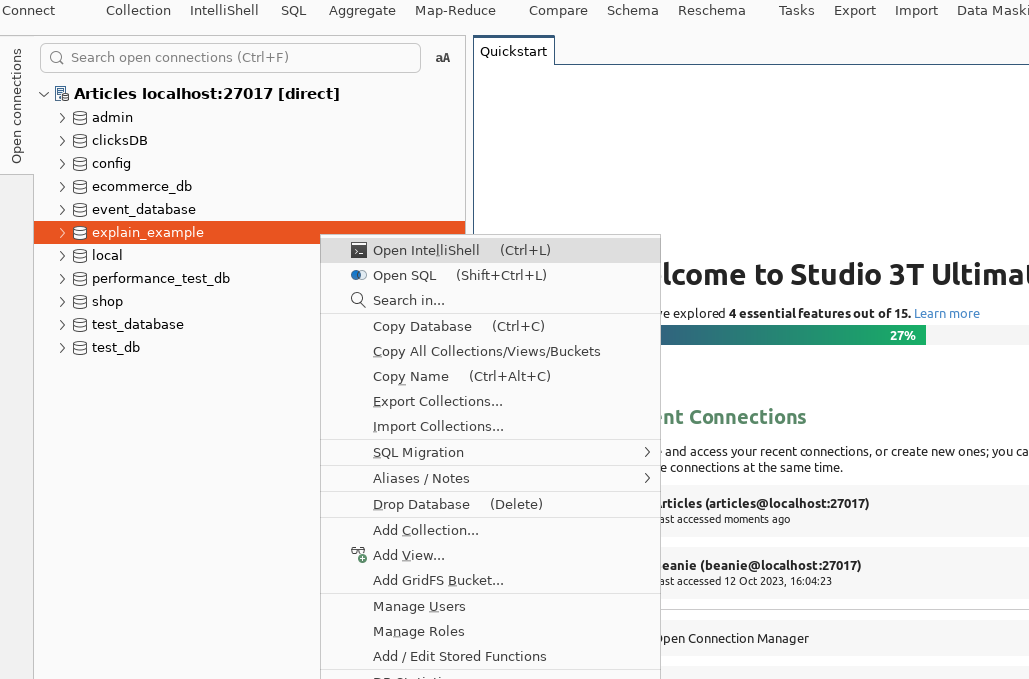

Decoding Query Performance with Studio 3T's IntelliShell

Studio 3T is one of the foremost MongoDB GUIs and provides a myriad of tools to facilitate seamless database interaction, including the IntelliShell, which gives developers the ability to write, edit, and execute MongoDB commands in a shell-like environment.

When trying to decipher the efficiency of different MongoDB operations, the .explain() method becomes crucial. Using Studio 3T's IntelliShell tool, we can run .explain() on queries to garner insights into how MongoDB processes them. Let's dive deep into this by comparing the $in vs. $or operators using the provided examples.

Step-by-Step Guide:

1. Launch IntelliShell:

In Studio 3T, click on the IntelliShell button to open a new tab where you can input MongoDB commands.

2. Execute the $in Query:

db.products.find({
  "category": {"$in": ["A", "B"]},
  "price": {"$lte": 500}
}).explain()

Upon executing, you'll get a JSON response. The key sections to pay attention to:

- winningPlan: This section reveals the plan MongoDB chose to execute your query. For this query, the winning plan is a FETCH stage that filters on price, fed by an IXSCAN stage that uses the index on the category field.
- indexName: This confirms that the query is using the category_1 index, so the indexed field is boosting query performance.

3. Execute the $or Query:

db.products.find({
  "$or": [
    {"category": "A", "price": {"$lte": 500}},
    {"category": "B", "price": {"$lte": 500}}
  ]
}).explain()

In the $or query response:

- The winningPlan section shows a SUBPLAN stage, which indicates that MongoDB planned each clause of the $or separately. It then uses an OR stage to combine the results of two independent FETCH and IXSCAN branches.
- Notably, even though the $or query uses the same category_1 index, it evaluates each condition in the $or array separately. This distinction highlights why the $in operator tends to be more efficient than $or for this specific use case.

4. Comparative Analysis:

- Both queries use the category_1 index. However, their execution differs.
- $in offers a streamlined approach with a single FETCH and IXSCAN.
- In contrast, $or evaluates each condition separately, leading to two separate FETCH and IXSCAN operations.
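One way to see the "single scan, several values" point in the raw output is the indexBounds field of the IXSCAN stage, which lists the key intervals the scan covers. The sketch below counts the intervals per field in an IXSCAN node. The node shown is an abbreviated, hand-written example in the format mongosh prints (bounds as strings such as '["A", "A"]'), not captured output. describeIxscan is a hypothetical name.

```javascript
// Hypothetical helper: summarize the index bounds of one IXSCAN explain node.
function describeIxscan(node) {
  if (node.stage !== "IXSCAN") throw new Error(`expected IXSCAN, got ${node.stage}`);
  const intervals = {};
  for (const [field, bounds] of Object.entries(node.indexBounds || {})) {
    intervals[field] = bounds.length; // each entry is one key interval
  }
  return { indexName: node.indexName, intervals };
}

// Abbreviated, hand-written IXSCAN node for the $in query (not real output)
const inScan = {
  stage: "IXSCAN",
  keyPattern: { category: 1 },
  indexName: "category_1",
  indexBounds: { category: ['["A", "A"]', '["B", "B"]'] },
};

console.log(describeIxscan(inScan));
// { indexName: 'category_1', intervals: { category: 2 } }
```

Applied to each IXSCAN branch of the $or plan, the same helper would be expected to report one interval per branch, since each clause gets its own scan.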

Conclusion:

Using the IntelliShell tool in Studio 3T, we can clearly see the under-the-hood differences in how MongoDB executes the $in and $or operations. For the given example, the $in operation proves to be more efficient because it uses a single index scan for multiple values. The $or operation, on the other hand, assesses each condition separately, adding overhead to query processing.

Understanding these nuances empowers developers to write optimized queries, ensuring swifter response times and efficient use of resources. Remember, the devil is in the details, and with Studio 3T's IntelliShell, you can unveil these crucial details.
