AKS and Azure Policy baseline standards - Making clusters compliant

Roberth Strand

Posted on July 26, 2021

Azure Policy for AKS has been around for a while now, and it is great for that extra control. It uses the Open Policy Agent (OPA) Gatekeeper controller to validate and enforce policies, all hands-off since it's handled through the Azure Policy add-on. As of writing, there are several built-in policies for AKS, but you cannot create your own. As far as I can tell, the built-in ones should cover most situations, and if they don't, you should probably install Gatekeeper and manage OPA policies yourself.

While researching this topic, I took a bunch of notes, as I usually do so that I can refer to them later. I later realised that I should probably have written these as blog posts instead, so that others can easily refer to them too, and that's what I'm rectifying now. From now on, all my notes are going to be in the form of blog posts.

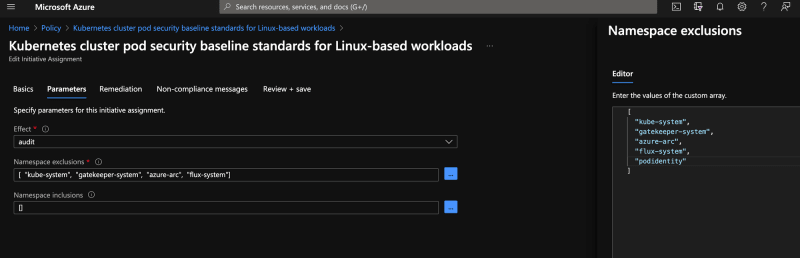

Kubernetes cluster pod security baseline standards for Linux-based workloads

There are a bunch of Kubernetes-related policies in Azure now, but so far there are only two built-in initiatives: the baseline standard, which has 5 policies, and the restricted standard, which has 3 additional ones. Which one do you want? Well, as with everything, that's for you to decide. I usually end up using the baseline one at clients that are new to the technology, all depending on their need for security and control. This post is a guide through the policies in the baseline initiative; a follow-up is in the making for the three other policies.

Let's take a detailed look at the policies set by the initiative, then at how we can write manifests that are compliant, and how to exclude namespaces if we need to.

How deployments should look

To be fully compliant with the security baseline, there is almost nothing you need to do. It's more a question of what you shouldn't do. Almost everything the baseline forbids is already turned off by default in your average deployment, so you don't need to define anything.

So if your deployment doesn't explicitly allow the pod to run as privileged, use a hostPath volume, or use the host network, host process ID or host IPC namespace, you're all good.
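To make that concrete, here is a minimal sketch in Python that treats a pod spec as a plain dict with the same field names as the manifest. The function name `risky_settings` and the example pod (`web`, `nginx:1.21`) are made up for illustration, and this is only a rough model of the idea, not how the Azure Policy add-on evaluates anything.

```python
# A pod spec as a plain dict, mirroring the fields of a YAML manifest.
# The container name and image are hypothetical.
compliant_pod_spec = {
    "containers": [
        {"name": "web", "image": "nginx:1.21"},
    ],
}


def risky_settings(pod_spec):
    """Return the settings mentioned above that this spec switches on explicitly."""
    found = []
    # Pod-level booleans that all default to false when omitted.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if pod_spec.get(field, False):
            found.append(field)
    # Any volume backed by a path on the node.
    if any("hostPath" in volume for volume in pod_spec.get("volumes", [])):
        found.append("hostPath volume")
    # Containers that ask to run as privileged.
    for container in pod_spec.get("containers", []):
        if container.get("securityContext", {}).get("privileged", False):
            found.append(f"privileged container {container['name']}")
    return found


print(risky_settings(compliant_pod_spec))
```

A spec that defines nothing beyond its containers comes back with an empty list, which is the whole point: you get compliance by leaving things out.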

Don't know what this means? Below I summarise all the policies used in this initiative and what they actually mean. If you have something that needs to be allowed, I have added a quick note on that at the end.

Kubernetes cluster pods should only use approved host network and port range

This one consists of a boolean for whether pods are allowed to use the host network namespace, plus a minimum and a maximum port that set the allowed range. The default value of allowHostNetwork is false, which means that no host network or host port range is allowed out of the box. This should be fine in most cases unless you have something that needs those special privileges, like certain identity managers or monitoring solutions, and in that case you would just exclude their namespace.

This matches the default for a pod spec, where host networking is again something you would have to set specifically. So, unless you create an application that needs this, you can just leave the deployment with no hostNetwork set.
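As a rough illustration of the rule, the sketch below checks a pod spec (again a plain dict) against a host network switch and a host port range. The parameter names `allow_host_network`, `min_port` and `max_port` are my own stand-ins for the policy settings, and the zero defaults for the range are an assumption; the real policy logic lives in Gatekeeper, so treat this as a simplified model.

```python
def host_network_violations(pod_spec, allow_host_network=False, min_port=0, max_port=0):
    """List the ways a pod spec breaks the host network and host port rule."""
    violations = []
    # hostNetwork defaults to false when it is left out of the manifest.
    if pod_spec.get("hostNetwork", False) and not allow_host_network:
        violations.append("hostNetwork is not allowed")
    for container in pod_spec.get("containers", []):
        for port in container.get("ports", []):
            host_port = port.get("hostPort")
            # Only ports that are bound on the node itself are checked.
            if host_port is not None and not (min_port <= host_port <= max_port):
                violations.append(f"hostPort {host_port} is outside {min_port}-{max_port}")
    return violations


plain_pod = {"containers": [{"name": "web", "ports": [{"containerPort": 8080}]}]}
node_level_pod = {"hostNetwork": True, "containers": [{"name": "exporter"}]}

print(host_network_violations(plain_pod))
print(host_network_violations(node_level_pod))
```

A regular containerPort is never a problem here; only hostNetwork and hostPort are.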

See more details under PodSpec in the API reference.

Kubernetes cluster containers should not share host process ID or host IPC namespace

Sharing the host's PID namespace is probably needed for certain types of applications that work directly against the nodes, but it poses a security risk if allowed for everything. Again, both of these are set to false by default in a pod spec. You would have to define hostPID or hostIPC as true, and create an exemption for your application, to get this through the policy.
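In the same dict-based style, a minimal sketch of this check could look like the following. The function name `shared_host_namespaces` and the example pods are hypothetical.

```python
def shared_host_namespaces(pod_spec):
    """Return which of hostPID and hostIPC the pod spec explicitly turns on."""
    # Both fields default to false, so a missing key counts as "not shared".
    return [field for field in ("hostPID", "hostIPC") if pod_spec.get(field, False)]


ordinary_pod = {"containers": [{"name": "web"}]}
node_debugger = {"hostPID": True, "containers": [{"name": "debugger"}]}

print(shared_host_namespaces(ordinary_pod))
print(shared_host_namespaces(node_debugger))
```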

See more details under PodSpec in the API reference.

Kubernetes cluster pod hostPath volumes should only use allowed host paths

The policy used by the initiative is set with an empty list, meaning that no hostPath volumes are allowed. The reason is that the hostPath volume type is risky, because it gives the pod direct access to the host filesystem. There aren't actually any scenarios that I can think of where this is needed unless, again, the application is very special and provides some sort of function utilised by the entire platform. If that is the case, it would probably be best to add that application's namespace to the list of exemptions.
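Here is a small sketch of what "only allowed host paths" means in practice. I am simplifying the policy setting to a flat list of path prefixes called `allowed_prefixes`, which is my own assumption about the shape; the point is only that an empty list rejects every hostPath volume while other volume types pass untouched.

```python
def host_path_violations(pod_spec, allowed_prefixes=()):
    """List hostPath volumes whose path is not under one of the allowed prefixes."""
    violations = []
    for volume in pod_spec.get("volumes", []):
        host_path = volume.get("hostPath")
        if host_path is None:
            continue  # emptyDir, configMap and friends are not affected
        # Compare on whole path segments so /var/logs does not match /var/log.
        path = host_path["path"].rstrip("/") + "/"
        allowed = any(path.startswith(prefix.rstrip("/") + "/") for prefix in allowed_prefixes)
        if not allowed:
            violations.append(f"volume {volume['name']} mounts host path {host_path['path']}")
    return violations


scratch_pod = {"volumes": [{"name": "scratch", "emptyDir": {}}]}
log_reader = {"volumes": [{"name": "logs", "hostPath": {"path": "/var/log"}}]}

print(host_path_violations(scratch_pod))
print(host_path_violations(log_reader))
```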

Kubernetes cluster containers should only use allowed capabilities

The default setting here is an empty list, which means that there are no allowed capabilities by default. This is usually not a problem, as it only applies to containers that are set up with extra capabilities like NET_ADMIN. In other words, unless you actually need something extra, you can just not define anything in your deployment and everything will work out.

If you want to lock things down even more, you can also configure this policy with a list of capabilities that containers must drop. This would then have to be reflected in your deployments, obviously. This might be something you need if you are running certain workloads in a very strict environment, but I think it would be way too much upkeep for the average security-conscious Kubernetes operator.
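Both halves of this policy can be sketched as one check on a container's securityContext. The parameter names `allowed` and `required_drop` are placeholders of mine for the two policy lists, and treating a drop of `ALL` as covering every required capability is a simplifying assumption.

```python
def capability_violations(pod_spec, allowed=(), required_drop=()):
    """List containers that add a capability outside `allowed` or skip a required drop."""
    violations = []
    for container in pod_spec.get("containers", []):
        caps = container.get("securityContext", {}).get("capabilities", {})
        # Anything under "add" must be on the allowed list.
        for cap in caps.get("add", []):
            if cap not in allowed:
                violations.append(f"{container['name']} adds {cap}")
        # Anything on the required list must show up under "drop".
        dropped = caps.get("drop", [])
        for cap in required_drop:
            if cap not in dropped and "ALL" not in dropped:
                violations.append(f"{container['name']} does not drop {cap}")
    return violations


plain = {"containers": [{"name": "web"}]}
net_tool = {
    "containers": [
        {"name": "tool", "securityContext": {"capabilities": {"add": ["NET_ADMIN"]}}}
    ]
}

print(capability_violations(plain))
print(capability_violations(net_tool))
print(capability_violations(plain, required_drop=["NET_RAW"]))
```

The last call shows the upkeep problem: as soon as a drop list is required, even a deployment that asks for nothing extra has to be edited to stay compliant.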

Kubernetes cluster should not allow privileged containers

By default, containers should not be allowed to run as privileged. This policy ensures that if you have a manifest where containers are allowed to run as privileged, it gets flagged as non-compliant. In the Kubernetes securityContext, privileged defaults to false, so you would have to explicitly set it to true to be non-compliant.

If you have an application that needs to run as privileged, I would put that in its own namespace and create an exemption.
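A minimal sketch of the privileged check, using the same dict representation of a pod spec. Looking at initContainers as well as containers is my assumption about what a sensible check covers, and the names are made up.

```python
def privileged_containers(pod_spec):
    """Return the names of containers that explicitly set privileged to true."""
    names = []
    for key in ("initContainers", "containers"):
        for container in pod_spec.get(key, []):
            # A missing securityContext or missing field means privileged is false.
            if container.get("securityContext", {}).get("privileged", False):
                names.append(container["name"])
    return names


normal_pod = {"containers": [{"name": "web", "securityContext": {"runAsNonRoot": True}}]}
node_agent = {"containers": [{"name": "agent", "securityContext": {"privileged": True}}]}

print(privileged_containers(normal_pod))
print(privileged_containers(node_agent))
```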

Creating exceptions

Sometimes, you need to run a workload that requires a bit more access. This is usually a monitoring solution or a security tool, for instance the Prometheus node exporter, which needs access to the host network. So how do we deal with the exceptions to these policies?

For this particular initiative, you have the following options:

- You could exclude certain namespaces

- You could include all namespaces you want affected by the policy, creating exceptions through omission

- You could create an exemption for a particular resource (AKS cluster)

There are cases where any one of these is usable, but the easiest is probably excluding namespaces. This means that the policy will not apply to pods in namespaces of your choosing, and anyone deploying in others will then have to be compliant. If you look at the policy, Microsoft already has some namespaces here like kube-system and gatekeeper-system. Just add your namespace to the list, and you're done!
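The first two options boil down to a simple decision per namespace, which this sketch models. The function `policy_applies` and its arguments are my own naming, the `monitoring` and `shop` namespaces are hypothetical, and treating an empty inclusion list as "all namespaces" is an assumption on my part.

```python
def policy_applies(namespace, excluded=(), included=None):
    """Decide whether pods in a namespace are evaluated against the policy."""
    # Option 1: an exclusion always wins.
    if namespace in excluded:
        return False
    # Option 2: with an inclusion list, anything not listed is left alone.
    if included:
        return namespace in included
    # No inclusion list means every remaining namespace is covered.
    return True


excluded = ["kube-system", "gatekeeper-system", "monitoring"]

print(policy_applies("monitoring", excluded=excluded))
print(policy_applies("shop", excluded=excluded))
print(policy_applies("shop", included=["payments"]))
```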

For even more flexibility, like using labels to select pods you want excluded, you would need to create your own initiative or assign policies one by one.

Adding a namespace exclusion
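As a minimal sketch of what the change amounts to, assume the assignment exposes an array parameter named `excludedNamespaces` and that parameter values are wrapped in a `value` key. Both are assumptions you should check against your own assignment. The helper `add_excluded_namespace` and the `monitoring` namespace are made up for illustration.

```python
import json


def add_excluded_namespace(parameters, namespace):
    """Return a copy of the assignment parameters with one more excluded namespace."""
    current = list(parameters.get("excludedNamespaces", {}).get("value", []))
    if namespace not in current:
        current.append(namespace)
    # Copy the top level so the original parameters are left untouched.
    updated = dict(parameters)
    updated["excludedNamespaces"] = {"value": current}
    return updated


# Assumed starting point: the namespaces that are already on the list.
parameters = {"excludedNamespaces": {"value": ["kube-system", "gatekeeper-system"]}}
updated = add_excluded_namespace(parameters, "monitoring")

print(json.dumps(updated, indent=2))
```

The key detail is that you extend the existing list rather than replace it, so the system namespaces stay excluded.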
