On-demand debug logging with memoize_until

Ritikesh

Posted on June 26, 2020

Caching

Caching is a commonly used software optimization technique, employed in all forms of software development, be it web, mobile, or even desktop. A cache stores the results of an operation for later use. For example, your web browser uses a cache to load this blog faster should you visit again, by keeping static resources such as .js and .css files and images in your browser’s memory.

The most common reasons for using a shared cache store are:

- TTL (time to live): cached data expires automatically after a specified time interval.

- Consistency: the data is the same when read from different processes, and multiple app servers or background processes are the norm in today’s cloud-first architectures.

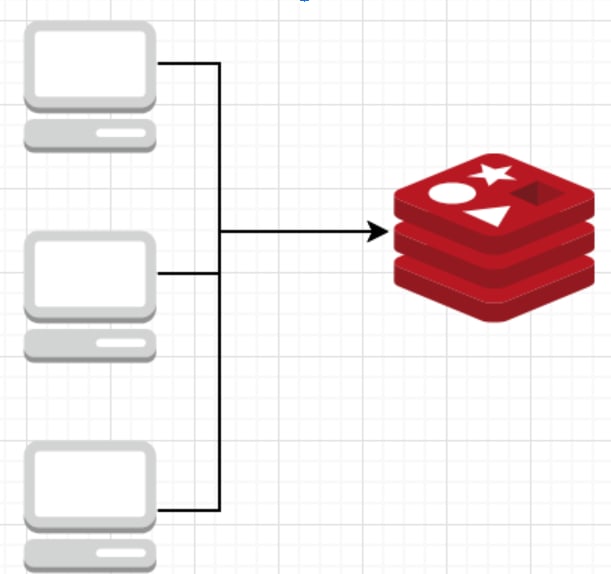

This keeps the cache fresh, since entries are frequently invalidated and refreshed because of the TTL, and consistent, since it is a single source of truth. Though caching is a powerful tool, the cache usually runs as a separate process on another server and is accessed over the network. Cache systems are typically fast, but network calls add to the overall response time. With multiple processes making simultaneous calls over the same network, even within a closed VPC, the cache needs to scale along with your components to keep up.

Memoization

Memoization is a specific type of caching pattern used as a software optimization technique. It involves remembering, in other words caching, the output of an expensive operation in memory on the machine that is executing the code. Memoization finds its root word in “memorandum”, which means “to be remembered.”
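For illustration, here is a minimal sketch of plain in-process memoization in Ruby: results are kept in a Hash keyed by the method’s arguments, so a repeated call skips the expensive work. The names `PriceCalculator`, `price_for`, and `expensive_lookup` are hypothetical, and the lookup merely stands in for something like a database or S3 call. Note that this basic form has neither expiry nor any consistency across processes.

```ruby
# Plain in-process memoization: results are cached per argument list in a
# Hash that lives only in this process's memory (no expiry, no sharing).
class PriceCalculator
  attr_reader :computations

  def initialize
    @memo = {}
    @computations = 0 # counts how often the expensive path actually runs
  end

  def price_for(sku, quantity)
    key = [sku, quantity]
    # key? (not ||=) so nil/false results would be remembered as well
    return @memo[key] if @memo.key?(key)

    @computations += 1
    @memo[key] = expensive_lookup(sku) * quantity
  end

  private

  # Hypothetical placeholder for a slow call such as a DB query or S3 read.
  def expensive_lookup(sku)
    sku.length * 10
  end
end

calc = PriceCalculator.new
calc.price_for("abc", 2)
calc.price_for("abc", 2) # answered from @memo; no second lookup
puts calc.computations   # prints 1
```

Checking `key?` instead of writing `@memo[key] ||= ...` means a `nil` or `false` result is cached rather than recomputed on every call.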

Memoization has the advantage of keeping the data in memory on your machine, thereby avoiding network latency. However, you will rarely find memoization, multi-process consistency, and expiration used together.

We wanted a library that could memoize expensive operations such as network calls to the database or to file stores like S3. The library should support expiring the memoized values from time to time and also stay consistent across our multi-process, stateless architecture. Looking up “memoize” on rubygems gave us multiple options, like memoize_ttl, persistent_memoize, memoize_method, etc. They fell short on consistency, on expiration, or on both. Some added the bottleneck of writing to disk, which can increase your IOPS and latency.

MemoizeUntil

Introducing memoize_until: a powerful yet simple memoization library that takes the expiration and multi-process consistency of caching systems and brings them to the memoization world.

MemoizeUntil memoizes (remembers) values until the beginning of a predetermined time interval, which can be a minute, an hour, a day, or even a week.
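To make “until the beginning of the next interval” concrete, the sketch below maps a timestamp to a “moment” identifying its minute, hour, day, or week. The helper `moment_for` and the interval symbols `:min`, `:hour`, `:day`, and `:week` are assumptions for illustration, not the gem’s API, and the week boundary here assumes ISO weeks starting on Monday. Two timestamps in the same interval share a moment; once a boundary passes, the moment changes and anything memoized under the old one is stale.

```ruby
require "date"

# Hypothetical helper (not the gem's API): maps a Time to the "moment" that
# identifies the interval it falls in. Calls inside the same interval get
# equal moments; after a boundary the moment changes, so anything memoized
# under the previous moment is stale.
def moment_for(interval, time = Time.now)
  case interval
  when :min  then time.strftime("%Y-%m-%d %H:%M")
  when :hour then time.strftime("%Y-%m-%d %H")
  when :day  then time.to_date
  when :week
    date = time.to_date
    [date.cwyear, date.cweek] # assumes ISO weeks, which start on Monday
  else
    raise ArgumentError, "unsupported interval: #{interval.inspect}"
  end
end

t = Time.new(2020, 6, 26, 10, 15, 30)
puts moment_for(:hour, t)                              # prints 2020-06-26 10
puts moment_for(:day, t) == moment_for(:day, t + 3600) # prints true
```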

To begin with, install the gem with `gem install memoize_until`, or simply add `gem 'memoize_until'` to your Gemfile.

The public API defines a method for each time interval supported by the library. In a Rails environment, MemoizeUntil looks for a YML file at `config/memoize_until.yml` and initialises itself with the purposes defined there. This file becomes a single place to track all your predefined memoization keys (also referred to as “purposes”) for each interval. Runtime purposes are also supported and can be registered through the `add_to` API.

The store class is responsible for the core memoization logic. During initialization, the MemoizeUntil class creates and maintains a factory constant holding one store object per interval. Each store is initialized with a nested hash that has each purpose as a unique first-level key. Upon invocation, the public method named after the interval looks up that interval’s store in the factory constant and calls fetch on it. The fetch method computes the current moment (for the day interval, the moment is simply today’s date) and uses it as a subkey in the nested hash. If a value already exists for the purpose at the current moment, that value is returned. Otherwise, the store clears the previously memoized data to avoid memory bloat, calls the block passed to the method, and stores the result under the current moment. MemoizeUntil also handles nils: a nil result is memoized like any other value.
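The fetch flow described above can be sketched as follows, assuming one store object per interval whose moment is computed by a lambda. This is a sketch, not the gem’s source: `IntervalStore`, its `add` method, and the injectable `clock` are hypothetical names, and in this version a new moment clears only the entries of the purpose being fetched.

```ruby
require "date"

# Minimal sketch of a per-interval store (not the gem's actual source).
# Layout: { purpose => { moment => value } }.
class IntervalStore
  # moment_fn maps a Time to the current moment, e.g. ->(t) { t.to_date } for
  # a daily store. clock is injectable so behaviour can be tested.
  def initialize(purposes, moment_fn, clock = -> { Time.now })
    @moment_fn = moment_fn
    @clock = clock
    @data = {}
    purposes.each { |purpose| @data[purpose] = {} }
  end

  # Hypothetical analogue of registering a runtime purpose.
  def add(purpose)
    @data[purpose] ||= {}
  end

  def fetch(purpose)
    entries = @data.fetch(purpose) # KeyError for an unregistered purpose
    moment = @moment_fn.call(@clock.call)

    # key? instead of a truthiness check, so nil results are memoized too.
    return entries[moment] if entries.key?(moment)

    entries.clear # a new moment has begun: drop older values
    entries[moment] = yield
  end
end

# Usage: a daily store whose clock we control.
now = Time.new(2020, 6, 26, 9, 0, 0)
store = IntervalStore.new([:spam_threshold], ->(t) { t.to_date }, -> { now })
store.fetch(:spam_threshold) { 50 } # block runs, returns 50
store.fetch(:spam_threshold) { 99 } # same day: returns 50, block skipped
now = Time.new(2020, 6, 27, 0, 0, 0)
store.fetch(:spam_threshold) { 75 } # new day: block runs, returns 75
```

Injecting the clock keeps the sketch testable without waiting for a real day boundary; in production the default `Time.now` would be used.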

Automatically refetching the data at the beginning of each pre-specified interval keeps all processes consistent with one another.

How we use it

We built MemoizeUntil as a general-purpose library and use it as an optimisation pattern across our products and platform services. The most common applications involve memoizing configuration such as spam thresholds and API limits for services. There are also more specific use cases, some of which we will cover in future blog posts.

One such use case is the need to switch to debug logging on the fly. We default to info-level logging in production to save on log sizes and on the costs of sending those logs to third-party and in-house services for debugging and metrics. As with all software, some issues reported by our customers are reproducible only in their environments and accounts. Debugging such issues often requires debug logs, which are unavailable because of the default “info” log level in our production app. Typically, this would require one production deployment to change the log level and another to revert it.

To avoid this friction with deployment dependencies, we wrote middleware for both our app and background workers that checks for a Redis key and changes the log level if the key is set. Sample app middleware is as follows:

To avoid a cache call on every web request, we wanted to memoize this setting in local memory while still checking the cache store frequently for updates. This seemed like a perfect use case for MemoizeUntil: the cached data needed refreshing, but not instantly.
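A minimal sketch of such a middleware, written as a Rack-style class (any object responding to `call(env)`), is shown below. It rests on several assumptions: the flag lives under a hypothetical `debug_logging` key, `cache` is any client responding to `get` (a client from the redis gem does), and `MinuteMemo` is a simplified, hypothetical stand-in for one of MemoizeUntil’s per-interval methods so the example runs without the gem. The memo is not thread-safe as written.

```ruby
require "logger"

# Hypothetical stand-in for MemoizeUntil: keeps one value per key until the
# current minute changes, then re-runs the block.
class MinuteMemo
  def initialize(clock = -> { Time.now })
    @clock = clock
    @values = {} # key => [moment, value]
  end

  def fetch(key)
    moment = @clock.call.strftime("%Y-%m-%d %H:%M")
    entry = @values[key]
    return entry[1] if entry && entry[0] == moment

    value = yield
    @values[key] = [moment, value]
    value
  end
end

# Rack-style middleware sketch: switches the logger to DEBUG while a flag key
# is present in the cache store, and back to INFO once it is removed.
class DynamicLogLevel
  DEBUG_KEY = "debug_logging" # hypothetical key name

  def initialize(app, logger:, cache:, memo: MinuteMemo.new)
    @app = app
    @logger = logger
    @cache = cache
    @memo = memo
  end

  def call(env)
    # At most one cache lookup per minute per process; otherwise served
    # from local memory.
    debug_on = @memo.fetch(DEBUG_KEY) { !@cache.get(DEBUG_KEY).nil? }
    @logger.level = debug_on ? Logger::DEBUG : Logger::INFO
    @app.call(env)
  end
end
```

With a minute-level interval, setting or deleting the key takes effect within about a minute, while each process queries the cache store roughly once per minute instead of on every request.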

The README covers additional use cases, such as extending MemoizeUntil with runtime keys and values.

Not another cache store

MemoizeUntil is not a replacement for a cache store; it is merely an optimization technique that reduces network calls to your cache store or database through memoization while guaranteeing consistency. Since everything is stored in memory, the memory constraints of your application servers also need to be considered, although, thanks to the cloud, this is less of a concern than it once was. Also, unlike standard memoization libraries that memoize method calls for each unique set of parameters, we have taken a slightly different approach with purposes. These purposes can be application level (predefined purposes) or tenant level (runtime purposes).
