Famous Programming Errors That Everyone Should Learn From

Rino Kovačević

Posted on February 22, 2024

"He who makes no mistakes makes nothing” and "The best way to learn is from mistakes" - these are sayings that comfort us when we make a mistake. Programmers like to make things, and if they want to follow new trends, they have to learn constantly. Based on these sayings, fictional and made-up information, and my seven years of work as a programmer surrounded by other programmers, I came to the conclusion that programmers make a lot of mistakes. To detect or avoid those mistakes, we write automated tests, check each other’s code, use environment staging and preproduction, perform backups, work with QA engineers as well as use various tools that will indicate errors at the earliest possible stage.

Despite the precautions, a bug occasionally leaks into production. Then what? Quickly detect the cause of the error, fix it, and deploy the fix as soon as possible. The fewer users who feel the consequences of your mistake, the better. On the other hand, the bigger the error is and the longer it stays in production, the longer it will be discussed within the company.

However, some mistakes are too big or too interesting to be discussed only by those who experienced them. They will appear in the news, they will be talked about in newspapers, some will get their own Wikipedia page, and some will even be remembered 60 years later. In this article, we'll explore some of these notable mistakes, discover how they happened, and see what we can learn from them.

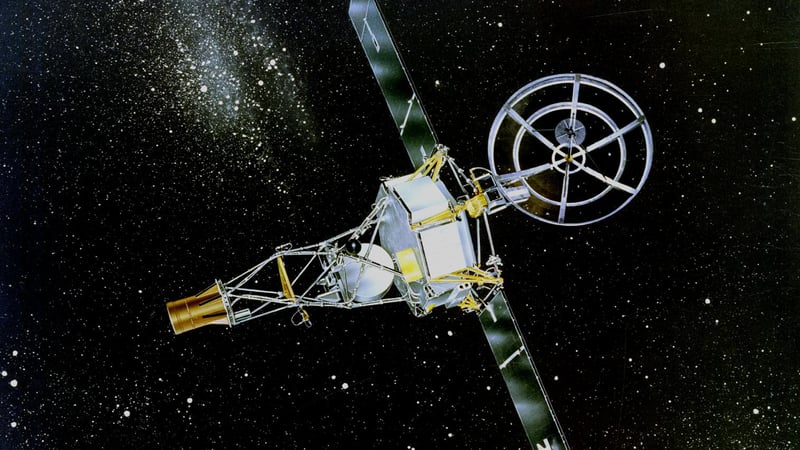

Mariner 1

Back in 1962, NASA launched its first interplanetary spacecraft, Mariner 1. It was supposed to pass by Venus and measure its temperature, magnetic fields, and other things that interest those smart individuals, but things didn't go according to plan. Shortly after launch, it began flying in the wrong direction, and they decided to initiate self-destruct protocols rather than risk potential damage to Earth.

This failure is usually attributed to a programming error. However, this was not just any bug but a single omitted dash in the code. What happened? In the code, there was a mathematical operation that contained the symbol "R" for the radius. It should have been the average radius, written as "R-bar" in the code and R̄ in physics. So it is not really just "one dash." But for the title of an article, "the most expensive dash in the world" sounds more dramatic than "the most expensive four characters in the world."
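To get a feel for why the difference between "R" and "R-bar" matters, here is a minimal sketch. It is not the Mariner 1 guidance code: the readings, the window size, and the function names are all made up for illustration, and it only assumes that "R-bar" stands for an averaged value, as described above.

```python
from statistics import mean

# Hypothetical noisy radius readings; the fourth one is a momentary spike.
readings = [100.0, 100.4, 99.7, 112.0, 100.1, 99.9]

def raw_value(samples):
    """'R': use the latest reading as-is, noise included."""
    return samples[-1]

def smoothed_value(samples, window=3):
    """'R-bar': average of the last `window` readings."""
    return mean(samples[-window:])

# Look at the moment the spike arrives.
up_to_spike = readings[:4]
raw = raw_value(up_to_spike)            # the full spike
smoothed = smoothed_value(up_to_spike)  # the spike, damped by its neighbours
```

A system steering on `raw` reacts to every momentary glitch, while one steering on `smoothed` reacts far less. Swapping one for the other changes the behaviour without producing any obvious error in the code itself.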

Given that this was a mistake in physics and mathematics, why is it called a programming error? The mistake was originally made in the calculations, and the programmers merely transcribed it. Ideally, it would have been discovered through testing or collaboration between programmers and mathematicians or physicists. Programmers often write code for applications outside their domain. You don't have to be a lawyer to make an application for lawyers, nor an architect to make a design tool, but it is important to learn the basics and, in cooperation with professionals in these domains, write some scenarios with which you will be able to test the code (and if possible cover it with automated tests).

What can we learn from the Mariner 1 mistake? First of all, it is necessary to write as many tests as possible for the code. On large projects where several domains are mixed, cooperation is very important. Also, it's always good to have a backup plan, maybe not necessarily a self-destruct system like NASA, but it's good to plan for situations when things go wrong.

Millennium (Y2K) bug

All those who remember their 2000 New Year's Eve also remember all the conversations about whether, after midnight, all computers would stop working, banks would lose all of their data, and airplanes would stop flying. After midnight, almost none of what had been predicted actually happened. There were a couple of reports of computer systems failing, but those were fixed quickly.

To give a little context to the younger generations who weren't there for the millennium bug and also to those who had better things to do in the late 90s and only overheard what happened:

In the 50s, computer memory was very expensive, about $1 per bit, meaning $8 per byte. That's why programs from that era, often written in languages like COBOL, stored only the last two digits of the year in memory. The program assumed that the first two digits were "19".
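A minimal sketch of that assumption, in Python rather than COBOL. The function names and the age calculation are hypothetical; the only thing taken from the description above is that the year is stored as two digits and "19" is assumed in front of it.

```python
def expand_two_digit_year(yy: int) -> int:
    """Expand a stored two-digit year by assuming the century is '19'."""
    return 1900 + yy

def age_in_years(birth_yy: int, current_yy: int) -> int:
    """Naive age calculation on two-digit years (hypothetical helper)."""
    return expand_two_digit_year(current_yy) - expand_two_digit_year(birth_yy)

# Born in 1965, evaluated in 1999: works as expected.
age_in_1999 = age_in_years(65, 99)   # 34

# Same person, evaluated in 2000, which is stored as "00":
# the year is read as 1900 and the age becomes negative.
age_in_2000 = age_in_years(65, 0)    # -65
```

Nothing crashes here, which is part of the problem: the program keeps running and quietly produces nonsense.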

By storing two digits instead of four, programmers saved a few bits, i.e., dollars, per stored date. They assumed that the price of computer memory would fall and that the shortcut could be removed later. The assumption was accurate; the error was that the adjustment was delayed for almost 50 years.

Bob Bemer was already discussing the need for an adjustment in the 60s, and he wrote articles about it in the 70s. Not many people listened to him, and serious work on the fix didn't begin until 1994. By then, systems with the bug had become so widespread, and so much new software had been built on top of the bug, that the amount of work needed to remedy the situation had become enormous. Some estimates say that over $300 billion was spent on that adjustment.

Did we learn anything from the Y2K mistake? We shall see in 2038, when the Y2K38 bug (or the Epochalypse) awaits. It affects systems that store Unix time as a signed 32-bit number of seconds since January 1, 1970. On January 19, 2038, that counter will run out of bits. Postponing things is not necessarily bad because there are situations when it can pay off. But whenever you put something off, make sure you get it done on time. When leaving "TODO" comments in the code, it is crucial to specify the individual responsible for resolving them and the deadline for completion.
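The 2038 problem can be reproduced in a few lines. This is only a sketch: Python integers do not overflow, so the helper `as_int32` (a name made up here) imitates how a signed 32-bit field would store the value.

```python
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit counter can hold

def as_int32(value: int) -> int:
    """Wrap an integer the way a signed 32-bit field would store it."""
    return struct.unpack("<i", struct.pack("<I", value & 0xFFFFFFFF))[0]

def to_utc(seconds: int) -> datetime:
    """Convert seconds since the Unix epoch to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)

last_good = to_utc(INT32_MAX)         # 2038-01-19 03:14:07 UTC
wrapped = as_int32(INT32_MAX + 1)     # one second later: -2147483648
after_wrap = to_utc(wrapped)          # 1901-12-13 20:45:52 UTC
```

One second after the last representable moment, the counter flips to its most negative value and the clock jumps back to 1901. The usual remedy is to store the time in a 64-bit field.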

World of Warcraft Corrupted Blood pandemic

World of Warcraft is a computer game that everyone has probably heard of. Those who haven't should only know that in 2005 it was very popular, and many players played it simultaneously online on the same server. One of the elements of the game is the joint conquest of dungeons, in which a group of players must work together to defeat all the enemies hiding inside. At the end of one such dungeon, added in September 2005, the final boss would cast a spell that slowly hurt anyone hit by it. That negative effect, called Corrupted Blood, would spread to all nearby players. It was never meant to leave the dungeon because players would be healed upon exiting. However, a bug in the code allowed the negative effect to spread to the rest of the virtual world through the players' pets.

The Corrupted Blood "virus" quickly began to spread throughout the World of Warcraft like a pandemic. Due to the density of players and NPCs (Non-player characters - characters that are not controlled by players, but are an essential part of all cities), the largest cities were infected first. The player characters would either be cured of that virus over time or die and then come back to life, while the NPCs would remain infected all the time and greatly contribute to the spread of the virus. Some players tried to use their magic to heal as many others as possible or would stand outside cities and warn weaker players that it was dangerous to enter, all to stop the spread. Others found it amusing to spread it as far as possible.

The programmers quickly found out about their mistake and began trying to fix the situation. They tried several patches, but none of them managed to completely remove that virus because it would always remain present at least somewhere and spread again. After almost a month of trying, they decided to reset the servers and get them back to where they were before that dungeon even came out and before it all started.

What can we learn from the WoW Corrupted Blood bug? It happened the way most programming mistakes do: nobody thought through all the possible edge cases. Spreading a virtual "virus" like a real virus among the players of a computer game turned out to be a very interesting concept. For example, some players years later asked the company to do it again (this time on purpose). Some programmers like to hear about other people's failures and think about the chaos that must have been happening to force them to roll back the servers to how they were a month ago. Interestingly, even epidemiologists were interested in this case, so they used this event as a pandemic simulation to research not only the spread of the virus but also human behavior in those specific situations.

The Heartbleed Bug

OpenSSL is a cryptographic software library, i.e., open source code that helps arrange a secure, encrypted connection between server and client following industry security standards. When developers want to follow those security standards, instead of reinventing the wheel and doing things from scratch, they just add that library to their code and use ready-made solutions.

It's very troublesome when your code, which is intended for security, has a security hole. OpenSSL had a problem with leaking information that should be protected due to a bug in the implementation of the TLS heartbeat extension, which is why it got its dramatic name: the Heartbleed Bug.
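The bug itself was a missing bounds check: a heartbeat request carries a payload together with a number saying how long that payload is, and the vulnerable code echoed back that many bytes without checking the number against what was actually sent. The sketch below only imitates the idea. The real code is written in C, and the memory layout, names, and "secret" here are invented for illustration.

```python
# Simulated process memory: the request payload sits next to unrelated secrets.
# (Hypothetical layout, purely for illustration.)
PAYLOAD = b"bird"
SECRET = b"|private-key=hunter2|"
MEMORY = PAYLOAD + SECRET

def heartbeat_vulnerable(claimed_length: int) -> bytes:
    """Echo back `claimed_length` bytes, trusting the sender's number."""
    return MEMORY[:claimed_length]

def heartbeat_fixed(claimed_length: int) -> bytes:
    """Ignore requests whose claimed length exceeds the real payload."""
    if claimed_length > len(PAYLOAD):
        return b""  # drop the malformed request instead of answering
    return MEMORY[:claimed_length]

honest = heartbeat_vulnerable(4)               # just the payload
leaked = heartbeat_vulnerable(len(MEMORY))     # payload plus neighbouring secret
blocked = heartbeat_fixed(len(MEMORY))         # nothing is returned
```

The fix is a single comparison, which is exactly why this kind of bug is easy to overlook in review.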

The problem was eventually fixed, but everyone using the affected versions of the OpenSSL library (released between 2012 and 2014) had to update to a newer version to get rid of the security risk. It is estimated that in 2014, as many as two-thirds of all active Internet sites used OpenSSL. The reason is that it is used by popular open-source web servers such as Apache and Nginx.

What can we learn from The Heartbleed Bug? Using open-source libraries is a daily routine for programmers today. Programming would be significantly slower if you had to solve every problem from scratch, ignoring other people's accessible solutions. Regardless, you should be cautious every time you add a new library to your system, and the mere fact that something is popular does not guarantee that it is safe.

GitLab backup incident

GitLab is a collaborative software development platform with around 30 million users.

In January 2017, engineers at GitLab noticed a spike in the database load. While investigating what was going on and trying to normalize the situation, they encountered several problems. Therefore, they decided to manually synchronize certain databases. In doing so, they noticed an error and stopped within seconds, but they had already lost 300 GB of user data.

This is where GitLab's backups should have proved useful. However, when the engineers started restoring from them, they noticed that their recovery procedures were not working well and that they did not have a fresh backup. In the end, they had to use a six-hour-old copy of the data that had been made for copying to the test environment. Losing six hours of data when you have 30 million users is not a minor failure, and discovering that your process for saving and restoring backups does not work only at the moment you need it certainly did not make anyone happy.

What can we learn from this mistake? As a programmer, you know that whenever some data is involved, you always have to have a backup. This situation teaches us that having a backup alone is not enough. The way you perform backups should be adapted to the system, data type, and users. In addition, it is very important to regularly test your backups as well as backup procedures.
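What "testing a backup" could look like in its simplest form is sketched below. This is not GitLab's procedure. It is a toy example with a made-up file name and a hypothetical helper `backup_and_verify`, which copies a file, restores the copy to a scratch location, and compares checksums.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()

def backup_and_verify(source: Path, backup_dir: Path) -> bool:
    """Back up `source`, restore it elsewhere, and check the restored copy."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup = backup_dir / (source.name + ".bak")
    shutil.copy2(source, backup)

    # The backup only counts if it can actually be restored and matches.
    with tempfile.TemporaryDirectory() as scratch:
        restored = Path(scratch) / source.name
        shutil.copy2(backup, restored)
        return sha256_of(restored) == sha256_of(source)

with tempfile.TemporaryDirectory() as workdir:
    data = Path(workdir) / "users.db"
    data.write_bytes(b"important user data")
    verified = backup_and_verify(data, Path(workdir) / "backups")
```

A real database needs a real restore drill (restore into a fresh instance, run queries against it, measure how long it takes), but the principle is the same: a backup that has never been restored is only a hope.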

What can we conclude from these programming errors?

A philosopher once said, "Those who do not learn from history are doomed to repeat it." We as programmers are lucky that the history we are interested in only begins in the 20th century, so there is not much to look back on. It's a double-edged sword because it is enough to infect hundreds of thousands of players with a magical virus in your game once, and you will have people still talking about it 18 years later.

Every time you read an article about how a company somehow messed up, I suggest you think about how you would react in that situation and conclude what you can learn from their mistakes. At Devōt, we like learning from other people's mistakes, so I'm proud to say that we haven't had to destroy a single $20 million spacecraft yet.
