The Big O

PC

Posted on July 31, 2022

So to kick off this series of technical blogs I'm going to start with what I'm currently learning right now, which is Data Structures and Algorithms! To start off I'm going to talk about the basics of what you need to know before you get into DSA which is Big O so lets get to it!

The Big O...

No I am not talking about the 1990's anime that's a cross between Batman and Gundam, It kinda is, but rather Big O Asymptotic Analysis Notation. It sounds really complicated and to some degree it can get complicated. However all Big O is, is a way to measure how well your code solves a problem. This is important because any engineer can code something to solve a problem but what matters most is how well the problem is solved.

To understand Big O we first need to understand what good code is and why. There are 2 Important aspects of good code.

Readability

Scalability

When it comes to Big O we mainly want to focus on the latter aspect while considering the first when the situation arises. Just like when you are baking a cake and using a recipe. Writing code to program a computer is the same way. There are right instructions, wrong instructions and some recipes are better than others. When things get bigger and bigger having instructions be as efficient as possible can save time and a company millions. Just like how you wouldn't want to preheat the oven after mixing in all the ingredients to bake a cake. But instead you would want to do it before you start mixing so that when your done mixing hopefully your oven is preheated and ready to go. Take this for example:

const everyone = ['dory', 'nemo','gill','squirt','darla','hank','clifford']

function findClifford(array) {

let t0 = performance.now();

for (let i = 0; i < array.length; i++){

if (array[i] === 'clifford') {

console.log('Found The Big Red Dog!');

}

}

let t1 = performance.now()

console.log(t1 - t0)

}

This is a simple program that prints out 'Found The Big Red Dog!' when we find him in the array. However the performance of this function, or algorithm, is determined differently by each computers CPU and they have different varying factors such as other programs running that may slow down the program. How do we solve this?

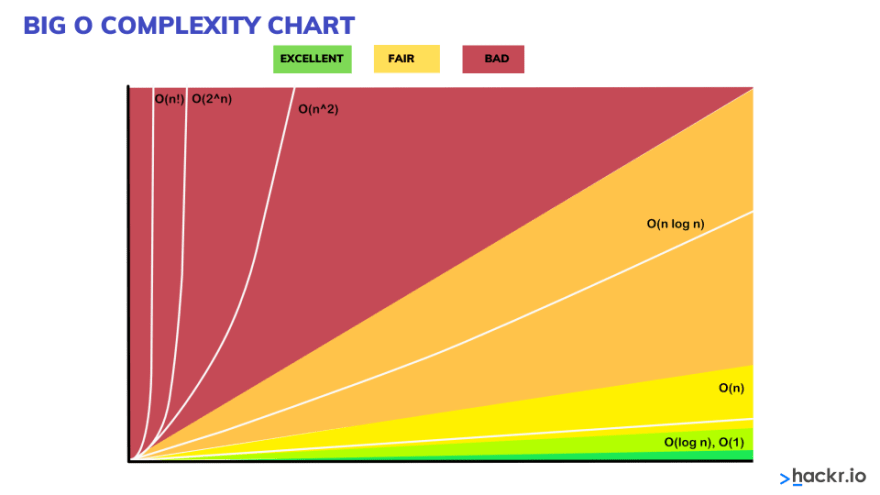

The Big O solves this issue by being the universal language we use as engineers to determine how long an algorithm takes to run. It tells us about how, as we grow bigger and bigger with our input, long an algorithm takes to run. This is irrespective of computer differences and can be described in the following graph:

O(1) Constant Time - This means that as the number of inputs increases the operations stays the same.

O(n) Linear Time - As inputs increase operations increases at the same rate. (for loops, while loops through n items)

O(n^2) Quadratic Time - every element in a collection of data needs to be compared to every other element. Two nested loops

O(n!) Factorial Time - As inputs increase operations increases by n factorial (you are adding a loop for every element)

Ideally we want our programs to be as close to O(1) constant time and as algorithmically efficient as possible. Although it might not always be possible we can get algorithms to tend towards that by varying methods. We will discuss these methods in the future including the Big O complexities that I haven't mentioned. To measure Big O we have 4 rules that help in simplifying it for general purposes.

1. Worst case.

We only care about the worst case scenario. E.g.,

const everyone = ['dory', 'bruce', 'marlin', 'nemo','gill','bloat','nigel','squirt','darla','hank','nemo']

function findNemo(array) {

let t0 = performance.now();

for (let i = 0; i < array.length; i++){

if (array[i] === 'nemo') {

console.log('Found Nemo!');

}

}

let t1 = performance.now()

console.log(t1 - t0)

}

Even though in the array everyone, nemo is in the 4th index. We assume that in the worst case it is in the last index.

2. Remove Constants

function printFirstItemThenFirstHalfThenSayHi100Times(items) {

console.log(items[0]);

let middle Index = Math.floor(items.length / 2);

let index = 0;

while (index < middleIndex) { // O(n/2)

console.log(items[index]);

index++;

}

for (let i = 0; i < 100; i++) { // O(100)

console.log('hi');

}

}

Even though the above is O(n/2 + 100). We don't care about the constants so it becomes O(n) because as it scales and approaches "Infinity". We don't care how steep the line is just how the line is moving.

3. Different inputs should have different variables

function compressBoxesTwice(boxes, boxes2) {

boxes.forEach(function(boxes) { // O(n)

console.log(boxes);

});

boxes2.forEach(function(boxes) { // O(n)

console.log(boxes);

})

}

This rule states that since there are two different items the Big O is not O(n + n) but rather O(a + b) because the two inputs could be of varying sizes.

4. Drop Non Dominants

function printAllNumbersThenAllPairSums(numbers) {

console.log('these are the numbers:');

numbers.forEach(function(number) { // O(n)

console.log(number);

});

console.log('and these are their sums:');

numbers.forEach(function(firstNumber) { // O(n)

numbers.forEach(function(secondNumber) { // O(n)

console.log(firstNumber + secondNumber);

});

});

}

printAllNumbersThenAllPairSums([1,2,3,4,5])

The example above is O(n + n^2) since loops right next to each other are added and loops nested in each other are multiplied. However rule number 4 states that we only want to keep the most dominant term. So we can actually simplify this example's Big O to be O(n^2) because as n scales the linear operations will become insignificant compared to the quadratic operations.

What does it all mean?

We usually only think in the here and now when it comes to coding and we are usually only handling small amounts of data. But what happens when we get millions and millions of users on our site? If our code isn't scalable our code will break. This is why big O is so important because it allows us to think about how our code scales as inputs and operations increase on a larger scale. The 3 pillars of programming are:

Readability

Memory (Space Complexity)

Speed (Time Complexity)

Like what makes a good programmer, scalability can be broken into two aspects such as memory and speed. So far we've only discussed Time Complexity, speed, but another important aspect is Space Complexity, memory, which is how much space an algorithm or data structure takes up. This is just as important because computers have a limited amount of memory. The things that cause Space Complexity are these:

Variables

Allocations

Data Structures

Function calls

Although we want less time and less memory there is a tradeoff between having less speed or less memory, one usually increases the other.

And that's a wrap FOLKS! That's pretty much all I have to talk about for now when it comes to Big O. If there is anything that I hope you take away from this article, its that Big O is the language Software Engineers use to determine what's good code and bad code. Last but not least, If you remember to write scalable code maybe one day you too can program something as big as...

The Big O.

Posted on July 31, 2022

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.