Getting started with Google's Multi-modal "Gemini Pro Vision" LLM with Javascript for Beginners

Oyemade Oyemaja

Posted on December 29, 2023

Building AI-powered Applications w/ Javascript using Langchain JS for Beginners

Getting started w/ Google's Gemini Pro LLM using Langchain JS

In the previous blog posts ⬆️⬆️⬆️, we discussed building AI-powered apps with different LLMs like OpenAI's GPT and Google's Gemini Pro. One thing they have in common is that they are text-based models, which means they are designed to understand, interpret, and generate text.

How, then, do we create AI-powered apps that need to interact with images? This is where multi-modal LLMs come in: they are LLMs that can understand, interpret, and generate not just text but also other modes of data such as images, audio, and video.

Google also released a multi-modal version of its new Gemini Pro model, called Gemini Pro Vision, that enables the LLM to interact with images.

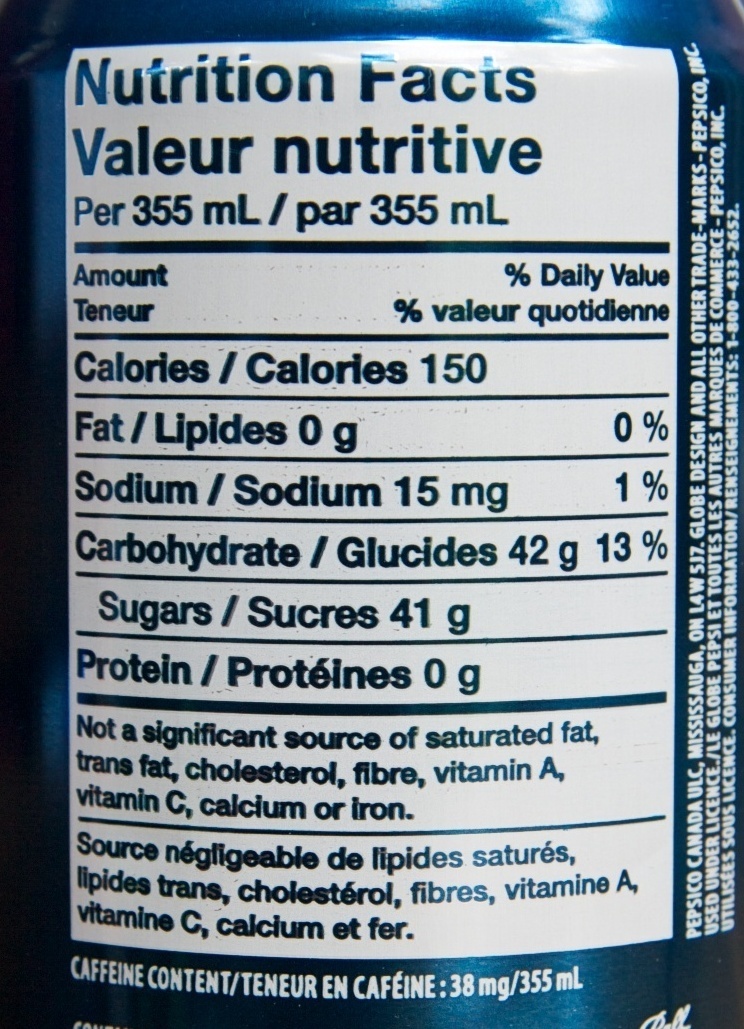

In this blog post, we will use the newly released Gemini Pro Vision LLM to create a nutrition-facts explainer app that takes an image of the nutrition facts label on a food product and returns some insights on it.

Some requirements before we begin: you need Node.js installed on your machine (if you do not have it installed, you can download it here), and you need an API key for the Gemini LLM. Here is a guide for creating an API key for Gemini.

To get started:

1. Navigate to your preferred folder, open it in your code editor, and run `npm init -y` in the terminal. A `package.json` file will be generated in that folder.
2. Edit the `package.json` file by adding a `type` property with the value `module` to the JSON object: `"type": "module",`. This indicates that the files in the project should be treated as ECMAScript modules (ESM) by default.
3. Run `npm i @google/generative-ai dotenv` in the terminal to install the required dependencies.
4. Create two (2) new files in the current folder: one called `index.js`, which will house all our code, and another called `.env` to store our API key. We can also copy the image files to be analysed into the folder.
5. In our `.env` file, we need to add our API key. You can do this by typing `GOOGLE_API_KEY=YOUR_GOOGLE_API_KEY_GOES_HERE` exactly as shown, replacing `YOUR_GOOGLE_API_KEY_GOES_HERE` with your actual Gemini API key.
6. In our `index.js` file, we will import the following dependencies:

// This imports the new Gemini LLM
import { GoogleGenerativeAI } from "@google/generative-ai";
// This imports fs from node.js, which we
// will use to read the image file
import * as fs from "fs";
// This helps connect to our .env file
import * as dotenv from "dotenv";
dotenv.config();

const template = `Task: Analyze images of nutritional facts from various products, which feature diverse designs and include detailed nutritional information typically found on food packaging.

Step 1: Image Recognition and Verification

Confirm if each image is a nutritional facts label.

If the image is not a nutritional facts label, return: "Image not recognized as a nutritional facts label."

Step 2: Data Extraction and Nutritional Analysis

Extract and list the key nutritional contents from the label, such as calories, fats, carbohydrates, proteins, vitamins, minerals and other nutritional details.

Step 3: Reporting in a Strict Two-Sentence Format

First Sentence (Nutritional Content Listing): Clearly list the main nutritional contents. Example: "This product contains 200 calories, 10g of fat, 5g of sugar, and 10g of protein per serving."

Second Sentence (Diet-Related Insight): Provide a brief, diet-related insight based on the nutritional content. Example: "Its high protein and low sugar content make it a suitable option for muscle-building diets and those managing blood sugar levels."

Note: The response must strictly adhere to the two-sentence format, focusing first on listing the nutritional contents, followed by a concise dietary insight.

`;

// Above we created a template variable that
// contains our detailed instructions for the LLM

// Below we create a new instance of the GoogleGenerativeAI
// class and pass in our API key as an argument
const genAI = new GoogleGenerativeAI(process.env.GOOGLE_API_KEY);

// This function takes a path to a file and a mime type
// and returns a generative part (specific object structure) that
// can be passed to the generative model
function fileToGenerativePart(path, mimeType) {
  return {
    inlineData: {
      // readFileSync already returns a Buffer, so we can
      // base64-encode it directly
      data: fs.readFileSync(path).toString("base64"),
      mimeType,
    },
  };
}

// We create the model variable by calling the getGenerativeModel method
// on our genAI instance and passing in an object with the model name
// as an argument. Since we want to interact with images, we use the
// gemini-pro-vision model.
const model = genAI.getGenerativeModel({ model: "gemini-pro-vision" });

// We create our image variable by calling the fileToGenerativePart function
// and passing in the path to our image file and the mime type of the image.
// The part is wrapped in an array so it can be spread into the request below.
const image = [fileToGenerativePart("./pepsi.jpeg", "image/jpeg")];

// We call the generateContent method on our model and pass in our template
// and image variables. This returns a promise, which we await and assign
// to the result variable. We then await the response property of the result
// variable and assign it to the response variable. Finally, we call the text
// method of the response variable (it is synchronous, so no await is needed)
// and assign its return value to the text variable.
const result = await model.generateContent([template, ...image]);
const response = await result.response;
const text = response.text();

// Log the text variable to the console.
console.log(text);
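The code above hard-codes `"image/jpeg"` as the second argument to `fileToGenerativePart`. If you copy images with different extensions into the folder, one option is to derive the MIME type from the file name. The helper below is only a sketch: `mimeTypeFromPath` and the `MIME_TYPES` table are hypothetical names introduced here (they are not part of the SDK), and the table lists a few common image types rather than everything the model may accept.

```javascript
// Hypothetical helper (not part of @google/generative-ai): maps a file
// extension to a MIME type so it does not have to be typed by hand.
const MIME_TYPES = {
  jpg: "image/jpeg",
  jpeg: "image/jpeg",
  png: "image/png",
  webp: "image/webp",
};

function mimeTypeFromPath(filePath) {
  // Take the text after the last dot, ignoring case.
  const dot = filePath.lastIndexOf(".");
  const ext = dot === -1 ? "" : filePath.slice(dot + 1).toLowerCase();
  const mimeType = MIME_TYPES[ext];
  if (!mimeType) {
    throw new Error(`Unsupported image extension in "${filePath}"`);
  }
  return mimeType;
}
```

With this in place, the image part could be built as `fileToGenerativePart("./pepsi.jpeg", mimeTypeFromPath("./pepsi.jpeg"))`, and an unexpected file type fails early instead of being sent with the wrong label.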

Now we run `node index.js` in our terminal. We should then see a result similar to this in the console:

This product contains 150 calories, 0g of fat, 42g of carbohydrates, 41g of sugar, and 0g of protein per 355ml serving.

Given the high sugar content, moderate consumption is advisable for those watching their sugar intake.
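Because the template asks for either a fixed "not recognized" sentence or exactly two sentences, the reply can be turned into a small object before the app uses it. The following is a minimal sketch under that assumption; `parseNutritionReply` and `NOT_A_LABEL` are hypothetical names, and the sentence split is a simple heuristic that may need adjusting if the model deviates from the requested format.

```javascript
// The sentinel sentence is copied from Step 1 of the template.
const NOT_A_LABEL = "Image not recognized as a nutritional facts label.";

// Hypothetical helper: turns the raw model text into a small object.
function parseNutritionReply(text) {
  const trimmed = text.trim();
  if (trimmed.includes(NOT_A_LABEL)) {
    return { recognized: false, listing: null, insight: null };
  }
  // Split after a period that is followed by whitespace. Decimals such
  // as "1.5g" stay intact because no whitespace follows their dot.
  const sentences = trimmed.split(/(?<=\.)\s+/);
  return {
    recognized: true,
    listing: sentences[0] ?? null,
    // Anything after the first sentence is treated as the insight.
    insight: sentences.slice(1).join(" ") || null,
  };
}
```

In `index.js`, this could be called as `parseNutritionReply(text)` in place of logging the raw string, so the listing and the dietary insight can be displayed separately.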

Keep in mind that each time you run the model, the results might vary slightly. However, the overall meaning and context should remain consistent.
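If the variation between runs is a problem, one thing to try is lowering the sampling temperature through the `generationConfig` option that `getGenerativeModel` accepts alongside the model name. This is a sketch, not a guarantee: the value `0` is my assumption of a reasonable starting point, and the model can still word its answer differently from run to run.

```javascript
// Assumption: a lower temperature makes the wording more repeatable,
// but it does not guarantee identical output between runs.
const modelParams = {
  model: "gemini-pro-vision",
  generationConfig: {
    temperature: 0,
  },
};

// In index.js this object would replace the argument passed earlier:
// const model = genAI.getGenerativeModel(modelParams);
```

Keeping the parameters in a separate object makes it easy to experiment with the setting without touching the rest of the script.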

Here is a link to the GitHub repo

Don't hesitate to ask any questions you have in the comments. I'll make sure to respond as quickly as possible.
