How I built an automated vulnerability scanner SECaaS

Ninjeneer

Posted on June 6, 2023

A few days ago, I released the Cyber Eye web platform, a SECaaS (Security as a Service) that lets any user, even one without technical knowledge, run basic and advanced security scans on their infrastructure.

Each scan runs from the outside, simulating what an attacker could discover.

Today, we'll dive into the technical side of this platform, and I'll explain how I built it. I'm open to any suggestions for improvement :)

Server architecture

For this project, I made scalability the priority. Since users choose how often their scans run (via a user-friendly cron selector), I expect heavy load spikes at midnight (12 AM), when many schedules fire at once.
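To illustrate why a cron selector concentrates load at midnight, here is a small, hypothetical sketch that counts how many schedules fire at each minute of a day. It only understands the minute and hour fields of a cron expression (plain numbers, `*`, `*/n` and comma lists), ignores the day and month fields, and the example schedules are made up.

```python
from collections import Counter


def field_matches(field: str, value: int) -> bool:
    """Return True if one cron field (e.g. "*", "0", "*/6", "1,2,3") matches value.

    Only a small subset of cron syntax is supported: no ranges, no names.
    """
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Step syntax: matches every value divisible by n.
            if value % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def scans_per_minute(schedules: list[str]) -> Counter:
    """Count how many schedules fire at each (hour, minute) of a single day.

    Only the first two fields (minute, hour) of each expression are evaluated.
    """
    load: Counter = Counter()
    for expr in schedules:
        minute, hour, *_ = expr.split()
        for h in range(24):
            for m in range(60):
                if field_matches(minute, m) and field_matches(hour, h):
                    load[(h, m)] += 1
    return load


if __name__ == "__main__":
    schedules = ["0 0 * * *", "0 0 * * *", "0 0 * * 1", "30 6 * * *", "0 */6 * * *"]
    print(scans_per_minute(schedules).most_common(1))  # [((0, 0), 4)]
```

Daily, weekly and "every N hours" schedules all share midnight as a firing time, which is why that minute becomes the peak.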

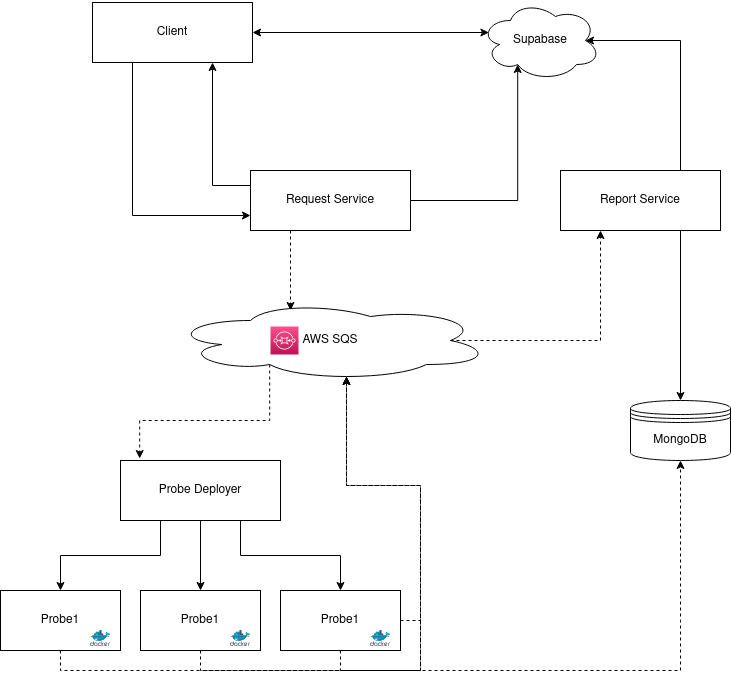

So I split the architecture as follows:

- A service managing user requests (validation, credit checking, and publishing the request to the other services)

- An AWS SQS queue to which the requests are sent

- A service listening to that queue, responsible for deploying the security probes (each probe being containerized with Docker)

- An AWS SQS queue to which the probes send result notifications

- A MongoDB Atlas database storing the raw results of the probes

- A service that parses the probe results and builds the final report delivered to the user

- A real-time database provided by Supabase to handle basic data storage and keep the UI responsive

- Finally, 3 additional services handling automated jobs, platform stats, and billing (using Stripe)

The Request Service

This service is the entry point of the platform. It handles user requests, makes sure users have enough credits to run their scan, and publishes the request to the AWS SQS queue.
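A minimal sketch of that validate, check, publish flow, with a `queue.Queue` standing in for SQS so it runs without AWS. The credit prices, the `ScanRequest` fields and the `InsufficientCredits` exception are hypothetical; with boto3, the publish step would be a call like `sqs.send_message(QueueUrl=..., MessageBody=...)`. The sketch only checks the balance and does not debit it.

```python
import json
import queue
from dataclasses import asdict, dataclass

# Hypothetical credit price of each probe.
PROBE_COST = {"nmap": 1, "nikto": 2, "sqlmap": 3}


class InsufficientCredits(Exception):
    """Raised when a user cannot afford the requested scan."""


@dataclass
class ScanRequest:
    user_id: str
    target: str
    probes: list[str]


def handle_request(req: ScanRequest, credits: dict[str, int], out: queue.Queue) -> int:
    """Validate a request, check the user's credits, then publish it. Returns the cost."""
    # 1. Validation: a target and only known probes.
    if not req.target or not req.probes:
        raise ValueError("a target and at least one probe are required")
    unknown = [p for p in req.probes if p not in PROBE_COST]
    if unknown:
        raise ValueError(f"unknown probes: {unknown}")

    # 2. Credit check (balance is read, not modified).
    cost = sum(PROBE_COST[p] for p in req.probes)
    balance = credits.get(req.user_id, 0)
    if balance < cost:
        raise InsufficientCredits(f"scan needs {cost} credits, user has {balance}")

    # 3. Publish a JSON message; SQS message bodies are strings, so we send one here too.
    out.put(json.dumps(asdict(req)))
    return cost
```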

The Report Service

This service is the final piece of the workflow, triggered when the last probe of a scan finishes. It pulls all the probe results from MongoDB and parses each one with its dedicated parser. Once every result is parsed, they are aggregated into a final report that embeds some additional metadata. This report is then served to the user.
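Here is a hedged sketch of the "one parser per probe, then aggregate" idea. The raw-document shape, the parser functions and the report fields are hypothetical, and a plain list replaces the MongoDB query so the example runs on its own.

```python
from datetime import datetime, timezone
from typing import Callable

# A parser turns one probe's raw output into a list of normalized findings.
Parser = Callable[[dict], list[dict]]


def parse_nmap(raw: dict) -> list[dict]:
    return [{"type": "open_port", "port": p} for p in raw.get("open_ports", [])]


def parse_nikto(raw: dict) -> list[dict]:
    return [{"type": "web_issue", "detail": d} for d in raw.get("findings", [])]


# Registry mapping each probe name to its dedicated parser.
PARSERS: dict[str, Parser] = {"nmap": parse_nmap, "nikto": parse_nikto}


def is_scan_complete(expected: list[str], finished: set[str]) -> bool:
    """The report is only built once every expected probe has reported back."""
    return set(expected) <= finished


def build_report(scan_id: str, raw_results: list[dict]) -> dict:
    """Parse every raw probe document and aggregate the findings into one report."""
    findings = []
    for doc in raw_results:
        parser = PARSERS[doc["probe"]]
        for finding in parser(doc["output"]):
            # Tag each finding with the probe that produced it.
            findings.append({"probe": doc["probe"], **finding})
    return {
        "scan_id": scan_id,
        "generated_at": datetime.now(timezone.utc).isoformat(),
        "probe_count": len(raw_results),
        "finding_count": len(findings),
        "findings": findings,
    }
```

Keeping parsers in a registry means adding a probe only requires registering one new function.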

The Deployer Service

This one gave me headaches. Initially, I wanted an AWS Lambda function, triggered by the AWS SQS queue, to deploy containers on demand. However, I struggled a lot with ECS because it encourages predefined configuration files, while I wanted my containers to be deployed on the fly without any fixed structure.

At this point, my options were to dig deeper into AWS services hell or to build my own very simple ECS. I chose the latter.

This homemade ECS is written in Python and uses the boto3 module to listen to the AWS SQS queue. For each message received, the request is parsed, the relevant container is identified, and its configuration is set. The container is then placed in a deployment waiting queue (capped at 5 parallel runs, an arbitrarily chosen limit). When a deployment slot frees up, the container runs on the host machine and is killed once its run ends.

This choice also significantly reduces the costs I would otherwise have paid to AWS.
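The waiting queue with a fixed number of slots maps naturally onto a thread pool. Below is a minimal sketch, not the actual deployer: it assumes a JSON message body with `target` and `probes` fields, and the image names and `TARGET` environment variable are hypothetical. `run_container` only sleeps instead of invoking Docker. In a real deployer, messages would come from a boto3 long-polling loop (`sqs.receive_message(QueueUrl=..., WaitTimeSeconds=20)`) and be acknowledged with `sqs.delete_message(QueueUrl=..., ReceiptHandle=...)`.

```python
import json
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL_RUNS = 5  # the arbitrary cap on simultaneous containers

# Hypothetical mapping from probe name to Docker image.
PROBE_IMAGES = {"nmap": "example/nmap-probe", "nikto": "example/nikto-probe"}


def build_docker_command(probe: str, target: str) -> list[str]:
    """Build the `docker run` command for one probe.

    `--rm` makes Docker remove the container once it exits.
    """
    return ["docker", "run", "--rm", "-e", f"TARGET={target}", PROBE_IMAGES[probe]]


def run_container(cmd: list[str]) -> None:
    # Placeholder: a real deployer would run the command on the host,
    # e.g. with subprocess.run(cmd), and enforce its own time limit.
    time.sleep(0.05)


class Deployer:
    def __init__(self, runner=run_container, max_parallel: int = MAX_PARALLEL_RUNS):
        self.runner = runner
        # Jobs beyond max_workers wait in the executor's internal queue.
        self.pool = ThreadPoolExecutor(max_workers=max_parallel)
        self._lock = threading.Lock()
        self.running = 0
        self.peak = 0  # highest number of simultaneous runs observed

    def _run(self, cmd: list[str]) -> None:
        with self._lock:
            self.running += 1
            self.peak = max(self.peak, self.running)
        try:
            self.runner(cmd)
        finally:
            with self._lock:
                self.running -= 1

    def handle_message(self, body: str) -> list:
        """Parse one queue message and schedule one container per requested probe."""
        request = json.loads(body)
        return [
            self.pool.submit(self._run, build_docker_command(p, request["target"]))
            for p in request["probes"]
        ]
```

A thread pool is enough here because each job mostly waits on an external process, so the GIL is not a bottleneck.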

Probes

Each probe is wrapped in a Docker container so it can run almost anywhere, stay decoupled from the rest of the codebase, and be written in any language.

I don't write my own security tools; instead, I build wrappers around well-known tools such as nmap, nikto, and sqlmap to provide trustworthy results.
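As an illustration of the wrapper idea (not the actual Cyber Eye probe code), here is a minimal sketch of an nmap wrapper. It assumes nmap is run with `-sV -oX -`, which writes the XML report to stdout, and keeps only open ports; the shape of the returned dictionaries is hypothetical.

```python
import subprocess
import xml.etree.ElementTree as ET


def nmap_command(target: str) -> list[str]:
    # -sV: detect service versions; -oX -: write the XML report to stdout.
    return ["nmap", "-sV", "-oX", "-", target]


def run_nmap(target: str) -> str:
    """Run nmap on the host (requires nmap to be installed) and return its XML output."""
    return subprocess.run(nmap_command(target), capture_output=True, text=True, check=True).stdout


def parse_nmap_xml(xml_text: str) -> list[dict]:
    """Extract the open ports of every scanned host from nmap's XML output."""
    root = ET.fromstring(xml_text)
    results = []
    for host in root.iter("host"):
        addr_el = host.find("address")
        addr = addr_el.get("addr") if addr_el is not None else None
        for port in host.iter("port"):
            state = port.find("state")
            if state is None or state.get("state") != "open":
                continue  # skip closed and filtered ports
            service = port.find("service")
            results.append({
                "host": addr,
                "protocol": port.get("protocol"),
                "port": int(port.get("portid")),
                "service": service.get("name") if service is not None else None,
            })
    return results
```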

Frontend architecture

Brace yourself, this is gonna be a long read

I am using React and Tailwindcss :)

Git methodology & CI/CD

To easily manage my models and shared code, I went with a monorepo for the whole server side.

I set up GitHub Actions workflows to run my tests on each push and pull request to my dev and production branches. My Docker images are then built and stored on Docker Hub.

On the CD side, I am working on setting up Watchtower on the production server to automatically pull new images and restart the containers.

In conclusion, this project was an exciting playground where I could apply everything I know and learn a huge amount of new things (software architecture, DevOps practices, the rigor of production...). I will definitely keep working on it to turn it into a mature product with more and more probes, and I will post updates on this blog.

Thank you for reading! I'd be happy to hear your thoughts in the comments.
